Raja Giryes

School of Electrical Engineering, Tel Aviv University, Tel Aviv, Israel

A Diffusion Model Predicts 3D Shapes from 2D Microscopy Images

Aug 31, 2022

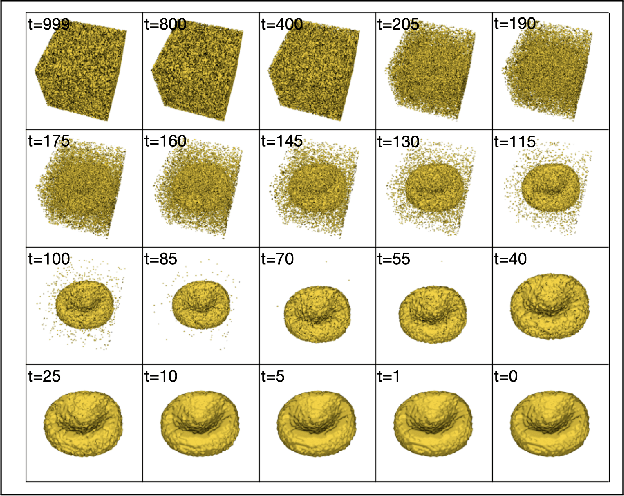

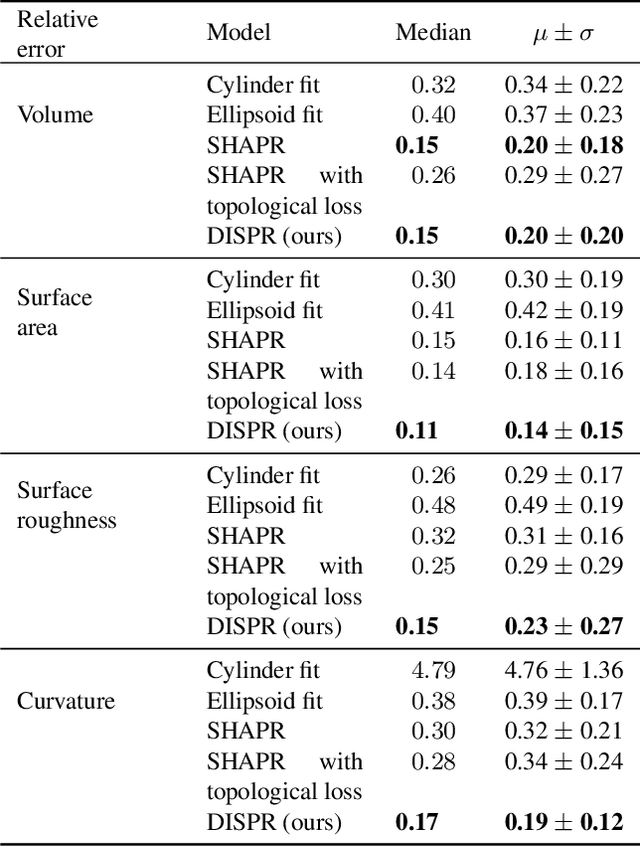

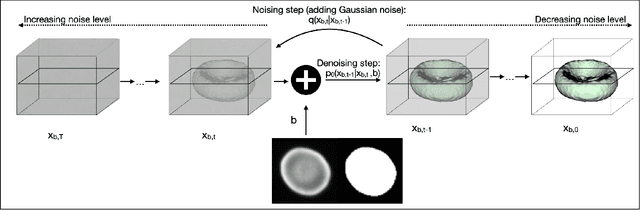

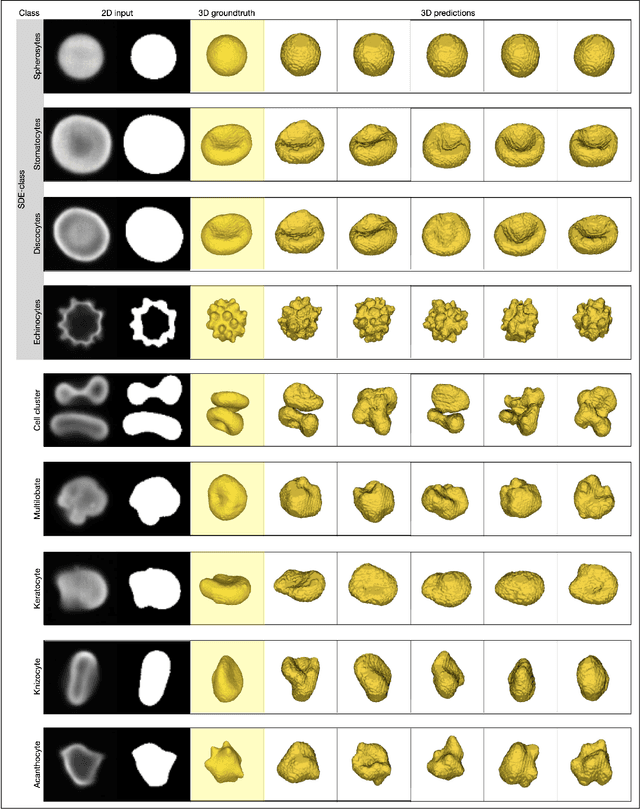

Abstract: Diffusion models are a class of generative models that outperform other generative models at creating realistic images when trained on natural image datasets. We introduce DISPR, a diffusion-based model for solving the inverse problem of three-dimensional (3D) cell shape prediction from two-dimensional (2D) single cell microscopy images. Using the 2D microscopy image as a prior, DISPR is conditioned to predict realistic 3D shape reconstructions. To showcase the applicability of DISPR as a data augmentation tool in a feature-based single cell classification task, we extract morphological features from the cells grouped into six highly imbalanced classes. Adding features from predictions of DISPR to the three minority classes improved the macro F1 score from $F1_\text{macro} = 55.2 \pm 4.6\%$ to $F1_\text{macro} = 72.2 \pm 4.9\%$. As our method is the first to employ a diffusion-based model in this context, we demonstrate that diffusion models can be applied to inverse problems in 3D, and that they learn to reconstruct 3D shapes with realistic morphological features from 2D microscopy images.
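The macro F1 score reported above is the unweighted mean of the per-class F1 scores, so minority classes count as much as majority ones. The following is a minimal sketch of that metric only, not of DISPR or the authors' evaluation code; the function name `macro_f1`, the toy labels, and the convention of scoring an empty class as 0 are assumptions made for illustration.

```python
import numpy as np

def macro_f1(y_true, y_pred, num_classes):
    """Unweighted mean of per-class F1 scores."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    scores = []
    for c in range(num_classes):
        tp = np.sum((y_pred == c) & (y_true == c))  # true positives for class c
        fp = np.sum((y_pred == c) & (y_true != c))  # false positives for class c
        fn = np.sum((y_pred != c) & (y_true == c))  # false negatives for class c
        denom = 2 * tp + fp + fn
        # Assumed convention: a class with no true and no predicted samples scores 0.
        scores.append(2 * tp / denom if denom > 0 else 0.0)
    return float(np.mean(scores))

# Toy imbalanced data (hypothetical): 8 samples of class 0, 2 samples of class 1.
y_true = [0] * 8 + [1] * 2
always_majority = [0] * 10  # a classifier that ignores the minority class

print("accuracy:", float(np.mean(np.array(y_true) == np.array(always_majority))))
print("macro F1:", macro_f1(y_true, always_majority, num_classes=2))
```

In this toy case the accuracy is 0.8 while the macro F1 is roughly 0.44, which is why macro F1 is a natural metric when classes are highly imbalanced.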

Utilizing Excess Resources in Training Neural Networks

Jul 12, 2022

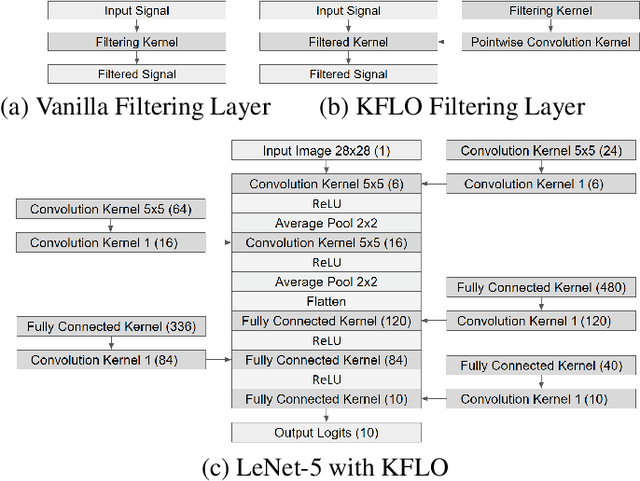

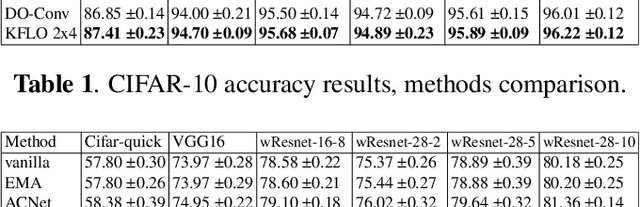

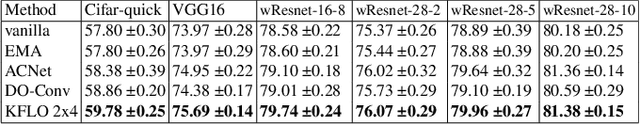

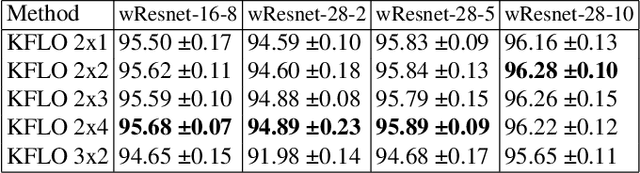

Abstract: In this work, we propose Kernel Filtering Linear Overparameterization (KFLO), where a linear cascade of filtering layers is used during training to improve network performance at test time. We implement this cascade in a kernel filtering fashion, which prevents the trained architecture from becoming unnecessarily deeper. This also allows our approach to be used with almost any network architecture and lets the filtering layers be combined into a single layer at test time. Thus, our approach does not add computational complexity during inference. We demonstrate the advantage of KFLO on various network models and datasets in supervised learning.
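The reason a linear cascade of filters can be merged into one layer at test time is that convolution is associative. The sketch below checks this in 1D with NumPy. It is not the KFLO implementation, and the names `cascade` and `merge_kernels` are hypothetical.

```python
import numpy as np

def cascade(x, kernels):
    """Apply a list of linear filters one after another (training-time view)."""
    out = x
    for k in kernels:
        out = np.convolve(out, k)  # full linear convolution
    return out

def merge_kernels(kernels):
    """Collapse a cascade of filters into one equivalent kernel (test-time view)."""
    merged = np.array([1.0])  # identity element of convolution
    for k in kernels:
        merged = np.convolve(merged, k)
    return merged

rng = np.random.default_rng(0)
x = rng.standard_normal(32)                       # toy input signal
kernels = [rng.standard_normal(3) for _ in range(3)]

merged = merge_kernels(kernels)
same = np.allclose(cascade(x, kernels), np.convolve(x, merged))
print("merged kernel length:", merged.size, "| outputs match:", same)
```

Three length-3 filters collapse into a single length-7 filter, so inference needs only one convolution regardless of how many filters were cascaded during training.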

Membership Inference Attack Using Self Influence Functions

May 26, 2022

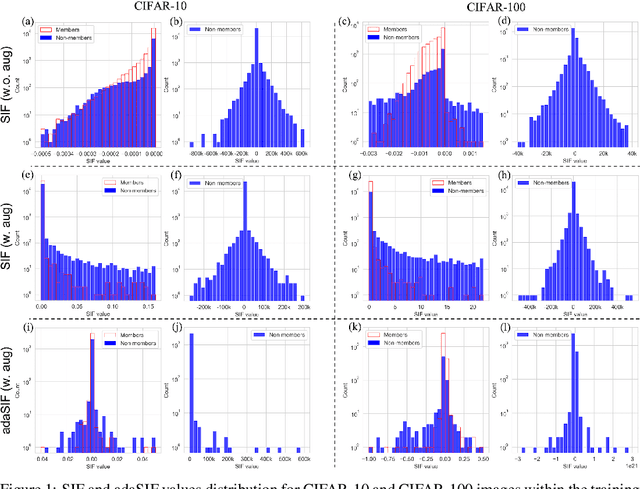

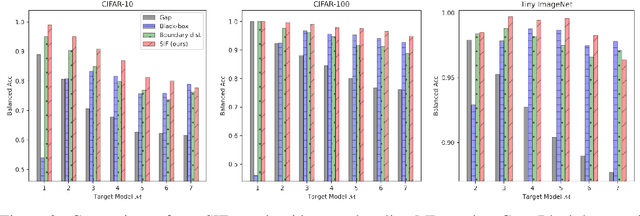

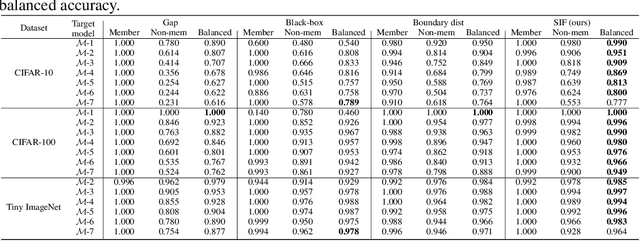

Abstract: Membership inference (MI) attacks aim to determine if a specific data sample was used to train a machine learning model. Thus, MI is a major privacy threat to models trained on private sensitive data, such as medical records. In MI attacks one may consider the black-box setting, where the model's parameters and activations are hidden from the adversary, or the white-box setting, where they are available to the attacker. In this work, we focus on the latter and present a novel MI attack for it that employs influence functions, or more specifically the samples' self-influence scores, to perform the MI prediction. We evaluate our attack on the CIFAR-10, CIFAR-100, and Tiny ImageNet datasets, using a variety of architectures such as AlexNet, ResNet, and DenseNet. Our attack method achieves new state-of-the-art results for training both with and without data augmentations. Code is available at https://github.com/giladcohen/sif_mi_attack.

ProCST: Boosting Semantic Segmentation using Progressive Cyclic Style-Transfer

Apr 25, 2022

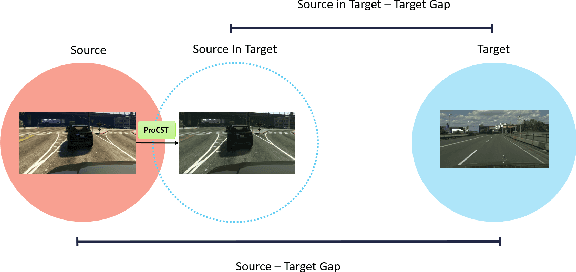

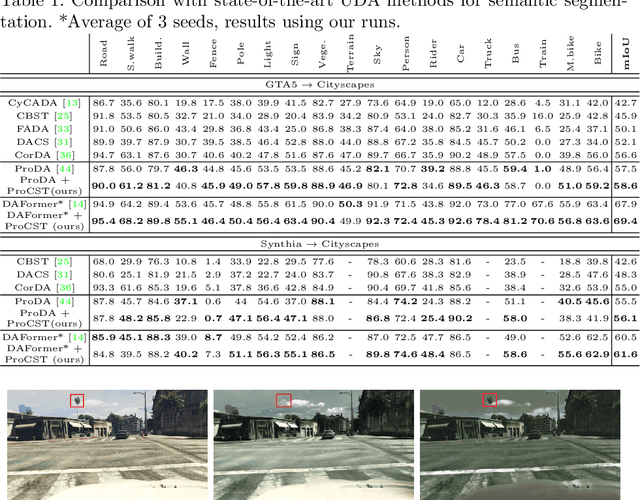

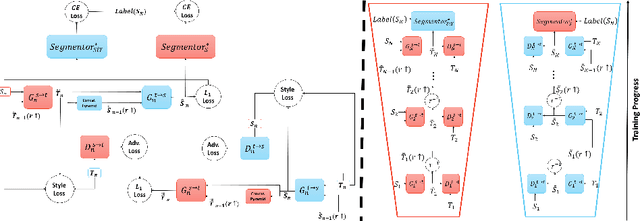

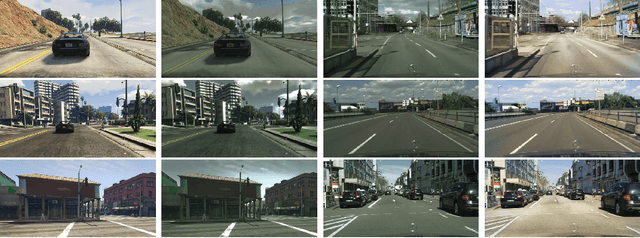

Abstract: Using synthetic data to train neural networks that perform well on real-world data is an important task, as it has the potential to reduce the need for costly data annotation. Yet a network trained on synthetic data alone does not perform well on real data due to the domain gap between the two. Reducing this gap, also known as domain adaptation, has been widely studied in recent years. In the unsupervised domain adaptation (UDA) framework, unlabeled real data is used during training together with labeled synthetic data to obtain a neural network that performs well on real data. In this work, we focus on image data. For the semantic segmentation task, it has been shown that performing image-to-image translation from source to target and then training a segmentation network on the source annotations leads to poor results. Therefore, joint training of both is essential, and it has become common practice in many techniques. Yet closing the large domain gap between the source and the target by directly performing the adaptation between the two is challenging. In this work, we propose a novel two-stage framework for improving domain adaptation techniques. In the first step, we progressively train a multi-scale neural network to perform an initial transfer from the source data to the target data. We denote the new transformed data as "Source in Target" (SiT). Then, we use the generated SiT data as the input to any standard UDA approach. This new data has a reduced domain gap from the desired target domain, and the applied UDA approach further closes the gap. We demonstrate the improvement achieved by our framework with two state-of-the-art methods for semantic segmentation, DAFormer and ProDA, on two UDA tasks, GTA5 to Cityscapes and Synthia to Cityscapes. Code and state-of-the-art checkpoints of ProCST+DAFormer are provided.

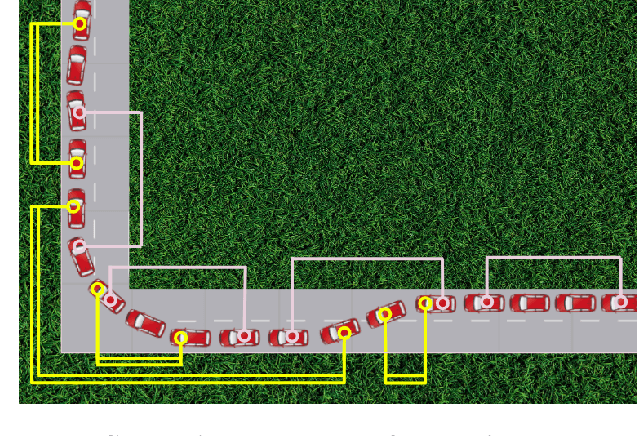

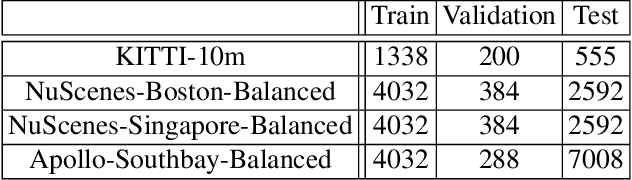

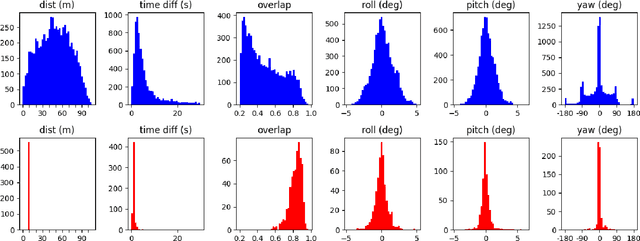

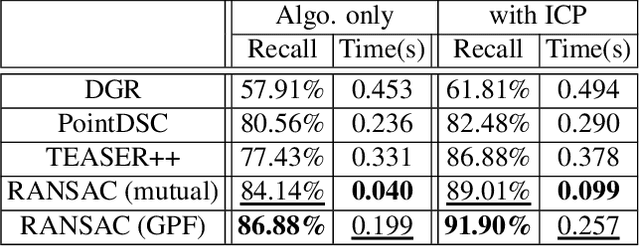

Stress-Testing LiDAR Registration

Apr 16, 2022

Abstract: Point cloud registration (PCR) is an important task in many fields, including autonomous driving with LiDAR sensors. PCR algorithms have improved significantly in recent years by combining deep-learned features with robust estimation methods. These algorithms succeed in scenarios such as indoor scenes and object model registration. However, testing in the automotive LiDAR setting, which presents its own challenges, has been limited. The standard benchmark for this setting, KITTI-10m, has essentially been saturated by recent algorithms: many of them achieve near-perfect recall. In this work, we stress-test recent PCR techniques with LiDAR data. We propose a method for selecting balanced registration sets, which are challenging sets of frame-pairs from LiDAR datasets. They contain a balanced representation of the different relative motions that appear in a dataset, i.e., small and large rotations, small and large offsets in space and time, and various combinations of these. We perform a thorough comparison of accuracy and run-time on these benchmarks. Perhaps unexpectedly, we find that the fastest and simultaneously most accurate approach is a version of advanced RANSAC. We further improve results with a novel pre-filtering method.
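One simple way to obtain a balanced representation of relative motions is to bin candidate frame-pairs by rotation and translation and draw the same number of pairs from every non-empty bin. The sketch below illustrates that idea only. The abstract does not describe the actual selection procedure, so the function `balanced_pair_sample`, the bin edges, and the toy data are all assumptions.

```python
import numpy as np

def balanced_pair_sample(rot_deg, trans_m, rot_edges, trans_edges, per_bin, rng):
    """Pick up to `per_bin` frame-pair indices from each (rotation, translation) bin."""
    rot_bin = np.digitize(np.asarray(rot_deg), rot_edges)
    trans_bin = np.digitize(np.asarray(trans_m), trans_edges)
    chosen = []
    for rb in np.unique(rot_bin):
        for tb in np.unique(trans_bin):
            idx = np.flatnonzero((rot_bin == rb) & (trans_bin == tb))
            if idx.size == 0:
                continue  # no candidate pair exhibits this motion combination
            take = min(per_bin, idx.size)
            chosen.extend(rng.choice(idx, size=take, replace=False).tolist())
    return sorted(chosen)

# Hypothetical candidates: 100 easy pairs (small motion) and 5 hard pairs (large motion).
rot_deg = [1.0] * 100 + [40.0] * 5   # relative rotation in degrees
trans_m = [0.5] * 100 + [8.0] * 5    # relative translation in metres

rng = np.random.default_rng(0)
picked = balanced_pair_sample(rot_deg, trans_m, rot_edges=[10.0],
                              trans_edges=[5.0], per_bin=5, rng=rng)
hard = sum(i >= 100 for i in picked)
print(f"{len(picked)} pairs selected, {hard} of them hard")
```

Uniform sampling from these candidates would yield mostly easy pairs, whereas the binned sample gives the rare large-motion pairs equal weight.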

Denoiser-based projections for 2-D super-resolution multi-reference alignment

Apr 10, 2022

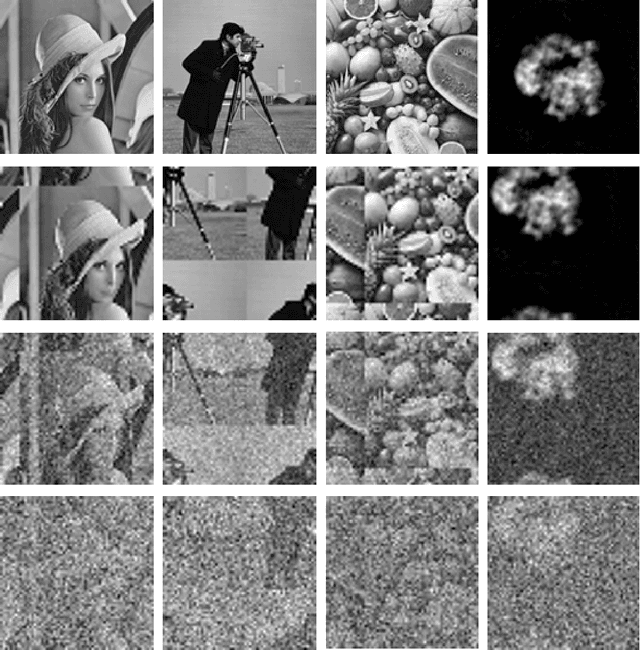

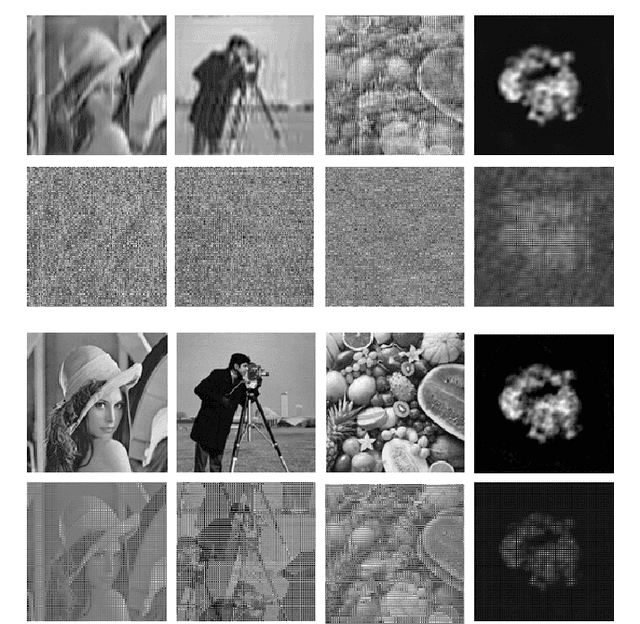

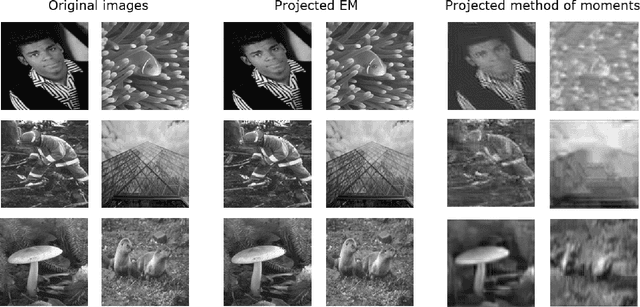

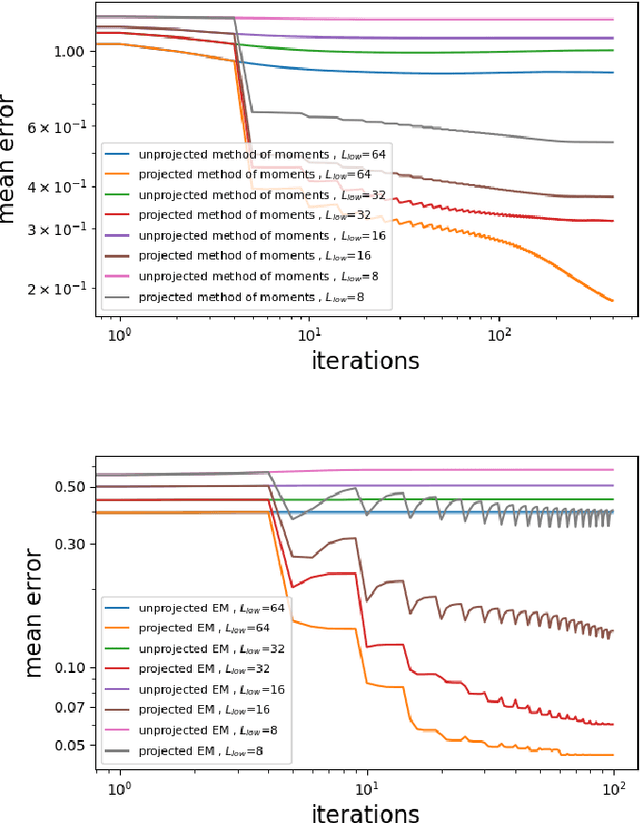

Abstract: We study the 2-D super-resolution multi-reference alignment (SR-MRA) problem: estimating an image from its down-sampled, circularly-translated, and noisy copies. The SR-MRA problem serves as a mathematical abstraction of the structure determination problem for biological molecules. Since the SR-MRA problem is ill-posed without prior knowledge, accurate image estimation relies on designing priors that describe the statistics of the images of interest well. In this work, we build on recent advances in image processing and harness the power of denoisers as priors of images. In particular, we suggest using denoisers as projections, and design two computational frameworks to estimate the image: projected expectation-maximization and projected method of moments. We provide an efficient GPU implementation and demonstrate the effectiveness of these algorithms through extensive numerical experiments on a wide range of parameters and images.
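The observation model in the problem statement (circular translation, down-sampling, additive noise) can be simulated in a few lines. This is a sketch under stated assumptions rather than the authors' code: down-sampling is taken to be plain decimation, the noise is i.i.d. Gaussian, the shifts are uniform, and the name `sr_mra_observation` is hypothetical.

```python
import numpy as np

def sr_mra_observation(image, factor, sigma, rng):
    """Generate one SR-MRA observation from a 2-D image."""
    h, w = image.shape
    # Random circular translation along both axes (assumed uniform).
    dy = int(rng.integers(0, h))
    dx = int(rng.integers(0, w))
    shifted = np.roll(image, shift=(dy, dx), axis=(0, 1))
    # Down-sampling by plain decimation (an assumption; a low-pass
    # filter could precede it in a more realistic model).
    low_res = shifted[::factor, ::factor]
    # Additive white Gaussian noise with standard deviation sigma.
    return low_res + sigma * rng.standard_normal(low_res.shape)

rng = np.random.default_rng(0)
image = rng.random((16, 16))  # toy ground-truth image
observations = [sr_mra_observation(image, factor=4, sigma=0.1, rng=rng)
                for _ in range(100)]
print(observations[0].shape)
```

Each observation retains only a 4 x 4 grid of the 16 x 16 image at an unknown shift, which illustrates why the estimation problem is ill-posed without a prior.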

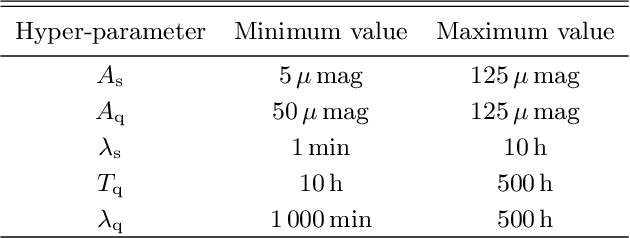

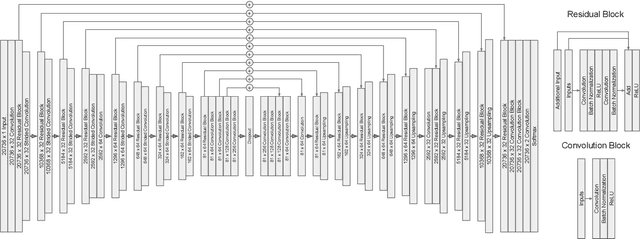

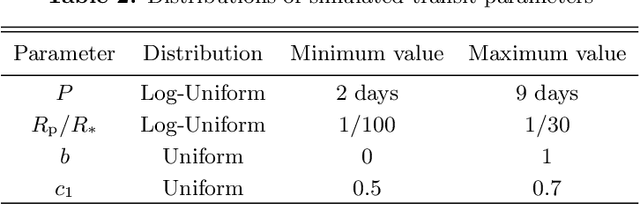

Shallow Transits -- Deep Learning II: Identify Individual Exoplanetary Transits in Red Noise using Deep Learning

Mar 15, 2022

Abstract: In a previous paper, we introduced a deep learning neural network that should be able to detect the existence of very shallow periodic planetary transits in the presence of red noise. The network in that feasibility study did not provide any further details about the detected transits. The current paper fills in this missing part. We present a neural network that tags samples that were obtained during transits. This is essentially similar to the task of identifying the semantic context of each pixel in an image, an important task in computer vision called "semantic segmentation", which is often performed by deep neural networks. The neural network we present makes use of novel deep learning concepts such as U-Nets, Generative Adversarial Networks (GANs), and adversarial loss. The resulting segmentation should allow further studies of the light curves that are tagged as containing transits. This approach to the detection and study of very shallow transits is bound to play a significant role in future space-based transit surveys such as PLATO, which are specifically aimed at detecting those extremely difficult cases of long-period shallow transits. Our segmentation network also adds to the growing toolbox of deep learning approaches that are increasingly used in the study of exoplanets, but so far mainly for vetting transits rather than for their initial detection.

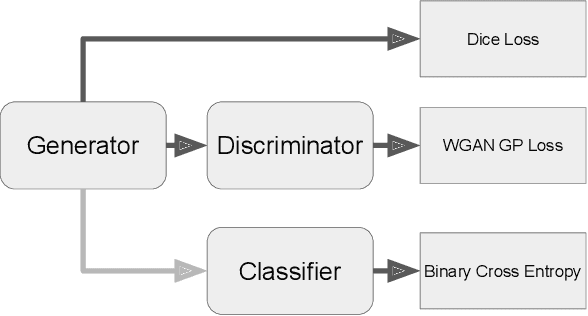

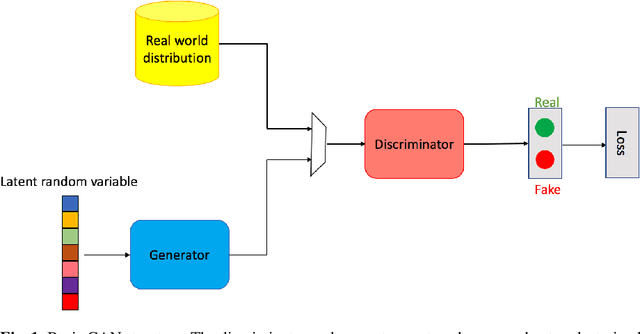

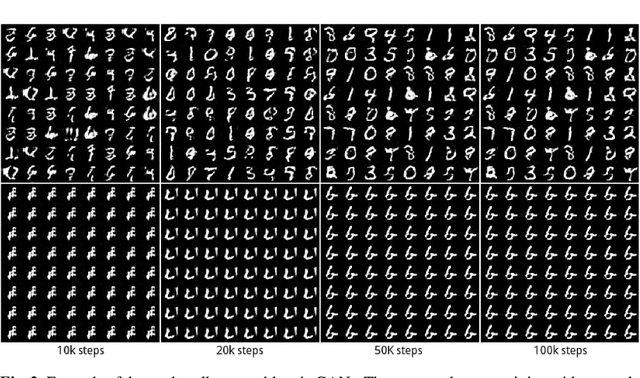

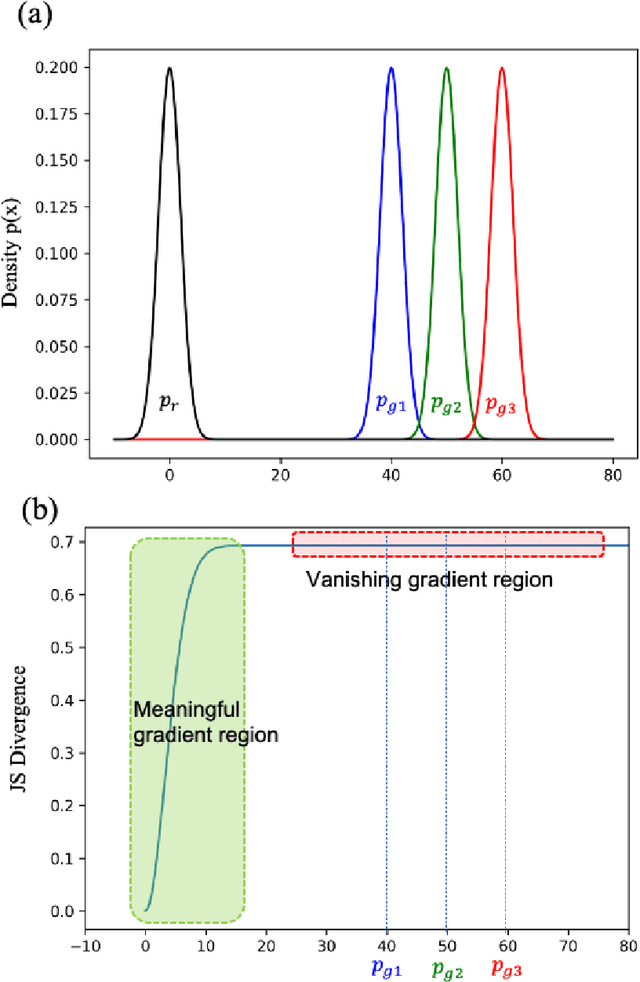

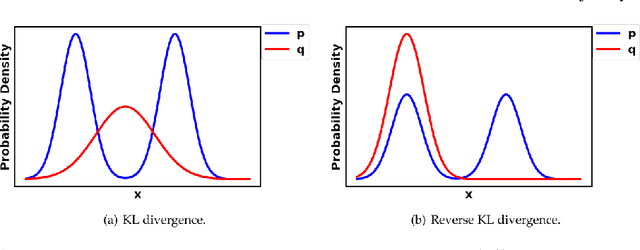

Generative Adversarial Networks

Mar 01, 2022

Abstract: Generative Adversarial Networks (GANs) are very popular frameworks for generating high-quality data, and are widely used in both academia and industry in many domains. Arguably, their most substantial impact has been in the area of computer vision, where they achieve state-of-the-art image generation. This chapter gives an introduction to GANs by discussing their principal mechanism and presenting some of their inherent problems during training and evaluation. We focus on three issues: (1) mode collapse, (2) vanishing gradients, and (3) generation of low-quality images. We then list some architecture-variant and loss-variant GANs that remedy the above challenges. Lastly, we present two examples of GANs in real-world applications: data augmentation and face image generation.
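The vanishing-gradient issue can be illustrated with the generator loss of the original minimax GAN formulation. Writing the discriminator output as $D = \sigma(a)$ for a logit $a$, the saturating loss $\log(1 - D)$ has gradient $-\sigma(a)$ with respect to $a$, while the non-saturating alternative $-\log D$ has gradient $-(1 - \sigma(a))$. The sketch below evaluates both. It is a generic illustration, not necessarily the treatment used in the chapter, and the function names are hypothetical.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def saturating_grad(a):
    """d/da of log(1 - sigmoid(a)): the minimax generator loss."""
    return -sigmoid(a)

def non_saturating_grad(a):
    """d/da of -log(sigmoid(a)): the non-saturating generator loss."""
    return -(1.0 - sigmoid(a))

# Logits of the discriminator on generated samples. Very negative values
# mean the discriminator confidently rejects the fakes, which is typical
# early in training.
for a in (-10.0, -5.0, 0.0):
    print(f"logit {a:6.1f}: saturating {saturating_grad(a):+.5f}, "
          f"non-saturating {non_saturating_grad(a):+.5f}")
```

When the discriminator confidently rejects generated samples, the saturating gradient is close to zero and the generator barely learns, whereas the non-saturating gradient stays close to -1.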

SPAGHETTI: Editing Implicit Shapes Through Part Aware Generation

Jan 31, 2022

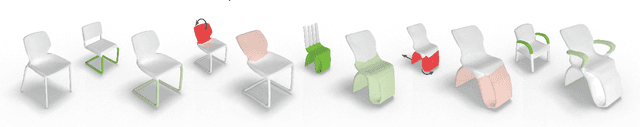

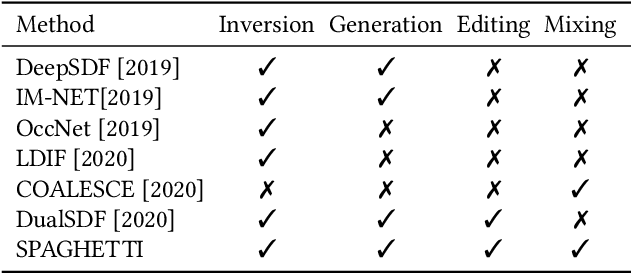

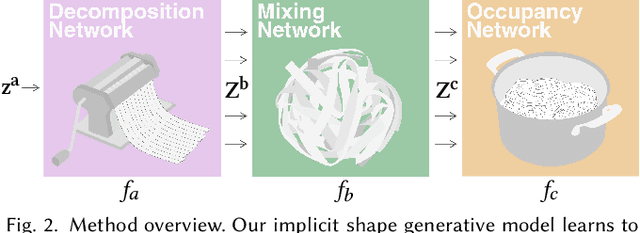

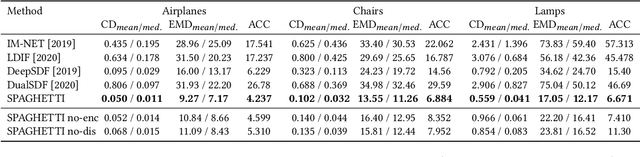

Abstract: Neural implicit fields are quickly emerging as an attractive representation for learning-based techniques. However, adopting them for 3D shape modeling and editing is challenging. We introduce a method for $\mathbf{E}$diting $\mathbf{I}$mplicit $\mathbf{S}$hapes $\mathbf{T}$hrough $\mathbf{P}$art $\mathbf{A}$ware $\mathbf{G}$enera$\mathbf{T}$ion, permuted in short as SPAGHETTI. Our architecture allows for manipulation of implicit shapes by means of transforming, interpolating and combining shape segments together, without requiring explicit part supervision. SPAGHETTI disentangles shape part representation into extrinsic and intrinsic geometric information. This characteristic enables a generative framework with part-level control. The modeling capabilities of SPAGHETTI are demonstrated using an interactive graphical interface, where users can directly edit neural implicit shapes.

Extending the Vocabulary of Fictional Languages using Neural Networks

Jan 18, 2022

Abstract: Fictional languages have become increasingly popular in recent years, appearing in novels, movies, TV shows, comics, and video games. While some of these fictional languages have a complete vocabulary, most do not. We propose a deep learning solution to this problem. Using style transfer and machine translation tools, we generate new words for a given target fictional language while maintaining the style of its creator, hence extending this language's vocabulary.
