Qingxin Zhu

Adversarial Samples on Android Malware Detection Systems for IoT Systems

Feb 12, 2019

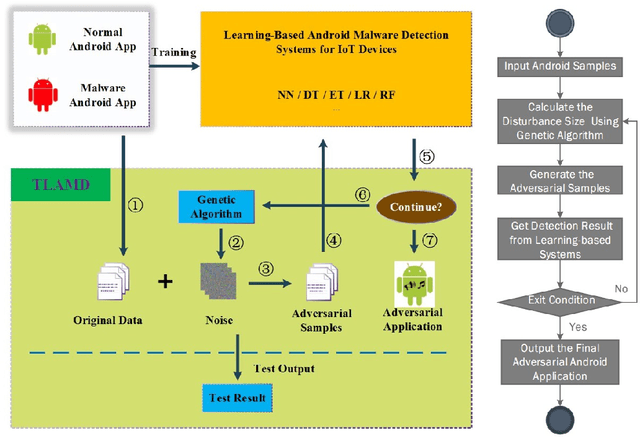

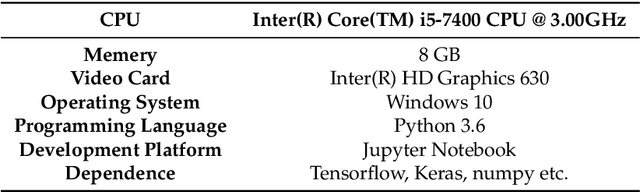

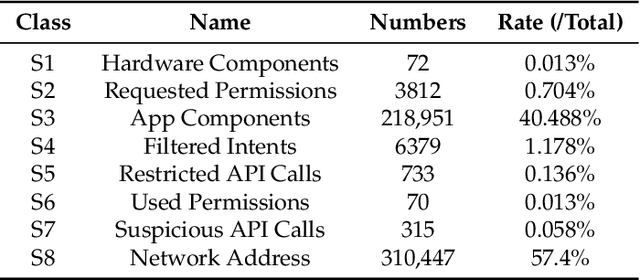

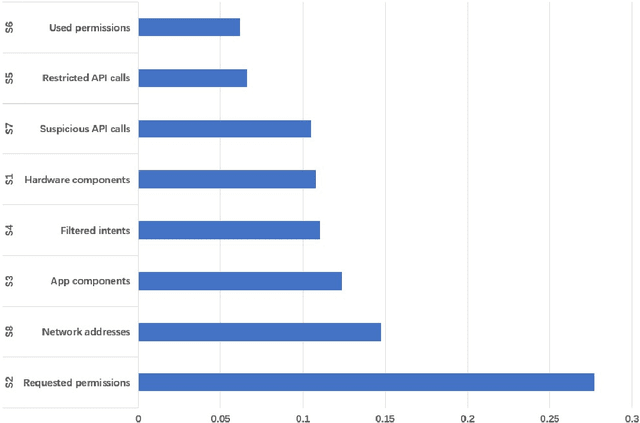

Abstract: Many IoT (Internet of Things) systems run Android or Android-like systems. With the continuous development of machine learning algorithms, learning-based Android malware detection systems for IoT devices have become increasingly common. However, these learning-based detection models are often vulnerable to adversarial samples. An automated testing framework is needed to help these learning-based malware detection systems for IoT devices perform security analysis. Most current methods of generating adversarial samples require the training parameters of the model, and most target image data. To solve this problem, we propose a testing framework for learning-based Android malware detection systems (TLAMD) for IoT devices. The key challenge is how to construct a suitable fitness function to generate an effective adversarial sample without affecting the features of the application. By introducing genetic algorithms and some technical improvements, our test framework can generate adversarial samples for IoT Android applications with a success rate of nearly 100% and can perform black-box testing on the system.
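
The idea of a genetic search driven by a fitness function against a black-box detector can be illustrated with a minimal sketch. It is not the TLAMD implementation: the binary feature vector, the toy `detector_score` function and its `WEIGHTS`, the rule that features may only be added (one possible reading of "without affecting the features of the application"), and the `penalty` term are all assumptions made for illustration.

```python
import math
import random

# Hypothetical stand-in for the detector under test. The attack only reads
# its output score, never these weights (black-box setting).
WEIGHTS = [1.5, 1.2, -0.8, -0.9, -0.7, 0.2, -0.6, -0.5]


def detector_score(features):
    """Return a malware score in (0, 1); 0.5 or above means 'malware'."""
    z = sum(w * f for w, f in zip(WEIGHTS, features))
    return 1.0 / (1.0 + math.exp(-z))


def apply_mask(original, mask):
    """Add features selected by the mask; existing features are never removed."""
    return [o | m for o, m in zip(original, mask)]


def fitness(original, mask, penalty=0.01):
    """Lower is better: detector score plus a small cost per added feature."""
    added = sum(1 for o, m in zip(original, mask) if m and not o)
    return detector_score(apply_mask(original, mask)) + penalty * added


def genetic_attack(original, pop_size=20, generations=50,
                   mutation_rate=0.1, seed=0):
    """Search for a feature vector the detector scores below 0.5."""
    rng = random.Random(seed)
    n = len(original)
    population = [[rng.randint(0, 1) for _ in range(n)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: rank candidates by fitness, best first.
        population.sort(key=lambda m: fitness(original, m))
        best = apply_mask(original, population[0])
        if detector_score(best) < 0.5:
            return best
        parents = population[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n)          # single-point crossover
            child = a[:cut] + b[cut:]
            child = [bit ^ 1 if rng.random() < mutation_rate else bit
                     for bit in child]         # bit-flip mutation
            children.append(child)
        population = parents + children
    return None  # no adversarial sample found within the budget
```

Calling `genetic_attack([1, 1, 0, 0, 0, 0, 0, 0])` searches for a variant that keeps the two original features and adds others until the toy detector's score falls below 0.5. A real test harness would replace `detector_score` with queries to the system under test.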

A Black-box Attack on Neural Networks Based on Swarm Evolutionary Algorithm

Jan 26, 2019

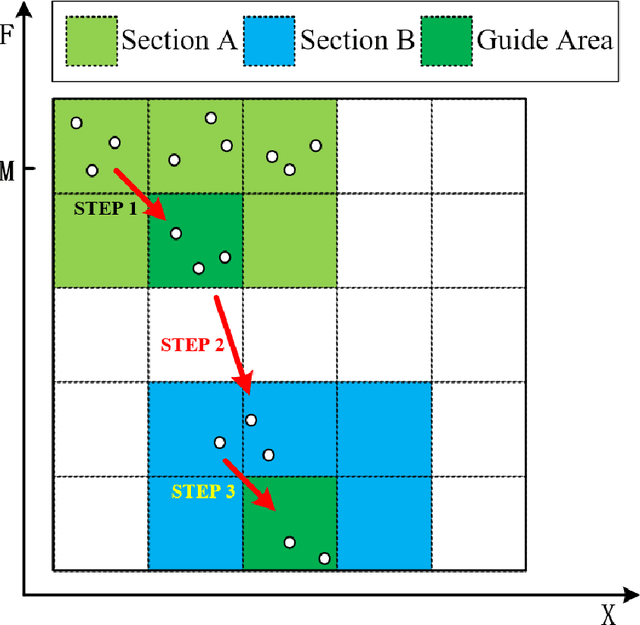

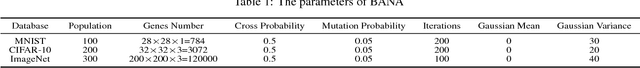

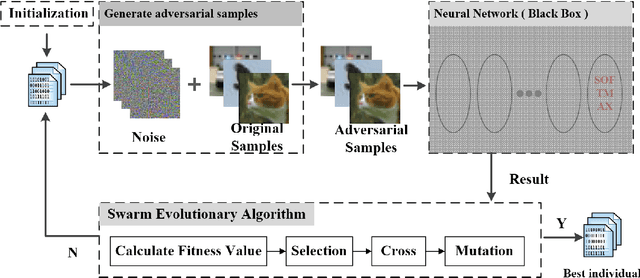

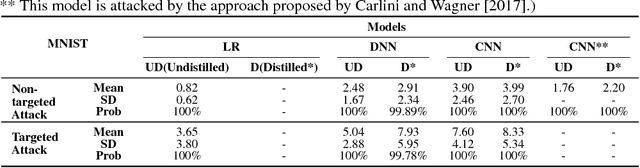

Abstract: Neural networks play an increasingly important role in the field of machine learning and are included in many applications in society. Unfortunately, neural networks suffer from adversarial samples generated to attack them. However, most of the generation approaches either assume that the attacker has full knowledge of the neural network model or are limited by the type of attacked model. In this paper, we propose a new approach that generates a black-box attack on neural networks based on the swarm evolutionary algorithm. Benefiting from the improvements in the technology and theoretical characteristics of evolutionary algorithms, our approach has the advantages of effectiveness, black-box attack, generality, and randomness. Our experimental results show that both the MNIST images and the CIFAR-10 images can be perturbed to successfully generate a black-box attack with 100% probability on average. In addition, the proposed attack, which is successful on distilled neural networks with almost 100% probability, is resistant to defensive distillation. The experimental results also indicate that the robustness of the artificial intelligence algorithm is related to the complexity of the model and the data set. In addition, we find that the adversarial samples to some extent reproduce the characteristics of the sample data learned by the neural network model.

Characteristic matrix of covering and its application to boolean matrix decomposition and axiomatization

Mar 03, 2013

Abstract: Covering is an important type of data structure, while covering-based rough sets provide an efficient and systematic theory to deal with covering data. In this paper, we use boolean matrices to represent and axiomatize three types of covering approximation operators. First, we define two types of characteristic matrices of a covering, which are essentially square boolean matrices, and study their properties. Through the characteristic matrices, three important types of covering approximation operators are concisely and equivalently represented. Second, matrix representations of covering approximation operators are used in boolean matrix decomposition. We provide a necessary and sufficient condition for a square boolean matrix to decompose into the boolean product of another matrix and its transpose, and we develop an algorithm for this boolean matrix decomposition. Finally, based on the above results, these three types of covering approximation operators are axiomatized using boolean matrices. In short, this work borrows extensively from boolean matrices and presents a new view for studying covering-based rough sets.
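
The phrase "boolean product of another matrix and its transpose" can be made concrete with a small sketch. The element-by-block encoding in `covering_matrix` is an illustrative assumption and is not taken from the abstract. The helper names are likewise hypothetical, and the paper's decomposition algorithm itself is not reproduced here.

```python
def boolean_product(a, b):
    """Boolean matrix product: entry (i, j) is OR over k of a[i][k] AND b[k][j]."""
    return [[int(any(a[i][k] and b[k][j] for k in range(len(b))))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    """Swap rows and columns."""
    return [list(row) for row in zip(*m)]


def covering_matrix(universe, covering):
    """Assumed encoding: rows are elements, columns are blocks of the covering."""
    return [[int(x in block) for block in covering] for x in universe]


universe = ["a", "b", "c"]
covering = [{"a", "b"}, {"b", "c"}]

m = covering_matrix(universe, covering)    # 3 x 2 boolean matrix
square = boolean_product(m, transpose(m))  # 3 x 3 boolean matrix
```

Under this encoding, entry (i, j) of `square` is 1 exactly when elements i and j share at least one block, so `square` is a square boolean matrix that, by construction, decomposes into the boolean product of `m` and its transpose.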
