Nima Mohammadi

Cindy

Mobile Distributed MIMO (MD-MIMO) for NextG: Mobility Meets Cooperation in Distributed Arrays

Apr 16, 2025

Abstract: Distributed multiple-input multiple-output (D-MIMO) is a promising technology for realizing massive MIMO gains by fiber-connecting distributed antenna arrays, thereby overcoming the form-factor limitations of co-located MIMO. In this paper, we introduce the concept of a mobile D-MIMO (MD-MIMO) network, a further extension of D-MIMO technology in which distributed antenna arrays are connected to the base station via a wireless link, allowing all radio network nodes to be mobile. This approach significantly improves deployment flexibility and reduces operating costs, enabling the network to adapt to the highly dynamic nature of next-generation (NextG) networks. We discuss use cases, system design, network architecture, and the key enabling technologies for MD-MIMO. Furthermore, we investigate a case study of MD-MIMO for vehicular networks, presenting detailed performance evaluations for both downlink and uplink. The results show that an MD-MIMO network can provide substantial improvements in network throughput and reliability.

Making Intelligent Reflecting Surfaces More Intelligent: A Roadmap Through Reservoir Computing

Feb 06, 2021

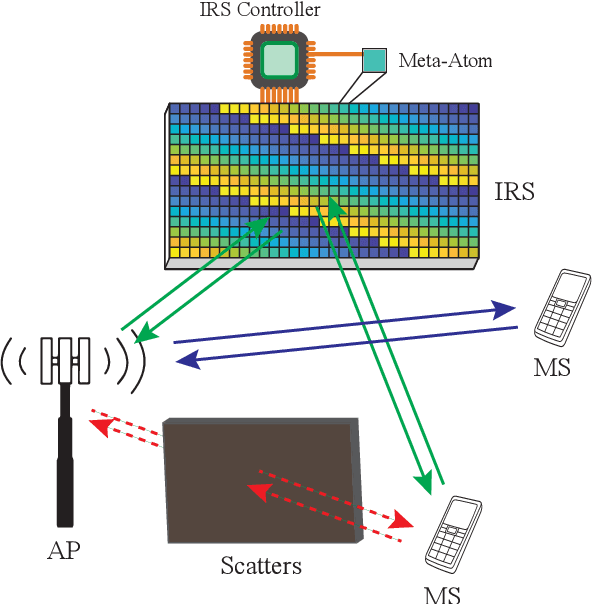

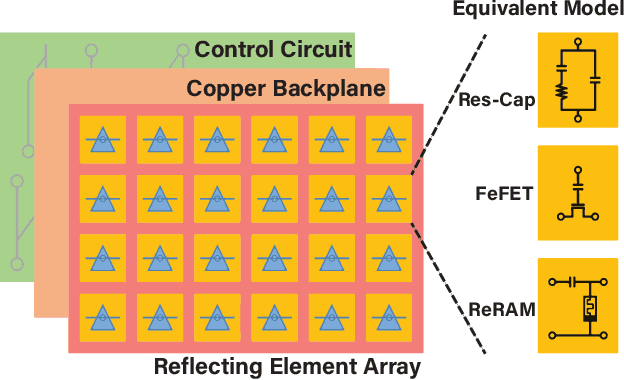

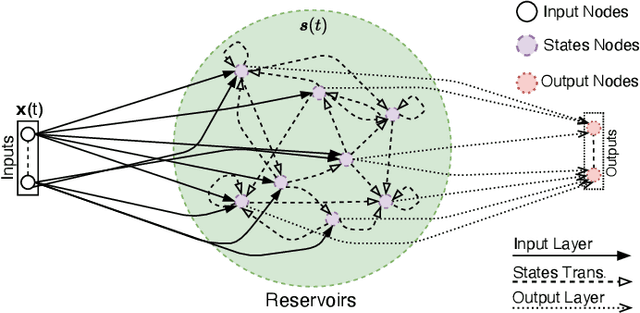

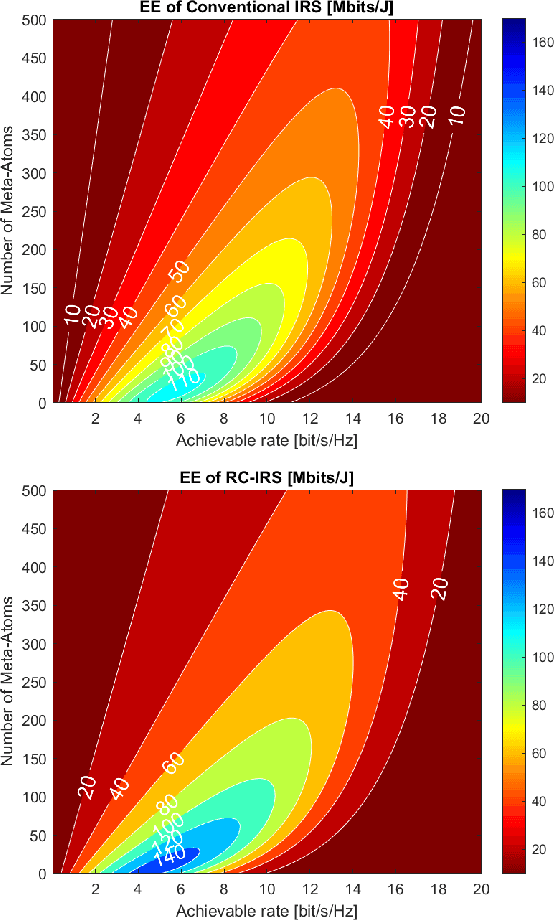

Abstract: This article introduces a neural network-based signal processing framework for intelligent reflecting surface (IRS)-aided wireless communication systems. By modeling radio-frequency (RF) impairments inside the "meta-atoms" of the IRS (including nonlinearity and memory effects), we present an approach that generalizes the entire IRS-aided system as a reservoir computing (RC) system, an efficient recurrent neural network (RNN) operating in a state near the "edge of chaos". This framework enables us to exploit the nonlinearity of this "fabricated" wireless environment to overcome link degradation due to model mismatch. Accordingly, the randomness of the wireless channel and the RF imperfections are naturally embedded into the RC framework, allowing the internal RC dynamics to lie on the edge of chaos. Furthermore, several practical issues, such as channel state information acquisition, passive beamforming design, and physical layer reference signal design, are discussed.
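The RC idea this abstract relies on can be illustrated with a generic echo state network: a fixed, randomly drawn recurrent reservoir whose spectral radius is set just below 1 (a common heuristic for operating near the edge of chaos), with only a linear readout trained by ridge regression. This is a minimal sketch under stated assumptions, not the article's IRS model: the reservoir size, the spectral radius of 0.95, the input scaling, and the toy nonlinear channel with memory in the hypothetical `demo` function are all illustrative choices, and NumPy is assumed to be available.

```python
import numpy as np


def make_reservoir(n_res=100, spectral_radius=0.95, input_scale=0.5, seed=0):
    """Fixed random reservoir: recurrent matrix rescaled to a target spectral radius.

    A radius just below 1 is a common heuristic for running an echo state
    network near the 'edge of chaos' (stable, but with long memory).
    """
    rng = np.random.default_rng(seed)
    w = rng.normal(size=(n_res, n_res))
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    w_in = rng.uniform(-input_scale, input_scale, size=n_res)
    return w, w_in


def run_reservoir(w, w_in, u):
    """Drive the untrained reservoir with a scalar sequence u; return all states."""
    x = np.zeros(w.shape[0])
    states = np.empty((len(u), w.shape[0]))
    for t, u_t in enumerate(u):
        # Nonlinear state update that keeps a fading memory of past inputs.
        x = np.tanh(w @ x + w_in * u_t)
        states[t] = x
    return states


def train_readout(states, target, ridge=1e-6):
    """Only the linear readout is learned (ridge regression); w and w_in stay fixed."""
    n = states.shape[1]
    return np.linalg.solve(states.T @ states + ridge * np.eye(n), states.T @ target)


def demo(n=2000, washout=100, seed=1):
    """Hypothetical toy task: recover BPSK symbols from a nonlinear channel with memory."""
    rng = np.random.default_rng(seed)
    s = rng.choice([-1.0, 1.0], size=n)
    s_prev = np.concatenate(([0.0], s[:-1]))
    # Toy distortion (memory + saturation + noise); this is NOT a model of an IRS.
    r = np.tanh(s + 0.6 * s_prev) + 0.05 * rng.normal(size=n)

    w, w_in = make_reservoir()
    states = run_reservoir(w, w_in, r)[washout:]  # discard the initial transient
    target = s[washout:]
    half = len(target) // 2
    w_out = train_readout(states[:half], target[:half])
    s_hat = np.sign(states[half:] @ w_out)
    return float(np.mean(s_hat == target[half:]))  # symbol accuracy on held-out data


print(f"held-out symbol accuracy: {demo():.3f}")
```

The point of the sketch is that `w` and `w_in` are never trained; in the framing the abstract describes, the randomness of the wireless channel and the RF imperfections play the role of that fixed reservoir.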

Differential Privacy Meets Federated Learning under Communication Constraints

Jan 28, 2021

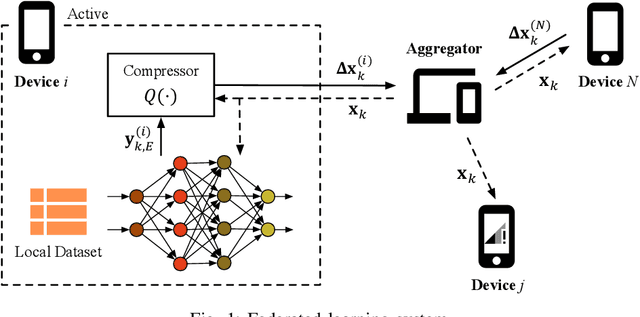

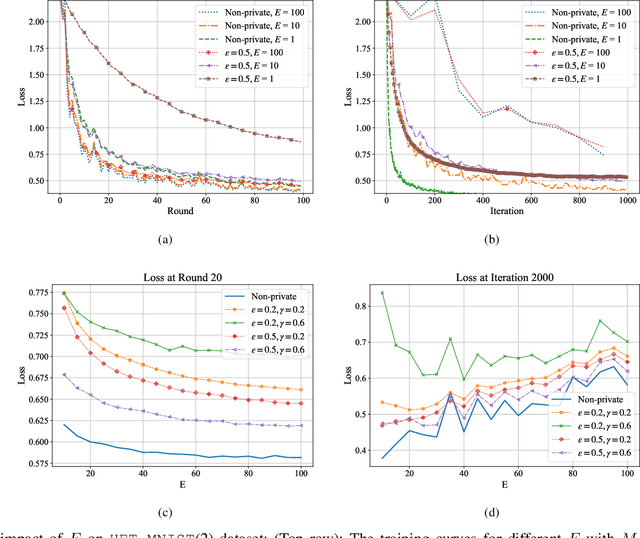

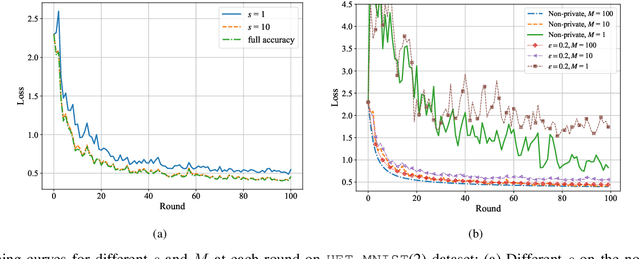

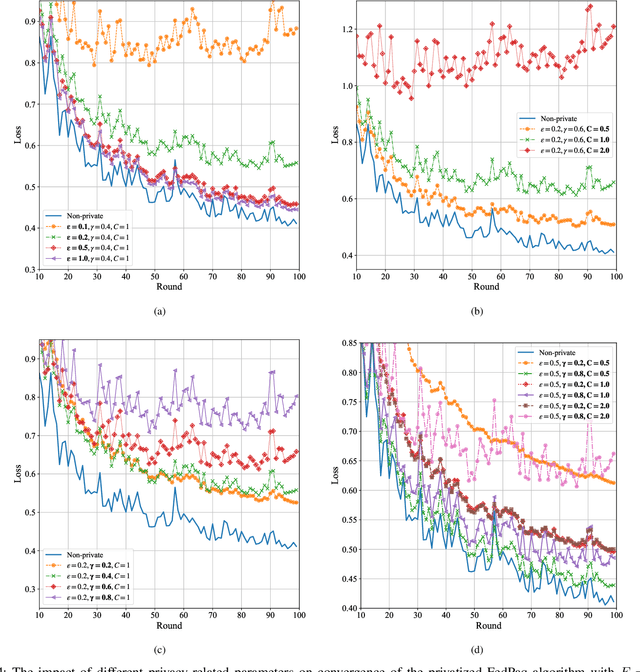

Abstract: The performance of federated learning (FL) systems is bottlenecked by communication costs and training variance. The communication overhead is usually addressed by three communication-reduction techniques, namely model compression, partial device participation, and periodic aggregation, at the cost of increased training variance. Unlike traditional distributed learning systems, FL suffers from data heterogeneity (since devices sample their data from possibly different distributions), which induces additional variance among devices during training. Various variance-reduced training algorithms have been introduced to combat the effects of data heterogeneity, but they usually consume additional communication resources to deliver the necessary control information. Data privacy also remains a critical issue in FL, and there have been attempts to bring differential privacy (DP) into this framework as a mediator between utility and privacy requirements. This paper theoretically and experimentally investigates the trade-offs between communication costs and training variance in a resource-constrained federated system, as well as how communication-reduction techniques interplay in a differentially private setting. The results provide important insights into designing practical privacy-aware FL systems.
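The interplay this abstract describes can be made concrete with a generic DP-FedAvg-style loop that combines the three communication-reduction techniques (compression, partial participation, periodic aggregation) with per-client clipping and the Gaussian mechanism. This is a hypothetical sketch, not the paper's algorithm: the least-squares task, the heterogeneity model in `make_clients`, top-k sparsification as the compression scheme, and all hyperparameters are illustrative assumptions. No privacy accounting (epsilon, delta) is performed, and NumPy is assumed to be available.

```python
import numpy as np


def make_clients(n_clients=20, n_samples=50, dim=5, hetero=0.5, seed=0):
    """Hypothetical heterogeneous data: each client's linear model perturbs w_star."""
    rng = np.random.default_rng(seed)
    w_star = rng.normal(size=dim)
    clients = []
    for _ in range(n_clients):
        w_k = w_star + hetero * rng.normal(size=dim)  # client-specific distribution shift
        x = rng.normal(size=(n_samples, dim))
        y = x @ w_k + 0.1 * rng.normal(size=n_samples)
        clients.append((x, y))
    return clients, w_star


def local_update(w, x, y, steps, lr):
    """Periodic aggregation: several local gradient steps before communicating."""
    w_local = w.copy()
    for _ in range(steps):
        grad = x.T @ (x @ w_local - y) / len(y)  # least-squares gradient
        w_local -= lr * grad
    return w_local - w  # model delta sent to the server


def top_k(delta, k):
    """Compression: keep only the k largest-magnitude coordinates."""
    out = np.zeros_like(delta)
    idx = np.argsort(np.abs(delta))[-k:]
    out[idx] = delta[idx]
    return out


def clip(delta, c):
    """Bound each client's contribution to L2 norm <= c (required by the Gaussian mechanism)."""
    norm = np.linalg.norm(delta)
    return delta * min(1.0, c / norm) if norm > 0 else delta


def dp_fedavg(clients, dim, rounds=50, per_round=5, local_steps=5, lr=0.05,
              k=3, clip_norm=1.0, noise_mult=0.5, seed=1):
    rng = np.random.default_rng(seed)
    w = np.zeros(dim)
    for _ in range(rounds):
        # Partial participation: only a random subset of clients reports this round.
        chosen = rng.choice(len(clients), size=per_round, replace=False)
        deltas = [clip(top_k(local_update(w, *clients[i], local_steps, lr), k), clip_norm)
                  for i in chosen]
        # Gaussian mechanism on the sum: std scales with the per-client bound clip_norm.
        noise = rng.normal(scale=noise_mult * clip_norm, size=dim)
        w += (np.sum(deltas, axis=0) + noise) / per_round
    return w


clients, w_star = make_clients()
for sigma in (0.0, 0.5, 2.0):
    w = dp_fedavg(clients, dim=5, noise_mult=sigma)
    print(f"noise_mult={sigma}: ||w - w_star|| = {np.linalg.norm(w - w_star):.3f}")
```

Clipping is applied after compression so that the L2 bound the noise scale relies on holds for what is actually transmitted. Raising `noise_mult` strengthens privacy but adds variance to the aggregate, while lowering `per_round` or `k` saves communication but also adds variance; comparing such settings is one way to observe the kind of trade-off the abstract refers to.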
