Jianan Bai

A Unified Activity Detection Framework for Massive Access: Beyond the Block-Fading Paradigm

Oct 22, 2024

Abstract: The wireless channel changes continuously with time and frequency, and the block-fading assumption, which is popular in many theoretical analyses, never holds true in practical scenarios. This discrepancy is critical for user activity detection in grant-free random access, where joint processing across multiple coherence blocks is undesirable, especially when the environment becomes more dynamic. In this paper, we develop a framework for low-dimensional approximation of the channel to capture its variations over time and frequency, and use this framework to implement robust activity detection algorithms. Furthermore, we investigate how to efficiently estimate the principal subspace that defines the low-dimensional approximation. We also examine pilot hopping as a way of exploiting time and frequency diversity in scenarios with limited channel coherence, and extend our algorithms to this case. Through numerical examples, we demonstrate a substantial performance improvement achieved by our proposed framework.

Robust Covariance-Based Activity Detection for Massive Access

May 15, 2024

Abstract: The wireless channel changes continuously, and the block-fading assumption, despite its popularity in theoretical contexts, never holds true in practical scenarios. This discrepancy is particularly critical for user activity detection in grant-free random access, where joint processing across multiple resource blocks is usually undesirable. In this paper, we propose employing a low-dimensional approximation of the channel to capture variations over time and frequency and to robustify activity detection algorithms. This approximation entails projecting channel fading vectors onto their principal directions to minimize the approximation order. Through numerical examples, we demonstrate a substantial performance improvement achieved by the resulting activity detection algorithm.
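
The projection onto principal directions mentioned above can be illustrated with the standard eigendecomposition argument. This is a generic sketch and not necessarily the paper's exact formulation; the symbols $\mathbf{h}$ (a zero-mean channel fading vector of length $N$), $\mathbf{R}$ (its covariance), and $r$ (the approximation order) are assumptions introduced here for illustration.

```latex
\begin{align}
\mathbf{R} &= \mathbb{E}\!\left[\mathbf{h}\mathbf{h}^{\mathsf{H}}\right]
  = \sum_{i=1}^{N} \lambda_i \mathbf{u}_i \mathbf{u}_i^{\mathsf{H}},
  \qquad \lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_N \ge 0, \\
\hat{\mathbf{h}}_r &= \mathbf{U}_r \mathbf{U}_r^{\mathsf{H}} \mathbf{h},
  \qquad \mathbf{U}_r = [\mathbf{u}_1, \dots, \mathbf{u}_r], \\
\mathbb{E}\!\left[\lVert \mathbf{h} - \hat{\mathbf{h}}_r \rVert^2\right]
  &= \sum_{i=r+1}^{N} \lambda_i .
\end{align}
```

Under these assumptions, the residual energy is the sum of the discarded eigenvalues, so a small $r$ suffices whenever the eigenvalues of $\mathbf{R}$ decay quickly.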

Activity Detection in Distributed MIMO: Distributed AMP via Likelihood Ratio Fusion

Aug 05, 2022

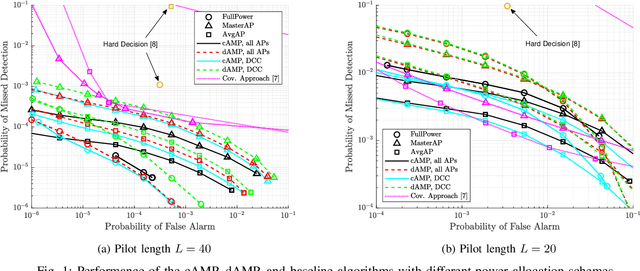

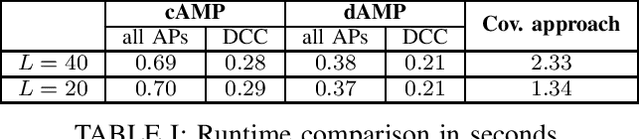

Abstract: We develop a new algorithm for activity detection for grant-free multiple access in distributed multiple-input multiple-output (MIMO). The algorithm is a distributed version of the approximate message passing (AMP) algorithm, based on a soft combination of likelihood ratios computed independently at multiple access points. The theoretical basis of our algorithm is a new observation about the state evolution in AMP. Specifically, with a minimum mean-square error denoiser, the state maintains a block-diagonal structure whenever the covariance matrices of the signals have such a structure. We show by numerical examples that the algorithm outperforms competing schemes from the literature.
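
The idea of softly combining likelihood ratios from several access points can be sketched as follows. This is not the paper's distributed AMP algorithm; it is a minimal illustration of log-likelihood ratio (LLR) fusion that assumes real scalar Gaussian observations which are conditionally independent across access points. The function names and the `prior_active` parameter are hypothetical.

```python
import math


def gaussian_llr(y, mean_active, sigma):
    """LLR log p(y | active) - log p(y | inactive) for a real scalar
    observation: N(mean_active, sigma^2) versus N(0, sigma^2).
    (Assumed toy model, not the paper's signal model.)"""
    return (2.0 * y * mean_active - mean_active ** 2) / (2.0 * sigma ** 2)


def fuse_llrs(local_llrs, prior_active=0.1):
    """Add the prior log-odds to the sum of per-access-point LLRs.
    Summing is exact only if the observations are conditionally
    independent given the activity state (an assumption here)."""
    prior_log_odds = math.log(prior_active / (1.0 - prior_active))
    return prior_log_odds + sum(local_llrs)


def detect(local_llrs, prior_active=0.1):
    """Declare the user active when the posterior log-odds are positive."""
    return fuse_llrs(local_llrs, prior_active) > 0.0
```

In this sketch each access point forwards a single scalar per user, so the fusion step needs no raw received signals.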

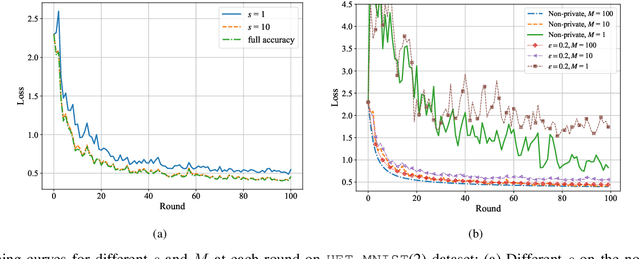

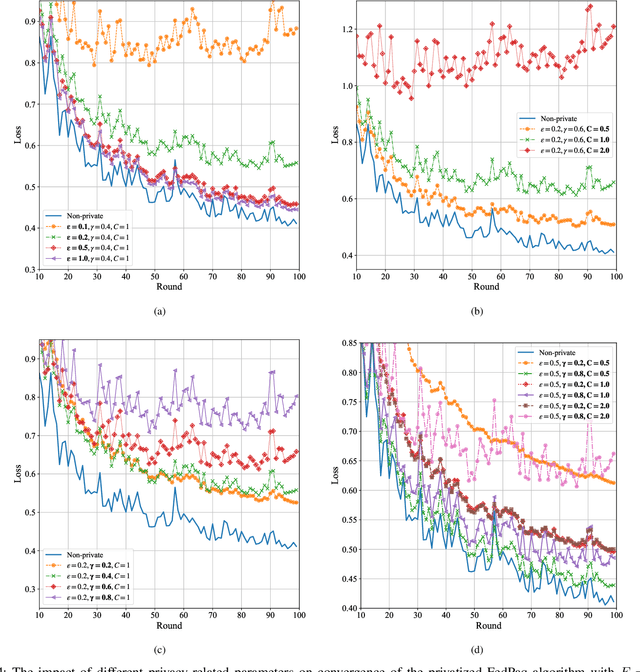

Differential Privacy Meets Federated Learning under Communication Constraints

Jan 28, 2021

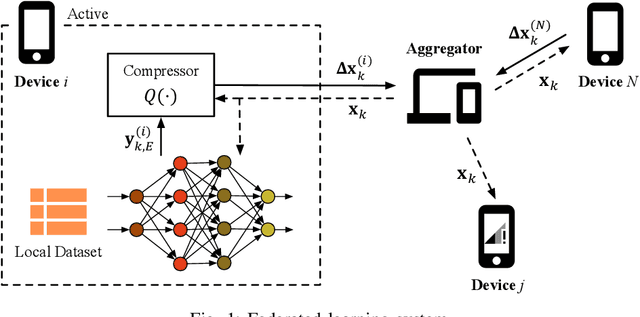

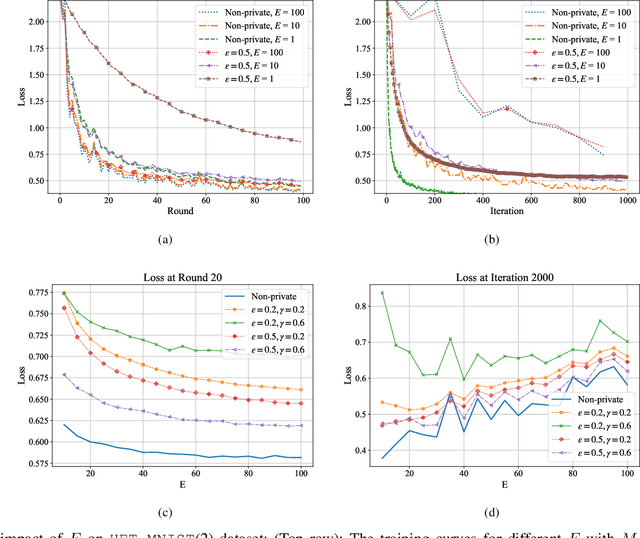

Abstract: The performance of federated learning (FL) systems is bottlenecked by communication costs and training variance. The communication overhead problem is usually addressed by three communication-reduction techniques, namely, model compression, partial device participation, and periodic aggregation, at the cost of increased training variance. Unlike traditional distributed learning systems, federated learning suffers from data heterogeneity (since the devices sample their data from possibly different distributions), which induces additional variance among devices during training. Various variance-reduced training algorithms have been introduced to combat the effects of data heterogeneity, but they usually consume additional communication resources to deliver the necessary control information. Additionally, data privacy remains a critical issue in FL, and thus there have been attempts at bringing differential privacy to this framework as a mediator between utility and privacy requirements. This paper investigates, theoretically and experimentally, the trade-offs between communication costs and training variance in a resource-constrained federated system, and how communication-reduction techniques interplay in a differentially private setting. The results provide important insights into designing practical privacy-aware federated learning systems.
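
One common way to bring differential privacy into federated averaging is to clip each device update and add Gaussian noise before averaging. The sketch below shows that generic recipe; it is not taken from the paper, and the names `clip_norm` and `noise_multiplier` are assumptions. Calibrating the noise to a specific privacy budget is outside its scope.

```python
import math
import random


def l2_norm(vec):
    """Euclidean norm of a list of floats."""
    return math.sqrt(sum(x * x for x in vec))


def clip_update(update, clip_norm):
    """Scale the update so its L2 norm is at most clip_norm."""
    norm = l2_norm(update)
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def private_average(updates, clip_norm, noise_multiplier, rng):
    """Clip every update, add Gaussian noise to the coordinate-wise sum,
    then divide by the number of updates. The noise standard deviation
    noise_multiplier * clip_norm is an assumed parameterization."""
    clipped = [clip_update(u, clip_norm) for u in updates]
    dim = len(clipped[0])
    sigma = noise_multiplier * clip_norm
    noisy_sum = [
        sum(u[i] for u in clipped) + rng.gauss(0.0, sigma)
        for i in range(dim)
    ]
    return [s / len(updates) for s in noisy_sum]
```

Clipping bounds each device's influence on the sum, which is what lets the added noise mask any single contribution; a larger `noise_multiplier` increases training variance in exchange for stronger privacy.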

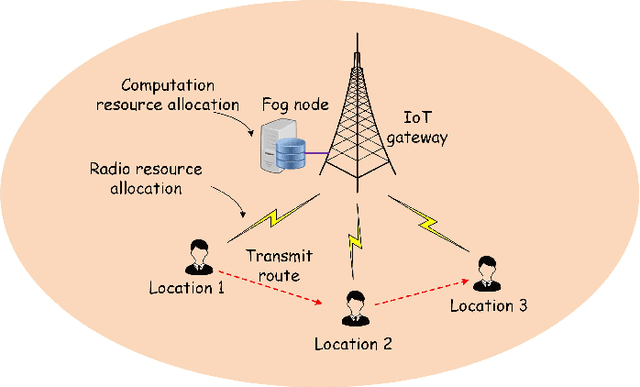

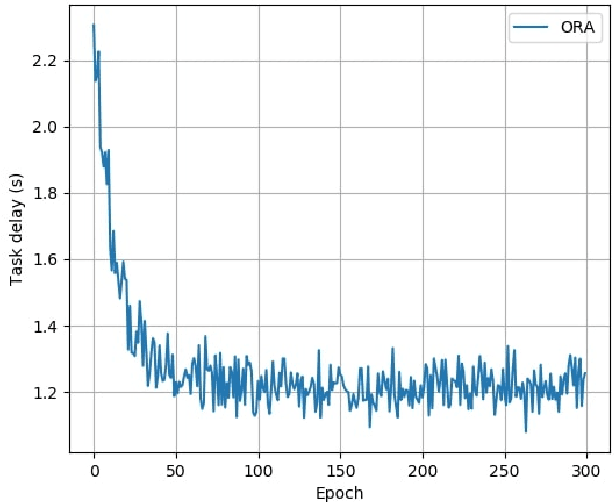

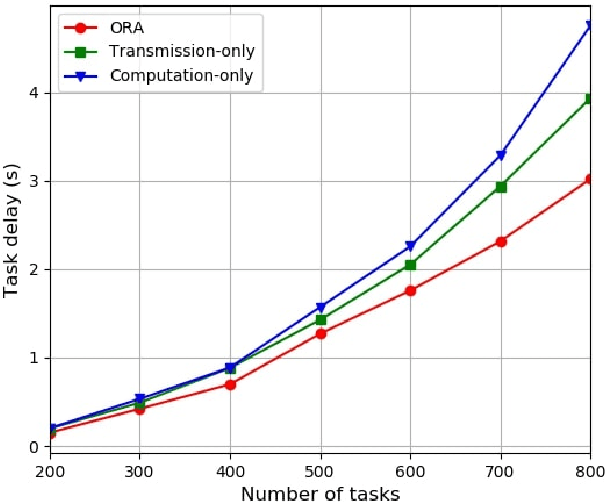

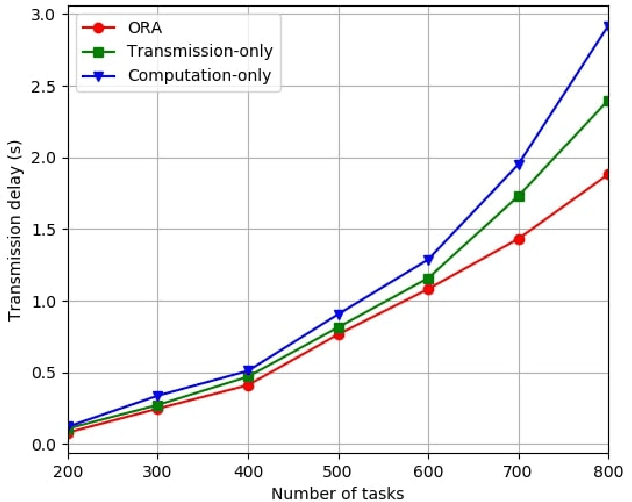

Delay-aware Resource Allocation in Fog-assisted IoT Networks Through Reinforcement Learning

Apr 30, 2020

Abstract: Fog nodes in the vicinity of IoT devices are a promising way to provide low-latency services by offloading tasks from the devices to them. Mobile IoT is composed of mobile IoT devices such as vehicles, wearable devices, and smartphones. Owing to the time-varying channel conditions, traffic loads, and computing loads, it is challenging to improve the quality of service (QoS) of mobile IoT devices. As task delay consists of both the transmission delay and the computing delay, we investigate the allocation of both radio and computation resources in the wireless channel and the fog node to minimize the delay of all tasks while satisfying their QoS constraints. We formulate the resource allocation problem as an integer non-linear problem that takes both the radio resource and the computation resource into account. As IoT tasks are dynamic, the resource allocations for different tasks are coupled with each other, and future information is impractical to obtain. Therefore, we design an on-line reinforcement learning algorithm that makes sub-optimal decisions in real time based on historical data. The performance of the designed algorithm is demonstrated by extensive simulation results.
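
The abstract does not specify which reinforcement learning algorithm is used. As a purely illustrative stand-in, the sketch below shows tabular Q-learning with an epsilon-greedy policy, where the reward could be taken as the negative task delay. The state and action encodings, the function names, and the default `alpha` and `gamma` values are all hypothetical.

```python
import random
from collections import defaultdict


def make_q_table():
    """Q-values default to 0.0 for unseen (state, action) pairs."""
    return defaultdict(float)


def choose_action(q, state, actions, epsilon, rng):
    """Epsilon-greedy: explore with probability epsilon, else act greedily."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda a: q[(state, a)])


def q_update(q, state, action, reward, next_state, actions,
             alpha=0.1, gamma=0.9):
    """One on-line Q-learning step using only the observed transition.
    A reward equal to the negative delay makes lower delay preferable."""
    best_next = max(q[(next_state, a)] for a in actions)
    target = reward + gamma * best_next
    q[(state, action)] += alpha * (target - q[(state, action)])
    return q[(state, action)]
```

Each update uses only the most recent transition, which matches the on-line setting where future task arrivals are unknown.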
