IMS' Systems for the IWSLT 2021 Low-Resource Speech Translation Task

Jun 30, 2021 · Pavel Denisov, Manuel Mager, Ngoc Thang Vu

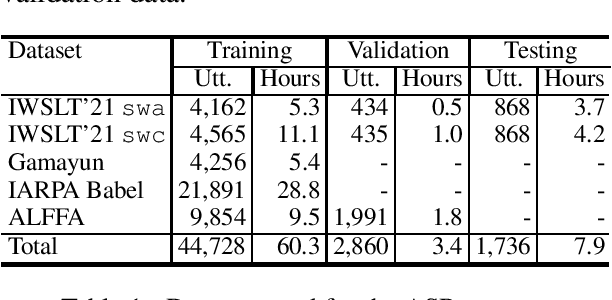

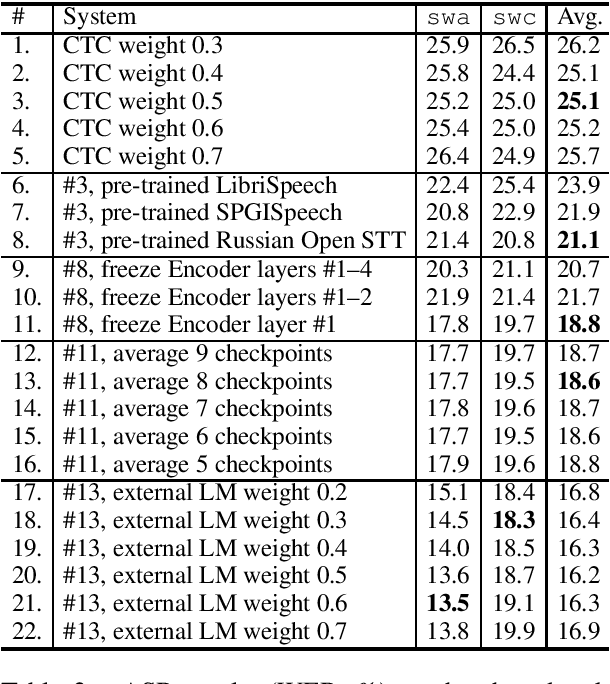

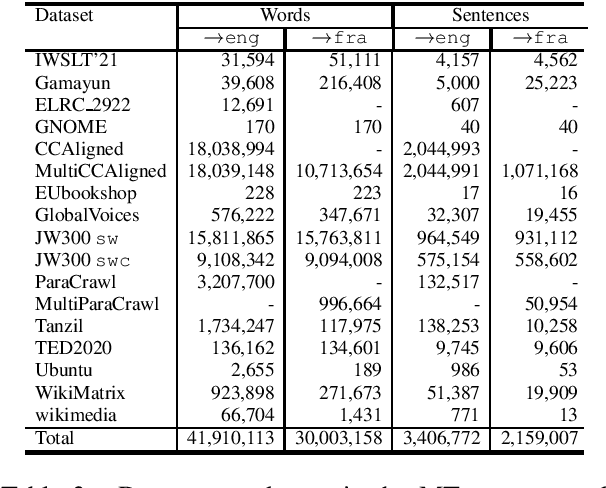

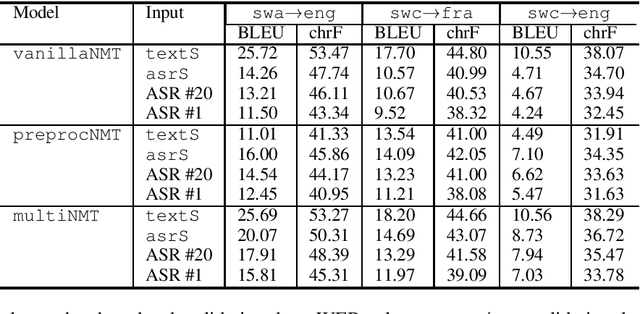

This paper describes the submission of the IMS team to the IWSLT 2021 Low-Resource Speech Translation Shared Task. We utilize state-of-the-art models combined with several data augmentation, multi-task, and transfer learning approaches for the automatic speech recognition (ASR) and machine translation (MT) steps of our cascaded system. We also explore the feasibility of a full end-to-end speech translation (ST) model when only a very constrained amount of ground-truth labeled data is available. Our best system achieves the best performance among all submitted systems for Congolese Swahili to English and French, with BLEU scores of 7.7 and 13.7, respectively, and the second-best result for Coastal Swahili to English, with a BLEU score of 14.9.

AmericasNLI: Evaluating Zero-shot Natural Language Understanding of Pretrained Multilingual Models in Truly Low-resource Languages

Apr 18, 2021 · Abteen Ebrahimi, Manuel Mager, Arturo Oncevay, Vishrav Chaudhary, Luis Chiruzzo, Angela Fan, John Ortega, Ricardo Ramos, Annette Rios, Ivan Vladimir, Gustavo A. Giménez-Lugo, Elisabeth Mager, Graham Neubig, Alexis Palmer, Rolando A. Coto Solano, Ngoc Thang Vu, Katharina Kann

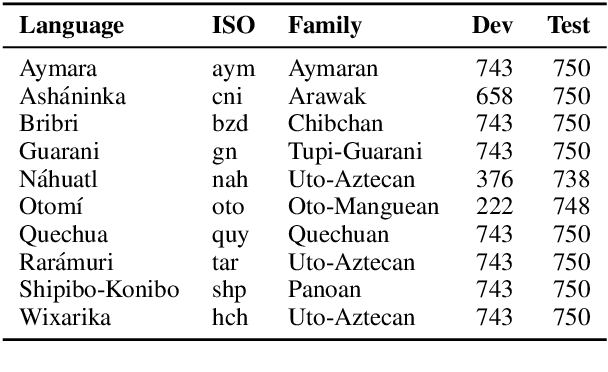

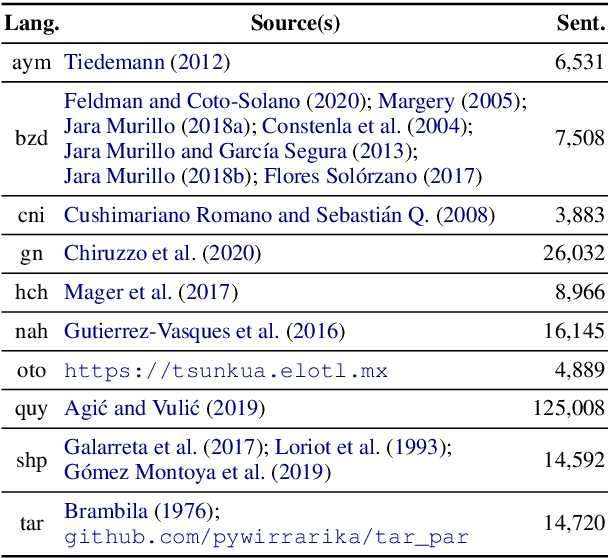

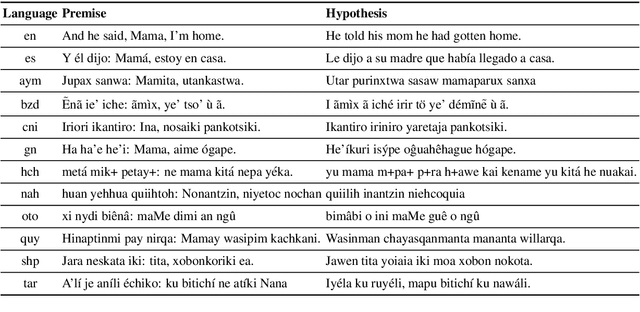

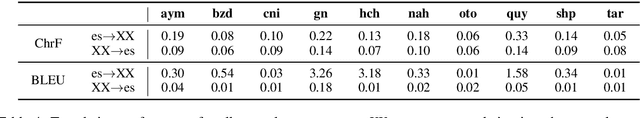

Pretrained multilingual models are able to perform cross-lingual transfer in a zero-shot setting, even for languages unseen during pretraining. However, prior work evaluating performance on unseen languages has largely been limited to low-level, syntactic tasks, and it remains unclear whether zero-shot learning of high-level, semantic tasks is possible for unseen languages. To explore this question, we present AmericasNLI, an extension of XNLI (Conneau et al., 2018) to 10 indigenous languages of the Americas. We conduct experiments with XLM-R, testing multiple zero-shot and translation-based approaches. Additionally, we explore model adaptation via continued pretraining and provide an analysis of the dataset by considering hypothesis-only models. We find that XLM-R's zero-shot performance is poor for all 10 languages, with an average accuracy of 38.62%. Continued pretraining offers improvements, with an average accuracy of 44.05%. Surprisingly, training on poorly translated data outperforms all other methods by far, with an accuracy of 48.72%.

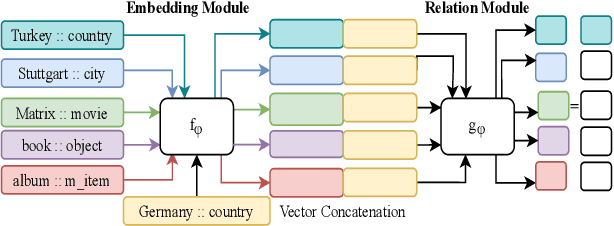

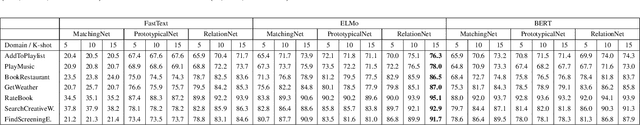

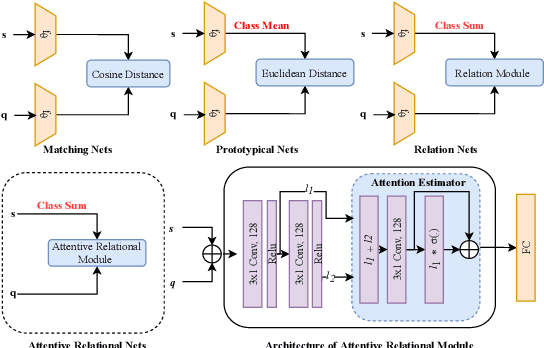

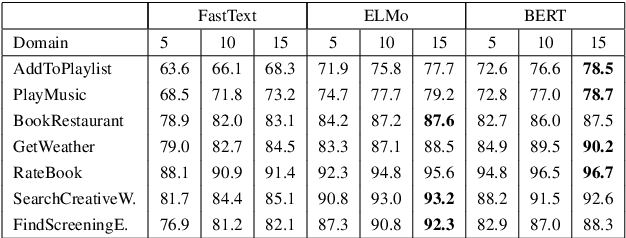

Few-shot Learning for Slot Tagging with Attentive Relational Network

Mar 03, 2021 · Cennet Oguz, Ngoc Thang Vu

Metric-based learning is a well-known family of methods for few-shot learning, especially in computer vision. Recently, these methods have been used in many natural language processing applications, but not for slot tagging. In this paper, we explore metric-based learning methods for the slot tagging task and propose a novel metric-based learning architecture, the Attentive Relational Network. Our proposed method extends relation networks, making them more suitable for natural language processing applications in general, by leveraging pretrained contextual embeddings such as ELMo and BERT and by using an attention mechanism. The results on SNIPS data show that our proposed method outperforms other state-of-the-art metric-based learning methods.

Investigations on Audiovisual Emotion Recognition in Noisy Conditions

Mar 02, 2021 · Michael Neumann, Ngoc Thang Vu

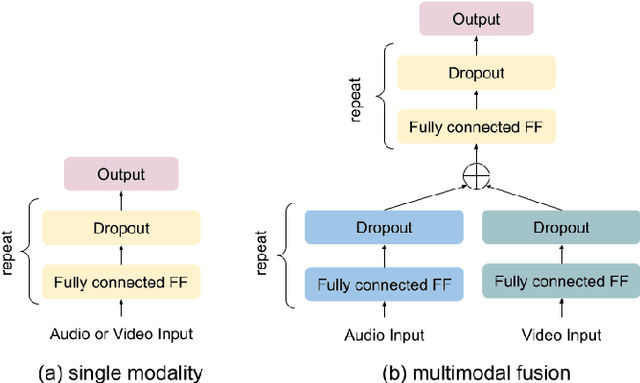

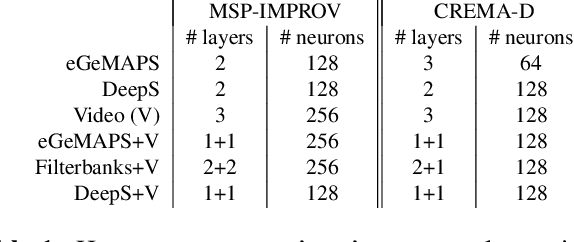

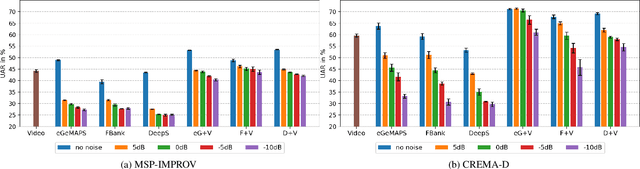

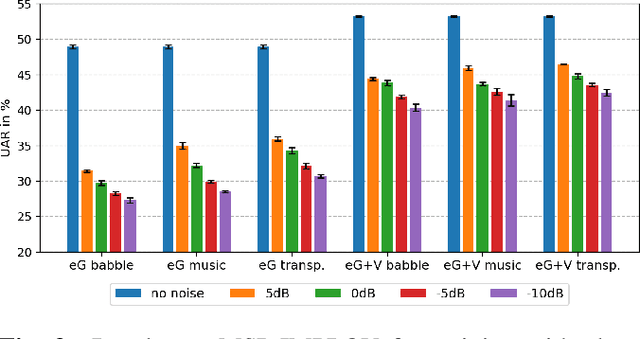

In this paper, we explore audiovisual emotion recognition under noisy acoustic conditions with a focus on speech features. We attempt to answer the following research questions: (i) How does speech emotion recognition perform on noisy data? and (ii) To what extent does a multimodal approach improve the accuracy and compensate for potential performance degradation at different noise levels? We present an analytical investigation on two emotion datasets with superimposed noise at different signal-to-noise ratios, comparing three types of acoustic features. Visual features are incorporated with a hybrid fusion approach: the first neural network layers are separate, modality-specific ones, followed by at least one shared layer before the final prediction. The results show a significant performance decrease when a model trained on clean audio is applied to noisy data, and that the addition of visual features alleviates this effect.
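
As an illustration of what superimposing noise at a chosen signal-to-noise ratio involves, the following is a minimal sketch in plain Python. It is not the authors' pipeline: the function names `mix_at_snr` and `measure_snr_db`, the synthetic sine "speech", and the Gaussian noise are all assumptions made for the example.

```python
import math
import random


def mix_at_snr(speech, noise, target_snr_db):
    """Add noise to speech, scaled so the mix has the requested SNR in dB."""
    if len(speech) != len(noise):
        raise ValueError("speech and noise must have the same length")
    speech_power = sum(s * s for s in speech) / len(speech)
    noise_power = sum(n * n for n in noise) / len(noise)
    if noise_power == 0:
        raise ValueError("noise signal is silent")
    # SNR_dB = 10 * log10(P_speech / P_scaled_noise); solve for the noise gain.
    wanted_noise_power = speech_power / (10 ** (target_snr_db / 10))
    gain = math.sqrt(wanted_noise_power / noise_power)
    return [s + gain * n for s, n in zip(speech, noise)]


def measure_snr_db(speech, mixed):
    """Measure the SNR of a mix, given the clean reference signal."""
    speech_energy = sum(s * s for s in speech)
    residual_energy = sum((m - s) ** 2 for s, m in zip(speech, mixed))
    return 10 * math.log10(speech_energy / residual_energy)


# Hypothetical signals: a 220 Hz tone stands in for speech, white noise for the noise source.
rng = random.Random(0)
speech = [math.sin(2 * math.pi * 220 * t / 16000) for t in range(16000)]
noise = [rng.gauss(0.0, 1.0) for _ in range(16000)]

for level in (20, 10, 0):
    mixed = mix_at_snr(speech, noise, level)
    print(level, round(measure_snr_db(speech, mixed), 2))
```

Scaling the noise rather than the speech keeps the clean signal untouched, so the same utterance can be reused across noise levels.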

Meta-Learning for improving rare word recognition in end-to-end ASR

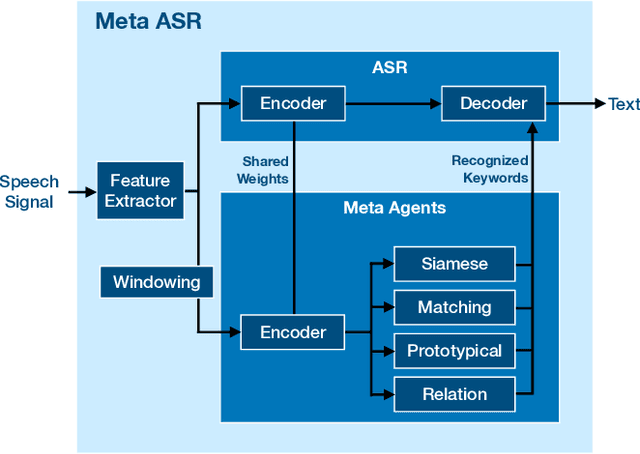

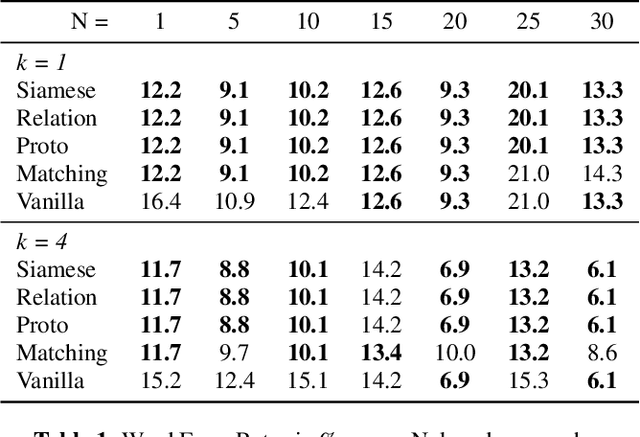

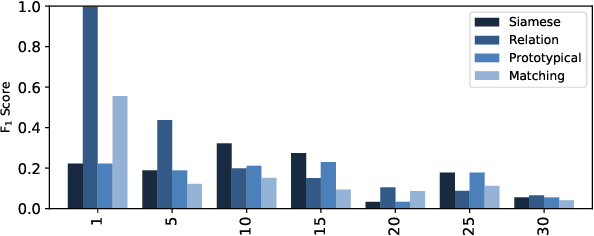

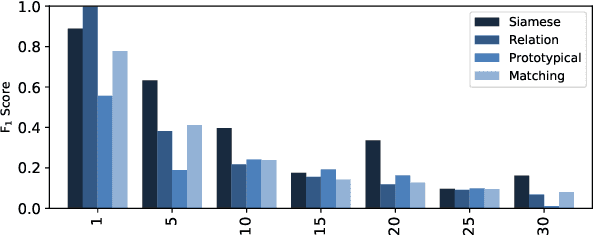

Feb 25, 2021 · Florian Lux, Ngoc Thang Vu

We propose a new method of generating meaningful embeddings for speech, changes to four commonly used meta-learning approaches that enable them to perform keyword spotting in continuous signals, and an approach for combining their outcomes into an end-to-end automatic speech recognition system to improve rare word recognition. We verify the functionality of each of our three contributions in two experiments exploring their performance for different numbers of classes (N-way) and examples per class (k-shot) in a few-shot setting. We find that the speech embeddings work well and that the changes to the meta-learning approaches clearly enable them to perform continuous signal spotting. Despite the interface between keyword spotting and speech recognition being very simple, we are able to consistently improve word error rate by up to 5%.
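
To make the N-way, k-shot terminology concrete, here is a minimal sketch of how a single few-shot episode is commonly drawn. The helper `sample_episode`, the `n_query` parameter, and the toy keyword data are hypothetical and are not taken from the paper.

```python
import random


def sample_episode(examples_by_class, n_way, k_shot, n_query, rng):
    """Draw one episode: n_way classes, k_shot support and n_query query items each."""
    classes = rng.sample(sorted(examples_by_class), n_way)
    support, query = [], []
    for label in classes:
        # Sample without replacement so support and query items never overlap.
        picked = rng.sample(examples_by_class[label], k_shot + n_query)
        support += [(item, label) for item in picked[:k_shot]]
        query += [(item, label) for item in picked[k_shot:]]
    return support, query


# Toy data: each keyword class maps to identifiers of recorded examples.
data = {
    word: [f"{word}_{i}" for i in range(10)]
    for word in ("alpha", "bravo", "charlie", "delta", "echo")
}
support, query = sample_episode(data, n_way=3, k_shot=2, n_query=4, rng=random.Random(0))
print(len(support), len(query))
```

A model is then fitted or conditioned on the support set and scored on the query set, and results are averaged over many such episodes.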

Fine-tuning BERT for Low-Resource Natural Language Understanding via Active Learning

Dec 04, 2020 · Daniel Grießhaber, Johannes Maucher, Ngoc Thang Vu

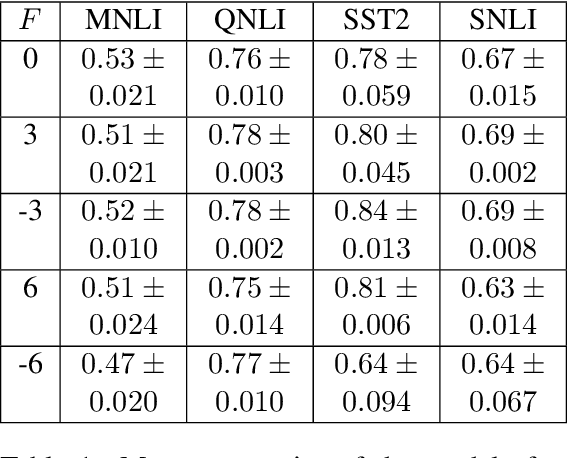

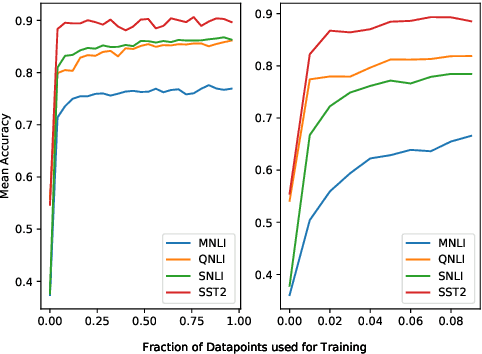

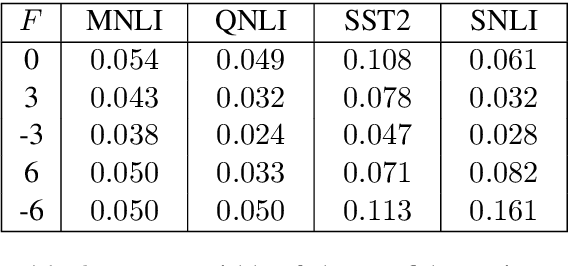

Recently, leveraging pre-trained Transformer-based language models in downstream, task-specific models has advanced state-of-the-art results in natural language understanding tasks. However, little research has explored the suitability of this approach in low-resource settings with fewer than 1,000 training data points. In this work, we explore fine-tuning methods for BERT, a pre-trained Transformer-based language model, by utilizing pool-based active learning to speed up training while keeping the cost of labeling new data constant. Our experimental results on the GLUE data set show an advantage in model performance when maximizing the approximate knowledge gain of the model when querying from the pool of unlabeled data. Finally, we demonstrate and analyze the benefits of freezing layers of the language model during fine-tuning to reduce the number of trainable parameters, making it more suitable for low-resource settings.
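
The query step of pool-based active learning can be sketched as follows. This example uses predictive entropy as the acquisition score, which is a common stand-in and not necessarily the knowledge-gain criterion used in the paper; the names `entropy` and `select_queries` and the toy probabilities are assumptions.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_queries(pool_probs, budget):
    """Return the ids of the `budget` pool items the model is least certain about."""
    ranked = sorted(pool_probs, key=lambda item_id: entropy(pool_probs[item_id]), reverse=True)
    return ranked[:budget]


# Hypothetical model predictions for four unlabeled sentences in the pool.
pool_probs = {
    "s1": [0.50, 0.50],
    "s2": [0.90, 0.10],
    "s3": [0.99, 0.01],
    "s4": [0.70, 0.30],
}
to_label = select_queries(pool_probs, budget=2)
print(to_label)
```

In a full loop, the selected items would be labeled, moved from the pool to the training set, and the model fine-tuned again before the next query round.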

Interpreting Attention Models with Human Visual Attention in Machine Reading Comprehension

Oct 27, 2020 · Ekta Sood, Simon Tannert, Diego Frassinelli, Andreas Bulling, Ngoc Thang Vu

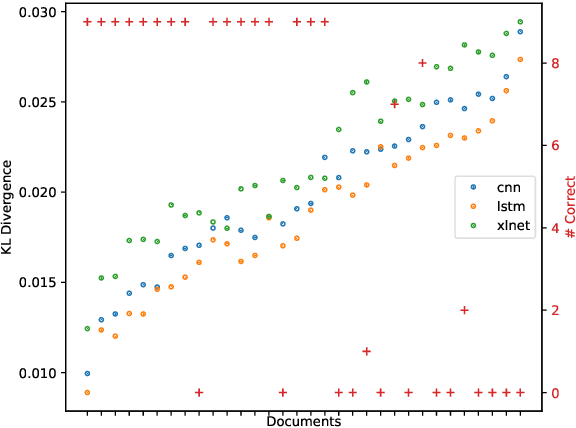

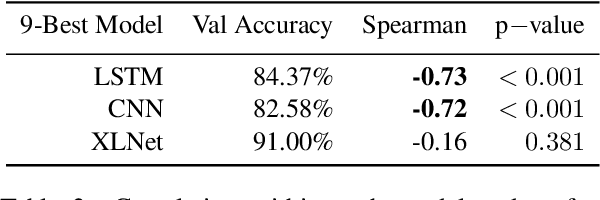

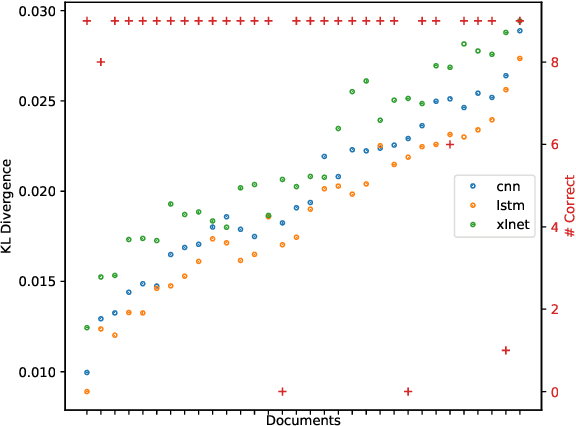

While neural networks with attention mechanisms have achieved superior performance on many natural language processing tasks, it remains unclear to what extent learned attention resembles human visual attention. In this paper, we propose a new method that leverages eye-tracking data to investigate the relationship between human visual attention and neural attention in machine reading comprehension. To this end, we introduce MQA-RC, a novel 23-participant eye-tracking dataset in which participants read movie plots and answered pre-defined questions. We compare state-of-the-art networks based on long short-term memory (LSTM), convolutional neural network (CNN), and XLNet Transformer architectures. We find that higher similarity to human attention correlates significantly with performance for the LSTM and CNN models. However, we show that this relationship does not hold for the XLNet models, even though XLNet performs best on this challenging task. Our results suggest that different architectures learn rather different neural attention strategies and that similarity of neural to human attention does not guarantee best performance.

F1 is Not Enough! Models and Evaluation Towards User-Centered Explainable Question Answering

Oct 13, 2020 · Hendrik Schuff, Heike Adel, Ngoc Thang Vu

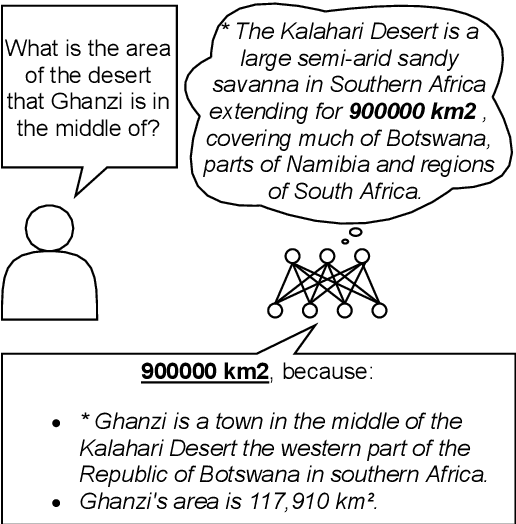

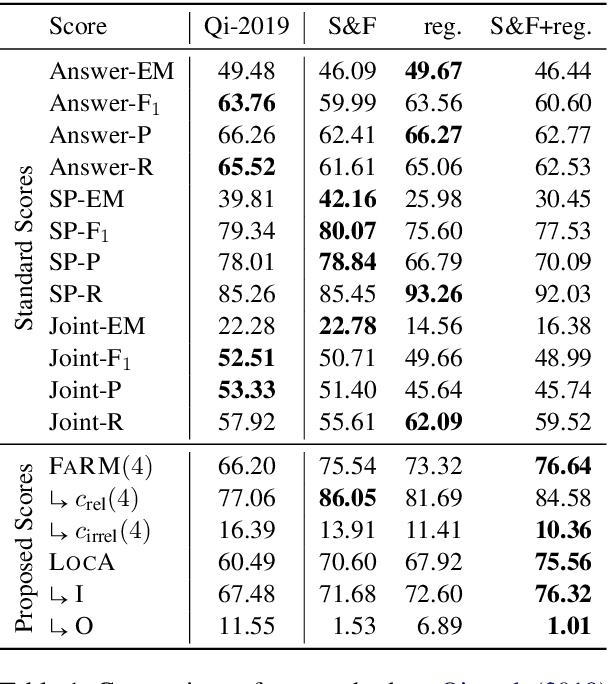

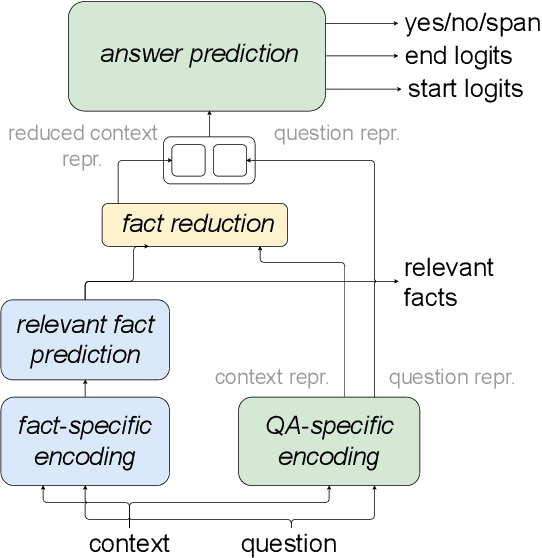

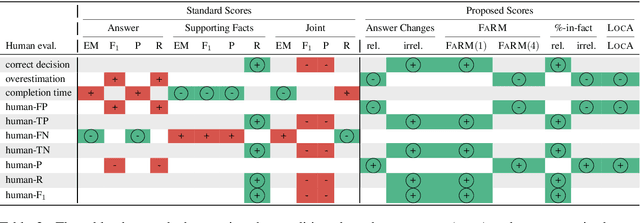

Explainable question answering systems predict an answer together with an explanation showing why the answer has been selected. The goal is to enable users to assess the correctness of the system and understand its reasoning process. However, we show that current models and evaluation settings have shortcomings regarding the coupling of answer and explanation which might cause serious issues in user experience. As a remedy, we propose a hierarchical model and a new regularization term to strengthen the answer-explanation coupling as well as two evaluation scores to quantify the coupling. We conduct experiments on the HOTPOTQA benchmark data set and perform a user study. The user study shows that our models increase the ability of the users to judge the correctness of the system and that scores like F1 are not enough to estimate the usefulness of a model in a practical setting with human users. Our scores are better aligned with user experience, making them promising candidates for model selection.

Pretrained Semantic Speech Embeddings for End-to-End Spoken Language Understanding via Cross-Modal Teacher-Student Learning

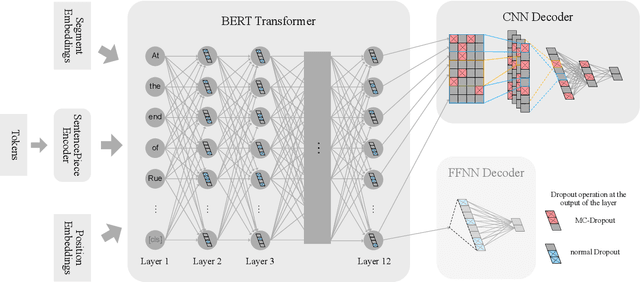

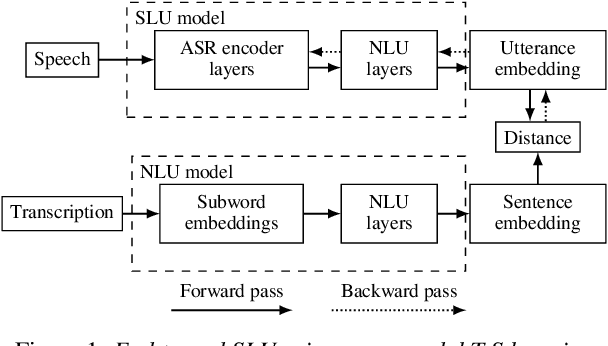

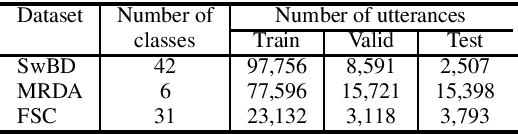

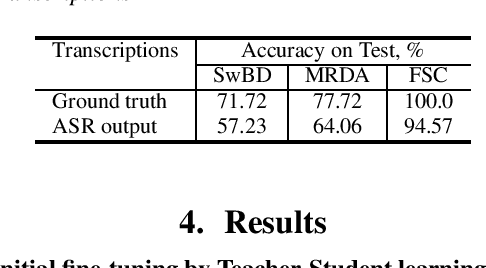

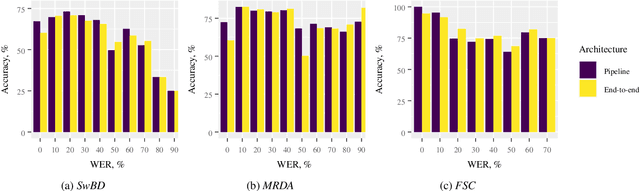

Jul 03, 2020 · Pavel Denisov, Ngoc Thang Vu

Spoken language understanding is typically based on pipeline architectures that include speech recognition and natural language understanding steps. As a result, these components are optimized independently of each other, and the overall system suffers from error propagation. In this paper, we propose a novel training method that enables pretrained contextual embedding models such as BERT to process acoustic features. In particular, we extend such a model with the encoder of a pretrained speech recognition system in order to construct end-to-end spoken language understanding systems. Our proposed method is based on a teacher-student framework across speech and text modalities that aligns the acoustic and the semantic latent spaces. Experimental results on three benchmark datasets show that our system matches the performance of the pipeline architecture without using any training data and outperforms it after fine-tuning with only a few examples.
