Munindar P. Singh

North Carolina State University

Conversation Modeling to Predict Derailment

Mar 20, 2023

Abstract: Conversations among online users sometimes derail, i.e., break down into personal attacks. Such derailment has a negative impact on the healthy growth of cyberspace communities. The ability to predict whether ongoing conversations are likely to derail could provide valuable real-time insight to interlocutors and moderators. Prior approaches predict conversation derailment retrospectively without the ability to forestall the derailment proactively. Some works attempt to make dynamic predictions as the conversation develops, but fail to incorporate multisource information, such as conversation structure and distance to derailment. We propose a hierarchical transformer-based framework that combines utterance-level and conversation-level information to capture fine-grained contextual semantics. We propose a domain-adaptive pretraining objective to integrate conversational structure information and a multitask learning scheme to leverage the distance from each utterance to derailment. An evaluation of our framework on two conversation derailment datasets yields an improvement in F1 score for the prediction of derailment. These results demonstrate the effectiveness of incorporating multisource information.

PACO: Provocation Involving Action, Culture, and Oppression

Mar 19, 2023

Abstract: In India, people identify with a particular group based on certain attributes such as religion. These religious groups are often provoked against each other. Previous studies show the role of provocation in increasing tensions between India's two prominent religious groups: Hindus and Muslims. With the advent of the Internet, such provocation also surfaced on social media platforms such as WhatsApp. By leveraging an existing dataset of Indian WhatsApp posts, we identified three categories of provoking sentences against Indian Muslims. Further, we labeled 7,000 sentences for three provocation categories and called this dataset PACO. We leveraged PACO to train a model that can identify provoking sentences from a WhatsApp post. Our best model, a fine-tuned RoBERTa, achieved a 0.851 average AUC score over five-fold cross-validation. Automatically identifying provoking sentences could stop provoking text from reaching the masses and could prevent possible discrimination or violence against the target religious group. Further, we studied provocative speech through a pragmatic lens, by identifying the dialog acts and impoliteness super-strategies used against the religious group.

Extracting Incidents, Effects, and Requested Advice from MeToo Posts

Mar 19, 2023

Abstract: Survivors of sexual harassment frequently share their experiences on social media, revealing their feelings and emotions and seeking advice. We observed that on Reddit, survivors regularly share long posts that describe a combination of (i) a sexual harassment incident, (ii) its effect on the survivor, including their feelings and emotions, and (iii) the advice being sought. We term such posts MeToo posts, even though they may not be so tagged and may appear in diverse subreddits. A prospective helper (such as a counselor or even a casual reader) must understand a survivor's needs from such posts. But long posts can be time-consuming to read and respond to. Accordingly, we address the problem of extracting key information from a long MeToo post. We develop a natural language-based model to identify sentences from a post that describe any of the above three categories. On ten-fold cross-validation of a dataset, our model achieves a macro F1 score of 0.82. In addition, we contribute MeThree, a dataset comprising 8,947 labeled sentences extracted from Reddit posts. We apply the LIWC-22 toolkit on MeThree to understand how different language patterns in sentences of the three categories can reveal differences in emotional tone, authenticity, and other aspects.
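For readers unfamiliar with the macro F1 metric quoted in the abstract above, the following is a minimal sketch of how it is computed: per-category F1 scores are averaged with equal weight, regardless of how many sentences each category has. The label names (`incident`, `effect`, `advice`), the toy data, and the single-label simplification are assumptions for illustration only; they are not taken from MeThree or from the authors' evaluation code.

```python
from typing import Sequence


def f1_for_label(y_true: Sequence[str], y_pred: Sequence[str], label: str) -> float:
    """F1 score for one category, treating it as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    if tp == 0:
        # No true positives: precision or recall is zero (or undefined).
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def macro_f1(y_true: Sequence[str], y_pred: Sequence[str], labels: Sequence[str]) -> float:
    """Unweighted mean of the per-category F1 scores."""
    return sum(f1_for_label(y_true, y_pred, label) for label in labels) / len(labels)


# Hypothetical toy labels, one per sentence (illustrative only).
LABELS = ["incident", "effect", "advice"]
toy_true = ["incident", "incident", "effect", "effect", "advice", "advice"]
toy_pred = ["incident", "effect", "effect", "effect", "advice", "incident"]

if __name__ == "__main__":
    print(round(macro_f1(toy_true, toy_pred, LABELS), 4))
```

Because each category contributes equally, a model that does poorly on a rare category is penalized as much as one that does poorly on a common category.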

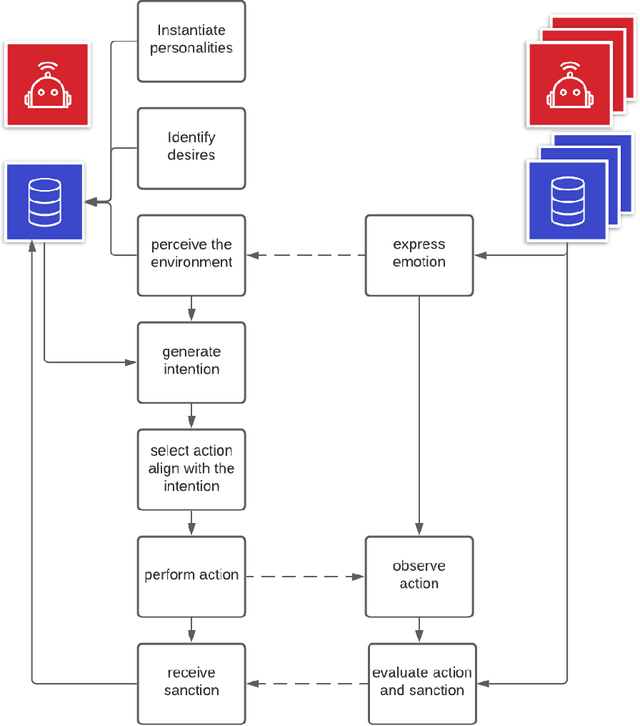

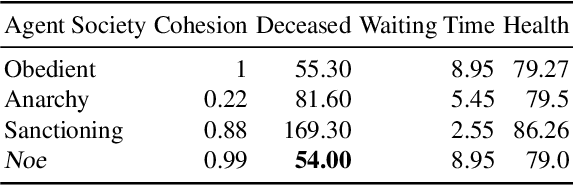

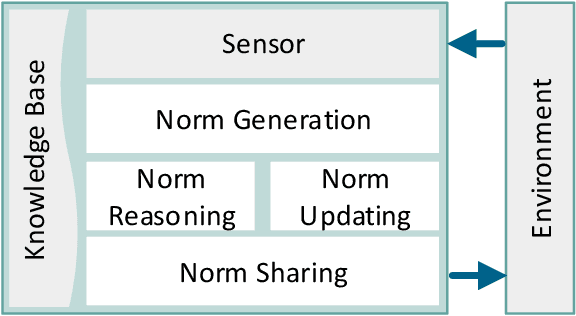

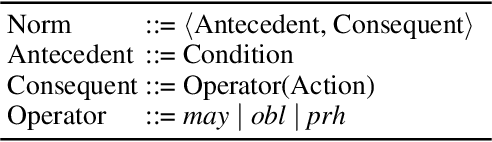

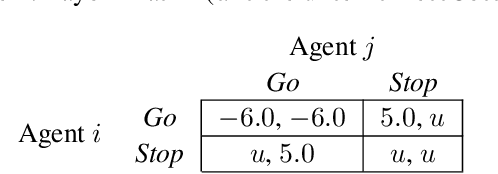

Socially Intelligent Genetic Agents for the Emergence of Explicit Norms

Aug 07, 2022

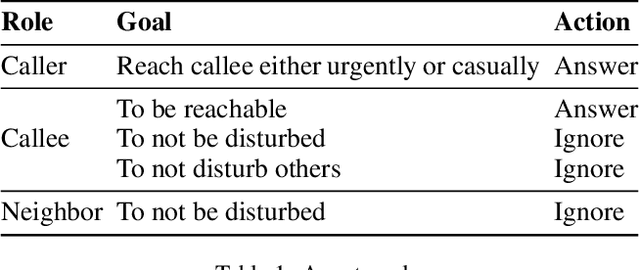

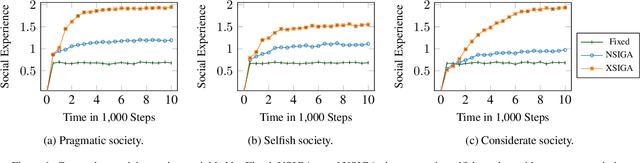

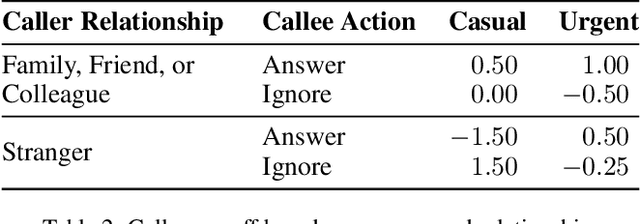

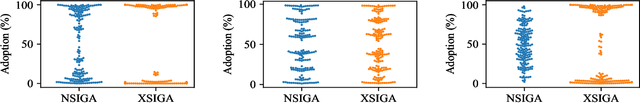

Abstract: Norms help regulate a society. Norms may be explicit (represented in structured form) or implicit. We address the emergence of explicit norms by developing agents who provide and reason about explanations for norm violations in deciding sanctions and identifying alternative norms. These agents use a genetic algorithm to produce norms and reinforcement learning to learn the values of these norms. We find that applying explanations leads to norms that provide better cohesion and goal satisfaction for the agents. Our results are stable for societies with differing attitudes of generosity.

Consent as a Foundation for Responsible Autonomy

Mar 22, 2022

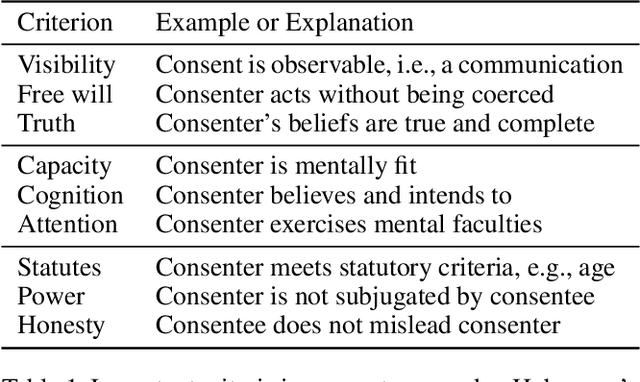

Abstract: This paper focuses on a dynamic aspect of responsible autonomy, namely, making intelligent agents responsible at run time. That is, it considers settings where decision making by agents impinges upon the outcomes perceived by other agents. For an agent to act responsibly, it must accommodate the desires and other attitudes of its users and, through other agents, of their users. The contribution of this paper is twofold. First, it provides a conceptual analysis of consent, its benefits and misuses, and how understanding consent can help achieve responsible autonomy. Second, it outlines challenges for AI (in particular, for agents and multiagent systems) that merit investigation to form a basis for modeling consent in multiagent systems and applying consent to achieve responsible autonomy.

* 6 pages; 1 table; Proceedings of the 36th AAAI Conference on Artificial Intelligence (AAAI), Blue Sky Track

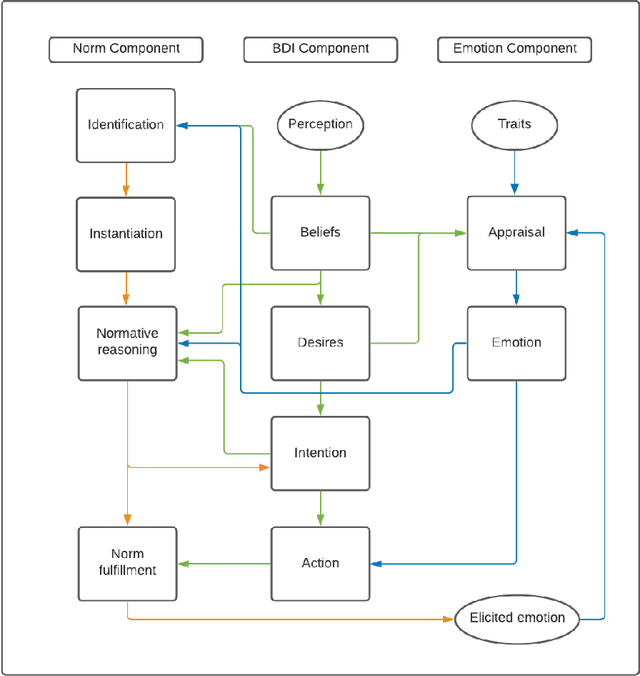

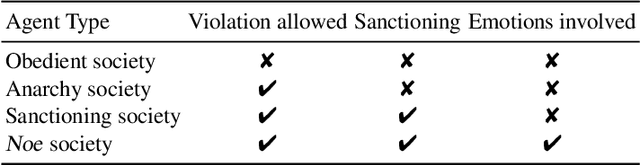

Noe: Norms Emergence and Robustness Based on Emotions in Multiagent Systems

Apr 30, 2021

Abstract: Social norms characterize collective and acceptable group conduct in human society. Furthermore, some social norms emerge from interactions of agents or humans. To achieve agent autonomy and make norm satisfaction explainable, we incorporate emotions into the normative reasoning process, which evaluates whether to comply with or violate a norm. Specifically, before selecting an action to execute, an agent observes the environment and infers the state and consequences, along with its internal states, after satisfaction or violation of a social norm. Both norm satisfaction and violation provoke further emotions, and the subsequent emotions affect norm enforcement. This paper investigates how modeling emotions affects the emergence and robustness of social norms via social simulation experiments. We find that an ability in agents to consider emotional responses to the outcomes of norm satisfaction and violation (1) promotes norm compliance; and (2) improves societal welfare.

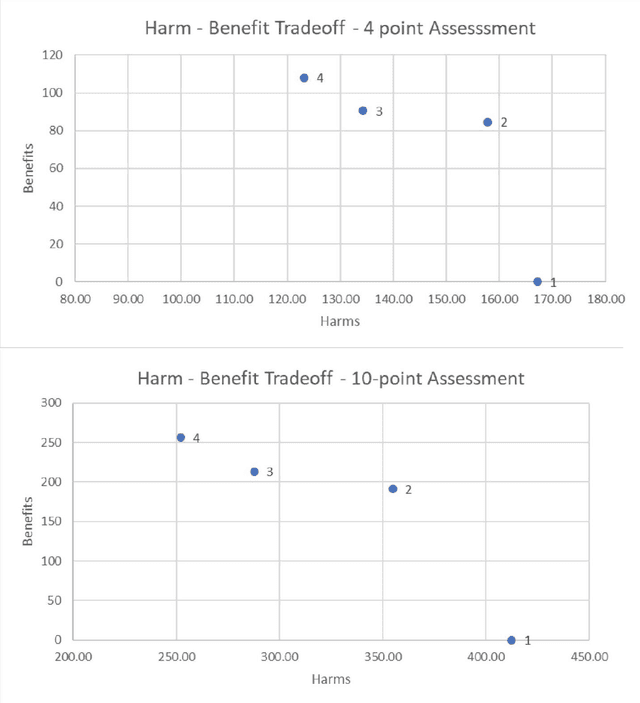

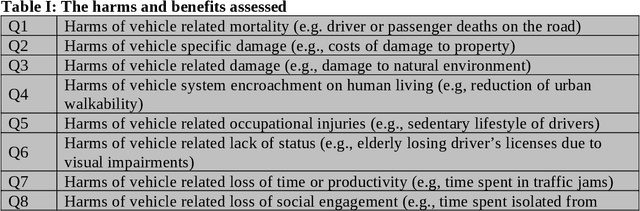

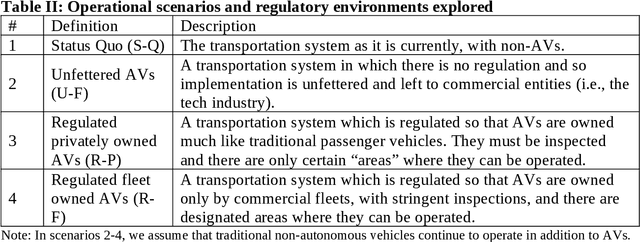

Toward a Rational and Ethical Sociotechnical System of Autonomous Vehicles: A Novel Application of Multi-Criteria Decision Analysis

Feb 04, 2021

Abstract: The expansion of artificial intelligence (AI) and autonomous systems has shown the potential to generate enormous social good while also raising serious ethical and safety concerns. AI technology is increasingly adopted in transportation. A survey of various in-vehicle technologies found that approximately 64% of the respondents used a smartphone application to assist with their travel. The top-used applications were navigation and real-time traffic information systems. Among those who used smartphones during their commutes, the top-used applications were navigation and entertainment. There is a pressing need to address relevant social concerns to allow for the development of systems of intelligent agents that are informed and cognizant of ethical standards. Doing so will facilitate the responsible integration of these systems in society. To this end, we have applied Multi-Criteria Decision Analysis (MCDA) to develop a formal Multi-Attribute Impact Assessment (MAIA) questionnaire for examining the social and ethical issues associated with the uptake of AI. We have focused on the domain of autonomous vehicles (AVs) because of their imminent expansion. However, AVs could serve as a stand-in for any domain where intelligent, autonomous agents interact with humans, either on an individual level (e.g., pedestrians, passengers) or a societal level.

Moral and Social Ramifications of Autonomous Vehicles

Jan 29, 2021

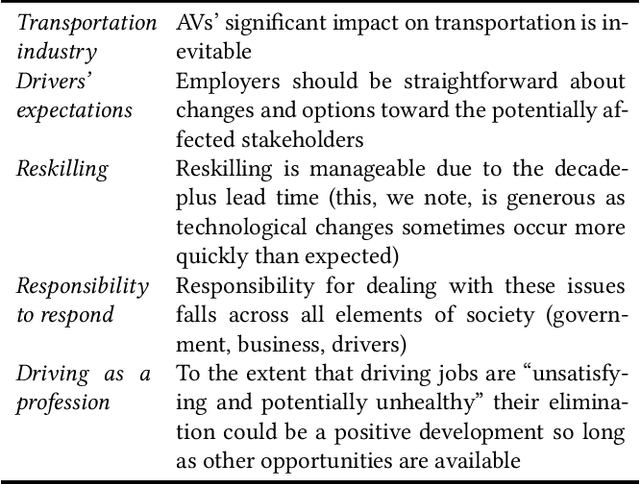

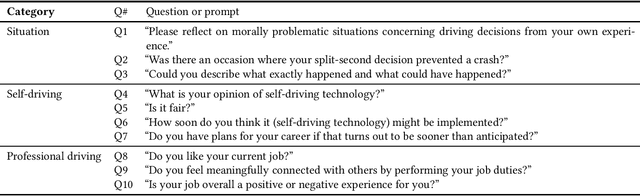

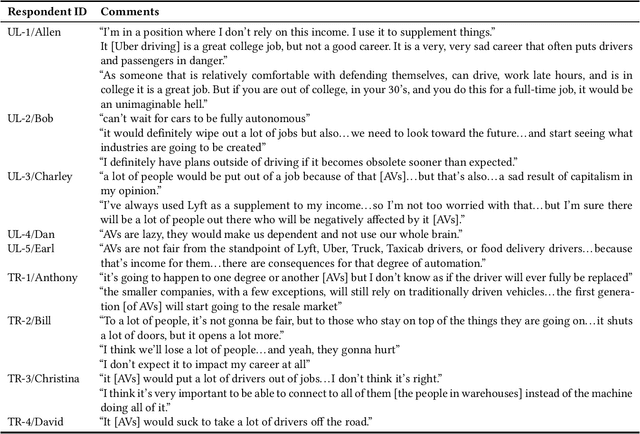

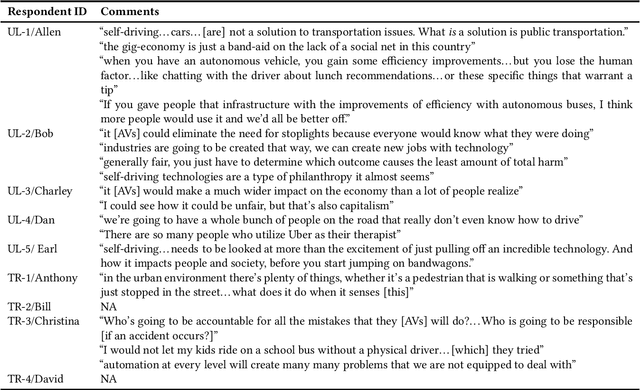

Abstract: Autonomous Vehicles (AVs) raise important social and ethical concerns, especially about accountability, dignity, and justice. We focus on the specific concerns arising from how AV technology will affect the lives and livelihoods of professional and semi-professional drivers. Whereas previous studies of such concerns have focused on the opinions of experts, we seek to understand these ethical and societal challenges from the perspectives of the drivers themselves. To this end, we adopted a qualitative research methodology based on semi-structured interviews. This is an established social science methodology that helps understand the core concerns of stakeholders in depth by avoiding the biases of superficial methods such as surveys. We find that whereas drivers agree with the experts that AVs will significantly impact transportation systems, they are apprehensive about the prospects for their livelihoods and dismiss the suggestions that driving jobs are unsatisfying and their profession does not merit protection. By showing how drivers differ from the experts, our study has ramifications beyond AVs to AI and other advanced technologies. Our findings suggest that qualitative research applied to the relevant, especially disempowered, stakeholders is essential to ensuring that new technologies are introduced ethically.

Game-Theoretic and Machine Learning-based Approaches for Defensive Deception: A Survey

Jan 21, 2021

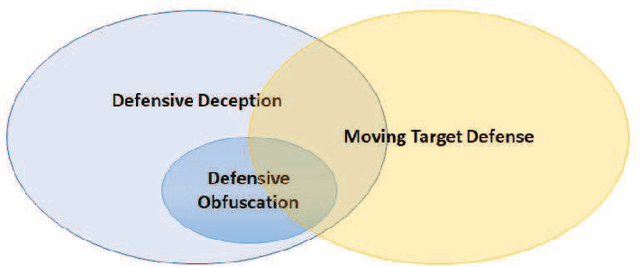

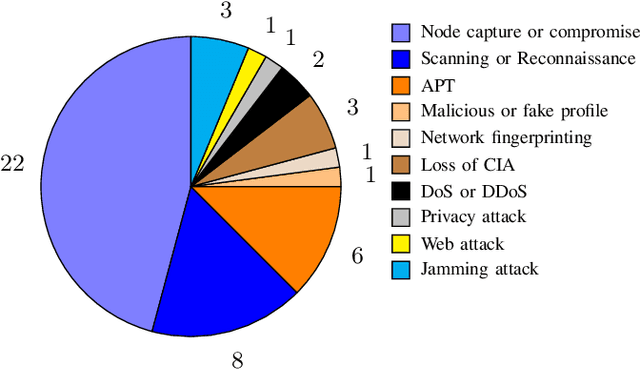

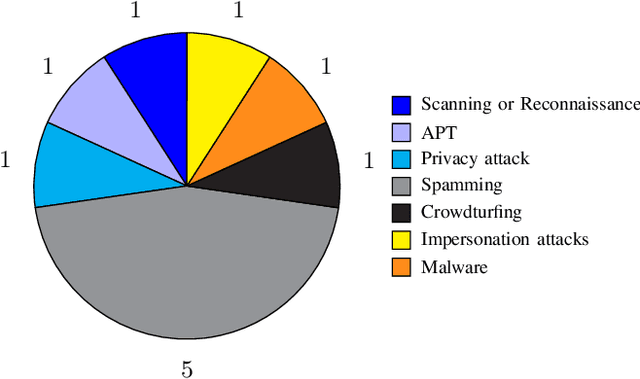

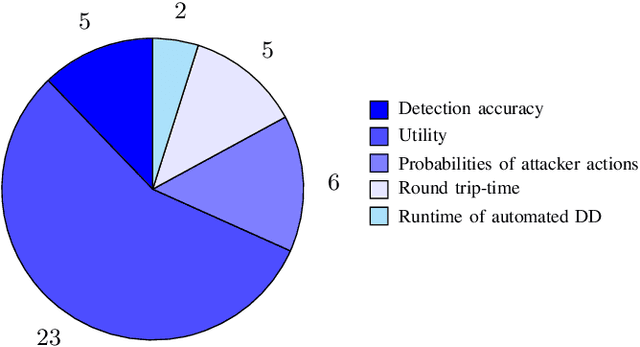

Abstract: Defensive deception is a promising approach for cyberdefense. Although defensive deception is increasingly popular in the research community, there has not been a systematic investigation of its key components, the underlying principles, and its tradeoffs in various problem settings. This survey paper focuses on defensive deception research centered on game theory and machine learning, since these are prominent families of artificial intelligence approaches that are widely employed in defensive deception. This paper brings forth insights, lessons, and limitations from prior work. It closes with an outline of some research directions to tackle major gaps in current defensive deception research.

Prosocial Norm Emergence in Multiagent Systems

Dec 29, 2020

Abstract: Multiagent systems provide a basis for developing systems of autonomous entities and thus find application in a variety of domains. We consider a setting where not only the member agents are adaptive but also the multiagent system itself is adaptive. Specifically, the social structure of a multiagent system can be reflected in the social norms among its members. It is well recognized that the norms that arise in society are not always beneficial to its members. We focus on prosocial norms, which help achieve positive outcomes for society and often provide guidance to agents to act in a manner that takes into account the welfare of others. Specifically, we propose Cha, a framework for the emergence of prosocial norms. Unlike previous norm emergence approaches, Cha supports continual change to a system (agents may enter and leave), and dynamism (norms may change when the environment changes). Importantly, Cha agents incorporate prosocial decision making based on inequity aversion theory, reflecting an intuition of guilt from being antisocial. In this manner, Cha brings together two important themes in prosociality: decision making by individuals and fairness of system-level outcomes. We demonstrate via simulation that Cha can improve aggregate societal gains and fairness of outcomes.
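The abstract above does not say how Cha formalizes inequity aversion. As background only, the sketch below shows the widely used Fehr-Schmidt formulation, in which an agent's utility is its own payoff minus a penalty for being behind others (envy) and a penalty for being ahead of others (guilt). The function name, the parameter values `alpha` and `beta`, and the toy payoffs are all assumptions for illustration; none of them come from the paper.

```python
from typing import Sequence


def inequity_averse_utility(
    payoffs: Sequence[float],
    i: int,
    alpha: float = 0.5,   # hypothetical weight on disadvantageous inequity (envy)
    beta: float = 0.25,   # hypothetical weight on advantageous inequity (guilt)
) -> float:
    """Fehr-Schmidt style utility of agent i given everyone's payoffs."""
    own = payoffs[i]
    others = [p for j, p in enumerate(payoffs) if j != i]
    if not others:
        # A lone agent has no one to compare against.
        return own
    # Average shortfall relative to agents who earned more.
    envy = sum(max(p - own, 0.0) for p in others) / len(others)
    # Average surplus relative to agents who earned less.
    guilt = sum(max(own - p, 0.0) for p in others) / len(others)
    return own - alpha * envy - beta * guilt


if __name__ == "__main__":
    unequal = [10.0, 4.0, 4.0]
    # Agent 0 is ahead of both others, so its utility falls below its raw payoff.
    print(inequity_averse_utility(unequal, 0))
    # Agent 1 is behind agent 0, so envy lowers its utility.
    print(inequity_averse_utility(unequal, 1))
```

Under this formulation, an agent that gains at others' expense values the outcome less than its raw payoff suggests, which is one way to capture the intuition of guilt that the abstract mentions.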
