Mohammad Wasil

Parameter-Efficient Fine-Tuning of Vision Foundation Model for Forest Floor Segmentation from UAV Imagery

May 13, 2025

Abstract: Unmanned Aerial Vehicles (UAVs) are increasingly used for reforestation and forest monitoring, including seed dispersal in hard-to-reach terrains. However, a detailed understanding of the forest floor remains a challenge because of high natural variability, quickly changing environmental parameters, and annotations made ambiguous by unclear definitions. To address this issue, we adapt the Segment Anything Model (SAM), a vision foundation model with strong generalization capabilities, to segment forest floor objects such as tree stumps, vegetation, and woody debris. We employ parameter-efficient fine-tuning (PEFT), which trains a small set of additional model parameters while keeping the original weights fixed. We adjust SAM's mask decoder to generate masks corresponding to our dataset categories, allowing for automatic segmentation without manual prompting. Our results show that the adapter-based PEFT method achieves the highest mean intersection over union (mIoU), while Low-Rank Adaptation (LoRA), with fewer parameters, offers a lightweight alternative for resource-constrained UAV platforms.
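To illustrate the general LoRA idea mentioned in the abstract (not the authors' SAM implementation), here is a minimal pure-Python sketch. It assumes a single linear layer with a frozen weight `W` and two small trainable matrices `A` and `B`; the helper names `matvec`, `lora_forward`, and `trainable_params`, as well as the layer sizes, are hypothetical and chosen only for this example.

```python
import random


def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def lora_forward(x, W, A, B, alpha):
    """Compute W x + (alpha / r) * B (A x).

    W (d_out x d_in) stays frozen; only A (r x d_in) and B (d_out x r)
    would be trained. r is the rank of the low-rank update.
    """
    r = len(A)
    base = matvec(W, x)                # frozen pretrained path
    update = matvec(B, matvec(A, x))   # low-rank trainable path
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


def trainable_params(d_out, d_in, r):
    """Number of trainable values in A and B for one adapted layer."""
    return r * (d_in + d_out)


# Hypothetical toy sizes, far smaller than any real SAM layer.
random.seed(0)
d_in, d_out, r = 8, 6, 2
W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]
B = [[0.0] * r for _ in range(d_out)]  # zero init: the update starts at zero
x = [random.gauss(0, 1) for _ in range(d_in)]

y = lora_forward(x, W, A, B, alpha=4)
print(trainable_params(d_out, d_in, r), "trainable vs", d_out * d_in, "frozen")
```

Because `B` starts at zero, the adapted layer initially reproduces the frozen layer's output exactly, and training only has to learn the low-rank correction.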

Taming the Randomness: Towards Label-Preserving Cropping in Contrastive Learning

Apr 28, 2025

Abstract: Contrastive learning (CL) approaches have gained great recognition as a very successful subset of self-supervised learning (SSL) methods. SSL enables learning from unlabeled data, a crucial step in the advancement of deep learning, particularly in computer vision (CV), given the plethora of unlabeled image data. CL works by comparing different random augmentations (e.g., different crops) of the same image, thus achieving self-labeling. Nevertheless, randomly augmenting images, and especially random cropping, can result in an image that is semantically very distant from the original and therefore leads to false labeling, undermining the efficacy of the methods. In this research, two novel parameterized cropping methods are introduced that increase the robustness of self-labeling and consequently increase the efficacy. The results show that, compared with non-parameterized random cropping, these methods significantly improve model accuracy by between 2.7% and 12.4% on the downstream task of classifying CIFAR-10, depending on the crop size.

b-it-bots RoboCup@Work Team Description Paper 2023

Dec 29, 2023

Abstract: This paper presents the b-it-bots RoboCup@Work team and its current hardware and functional architecture for the KUKA youBot robot. We describe the underlying software framework and the developed capabilities required for operating in industrial environments, including features such as reliable and precise navigation, flexible manipulation, robust object recognition and task planning. New developments include an approach to grasp vertical objects, placement of objects by considering the empty space on a workstation, and the process of porting our code to ROS2.

Personalised Robot Behaviour Modelling for Robot-Assisted Therapy in the Context of Autism Spectrum Disorder

Jul 25, 2022

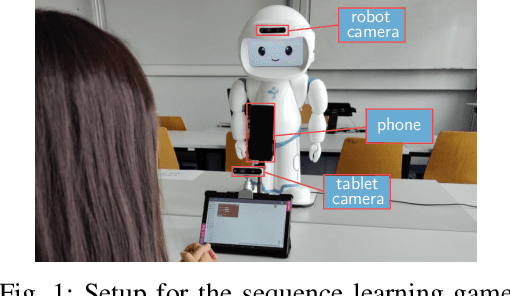

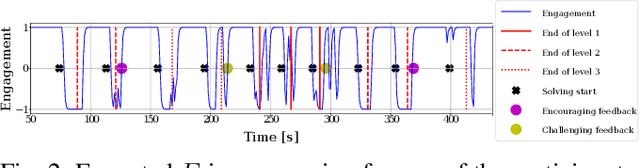

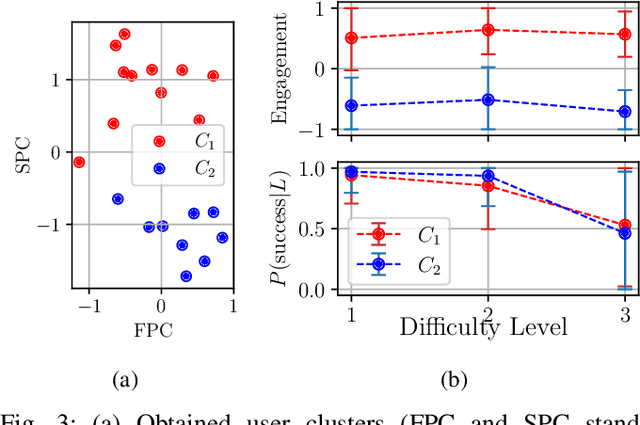

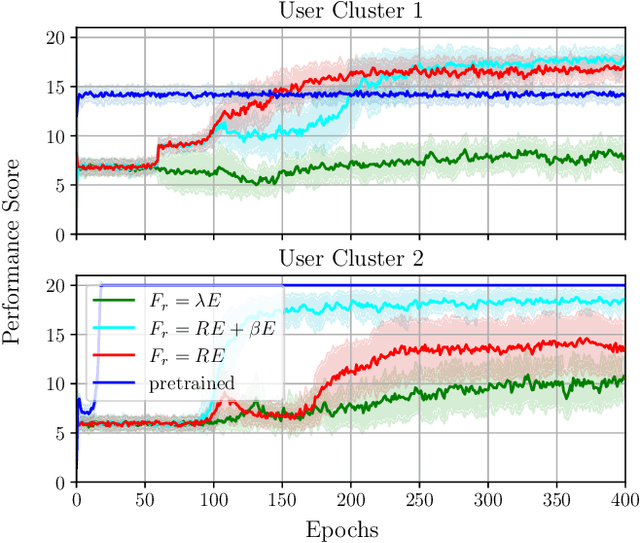

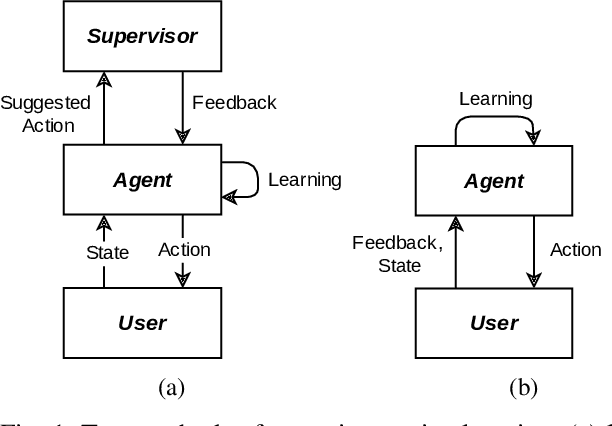

Abstract: In robot-assisted therapy for individuals with Autism Spectrum Disorder, the workload of therapists during a therapeutic session is increased if they have to control the robot manually. To allow therapists to focus on the interaction with the person instead, the robot should be more autonomous; namely, it should be able to interpret the person's state and continuously adapt its actions according to their behaviour. In this paper, we develop a personalised robot behaviour model that can be used in the robot decision-making process during an activity; this behaviour model is trained with the help of a user model that has been learned from real interaction data. We use Q-learning for this task; the results demonstrate that the policy requires about 10,000 iterations to converge. We thus investigate policy transfer for improving the convergence speed; we show that this is a feasible solution, but an inappropriate initial policy can lead to a suboptimal final return.
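As a rough illustration of the two ideas named in the abstract, tabular Q-learning and warm-starting from an existing policy, the sketch below trains on a hypothetical five-state chain. The environment, the function names `q_update` and `train`, and all hyperparameters are assumptions made for this example and are not taken from the paper.

```python
import random


def q_update(Q, s, a, reward, s_next, alpha=0.1, gamma=0.9):
    """One tabular Q-learning step: move Q[s][a] toward the bootstrapped target."""
    target = reward + gamma * max(Q[s_next])
    Q[s][a] += alpha * (target - Q[s][a])
    return Q[s][a]


def train(n_states=5, episodes=500, epsilon=0.1, q_init=None, seed=0):
    """Train on a toy chain: action 1 moves right, action 0 moves left.

    Reaching the last state gives reward 1 and ends the episode.
    Passing q_init warm-starts learning from an existing table,
    a simple stand-in for policy transfer.
    """
    rng = random.Random(seed)
    if q_init is not None:
        Q = [row[:] for row in q_init]
    else:
        Q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap on steps per episode
            if rng.random() < epsilon:
                a = rng.randrange(2)                 # explore
            else:
                a = 0 if Q[s][0] > Q[s][1] else 1    # exploit, ties go right
            s_next = max(0, s - 1) if a == 0 else s + 1
            done = s_next == n_states - 1
            reward = 1.0 if done else 0.0
            q_update(Q, s, a, reward, s_next)
            s = s_next
            if done:
                break
    return Q


Q_scratch = train()
# Warm start: reuse the learned table as the initial policy for a shorter run.
Q_transfer = train(episodes=50, q_init=Q_scratch, seed=1)
```

A poorly matched `q_init` would bias early action choices in the same way, which is one intuition for why an inappropriate initial policy can hurt the final return.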

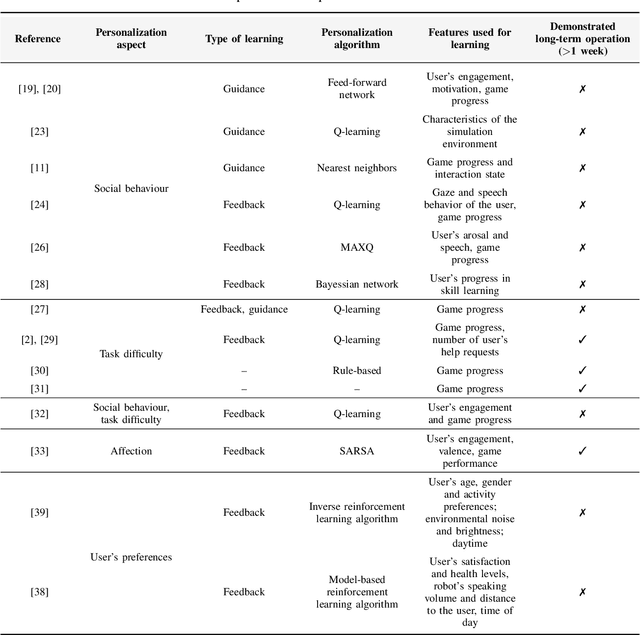

Personalized Behaviour Models: A Survey Focusing on Autism Therapy Applications

May 18, 2022

Abstract: Children with Autism Spectrum Disorder find robots easier to communicate with than humans. Thus, robots have been introduced in autism therapies. However, due to the environmental complexity, the robots used often have to be controlled manually. This is a significant drawback of such systems, which need to become more autonomous. In particular, the robot should interpret the child's state and continuously adapt its actions according to the behaviour of the child under therapy. This survey elaborates on different forms of personalized robot behaviour models. Various approaches from the field of Human-Robot Interaction, as well as Child-Robot Interaction, are discussed. The aim is to compare them in terms of their deficits, feasibility in real scenarios, and potential usability for autism-specific Robot-Assisted Therapy. The general challenge for the algorithms with which the robot learns proper interaction strategies during therapeutic games is to increase the robot's autonomy, thereby providing a basis for the robot's decision-making.
