Milan Češka

Constrained and Robust Policy Synthesis with Satisfiability-Modulo-Probabilistic-Model-Checking

Nov 15, 2025Abstract:The ability to compute reward-optimal policies for given and known finite Markov decision processes (MDPs) underpins a variety of applications across planning, controller synthesis, and verification. However, we often want policies (1) to be robust, i.e., they perform well on perturbations of the MDP and (2) to satisfy additional structural constraints regarding, e.g., their representation or implementation cost. Computing such robust and constrained policies is indeed computationally more challenging. This paper contributes the first approach to effectively compute robust policies subject to arbitrary structural constraints using a flexible and efficient framework. We achieve flexibility by allowing to express our constraints in a first-order theory over a set of MDPs, while the root for our efficiency lies in the tight integration of satisfiability solvers to handle the combinatorial nature of the problem and probabilistic model checking algorithms to handle the analysis of MDPs. Experiments on a few hundred benchmarks demonstrate the feasibility for constrained and robust policy synthesis and the competitiveness with state-of-the-art methods for various fragments of the problem.

\textsc{rfPG}: Robust Finite-Memory Policy Gradients for Hidden-Model POMDPs

May 14, 2025Abstract:Partially observable Markov decision processes (POMDPs) model specific environments in sequential decision-making under uncertainty. Critically, optimal policies for POMDPs may not be robust against perturbations in the environment. Hidden-model POMDPs (HM-POMDPs) capture sets of different environment models, that is, POMDPs with a shared action and observation space. The intuition is that the true model is hidden among a set of potential models, and it is unknown which model will be the environment at execution time. A policy is robust for a given HM-POMDP if it achieves sufficient performance for each of its POMDPs. We compute such robust policies by combining two orthogonal techniques: (1) a deductive formal verification technique that supports tractable robust policy evaluation by computing a worst-case POMDP within the HM-POMDP and (2) subgradient ascent to optimize the candidate policy for a worst-case POMDP. The empirical evaluation shows that, compared to various baselines, our approach (1) produces policies that are more robust and generalize better to unseen POMDPs and (2) scales to HM-POMDPs that consist of over a hundred thousand environments.

Decentralized Planning Using Probabilistic Hyperproperties

Feb 19, 2025

Abstract:Multi-agent planning under stochastic dynamics is usually formalised using decentralized (partially observable) Markov decision processes ( MDPs) and reachability or expected reward specifications. In this paper, we propose a different approach: we use an MDP describing how a single agent operates in an environment and probabilistic hyperproperties to capture desired temporal objectives for a set of decentralized agents operating in the environment. We extend existing approaches for model checking probabilistic hyperproperties to handle temporal formulae relating paths of different agents, thus requiring the self-composition between multiple MDPs. Using several case studies, we demonstrate that our approach provides a flexible and expressive framework to broaden the specification capabilities with respect to existing planning techniques. Additionally, we establish a close connection between a subclass of probabilistic hyperproperties and planning for a particular type of Dec-MDPs, for both of which we show undecidability. This lays the ground for the use of existing decentralized planning tools in the field of probabilistic hyperproperty verification.

Counterexample-Driven Synthesis for Probabilistic Program Sketches

Apr 28, 2019

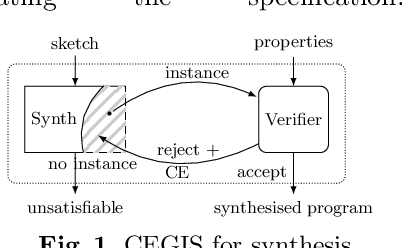

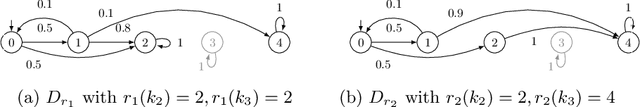

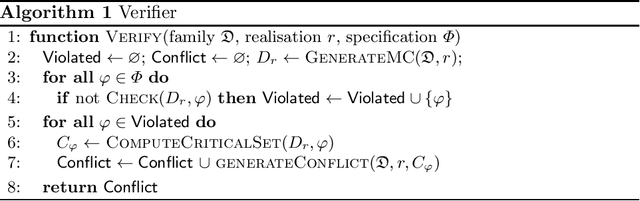

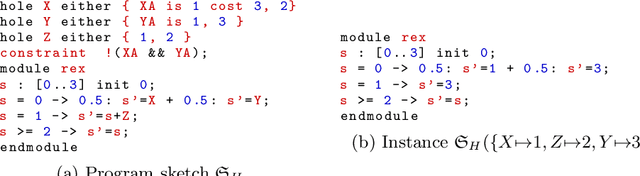

Abstract:Probabilistic programs are key to deal with uncertainty in e.g. controller synthesis. They are typically small but intricate. Their development is complex and error prone requiring quantitative reasoning over a myriad of alternative designs. To mitigate this complexity, we adopt counterexample-guided inductive synthesis (CEGIS) to automatically synthesise finite-state probabilistic programs. Our approach leverages efficient model checking, modern SMT solving, and counterexample generation at program level. Experiments on practically relevant case studies show that design spaces with millions of candidate designs can be fully explored using a few thousand verification queries.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge