Filip Macák

Constrained and Robust Policy Synthesis with Satisfiability-Modulo-Probabilistic-Model-Checking

Nov 15, 2025

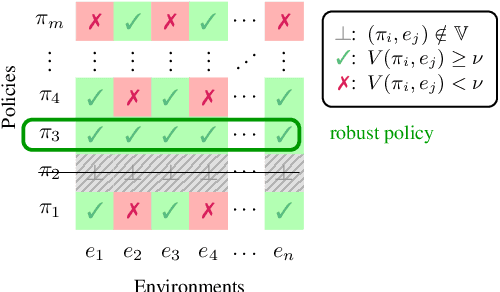

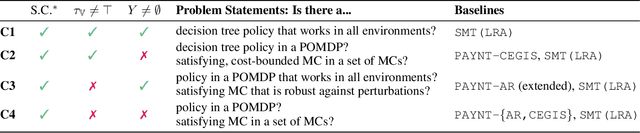

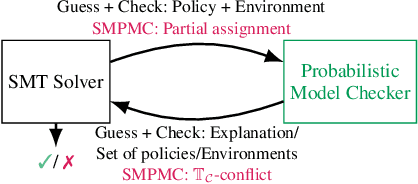

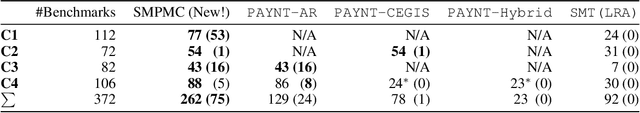

Abstract:The ability to compute reward-optimal policies for given and known finite Markov decision processes (MDPs) underpins a variety of applications across planning, controller synthesis, and verification. However, we often want policies (1) to be robust, i.e., they perform well on perturbations of the MDP and (2) to satisfy additional structural constraints regarding, e.g., their representation or implementation cost. Computing such robust and constrained policies is indeed computationally more challenging. This paper contributes the first approach to effectively compute robust policies subject to arbitrary structural constraints using a flexible and efficient framework. We achieve flexibility by allowing to express our constraints in a first-order theory over a set of MDPs, while the root for our efficiency lies in the tight integration of satisfiability solvers to handle the combinatorial nature of the problem and probabilistic model checking algorithms to handle the analysis of MDPs. Experiments on a few hundred benchmarks demonstrate the feasibility for constrained and robust policy synthesis and the competitiveness with state-of-the-art methods for various fragments of the problem.

Decentralized Planning Using Probabilistic Hyperproperties

Feb 19, 2025

Abstract:Multi-agent planning under stochastic dynamics is usually formalised using decentralized (partially observable) Markov decision processes ( MDPs) and reachability or expected reward specifications. In this paper, we propose a different approach: we use an MDP describing how a single agent operates in an environment and probabilistic hyperproperties to capture desired temporal objectives for a set of decentralized agents operating in the environment. We extend existing approaches for model checking probabilistic hyperproperties to handle temporal formulae relating paths of different agents, thus requiring the self-composition between multiple MDPs. Using several case studies, we demonstrate that our approach provides a flexible and expressive framework to broaden the specification capabilities with respect to existing planning techniques. Additionally, we establish a close connection between a subclass of probabilistic hyperproperties and planning for a particular type of Dec-MDPs, for both of which we show undecidability. This lays the ground for the use of existing decentralized planning tools in the field of probabilistic hyperproperty verification.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge