Michele Piana

PRESOL: a web-based computational setting for feature-based flare forecasting

Oct 02, 2025

Abstract:Solar flares are the most explosive phenomena in the solar system and the main trigger of the chain of events that starts with coronal mass ejections and leads to geomagnetic storms, with possible impacts on infrastructure on Earth. Data-driven solar flare forecasting relies on either deep learning approaches, which are operationally promising but offer a low degree of explainability, or machine learning algorithms, which can provide information on the physical descriptors that most affect the prediction. This paper describes a web-based technological platform for the execution of a computational pipeline of feature-based machine learning methods that provide predictions of flare occurrence, feature ranking information, and an assessment of prediction performance.

Probabilistic approach to longitudinal response prediction: application to radiomics from brain cancer imaging

May 12, 2025

Abstract:Longitudinal imaging analysis tracks disease progression and treatment response over time, providing dynamic insights into treatment efficacy and disease evolution. Radiomic features extracted from medical imaging can support the study of disease progression and facilitate longitudinal prediction of clinical outcomes. This study presents a probabilistic model for longitudinal response prediction, integrating baseline features with intermediate follow-ups. The probabilistic nature of the model allows it to handle the intrinsic uncertainty of the longitudinal prediction of disease progression. We evaluate the proposed model against state-of-the-art disease progression models in both a synthetic scenario and using a brain cancer dataset. Results demonstrate that the approach is competitive against existing methods while uniquely accounting for uncertainty and controlling the growth of problem dimensionality, eliminating the need for data from intermediate follow-ups.

DISARM++: Beyond scanner-free harmonization

May 06, 2025

Abstract:Harmonization of T1-weighted MR images across different scanners is crucial for ensuring consistency in neuroimaging studies. This study introduces a novel approach to direct image harmonization, moving beyond feature standardization to ensure that extracted features remain inherently reliable for downstream analysis. Our method enables image transfer in two ways: (1) mapping images to a scanner-free space for uniform appearance across all scanners, and (2) transforming images into the domain of a specific scanner used in model training, embedding its unique characteristics. Our approach presents strong generalization capability, even for unseen scanners not included in the training phase. We validated our method using MR images from diverse cohorts, including healthy controls, traveling subjects, and individuals with Alzheimer's disease (AD). The model's effectiveness is tested in multiple applications, such as brain age prediction ($R^2 = 0.60 \pm 0.05$), biomarker extraction, AD classification (test accuracy $= 0.86 \pm 0.03$), and diagnosis prediction (AUC $= 0.95$). In all cases, our harmonization technique outperforms state-of-the-art methods, showing improvements in both reliability and predictive accuracy. Moreover, our approach eliminates the need for extensive preprocessing steps, such as skull-stripping, which can introduce errors by misclassifying brain and non-brain structures. This makes our method particularly suitable for applications that require full-head analysis, including research on head trauma and cranial deformities. Additionally, our harmonization model does not require retraining for new datasets, allowing smooth integration into various neuroimaging workflows. By ensuring scanner-invariant image quality, our approach provides a robust and efficient solution for improving neuroimaging studies across diverse settings. The code is available at this link.

Physics-informed features in supervised machine learning

Apr 23, 2025

Abstract:Supervised machine learning involves approximating an unknown functional relationship from a limited dataset of features and corresponding labels. The classical approach to feature-based machine learning typically relies on applying linear regression to standardized features, without considering their physical meaning. This may limit model explainability, particularly in scientific applications. This study proposes a physics-informed approach to feature-based machine learning that constructs non-linear feature maps informed by physical laws and dimensional analysis. These maps enhance model interpretability and, when physical laws are unknown, allow for the identification of relevant mechanisms through feature ranking. The method aims to improve both predictive performance in regression tasks and classification skill scores by integrating domain knowledge into the learning process, while also enabling the potential discovery of new physical equations within the context of explainable machine learning.

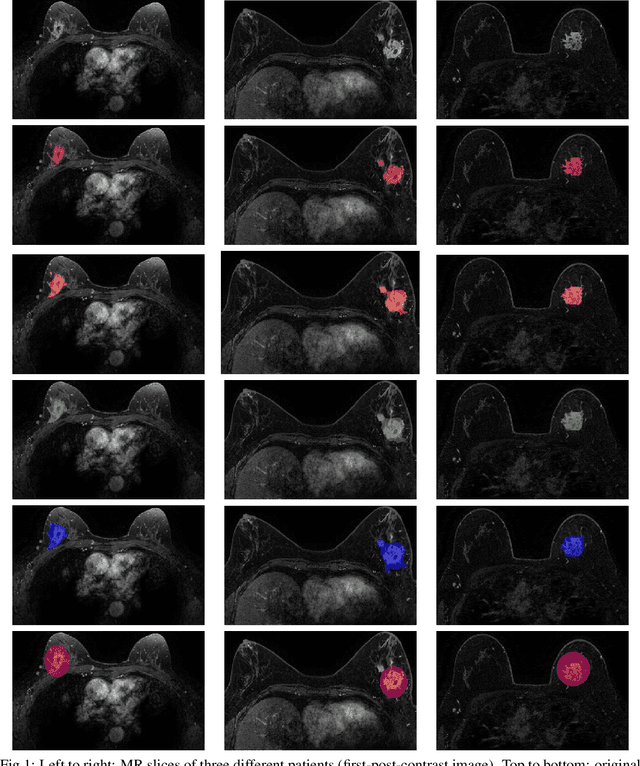

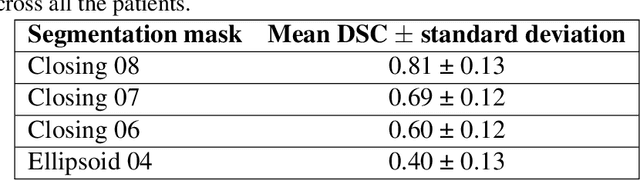

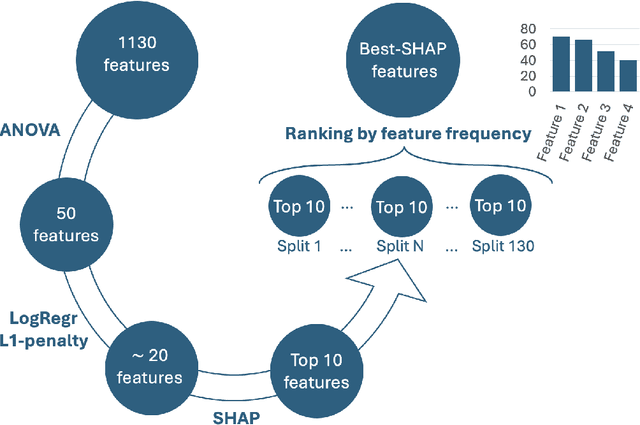

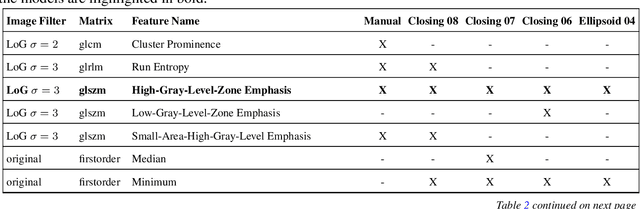

Segmentation variability and radiomics stability for predicting Triple-Negative Breast Cancer subtype using Magnetic Resonance Imaging

Apr 02, 2025

Abstract:Most papers caution against using predictive models for disease stratification based on unselected radiomic features, as these features are affected by contouring variability. Instead, they advocate for the use of the Intraclass Correlation Coefficient (ICC) as a measure of stability for feature selection. However, the direct effect of segmentation variability on the predictive models is rarely studied. This study investigates the impact of segmentation variability on feature stability and predictive performance in radiomics-based prediction of Triple-Negative Breast Cancer (TNBC) subtype using Magnetic Resonance Imaging. A total of 244 images from the Duke dataset were used, with segmentation variability introduced through modifications of manual segmentations. For each mask, explainable radiomic features were selected using the Shapley Additive exPlanations method and used to train logistic regression models. Feature stability across segmentations was assessed via ICC, Pearson's correlation, and reliability scores quantifying the relationship between feature stability and segmentation variability. Results indicate that segmentation accuracy does not significantly impact predictive performance. While incorporating peritumoral information may reduce feature reproducibility, it does not diminish feature predictive capability. Moreover, feature selection in predictive models is not inherently tied to feature stability with respect to segmentation, suggesting that an overreliance on ICC or reliability scores for feature selection might exclude valuable predictive features.

Deep Learning for Active Region Classification: A Systematic Study from Convolutional Neural Networks to Vision Transformers

Oct 23, 2024

Abstract:A solar active region can significantly disrupt the Sun-Earth space environment, often leading to severe space weather events such as solar flares and coronal mass ejections. As a consequence, the automatic classification of active region groups is the crucial starting point for accurately and promptly predicting solar activity. This study presents our results on the application of deep learning techniques to the classification of active region cutouts based on the Mount Wilson classification scheme. Specifically, we have explored the latest advancements in image classification architectures, from Convolutional Neural Networks to Vision Transformers, and reported on their performance in the active region classification task, showing that the crucial point for their effectiveness is a robust training process based on the latest advances in the field.

Forecasting Geoffective Events from Solar Wind Data and Evaluating the Most Predictive Features through Machine Learning Approaches

Mar 14, 2024

Abstract:This study addresses the prediction of geomagnetic disturbances by exploiting machine learning techniques. Specifically, the Long Short-Term Memory recurrent neural network, which is particularly suited for application to long time series, is employed in the analysis of in-situ measurements of solar wind plasma and magnetic field acquired over more than one solar cycle, from 2005 to 2019, at the Lagrangian point L1. The problem is approached as a binary classification aiming to predict, one hour in advance, a decrease in the SYM-H geomagnetic activity index below the threshold of $-50$ nT, which is generally regarded as indicative of magnetospheric perturbations. The strong class imbalance is tackled by using a loss function tailored to optimize appropriate skill scores in the training phase of the neural network. Besides classical skill scores, value-weighted skill scores are then employed to evaluate predictions; these are suitable for the study of problems, such as the one faced here, characterized by strong temporal variability. For the first time, the content of magnetic helicity and energy carried by solar transients, associated with their detection and likelihood of geo-effectiveness, was considered as an input feature of the network architecture. Their predictive capabilities are demonstrated through a correlation-driven feature selection method that ranks the most relevant characteristics involved in the neural network prediction model. The optimal performance of the adopted neural network in properly forecasting the onset of geomagnetic storms, which is a crucial point for giving real warnings in an operational setting, is finally shown.
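The binary classification setup described in this abstract can be illustrated with a minimal sketch. It assumes an hourly SYM-H series held in a plain list and uses the True Skill Statistic as one example of a classical skill score; the function names, the hourly cadence, and the choice of score are illustrative assumptions, not details taken from the paper.

```python
from typing import List, Sequence


def one_hour_ahead_labels(sym_h: Sequence[float],
                          threshold: float = -50.0,
                          lead: int = 1) -> List[int]:
    """Label sample t as 1 if SYM-H at t + lead is below the threshold.

    Assumes one sample per hour, so lead=1 means "one hour in advance".
    The last `lead` samples have no target and are dropped.
    """
    return [int(sym_h[t + lead] < threshold)
            for t in range(len(sym_h) - lead)]


def true_skill_statistic(y_true: Sequence[int],
                         y_pred: Sequence[int]) -> float:
    """TSS = TP / (TP + FN) - FP / (FP + TN).

    Assumes both classes occur in y_true; otherwise a division by zero
    is raised. TSS does not depend on the class ratio, which is why it
    is often used for imbalanced problems.
    """
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    return tp / (tp + fn) - fp / (fp + tn)


# Hypothetical hourly SYM-H values in nT, for illustration only.
labels = one_hour_ahead_labels([-10.0, -60.0, -55.0, -20.0])
score = true_skill_statistic([1, 1, 0, 0], [1, 0, 0, 0])
```

In this toy series the first two samples are labelled positive because the value one hour later is below $-50$ nT.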

Greedy feature selection: Classifier-dependent feature selection via greedy methods

Mar 08, 2024

Abstract:The purpose of this study is to introduce a new approach to feature ranking for classification tasks, referred to in what follows as greedy feature selection. In statistical learning, feature selection is usually realized by means of methods that are independent of the classifier applied to perform the prediction using that reduced number of features. Instead, greedy feature selection identifies the most important feature at each step according to the selected classifier. In the paper, the benefits of such a scheme are investigated theoretically in terms of model capacity indicators, such as the Vapnik-Chervonenkis (VC) dimension or the kernel alignment, and tested numerically by considering its application to the problem of predicting geo-effective manifestations of the active Sun.
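The idea of classifier-dependent greedy ranking can be sketched as a forward selection loop. The example below is an illustration under stated assumptions, not the scheme from the paper: it uses a nearest-centroid classifier and training accuracy as the selection score, both chosen only to keep the code dependency-free, and all function names are hypothetical.

```python
from typing import Callable, Dict, List, Sequence

Row = Sequence[float]


def nearest_centroid_accuracy(X: List[Row], y: List[int],
                              cols: List[int]) -> float:
    """Training accuracy of a nearest-centroid classifier using only `cols`."""
    centroids: Dict[int, List[float]] = {}
    for label in set(y):
        rows = [x for x, t in zip(X, y) if t == label]
        centroids[label] = [sum(r[c] for r in rows) / len(rows) for c in cols]

    def predict(x: Row) -> int:
        # Pick the class whose centroid is closest in squared distance.
        return min(centroids,
                   key=lambda lab: sum((x[c] - m) ** 2
                                       for c, m in zip(cols, centroids[lab])))

    return sum(predict(x) == t for x, t in zip(X, y)) / len(y)


def greedy_feature_ranking(
        X: List[Row], y: List[int],
        score: Callable[[List[Row], List[int], List[int]], float]
        = nearest_centroid_accuracy) -> List[int]:
    """Rank features by adding, at each step, the one that maximizes the
    classifier's score given the features already selected."""
    selected: List[int] = []
    remaining = list(range(len(X[0])))
    while remaining:
        best = max(remaining, key=lambda j: score(X, y, selected + [j]))
        selected.append(best)
        remaining.remove(best)
    return selected


# Toy data (hypothetical): only the feature at index 1 separates the classes.
X = [[0.30, 0.0, 1.0], [0.10, 0.1, 0.0], [0.20, 1.0, 0.0], [0.25, 0.9, 1.0]]
y = [0, 0, 1, 1]
ranking = greedy_feature_ranking(X, y)
```

Swapping in a different `score` function changes the ranking criterion without touching the loop, which is what makes the selection depend on the classifier. In practice a held-out or cross-validated score would be a more sensible choice than training accuracy.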

AI-FLARES: Artificial Intelligence for the Analysis of Solar Flares Data

Jan 02, 2024

Abstract:AI-FLARES (Artificial Intelligence for the Analysis of Solar Flares Data) is a research project funded by the Agenzia Spaziale Italiana and by the Istituto Nazionale di Astrofisica within the framework of the "Attività di Studio per la Comunità Scientifica Nazionale Sole, Sistema Solare ed Esopianeti" program. The topic addressed by this project was the development and use of computational methods for the analysis of remote sensing space data associated with solar flare emission. This paper overviews the main results obtained by the project, with specific focus on solar flare forecasting, reconstruction of morphologies of the flaring sources, and interpretation of acceleration mechanisms triggered by solar flares.

Three-dimensional numerical schemes for the segmentation of the psoas muscle in X-ray computed tomography images

Dec 10, 2023

Abstract:The analysis of the psoas muscle in morphological and functional imaging has proved to be an accurate approach to assess sarcopenia, i.e. a systemic loss of skeletal muscle mass and function that may be correlated to multifactorial etiological aspects. The inclusion of sarcopenia assessment in a radiological workflow would require the implementation of computational pipelines for image processing that guarantee segmentation reliability and a significant degree of automation. The present study utilizes three-dimensional numerical schemes for psoas segmentation in low-dose X-ray computed tomography images. Specifically, we focused on the level set methodology and compared the performance of two standard approaches, a classical evolution model and a three-dimensional geodesic model, with the performance of an original first-order modification of the latter. The results of this analysis show that these gradient-based schemes guarantee reliability with respect to manual segmentation and that the first-order scheme requires a computational burden that is significantly smaller than the one needed by the second-order approach.
