Michael Friedrich

RouteNator: A Router-Based Multi-Modal Architecture for Generating Synthetic Training Data for Function Calling LLMs

May 15, 2025

Abstract:This paper addresses fine-tuning Large Language Models (LLMs) for function calling tasks when real user interaction data is unavailable. In digital content creation tools, where users express their needs through natural language queries that must be mapped to API calls, the lack of real-world task-specific data and privacy constraints for training on it necessitate synthetic data generation. Existing approaches to synthetic data generation fall short in diversity and complexity, failing to replicate real-world data distributions and leading to suboptimal performance after LLM fine-tuning. We present a novel router-based architecture that leverages domain resources like content metadata and structured knowledge graphs, along with text-to-text and vision-to-text language models to generate high-quality synthetic training data. Our architecture's flexible routing mechanism enables synthetic data generation that matches observed real-world distributions, addressing a fundamental limitation of traditional approaches. Evaluation on a comprehensive set of real user queries demonstrates significant improvements in both function classification accuracy and API parameter selection. Models fine-tuned with our synthetic data consistently outperform traditional approaches, establishing new benchmarks for function calling tasks.

* Proceedings of the 4th International Workshop on Knowledge-Augmented Methods for Natural Language Processing
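The routing idea in the RouteNator abstract can be illustrated with a minimal sketch. It assumes a router that picks a generator per sample with probability proportional to a target distribution; the route names, the weights, and the placeholder generators are all hypothetical and not taken from the paper.

```python
import random
from collections import Counter

# Hypothetical target distribution over generation routes (illustrative values only).
TARGET_DISTRIBUTION = {
    "metadata_template": 0.5,
    "knowledge_graph": 0.3,
    "vision_to_text": 0.2,
}


def make_generators(distribution):
    """Build one placeholder generator per route; a real system would call a model here."""
    def build(route):
        def generate(index):
            return {"route": route, "query": f"<{route} query {index}>"}
        return generate
    return {route: build(route) for route in distribution}


def route_and_generate(n_samples, distribution, generators, seed=0):
    """Draw a route for each sample according to the target distribution, then generate."""
    rng = random.Random(seed)
    routes = list(distribution)
    weights = [distribution[route] for route in routes]
    samples = []
    for index in range(n_samples):
        route = rng.choices(routes, weights=weights, k=1)[0]
        samples.append(generators[route](index))
    return samples


if __name__ == "__main__":
    data = route_and_generate(1000, TARGET_DISTRIBUTION, make_generators(TARGET_DISTRIBUTION))
    print(Counter(sample["route"] for sample in data))
```

With enough samples, the share of each route in the generated set approaches its target weight, which is the property the abstract attributes to the routing mechanism.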

Domain-specific Question Answering with Hybrid Search

Dec 04, 2024

Abstract:Domain-specific question answering is an evolving field that requires specialized solutions to address unique challenges. In this paper, we show that a hybrid approach combining a fine-tuned dense retriever with keyword-based sparse search methods significantly enhances performance. Our system leverages a linear combination of relevance signals, including cosine similarity from dense retrieval, BM25 scores, and URL host matching, each with tunable boost parameters. Experimental results indicate that this hybrid method outperforms our single-retriever system, achieving improved accuracy while maintaining robust contextual grounding. These findings suggest that integrating multiple retrieval methodologies with weighted scoring effectively addresses the complexities of domain-specific question answering in enterprise settings.
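The linear combination of relevance signals described above might look like the following sketch. The function names, the default boost values, and the set of preferred hosts are assumptions made for illustration; the BM25 score is taken as an input because the abstract does not say how it is computed or normalized.

```python
import math
from urllib.parse import urlparse


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_score(query_vec, doc_vec, bm25_score, doc_url, preferred_hosts,
                 dense_boost=1.0, bm25_boost=0.1, host_boost=0.5):
    """Weighted sum of dense similarity, BM25 score, and a binary URL host match.

    The boost defaults are placeholder values, not tuned parameters from the paper.
    """
    dense = cosine_similarity(query_vec, doc_vec)
    host_match = 1.0 if urlparse(doc_url).hostname in preferred_hosts else 0.0
    return dense_boost * dense + bm25_boost * bm25_score + host_boost * host_match


if __name__ == "__main__":
    hosts = {"docs.example.com"}
    candidates = [
        ("https://docs.example.com/setup", [1.0, 0.0], 2.0),
        ("https://blog.example.org/post", [0.6, 0.8], 4.0),
    ]
    query = [1.0, 0.0]
    ranked = sorted(
        candidates,
        key=lambda c: hybrid_score(query, c[1], c[2], c[0], hosts),
        reverse=True,
    )
    for url, _, _ in ranked:
        print(url)
```

Because BM25 scores are unbounded while cosine similarity lies in [-1, 1], the boosts also act as scale factors, which is one reason to keep them tunable.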

Improving Automated Visual Fault Detection by Combining a Biologically Plausible Model of Visual Attention with Deep Learning

Feb 13, 2021

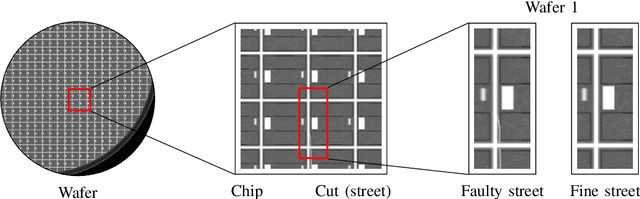

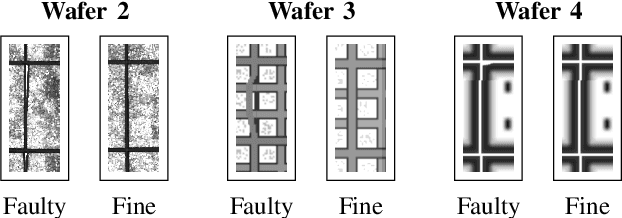

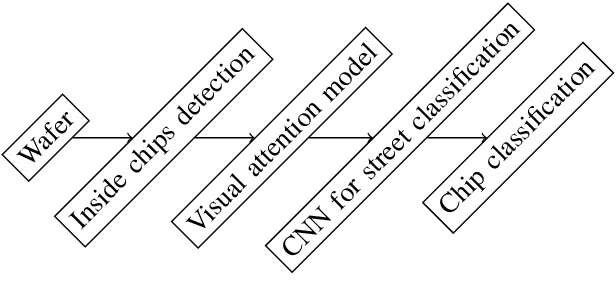

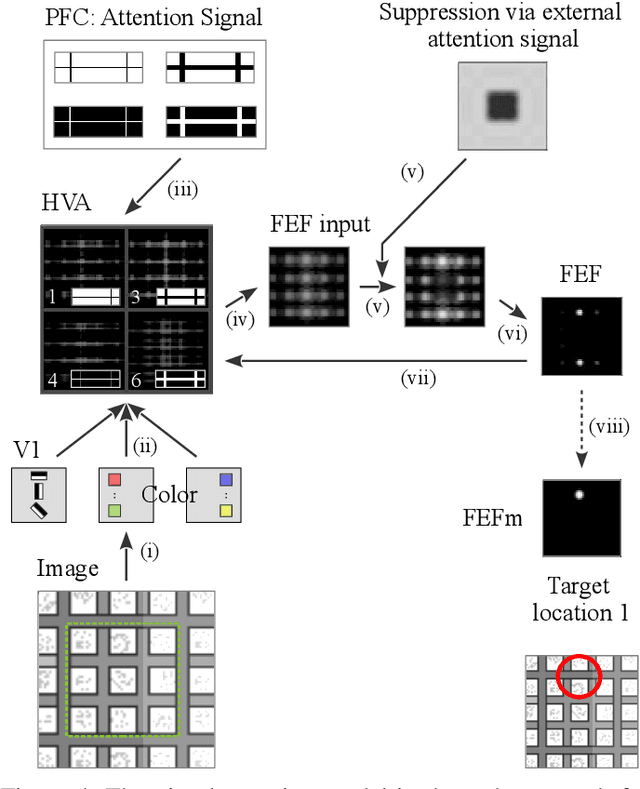

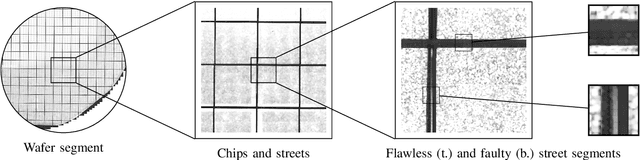

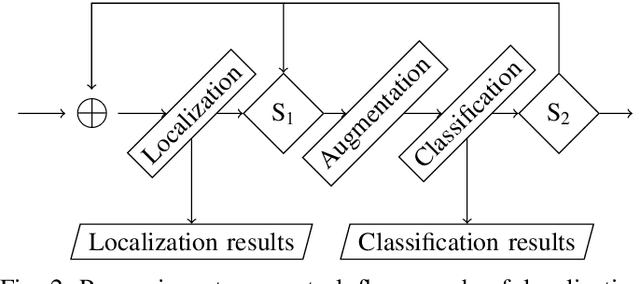

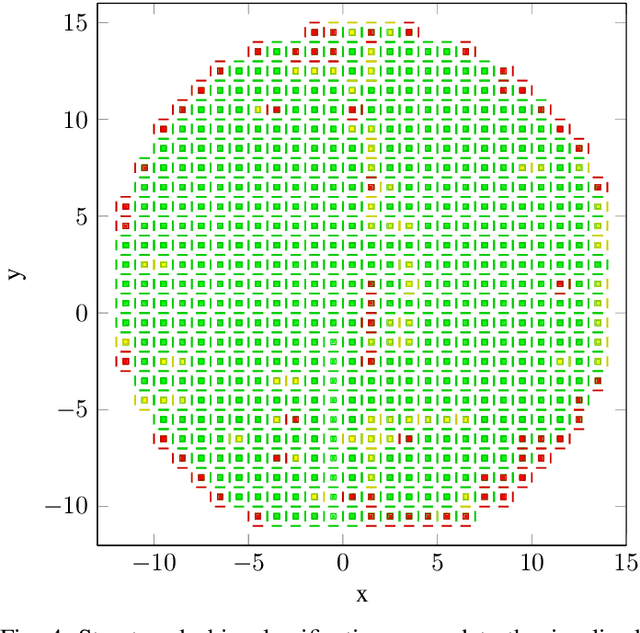

Abstract:It is a long-term goal to transfer biological processing principles as well as the power of human recognition into machine vision and engineering systems. One such principle is visual attention, a human mechanism that focuses processing on a part of a scene. In this contribution, we utilize attention to improve the automatic detection of defect patterns for wafers within the domain of semiconductor manufacturing. Previous works in the domain have often utilized classical machine learning approaches such as KNNs, SVMs, or MLPs, while a few have already used modern approaches like deep neural networks (DNNs). However, one problem in the domain is that the faults are often very small and have to be detected within the much larger area of a chip or even a whole wafer. Therefore, structures only a few pixels in size have to be detected in a vast amount of image data. One interesting principle of the human brain for solving this problem is visual attention. Hence, we employ a biologically plausible model of visual attention for automatic visual inspection. We propose a hybrid system of visual attention and a deep neural network. Among other decisive advantages, our system improves accuracy from 81% to 92% and accuracy for detecting faults from 67% to 88%. Hence, the error rates are reduced from 19% to 8%, and notably from 33% to 12% for detecting a fault in a chip. These results show that attention can greatly improve the performance of visual inspection systems. Furthermore, we conduct a broad evaluation, identifying specific advantages of the biological attention model in this application, and benchmark standard deep learning approaches as an alternative with and without attention. This work is an arXiv version of the original conference article published at "IECON 2020", extended with respect to visual attention.
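The accuracy and error figures quoted in this abstract are two views of the same numbers. The short sketch below recomputes the error rates from the reported accuracies and also derives the relative error reduction, which is plain arithmetic on the reported values and not a figure stated in the abstract. The function names and labels are illustrative.

```python
def error_rate(accuracy_pct):
    """Error rate in percent, given accuracy in percent."""
    return 100.0 - accuracy_pct


def relative_error_reduction(accuracy_before_pct, accuracy_after_pct):
    """Fraction of the original error that was removed."""
    before = error_rate(accuracy_before_pct)
    after = error_rate(accuracy_after_pct)
    return (before - after) / before


if __name__ == "__main__":
    # (accuracy before, accuracy after) in percent, as reported in the abstract.
    reported = {"accuracy": (81, 92), "fault detection accuracy": (67, 88)}
    for name, (before, after) in reported.items():
        print(
            f"{name}: error {error_rate(before):.0f}% -> {error_rate(after):.0f}%, "
            f"relative reduction {relative_error_reduction(before, after):.1%}"
        )
```

Under this reading, the error falls by roughly 58% in relative terms (19% to 8%), and by roughly 64% for detecting a fault in a chip (33% to 12%).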

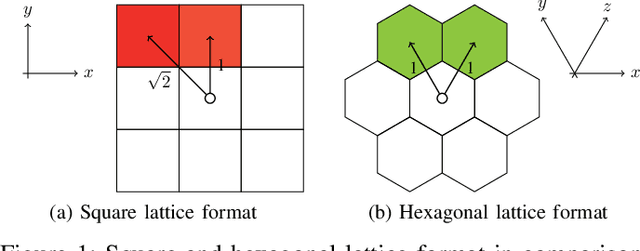

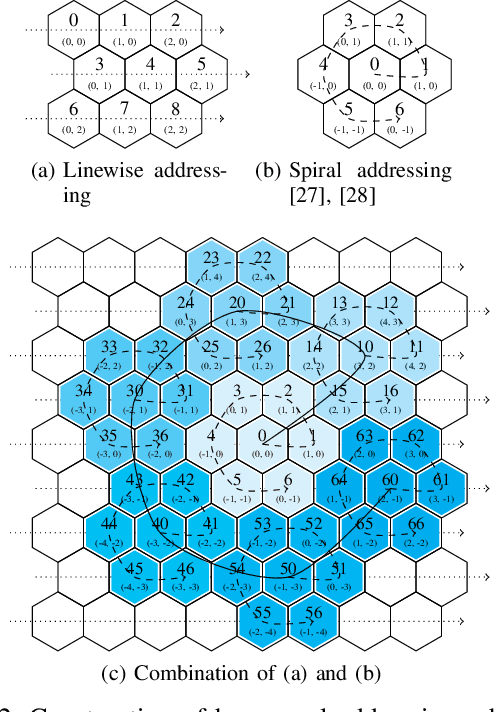

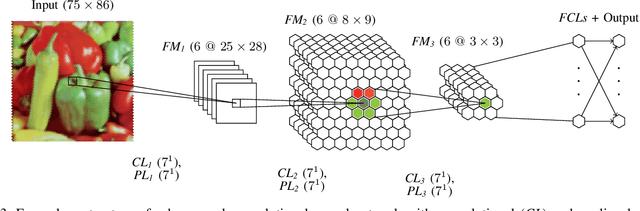

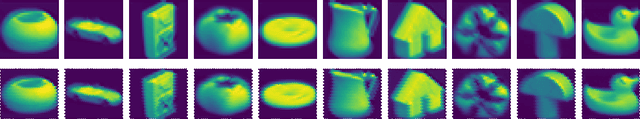

Hexagonal Image Processing in the Context of Machine Learning: Conception of a Biologically Inspired Hexagonal Deep Learning Framework

Dec 31, 2019

Abstract:Inspired by the human visual perception system, hexagonal image processing in the context of machine learning deals with the development of image processing systems that combine the advantages of evolutionarily motivated structures based on biological models. While conventional state-of-the-art image processing systems for recording and output devices almost exclusively use square-lattice methods, their hexagonal counterparts offer a number of key advantages that can benefit both researchers and users. This contribution presents a general application-oriented approach that brings together the hexagonal image processing framework designed for this purpose, called Hexnet, the processing steps of hexagonal image transformation, and dependent methods. The results from our test environment show that the framework surpasses current hexagonal image processing systems, while hexagonal artificial neural networks can benefit from the implemented hexagonal architecture. Hexagonal-lattice deep neural networks, also called H-DNNs, can be compared to their square counterparts by transforming classical square-lattice data sets into their hexagonal representation; they can also reduce the number of trainable parameters and increase training and test rates.
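To make the square-to-hexagonal transformation mentioned above concrete, here is a minimal sketch that resamples a square-lattice image onto a hexagonal lattice. It is not Hexnet's method: it assumes nearest-neighbor sampling, stores the hexagonal pixels as offset rows, and uses a hypothetical function name and `pitch` parameter.

```python
import math


def square_to_hexagonal(image, pitch=1.0):
    """Resample a square-lattice image (list of rows) onto a hexagonal lattice.

    Hexagonal centers are spaced `pitch` apart within a row, rows are
    pitch * sqrt(3) / 2 apart, and every odd row is shifted by pitch / 2.
    Each hexagonal pixel takes the value of the nearest square pixel.
    """
    height, width = len(image), len(image[0])
    row_step = pitch * math.sqrt(3) / 2
    n_rows = int((height - 1) / row_step) + 1
    hex_rows = []
    for r in range(n_rows):
        y = r * row_step
        offset = pitch / 2 if r % 2 else 0.0
        n_cols = int((width - 1 - offset) / pitch) + 1
        src_row = min(height - 1, int(y + 0.5))
        row = []
        for c in range(n_cols):
            x = offset + c * pitch
            src_col = min(width - 1, int(x + 0.5))
            row.append(image[src_row][src_col])
        hex_rows.append(row)
    return hex_rows


if __name__ == "__main__":
    square = [[r * 4 + c for c in range(4)] for r in range(4)]
    for row in square_to_hexagonal(square):
        print(row)
```

The odd rows come out one pixel shorter than the even rows because of the half-pitch shift, which is the offset-row layout commonly used to store hexagonal grids in rectangular arrays.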

A Novel Visual Fault Detection and Classification System for Semiconductor Manufacturing Using Stacked Hybrid Convolutional Neural Networks

Dec 31, 2019

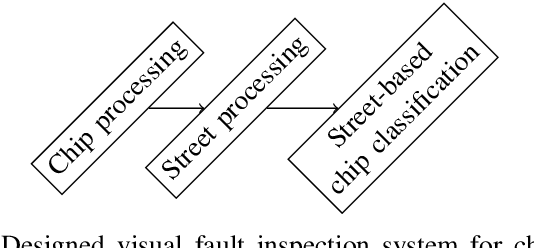

Abstract:Automated visual inspection in the semiconductor industry aims to detect and classify manufacturing defects utilizing modern image processing techniques. Detecting defect patterns as early as possible enables quality control and automation of manufacturing chains, and manufacturers benefit from increased yield and reduced manufacturing costs. Since classical image processing systems are limited in their ability to detect novel defect patterns, and machine learning approaches often involve a tremendous amount of computational effort, this contribution introduces a novel deep neural network-based hybrid approach. Unlike classical deep neural networks, a multi-stage system allows the detection and classification of the finest structures, down to pixel size, within high-resolution imagery. Consisting of stacked hybrid convolutional neural networks (SH-CNN) and inspired by current approaches of visual attention, the system uses the level of detail of its structures to shift the focus to more task-relevant areas of interest. The results of our test environment show that the SH-CNN outperforms current approaches of learning-based automated visual inspection, and that distinguishing by level of detail enables defect patterns to be eliminated at earlier stages of the manufacturing process.
