Menghan Yu

SwiftSpec: Ultra-Low Latency LLM Decoding by Scaling Asynchronous Speculative Decoding

Jun 12, 2025

Abstract:Low-latency decoding for large language models (LLMs) is crucial for applications like chatbots and code assistants, yet generating long outputs remains slow in single-query settings. Prior work on speculative decoding (which combines a small draft model with a larger target model) and tensor parallelism has each accelerated decoding. However, conventional approaches fail to apply both simultaneously due to imbalanced compute requirements (between draft and target models), KV-cache inconsistencies, and communication overheads under small-batch tensor-parallelism. This paper introduces SwiftSpec, a system that targets ultra-low latency for LLM decoding. SwiftSpec redesigns the speculative decoding pipeline in an asynchronous and disaggregated manner, so that each component can be scaled flexibly and remove draft overhead from the critical path. To realize this design, SwiftSpec proposes parallel tree generation, tree-aware KV cache management, and fused, latency-optimized kernels to overcome the challenges listed above. Across 5 model families and 6 datasets, SwiftSpec achieves an average of 1.75x speedup over state-of-the-art speculative decoding systems and, as a highlight, serves Llama3-70B at 348 tokens/s on 8 Nvidia Hopper GPUs, making it the fastest known system for low-latency LLM serving at this scale.

Understanding Stragglers in Large Model Training Using What-if Analysis

May 09, 2025

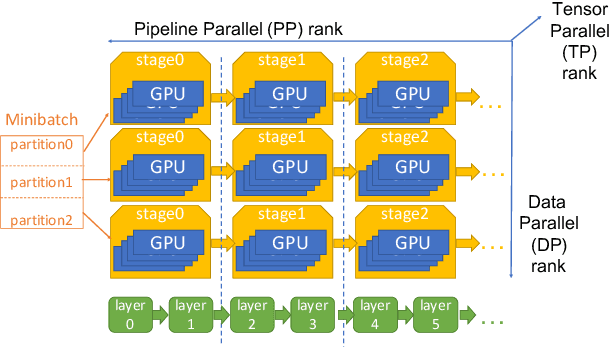

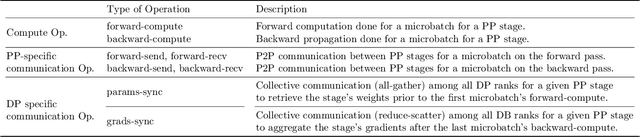

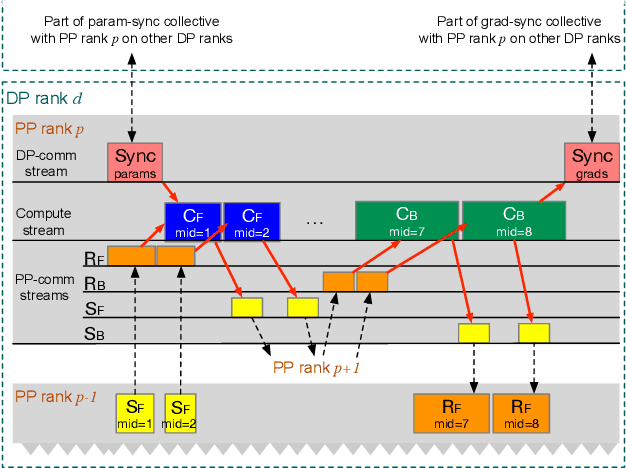

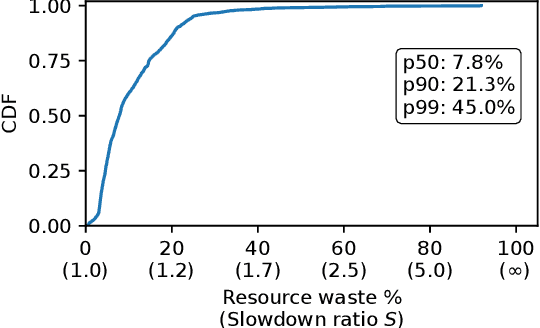

Abstract:Large language model (LLM) training is one of the most demanding distributed computations today, often requiring thousands of GPUs with frequent synchronization across machines. Such a workload pattern makes it susceptible to stragglers, where the training can be stalled by few slow workers. At ByteDance we find stragglers are not trivially always caused by hardware failures, but can arise from multiple complex factors. This work aims to present a comprehensive study on the straggler issues in LLM training, using a five-month trace collected from our ByteDance LLM training cluster. The core methodology is what-if analysis that simulates the scenario without any stragglers and contrasts with the actual case. We use this method to study the following questions: (1) how often do stragglers affect training jobs, and what effect do they have on job performance; (2) do stragglers exhibit temporal or spatial patterns; and (3) what are the potential root causes for stragglers?

How Good Are Synthetic Medical Images? An Empirical Study with Lung Ultrasound

Oct 05, 2023Abstract:Acquiring large quantities of data and annotations is known to be effective for developing high-performing deep learning models, but is difficult and expensive to do in the healthcare context. Adding synthetic training data using generative models offers a low-cost method to deal effectively with the data scarcity challenge, and can also address data imbalance and patient privacy issues. In this study, we propose a comprehensive framework that fits seamlessly into model development workflows for medical image analysis. We demonstrate, with datasets of varying size, (i) the benefits of generative models as a data augmentation method; (ii) how adversarial methods can protect patient privacy via data substitution; (iii) novel performance metrics for these use cases by testing models on real holdout data. We show that training with both synthetic and real data outperforms training with real data alone, and that models trained solely with synthetic data approach their real-only counterparts. Code is available at https://github.com/Global-Health-Labs/US-DCGAN.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge