Matthew J. Graham

Pre-training vision models for the classification of alerts from wide-field time-domain surveys

Dec 12, 2025

Abstract: Modern wide-field time-domain surveys facilitate the study of transient, variable and moving phenomena by conducting image differencing and relaying alerts to their communities. Machine learning tools have been used on data from these surveys and their precursors for more than a decade, and convolutional neural networks (CNNs), which make predictions directly from input images, saw particularly broad adoption through the 2010s. Since then, continued rapid advances in computer vision have transformed the standard practices around using such models. It is now commonplace to use standardized architectures pre-trained on large corpora of everyday images (e.g., ImageNet). In contrast, time-domain astronomy studies still typically design custom CNN architectures and train them from scratch. Here, we explore the effects of adopting various pre-training regimens and standardized model architectures on the performance of alert classification. We find that the resulting models match or outperform a custom, specialized CNN like what is typically used for filtering alerts. Moreover, our results show that pre-training on galaxy images from Galaxy Zoo tends to yield better performance than pre-training on ImageNet or training from scratch. We observe that the design of standardized architectures is much better optimized than the custom CNN baseline, requiring significantly less time and memory for inference despite having more trainable parameters. On the eve of the Legacy Survey of Space and Time and other image-differencing surveys, these findings advocate for a paradigm shift in the creation of vision models for alerts, demonstrating that greater performance and efficiency, in time and in data, can be achieved by adopting the latest practices from the computer vision field.

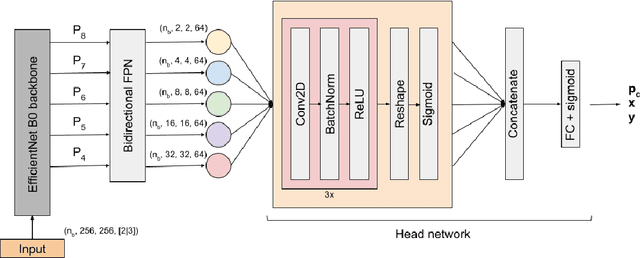

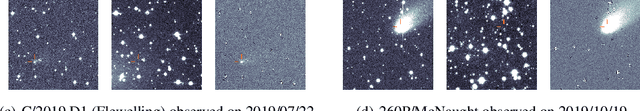

Tails: Chasing Comets with the Zwicky Transient Facility and Deep Learning

Feb 26, 2021

Abstract: We present Tails, an open-source deep-learning framework for the identification and localization of comets in the image data of the Zwicky Transient Facility (ZTF), a robotic optical time-domain survey currently in operation at the Palomar Observatory in California, USA. Tails employs a custom EfficientDet-based architecture and is capable of finding comets in single images in near real time, rather than requiring multiple epochs as with traditional methods. The system achieves state-of-the-art performance with 99% recall, 0.01% false positive rate, and 1-2 pixel root mean square error in the predicted position. We report the initial results of the Tails efficiency evaluation in a production setting on the data of the ZTF Twilight survey, including the first AI-assisted discovery of a comet (C/2020 T2) and the recovery of a comet (P/2016 J3 = P/2021 A3).

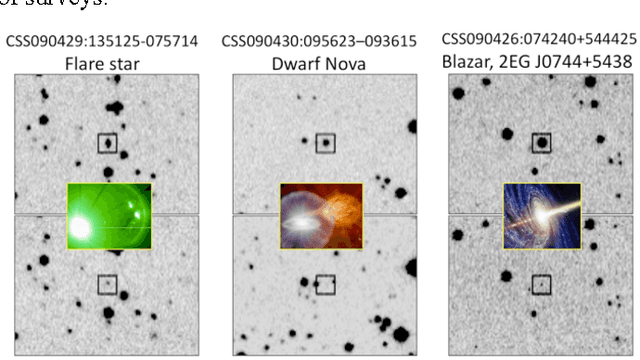

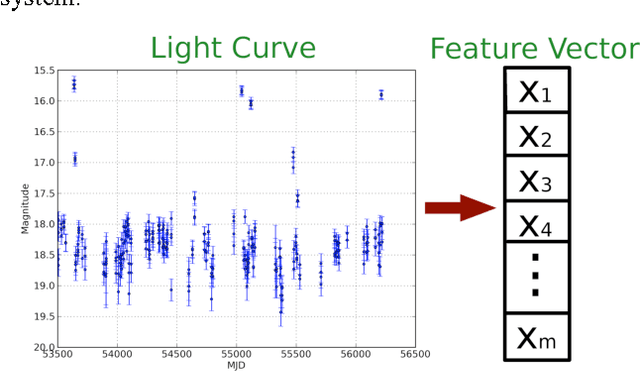

Feature Selection Strategies for Classifying High Dimensional Astronomical Data Sets

Oct 08, 2013

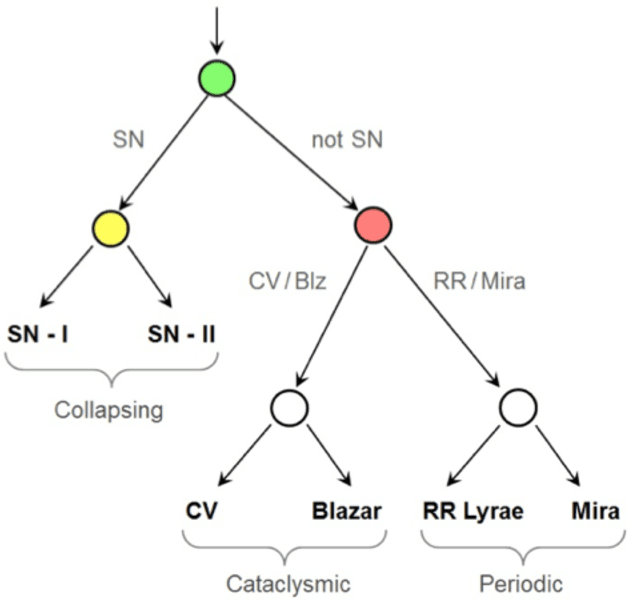

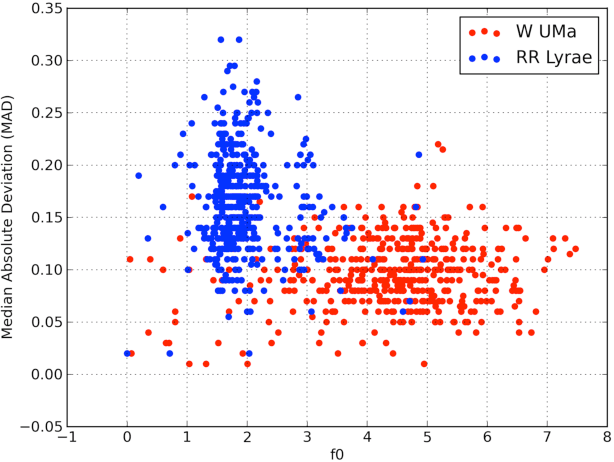

Abstract: The amount of data collected in many scientific fields is increasing, and all of these fields share a common task: extracting knowledge from massive, multi-parametric data sets as rapidly and efficiently as possible. This is especially true in astronomy, where synoptic sky surveys are enabling new research frontiers in time-domain astronomy and posing several new object classification challenges in multi-dimensional spaces; given the high number of parameters available for each object, feature selection is quickly becoming a crucial task in analyzing astronomical data sets. Using data sets extracted from the ongoing Catalina Real-Time Transient Surveys (CRTS) and the Kepler Mission, we illustrate a variety of feature selection strategies used to identify the subsets that give the most information, and the results achieved by applying these techniques to three major astronomical problems.
