Marco Agus

VizCV: AI-assisted visualization of researchers' publications tracks

May 13, 2025

Abstract: Analyzing how the publication records of scientists and research groups have evolved over the years is crucial for assessing their expertise, since it can support the management of academic environments by assisting with career planning and evaluation. We introduce VizCV, a novel web-based end-to-end visual analytics framework that enables the interactive exploration of researchers' scientific trajectories. It incorporates AI-assisted analysis and supports automated reporting of career evolution. Our system aims to model career progression through three key dimensions: a) research topic evolution, to detect and visualize shifts in scholarly focus over time, b) publication record and the corresponding impact, and c) collaboration dynamics, depicting the growth and transformation of a researcher's co-authorship network. AI-driven insights provide automated explanations of career transitions, detecting significant shifts in research direction, impact surges, or collaboration expansions. The system also supports comparative analysis between researchers, allowing users to compare topic trajectories and impact growth. Our interactive, multi-tab and multiview system allows for the exploratory analysis of career milestones from different perspectives, such as identifying the most impactful articles, spotting emerging research themes, or obtaining a detailed analysis of the researcher's contribution to a subfield. The key contributions include AI/ML techniques for: a) topic analysis, b) dimensionality reduction for visualizing patterns and trends, and c) the interactive creation of textual descriptions of facets of the data through configurable prompt generation and large language models. These descriptions include key indicators that help in understanding the career development of individuals or groups.

SAM4EM: Efficient memory-based two stage prompt-free segment anything model adapter for complex 3D neuroscience electron microscopy stacks

Apr 30, 2025

Abstract: We present SAM4EM, a novel approach for 3D segmentation of complex neural structures in electron microscopy (EM) data that leverages the Segment Anything Model (SAM) alongside advanced fine-tuning strategies. Our contributions include a prompt-free adapter for SAM that uses two-stage mask decoding to automatically generate prompt embeddings, a dual-stage fine-tuning method based on Low-Rank Adaptation (LoRA) for enhancing segmentation with limited annotated data, and a 3D memory attention mechanism to ensure segmentation consistency across 3D stacks. We further release a unique benchmark dataset for the segmentation of astrocytic processes and synapses. We evaluated our method on challenging neuroscience segmentation benchmarks, specifically targeting mitochondria, glia, and synapses, and obtained significant accuracy improvements over state-of-the-art (SOTA) methods, including recent SAM-based adapters developed for the medical domain and other vision transformer-based approaches. Experimental results indicate that our approach outperforms existing solutions in the segmentation of complex processes such as glia and post-synaptic densities. Our code and models are available at https://github.com/Uzshah/SAM4EM.
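The abstract above names Low-Rank Adaptation (LoRA) without describing it. As a rough illustration of the general idea only, and not of the SAM4EM code, the sketch below keeps a weight matrix frozen and adds a trainable low-rank update scaled by `alpha / rank`. The class `LoRALinear`, the helper `matmul`, and all parameter names are hypothetical and chosen for this example; the dual-stage schedule and the memory attention mentioned in the abstract are not modeled.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


class LoRALinear:
    """Toy linear layer y = (W + (alpha / rank) * B @ A) x with W frozen.

    Hypothetical illustration of generic LoRA; not taken from SAM4EM.
    """

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        out_dim, in_dim = len(weight), len(weight[0])
        self.weight = weight              # frozen pretrained weights (out_dim x in_dim)
        self.scale = alpha / rank         # usual LoRA scaling factor
        # A starts with small random values, B with zeros, so the layer
        # initially reproduces the frozen weights exactly.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(in_dim)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(out_dim)]

    def effective_weight(self):
        delta = matmul(self.B, self.A)    # low-rank update (out_dim x in_dim)
        return [[w + self.scale * d for w, d in zip(w_row, d_row)]
                for w_row, d_row in zip(self.weight, delta)]

    def forward(self, x):
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.effective_weight()]

    def trainable_params(self):
        # Only A and B would be updated during fine-tuning.
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


layer = LoRALinear([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], rank=1, alpha=2.0)
print(layer.forward([1.0, 1.0, 1.0]))   # equals the frozen layer's output while B is zero
print(layer.trainable_params())          # rank * (in_dim + out_dim)
```

In this toy setting the adapter trains `rank * (in_dim + out_dim)` values instead of `in_dim * out_dim`, which is the property that makes this family of methods attractive when annotated data is limited.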

Towards deep observation: A systematic survey on artificial intelligence techniques to monitor fetus via Ultrasound Images

Jan 17, 2022

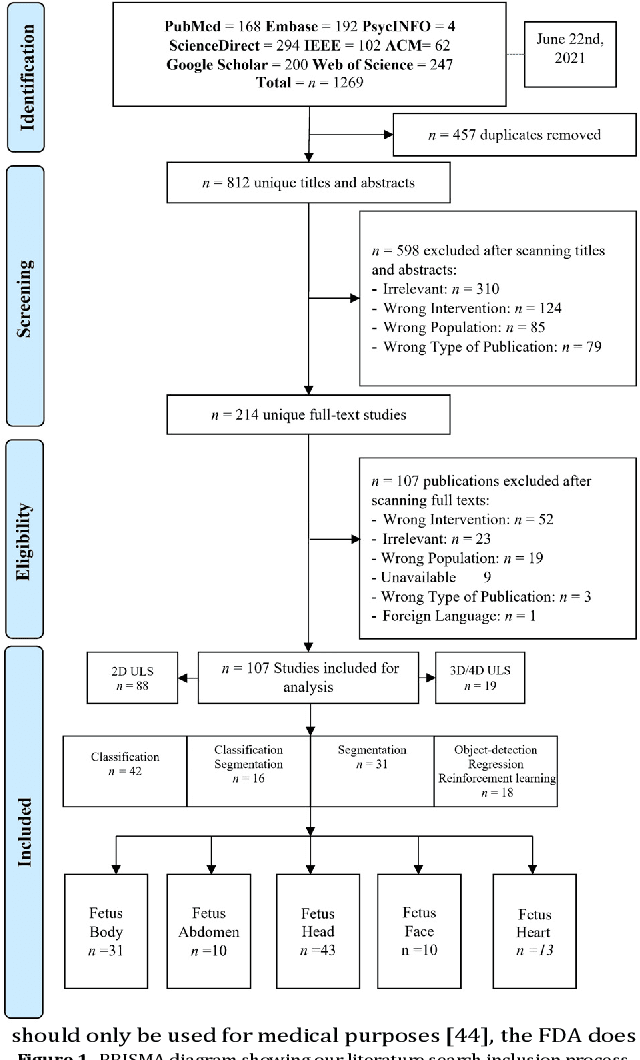

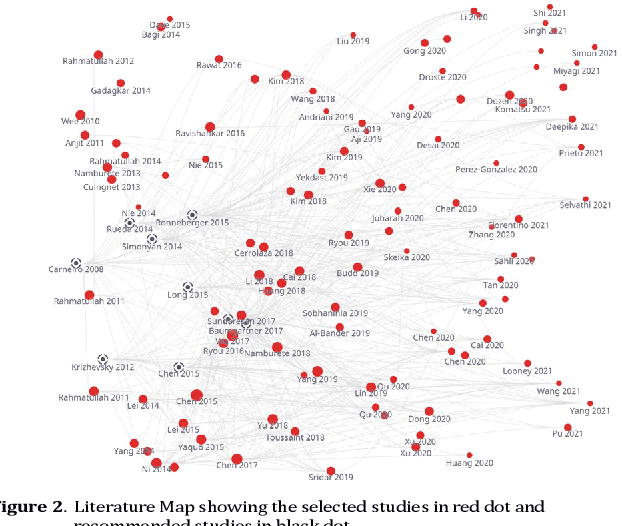

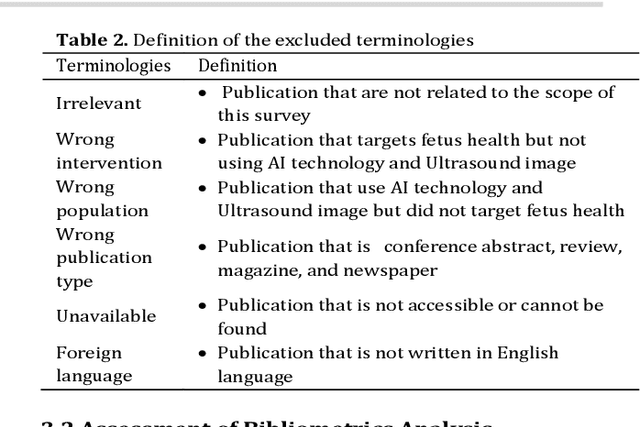

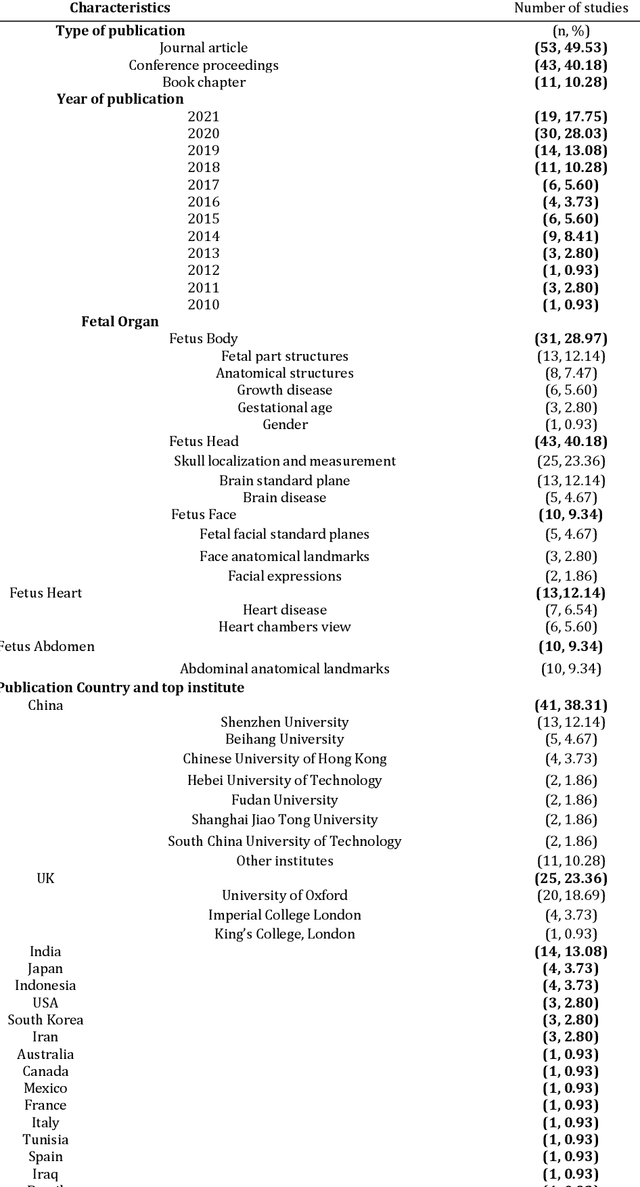

Abstract: Developing innovative informatics approaches aimed at enhancing fetal monitoring is a burgeoning field of study in reproductive medicine. Several reviews have been conducted on artificial intelligence (AI) techniques to improve pregnancy outcomes, but they are limited by their focus on specific data, such as the mother's care during pregnancy. This systematic survey aims to explore how AI can assist with fetal growth monitoring via ultrasound (US) images. We searched eight medical and computer science bibliographic databases: PubMed, Embase, PsycINFO, ScienceDirect, IEEE Xplore, ACM Library, Google Scholar, and the Web of Science. We retrieved studies published between 2010 and 2021. Data extracted from the studies were synthesized using a narrative approach. Of the 1269 retrieved studies, we included 107 distinct studies that were relevant to the survey topic. We found that 2D ultrasound images were more popular (n=88) than 3D and 4D ultrasound images (n=19). Classification was the most used method (n=42), followed by segmentation (n=31), classification integrated with segmentation (n=16), and other miscellaneous methods such as object detection, regression, and reinforcement learning (n=18). The most common areas within the pregnancy domain were the fetus head (n=43), then the fetus body (n=31), fetus heart (n=13), fetus abdomen (n=10), and lastly the fetus face (n=10). In the most recent studies, deep learning techniques were primarily used (n=81), followed by machine learning (n=16), artificial neural networks (n=7), and reinforcement learning (n=2). AI techniques played a crucial role in predicting fetal diseases and identifying fetal anatomical structures during pregnancy. More research, such as pilot studies and randomized controlled trials on AI and its applications in a hospital setting, is required to validate this technology from a physician's perspective.
