Luca Garello

Next-Gen Museum Guides: Autonomous Navigation and Visitor Interaction with an Agentic Robot

Jul 16, 2025

Abstract: Autonomous robots are increasingly being tested in public spaces to enhance user experiences, particularly in cultural and educational settings. This paper presents the design, implementation, and evaluation of the autonomous museum guide robot Alter-Ego, equipped with advanced navigation and interactive capabilities. The robot leverages state-of-the-art Large Language Models (LLMs) to provide real-time, context-aware question-and-answer (Q&A) interactions, allowing visitors to engage in conversations about exhibits. It also employs robust simultaneous localization and mapping (SLAM) techniques, enabling seamless navigation through museum spaces and route adaptation based on user requests. The system was tested in a real museum environment with 34 participants, combining qualitative analysis of visitor-robot conversations and quantitative analysis of pre- and post-interaction surveys. Results showed that the robot was generally well-received and contributed to an engaging museum experience, despite some limitations in comprehension and responsiveness. This study sheds light on human-robot interaction (HRI) in cultural spaces, highlighting not only the potential of AI-driven robotics to support accessibility and knowledge acquisition, but also the current limitations and challenges of deploying such technologies in complex, real-world environments.

Building Knowledge from Interactions: An LLM-Based Architecture for Adaptive Tutoring and Social Reasoning

Apr 02, 2025

Abstract: Integrating robotics into everyday scenarios like tutoring or physical training requires robots capable of adaptive, socially engaging, and goal-oriented interactions. While Large Language Models show promise in human-like communication, their standalone use is hindered by memory constraints and contextual incoherence. This work presents a multimodal, cognitively inspired framework that enhances LLM-based autonomous decision-making in social and task-oriented Human-Robot Interaction. Specifically, we develop an LLM-based agent for a robot trainer, balancing social conversation with task guidance and goal-driven motivation. To further enhance autonomy and personalization, we introduce a memory system for selecting, storing, and retrieving experiences, facilitating generalized reasoning based on knowledge built across different interactions. A preliminary HRI user study and offline experiments with a synthetic dataset validate our approach, demonstrating the system's ability to manage complex interactions, autonomously drive training tasks, and build and retrieve contextual memories, advancing socially intelligent robotics.

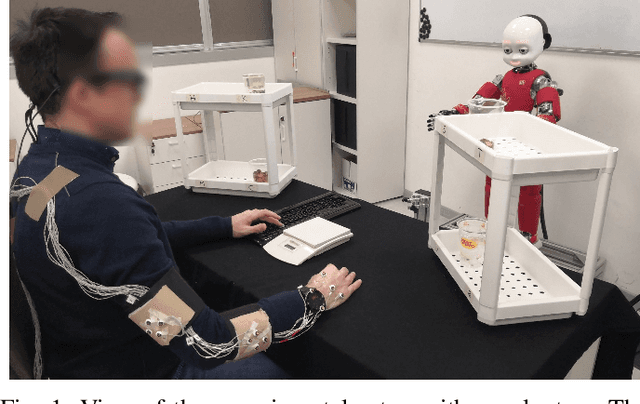

Robots with Different Embodiments Can Express and Influence Carefulness in Object Manipulation

Aug 03, 2022

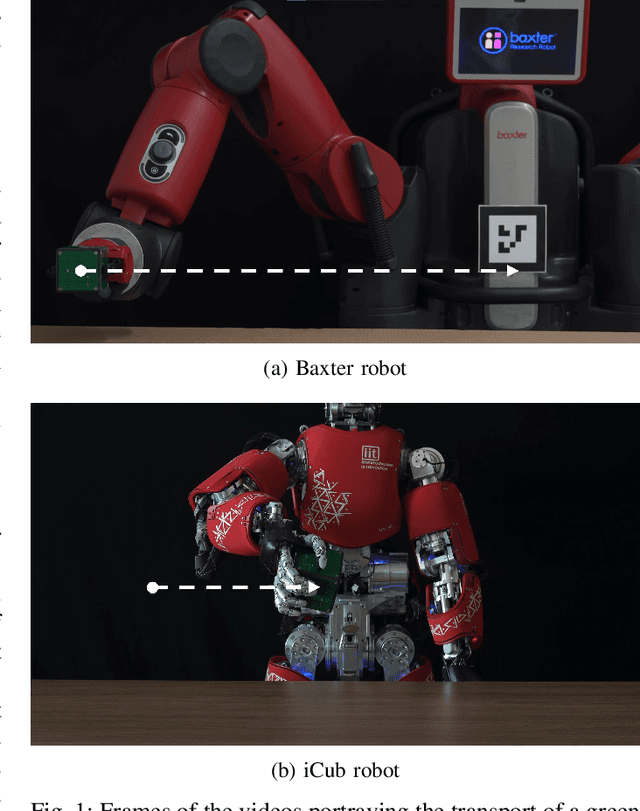

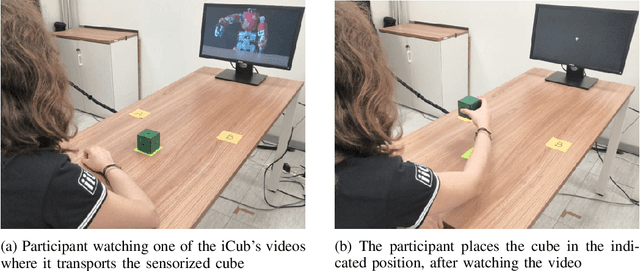

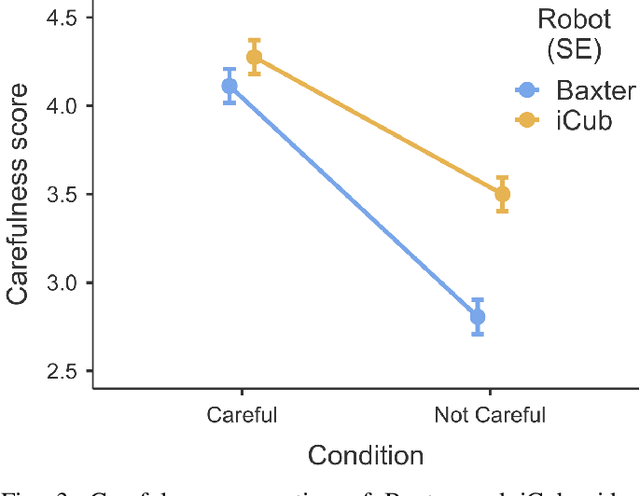

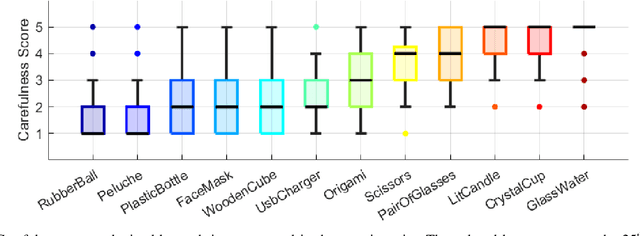

Abstract: Humans have an extraordinary ability to communicate and read the properties of objects by simply watching them being carried by someone else. This level of communicative skill and interpretation, available to humans, is essential for collaborative robots if they are to interact naturally and effectively. For example, if a robot is handing over a fragile object, the human who receives it should be informed of its fragility in advance, through an immediate and implicit message, i.e., by the direct modulation of the robot's action. This work investigates the perception of object manipulations performed with a communicative intent by two robots with different embodiments (an iCub humanoid robot and a Baxter robot). We designed the robots' movements to communicate carefulness or not during the transportation of objects. We found that not only is this feature correctly perceived by human observers, but it can also elicit a form of motor adaptation in subsequent human object manipulations. In addition, we gain insight into which motion features may induce people to manipulate an object more or less carefully.

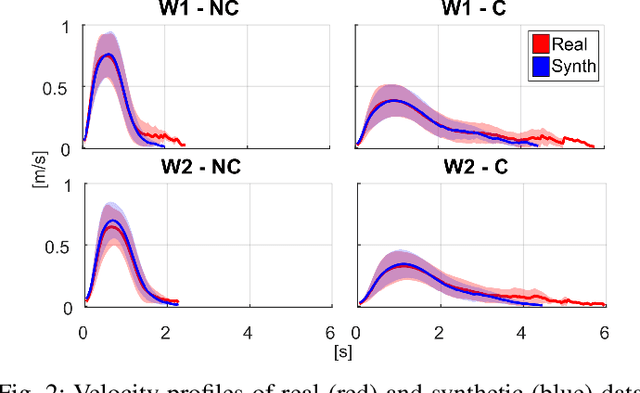

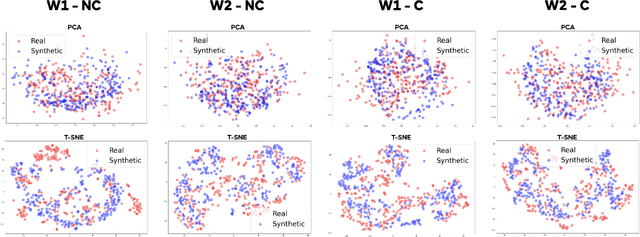

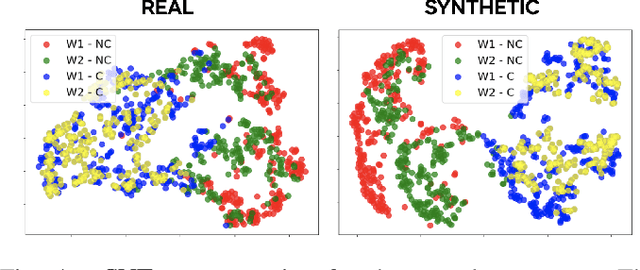

Synthesis and Execution of Communicative Robotic Movements with Generative Adversarial Networks

Mar 31, 2022

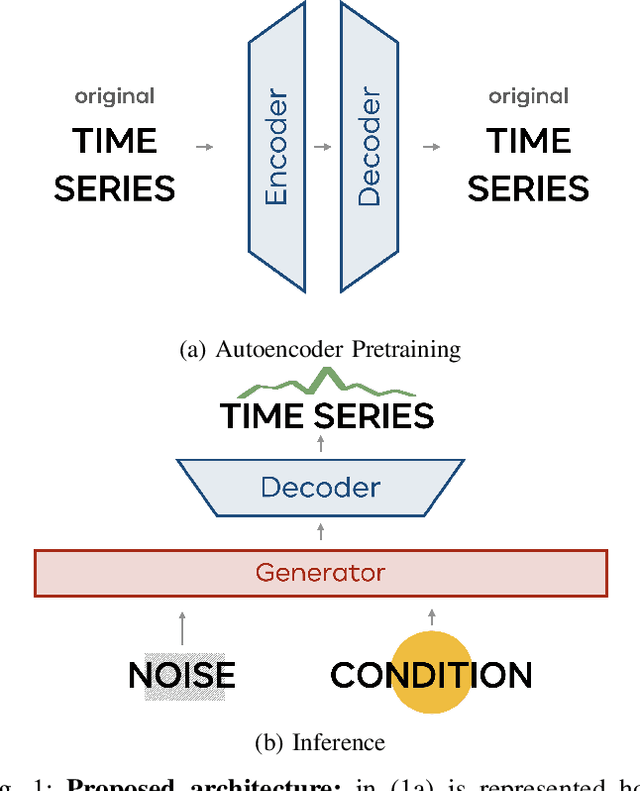

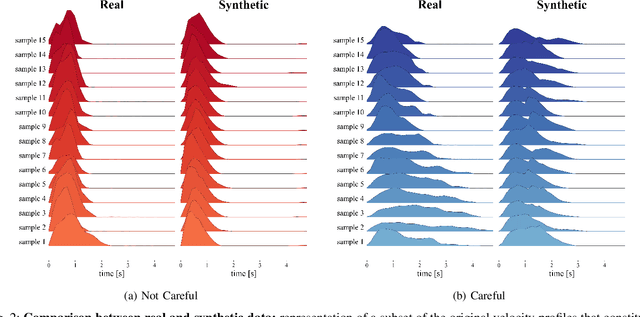

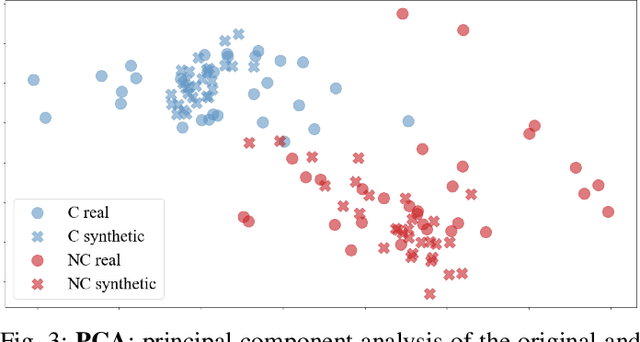

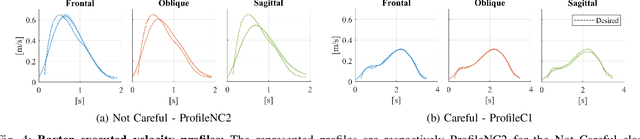

Abstract: Object manipulation is a natural activity we perform every day. How humans handle objects can communicate not only the willfulness of the action, or key aspects of the context in which we operate, but also the properties of the objects involved, without any need for explicit verbal description. Since human intelligence comprises the ability to read the context, allowing robots to perform actions that intuitively convey this kind of information would greatly facilitate collaboration. In this work, we focus on how to transfer to two different robotic platforms the same kinematics modulation that humans adopt when manipulating delicate objects, aiming to endow robots with the capability to show carefulness in their movements. We choose to modulate the velocity profile adopted by the robots' end-effector, inspired by what humans do when transporting objects with different characteristics. We exploit a novel Generative Adversarial Network architecture, trained with human kinematics examples, to generalize over them and generate new and meaningful velocity profiles, associated with either careful or not careful attitudes. This approach would allow next-generation robots to select the most appropriate style of movement, depending on the perceived context, and autonomously generate their motor action execution.

Property-Aware Robot Object Manipulation: a Generative Approach

Jun 08, 2021

Abstract: When transporting an object, we unconsciously adapt our movement to its properties, for instance by slowing down when the item is fragile. The most relevant features of an object are immediately revealed to a human observer by the way the handling occurs, without any need for verbal description. It would greatly facilitate collaboration to enable humanoid robots to perform movements that convey similar intuitive cues to observers. In this work, we focus on how to generate robot motion adapted to the hidden properties of the manipulated objects, such as their weight and fragility. We explore the possibility of leveraging Generative Adversarial Networks to synthesize new actions coherent with the properties of the object. The use of a generative approach allows us to create new and consistent motion patterns without the need to collect a large number of recorded human-led demonstrations. Moreover, the informative content of the actions is preserved. Our results show that Generative Adversarial Nets can be a powerful tool for the generation of novel and meaningful transportation actions, which are effectively modulated as a function of the object weight and the carefulness required in its handling.
