Lu Ji

Who Responded to Whom: The Joint Effects of Latent Topics and Discourse in Conversation Structure

Apr 17, 2021

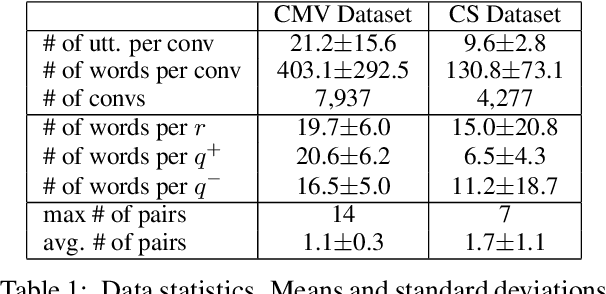

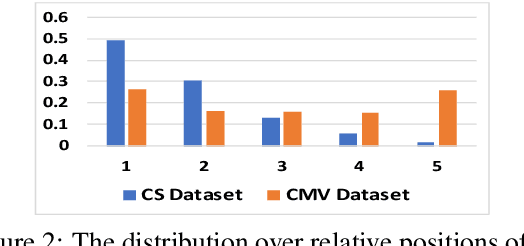

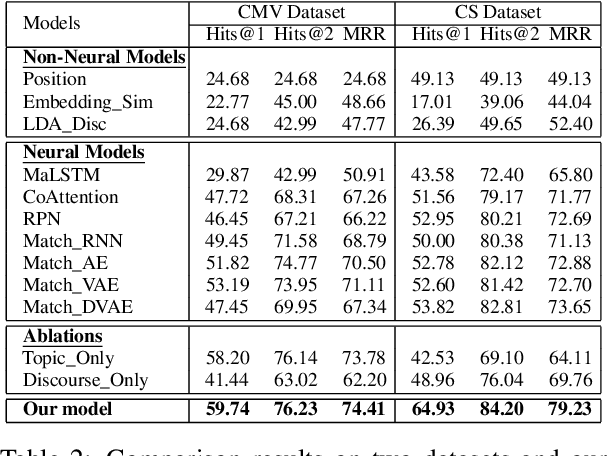

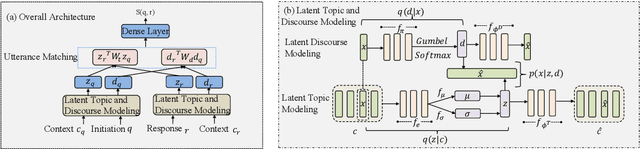

Abstract: Numerous online conversations are produced on a daily basis, resulting in a pressing need for conversation understanding. As a basis for structuring a discussion, we identify the responding relations in the conversation discourse, which link response utterances to their initiations. To figure out who responded to whom, we explore how the consistency of topic content and the dependency of discourse roles indicate such interactions, whereas most prior work ignores the effects of latent factors underlying word occurrences. We propose a model that learns latent topics and discourse in word distributions and predicts pairwise initiation-response links by exploiting topic consistency and discourse dependency. Experimental results on both English and Chinese conversations show that our model significantly outperforms the previous state of the art, for example 79 vs. 73 MRR on Chinese customer service dialogues. We further probe into our outputs and shed light on how topics and discourse indicate conversational user interactions.

Discrete Argument Representation Learning for Interactive Argument Pair Identification

Nov 05, 2019

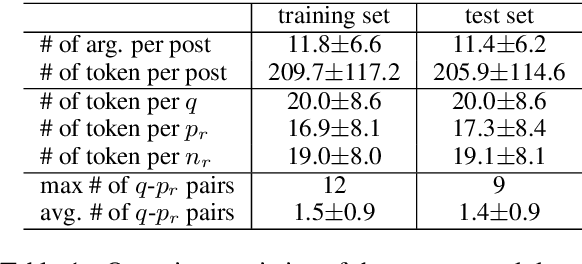

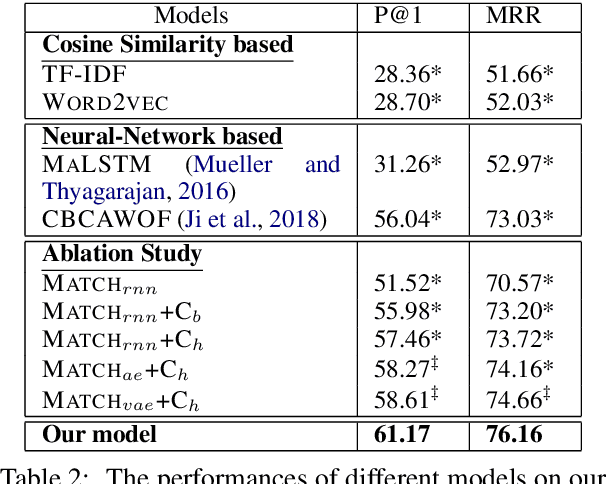

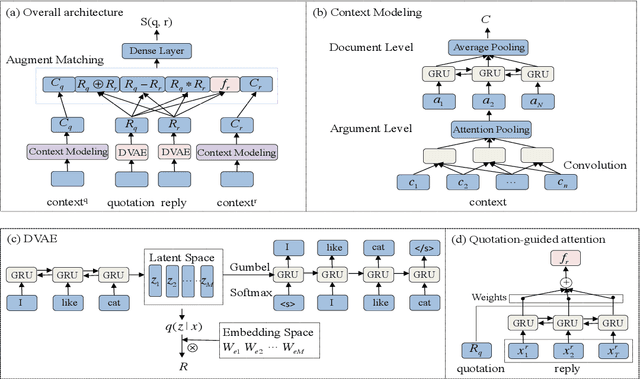

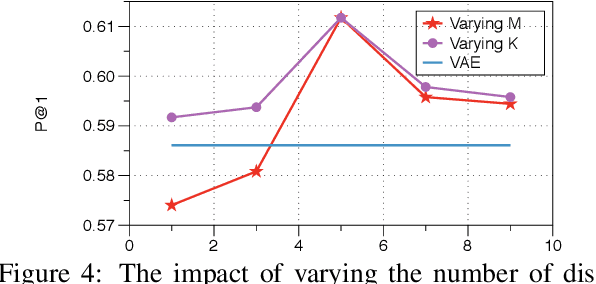

Abstract: In this paper, we focus on extracting interactive argument pairs from two posts with opposite stances on a certain topic. Considering that opinions are exchanged from different perspectives on the topic under discussion, we study discrete representations for arguments to capture varying aspects of argumentation language (e.g., the debate focus and the participant behavior). Moreover, we utilize a hierarchical structure to model post-wise information, incorporating contextual knowledge. Experimental results on the large-scale dataset collected from CMV show that our proposed framework can significantly outperform the competitive baselines. Further analyses reveal why our model yields superior performance and demonstrate the usefulness of our learned representations.
