Lixi Zhu

A LLM-based Controllable, Scalable, Human-Involved User Simulator Framework for Conversational Recommender Systems

May 13, 2024

Abstract: A Conversational Recommender System (CRS) leverages real-time feedback from users to dynamically model their preferences, enhancing the system's ability to provide personalized recommendations and improving the overall user experience. CRS has demonstrated significant promise, prompting researchers to concentrate on developing user simulators that are both more realistic and more trustworthy. The emergence of Large Language Models (LLMs) has marked a new era in computational capabilities, with LLMs exhibiting human-level intelligence on various tasks. Researchers have begun using LLMs to build user simulators for evaluating CRS performance. Although these efforts are innovative, they come with certain limitations. In this work, we introduce a Controllable, Scalable, and Human-Involved (CSHI) simulator framework that manages the behavior of user simulators across various stages via a plugin manager. CSHI customizes the simulation of user behavior and interactions to provide a more lifelike and convincing user interaction experience. Through experiments and case studies in two conversational recommendation scenarios, we show that our framework can adapt to a variety of conversational recommendation settings and effectively simulate users' personalized preferences. Consequently, our simulator generates feedback that closely mirrors that of real users. This facilitates a reliable assessment of existing CRS studies and promotes the creation of high-quality conversational recommendation datasets.
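
The abstract does not describe how the plugin manager is implemented. As a rough illustration only, the sketch below shows one common way to organize stage-based plugins: callables registered under a stage name and applied in order to a simulator state dictionary. The class `PluginManager`, the stage names `profile` and `respond`, and the plugin functions are all hypothetical and are not CSHI's actual interface.

```python
from collections import defaultdict
from typing import Callable, Dict, List

# Hypothetical plugin type: takes the simulator state, returns an updated state.
Plugin = Callable[[dict], dict]


class PluginManager:
    """Minimal stage-keyed plugin registry (illustrative sketch, not CSHI's API)."""

    def __init__(self) -> None:
        self._plugins: Dict[str, List[Plugin]] = defaultdict(list)

    def register(self, stage: str, plugin: Plugin) -> None:
        # Plugins registered for the same stage run in registration order.
        self._plugins[stage].append(plugin)

    def run(self, stage: str, state: dict) -> dict:
        # A stage with no registered plugins returns the state unchanged.
        for plugin in self._plugins[stage]:
            state = plugin(state)
        return state


# Hypothetical plugins for a hypothetical "profile" stage.
def set_preferences(state: dict) -> dict:
    return {**state, "preferred_genres": ["sci-fi"]}


def add_persona(state: dict) -> dict:
    return {**state, "persona": "terse, impatient"}


manager = PluginManager()
manager.register("profile", set_preferences)
manager.register("profile", add_persona)
user = manager.run("profile", {"user_id": 42})
print(user)  # {'user_id': 42, 'preferred_genres': ['sci-fi'], 'persona': 'terse, impatient'}
```

Keeping each plugin a pure function from state to state makes it easy to enable, disable, or reorder behaviors per stage, which is one plausible way to obtain controllability and scalability; the paper may realize these properties differently.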

How Reliable is Your Simulator? Analysis on the Limitations of Current LLM-based User Simulators for Conversational Recommendation

Mar 25, 2024

Abstract: A Conversational Recommender System (CRS) interacts with users through natural language to understand their preferences and provide personalized recommendations in real time. CRS has demonstrated significant potential, and developing more realistic and reliable user simulators has become a key research focus. Recently, the capabilities of Large Language Models (LLMs) have attracted considerable attention in various fields, and efforts are underway to construct user simulators based on LLMs. While these works are innovative, they also have limitations that require attention. In this work, we analyze the limitations of using LLMs to construct user simulators for CRS, to guide future research. To this end, we conduct an analytical validation of the notable work iEvaLM. Through multiple experiments on two widely used conversational recommendation datasets, we highlight several issues with current evaluation methods for LLM-based user simulators: (1) data leakage, which occurs in the conversational history and in the user simulator's replies, inflates evaluation results; (2) the success of CRS recommendations depends more on the availability and quality of the conversational history than on the user simulator's responses; and (3) controlling the user simulator's output through a single prompt template proves challenging. To overcome these limitations, we propose SimpleUserSim, which employs a straightforward strategy to guide the topic toward the target items. Our study validates the ability of CRS models to utilize the interaction information, significantly improving the recommendation results.
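
Issue (1) concerns target item titles surfacing in the conversational history or in the simulator's replies. A minimal sketch of how such leakage might be audited is shown below, assuming plain case-insensitive substring matching against a list of target titles. The helpers `find_leaked_titles` and `mask_titles` and the example dialogue are hypothetical; they do not reproduce the paper's procedure or SimpleUserSim.

```python
import re
from typing import List


def find_leaked_titles(turns: List[str], target_titles: List[str]) -> List[str]:
    """Return the target titles that appear verbatim (case-insensitive) in any turn."""
    leaked = []
    for title in target_titles:
        pattern = re.compile(re.escape(title), re.IGNORECASE)
        if any(pattern.search(turn) for turn in turns):
            leaked.append(title)
    return leaked


def mask_titles(text: str, target_titles: List[str], placeholder: str = "<ITEM>") -> str:
    """Replace every case-insensitive occurrence of a target title with a placeholder."""
    for title in target_titles:
        pattern = re.compile(re.escape(title), re.IGNORECASE)
        # A function replacement avoids backslash escapes in the placeholder being interpreted.
        text = pattern.sub(lambda _m: placeholder, text)
    return text


# Hypothetical dialogue and target items.
history = ["I loved Inception, any similar movies?", "How about Interstellar?"]
targets = ["Interstellar", "The Prestige"]
print(find_leaked_titles(history, targets))  # ['Interstellar']
print(mask_titles("How about Interstellar?", targets))  # How about <ITEM>?
```

Substring matching is deliberately naive: it misses paraphrases and aliases and can over-flag short titles that occur inside other words, so a more careful audit would need some form of entity linking.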

Knowledge Graph-enhanced Sampling for Conversational Recommender System

Oct 13, 2021

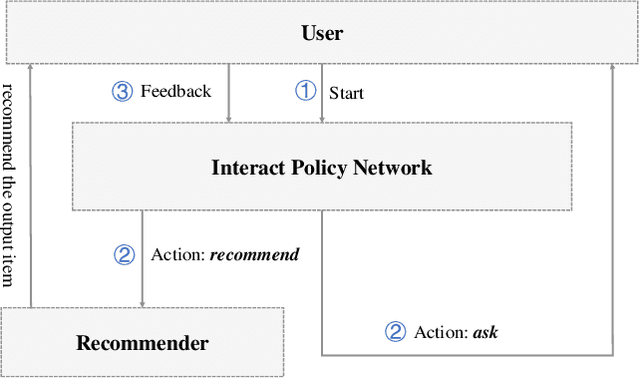

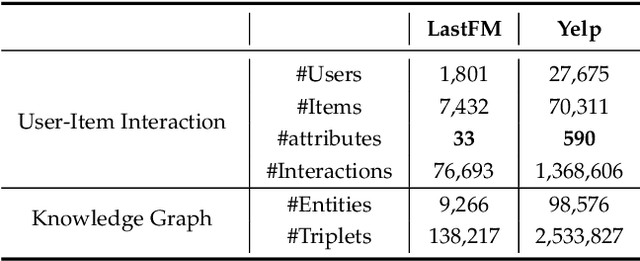

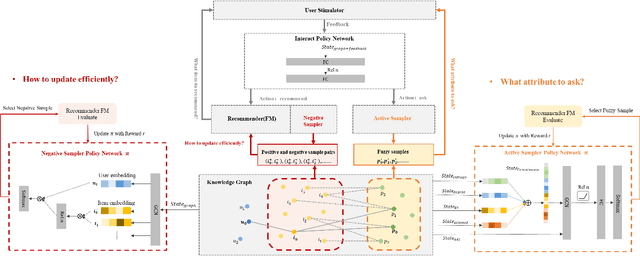

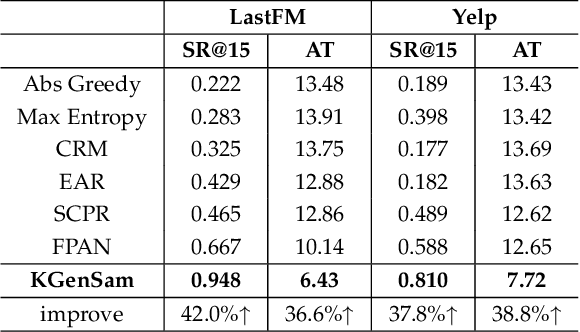

Abstract: Traditional recommendation systems mainly use offline user data to train offline models and then recommend items to online users, so they suffer from unreliable estimates of user preferences based on sparse and noisy historical data. A Conversational Recommendation System (CRS) uses the interactive form of dialogue systems to address these intrinsic problems of traditional recommendation systems. However, because they lack contextual information modeling, existing CRS models cannot handle the exploitation and exploration (E&E) problem well, which places a heavy burden on users. To address this issue, this work proposes a contextual information enhancement model tailored for CRS, called Knowledge Graph-enhanced Sampling (KGenSam). KGenSam integrates the dynamic graph of user interaction data with external knowledge into one heterogeneous Knowledge Graph (KG) that serves as the contextual information environment. Two samplers are then designed to enhance knowledge: one samples fuzzy samples with high uncertainty to obtain user preferences, and the other samples reliable negative samples to update the recommender. Together they achieve efficient acquisition of user preferences and model updating, providing a powerful solution for CRS to deal with the E&E problem. Experimental results on two real-world datasets demonstrate the superiority of KGenSam, with significant improvements over state-of-the-art methods.
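
The abstract names two samplers but not their exact form. The sketch below substitutes two generic stand-ins, assuming a model that outputs a preference probability for each candidate: entropy-based uncertainty sampling to pick the most ambiguous candidates (playing the role of the "fuzzy samples"), and a lowest-score heuristic to pick reliable negatives from candidates the user has not accepted. The functions and the toy `scores` dictionary are hypothetical; KGenSam's samplers operate over its heterogeneous KG and are not reproduced here.

```python
import math
from typing import Dict, List, Set


def binary_entropy(p: float) -> float:
    """Entropy (in bits) of a Bernoulli(p) preference prediction."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def sample_uncertain(scores: Dict[str, float], k: int) -> List[str]:
    """Pick the k candidates whose predicted preference is most uncertain (closest to 0.5)."""
    return sorted(scores, key=lambda c: binary_entropy(scores[c]), reverse=True)[:k]


def sample_reliable_negatives(scores: Dict[str, float], positives: Set[str], k: int) -> List[str]:
    """Pick the k non-positive candidates the model is most confident the user dislikes."""
    candidates = [c for c in scores if c not in positives]
    return sorted(candidates, key=lambda c: scores[c])[:k]


# Hypothetical predicted preference probabilities for five candidates.
scores = {"a": 0.95, "b": 0.52, "c": 0.10, "d": 0.40, "e": 0.03}
print(sample_uncertain(scores, 2))  # ['b', 'd']
print(sample_reliable_negatives(scores, {"a"}, 2))  # ['e', 'c']
```

Roughly speaking, querying the most uncertain candidates gathers the most informative feedback per question, while confidently low-scored negatives give the recommender a low-noise update signal.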

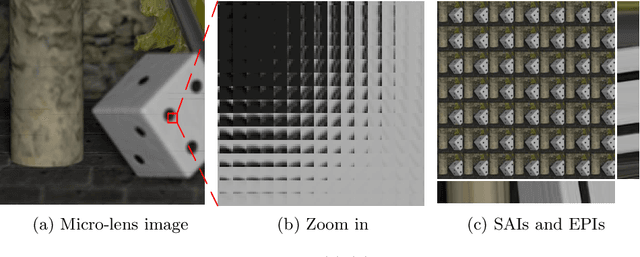

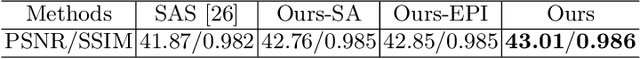

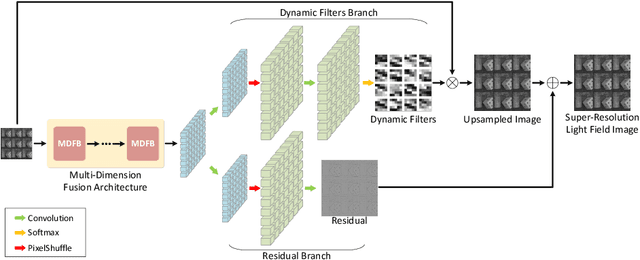

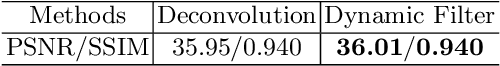

Multi-Dimension Fusion Network for Light Field Spatial Super-Resolution using Dynamic Filters

Aug 26, 2020

Abstract: Light field cameras have proven to be powerful tools for 3D reconstruction and virtual reality applications. However, the limited resolution of light field images makes further information display and extraction difficult. In this paper, we introduce a novel learning-based framework to improve the spatial resolution of light fields. First, features from different dimensions are extracted in parallel and fused in our multi-dimension fusion architecture. These features are then used to generate dynamic filters, which extract subpixel information from micro-lens images and also implicitly account for disparity information. Finally, additional high-frequency details learned in the residual branch are added to the upsampled images to obtain the final super-resolved light fields. Experimental results show that the proposed method uses fewer parameters yet outperforms other state-of-the-art methods on various datasets. Our reconstructed images also show sharp details and distinct lines in both sub-aperture images and epipolar plane images.
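
The abstract does not specify how the generated filters are applied. Below is a minimal pure-Python sketch of the general dynamic-filter idea, assuming one k-by-k kernel per low-resolution pixel and per output sub-pixel position, with replicate padding at the borders. In the paper the filters are produced by the network from fused multi-dimensional features; here they are simply passed in, and `dynamic_upsample` and `center_tap_filters` are hypothetical helpers.

```python
from typing import List

Image = List[List[float]]
# filters[y][x][i][j][dy][dx]: k-by-k kernel producing output sub-pixel (i, j) of low-res pixel (y, x).
Filters = List[List[List[List[List[List[float]]]]]]


def dynamic_upsample(img: Image, filters: Filters, r: int, k: int) -> Image:
    """Upsample img by factor r using per-pixel, per-sub-pixel k-by-k filters (replicate padding)."""
    h, w = len(img), len(img[0])
    half = k // 2
    out = [[0.0] * (w * r) for _ in range(h * r)]
    for y in range(h):
        for x in range(w):
            for i in range(r):
                for j in range(r):
                    kernel = filters[y][x][i][j]
                    acc = 0.0
                    for dy in range(k):
                        for dx in range(k):
                            # Clamp neighbor coordinates to the image border.
                            yy = min(max(y + dy - half, 0), h - 1)
                            xx = min(max(x + dx - half, 0), w - 1)
                            acc += kernel[dy][dx] * img[yy][xx]
                    out[y * r + i][x * r + j] = acc
    return out


def center_tap_filters(h: int, w: int, r: int, k: int) -> Filters:
    """Hypothetical test filters that keep only the center tap of every kernel."""
    kernel = [[1.0 if (dy, dx) == (k // 2, k // 2) else 0.0 for dx in range(k)] for dy in range(k)]
    return [[[[kernel for _ in range(r)] for _ in range(r)] for _ in range(w)] for _ in range(h)]


img = [[1.0, 2.0], [3.0, 4.0]]
up = dynamic_upsample(img, center_tap_filters(2, 2, 2, 3), r=2, k=3)
print(up)  # [[1.0, 1.0, 2.0, 2.0], [1.0, 1.0, 2.0, 2.0], [3.0, 3.0, 4.0, 4.0], [3.0, 3.0, 4.0, 4.0]]
```

With center-tap kernels every sub-pixel copies its source pixel, so the result reduces to nearest-neighbor upsampling; learned kernels instead weight the neighborhood differently for each pixel and each sub-pixel position, which is what lets dynamic filters recover sub-pixel detail.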
