Lior Rokach

Silent Killer: Optimizing Backdoor Trigger Yields a Stealthy and Powerful Data Poisoning Attack

Jan 05, 2023

Abstract: We propose a stealthy and powerful backdoor attack on neural networks based on data poisoning (DP). In contrast to previous attacks, both the poison and the trigger in our method are stealthy. We are able to change the model's classification of samples from a source class to a target class chosen by the attacker. We do so by using a small number of poisoned training samples with nearly imperceptible perturbations, without changing their labels. At inference time, we use a stealthy perturbation added to the attacked samples as a trigger. This perturbation is crafted as a universal adversarial perturbation (UAP), and the poison is crafted using gradient alignment coupled to this trigger. Our method is highly efficient in crafting time compared to previous methods and requires only a trained surrogate model without additional retraining. Our attack achieves state-of-the-art results in terms of attack success rate while maintaining high accuracy on clean samples.
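The abstract does not spell out the gradient alignment objective. As a rough illustration only, the sketch below assumes a formulation common in gradient-matching poisoning attacks: one minus the cosine similarity between the gradient induced by the poisoned samples and the gradient of the attacker's objective on triggered samples. The function names and the flat-list gradient representation are hypothetical and not taken from the paper.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two flattened gradient vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("gradients must be non-zero")
    return dot / (norm_u * norm_v)


def alignment_loss(poison_grad, trigger_grad):
    """Assumed objective: 0 when the gradients point the same way, 2 when opposite.

    poison_grad:  gradient of the training loss on the poisoned samples.
    trigger_grad: gradient of the attacker's loss on triggered source samples.
    """
    return 1.0 - cosine_similarity(poison_grad, trigger_grad)
```

Under this assumption, an attacker would adjust the poison perturbations to drive the loss toward zero, so that training on the poisons moves the model in the same direction as training on triggered samples would.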

Cross Version Defect Prediction with Class Dependency Embeddings

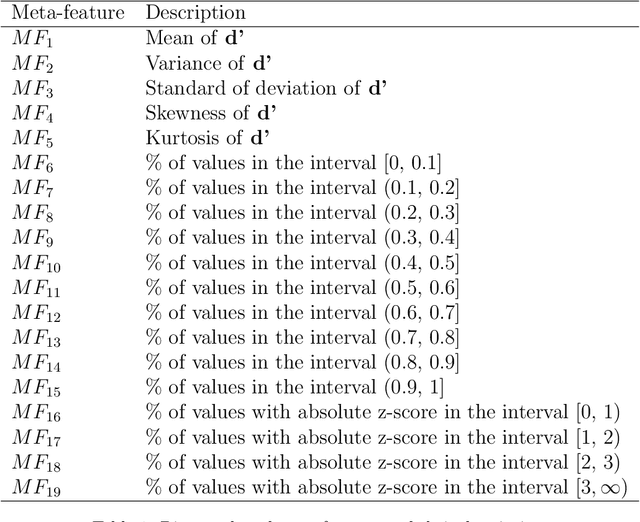

Dec 29, 2022

Abstract: Software Defect Prediction aims at predicting which software modules are most likely to contain defects. The idea behind this approach is to save time during the development process by helping find bugs early. Defect Prediction models are based on historical data. Specifically, one can use data collected from past software distributions, or versions, of the same target application under analysis. Defect Prediction based on past versions is called Cross Version Defect Prediction (CVDP). Traditionally, Static Code Metrics are used to predict defects. In this work, we use the Class Dependency Network (CDN) as another predictor for defects, combined with static code metrics. CDN data contains structural information about the target application being analyzed. Usually, CDN data is analyzed using different handcrafted network measures, like Social Network metrics. Our approach uses network embedding techniques to leverage CDN information without having to build the metrics manually. In order to use the embeddings between versions, we incorporate different embedding alignment techniques. To evaluate our approach, we performed experiments on 24 software release pairs and compared it against several benchmark methods. In these experiments, we analyzed the performance of two different graph embedding techniques, three anchor selection approaches, and two alignment techniques. We also built a meta-model based on two different embeddings and achieved a statistically significant improvement in AUC of 4.7% (p < 0.002) over the baseline method.

Transfer learning for time series classification using synthetic data generation

Jul 16, 2022

Abstract: In this paper, we propose an innovative transfer learning method for time series classification. Instead of using an existing dataset from the UCR archive as the source dataset, we generated a synthetic dataset of 15,000,000 univariate time series using our unique synthetic time series generator algorithm, which can generate data with diverse patterns and angles and different sequence lengths. Furthermore, instead of using classification tasks provided by the UCR archive as the source task, as previous studies did, we used our own 55 regression tasks as the source tasks, which produced better results than selecting classification tasks from the UCR archive.

A Universal Adversarial Policy for Text Classifiers

Jun 19, 2022

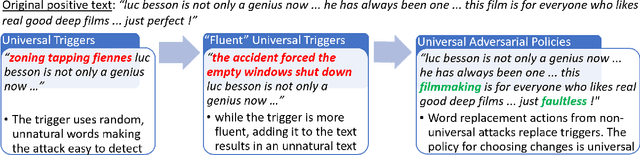

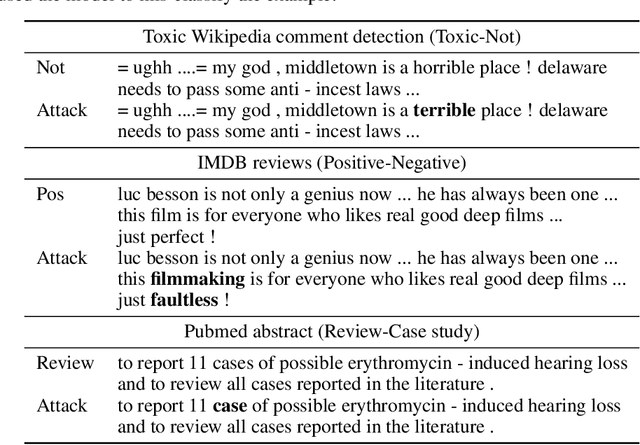

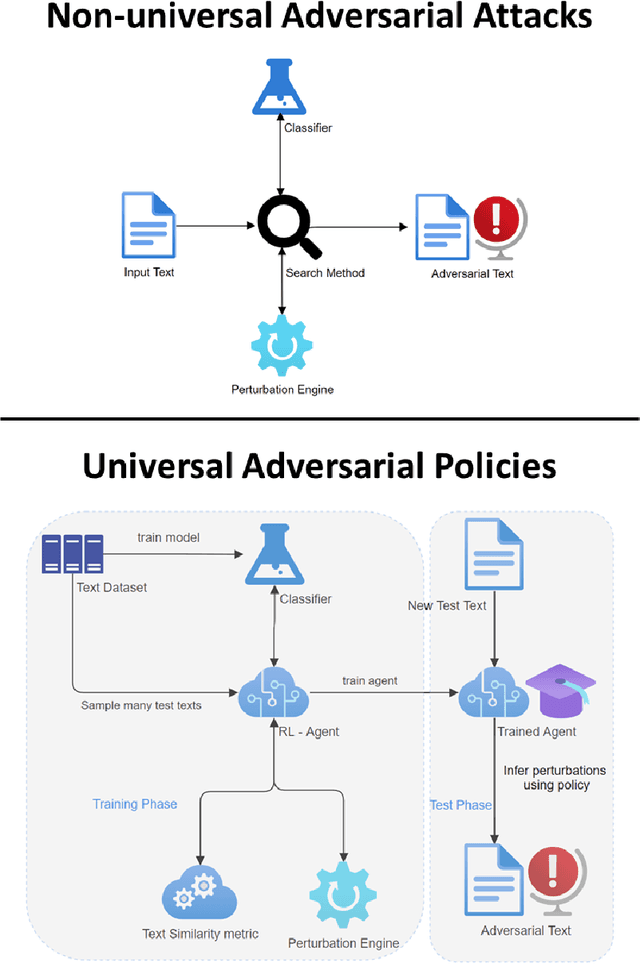

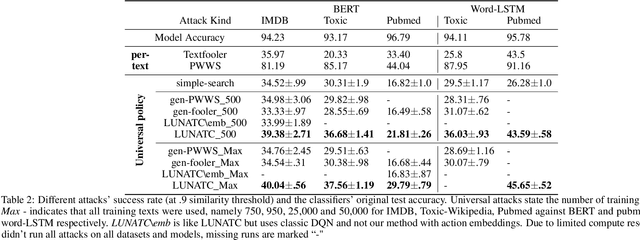

Abstract: Discovering the existence of universal adversarial perturbations had large theoretical and practical impacts on the field of adversarial learning. In the text domain, most universal studies focused on adversarial prefixes which are added to all texts. However, unlike the vision domain, adding the same perturbation to different inputs results in noticeably unnatural inputs. Therefore, we introduce a new universal adversarial setup - a universal adversarial policy, which has many advantages of other universal attacks but also results in valid texts - thus making it relevant in practice. We achieve this by learning a single search policy over a predefined set of semantics-preserving text alterations, on many texts. This formulation is universal in that the policy is successful in finding adversarial examples on new texts efficiently. Our approach uses text perturbations which were extensively shown to produce natural attacks in the non-universal setup (specific synonym replacements). We suggest a strong baseline approach for this formulation which uses reinforcement learning. Its ability to generalise (from as few as 500 training texts) shows that universal adversarial patterns exist in the text domain as well.

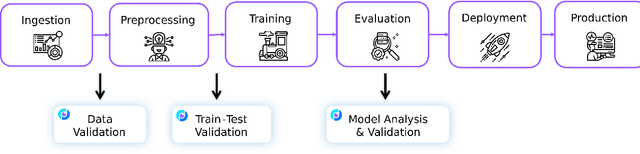

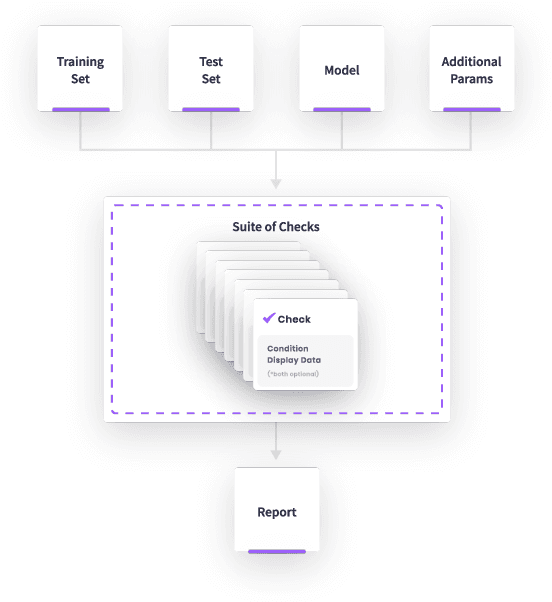

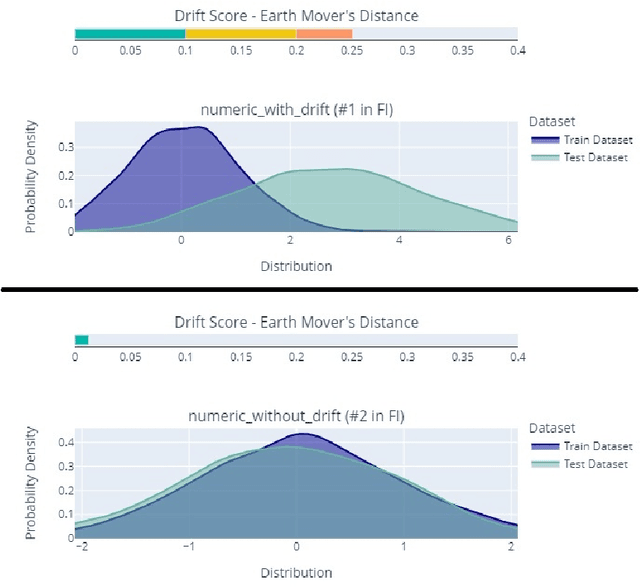

Deepchecks: A Library for Testing and Validating Machine Learning Models and Data

Mar 16, 2022

Abstract: This paper presents Deepchecks, a Python library for comprehensively validating machine learning models and data. Our goal is to provide an easy-to-use library comprising many checks related to various types of issues, such as model predictive performance, data integrity, data distribution mismatches, and more. The package is distributed under the GNU Affero General Public License (AGPL) and relies on core libraries from the scientific Python ecosystem: scikit-learn, PyTorch, NumPy, pandas, and SciPy. Source code, documentation, examples, and an extensive user guide can be found at https://github.com/deepchecks/deepchecks and https://docs.deepchecks.com/.

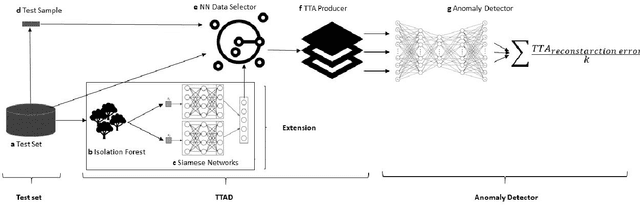

Boosting Anomaly Detection Using Unsupervised Diverse Test-Time Augmentation

Oct 29, 2021

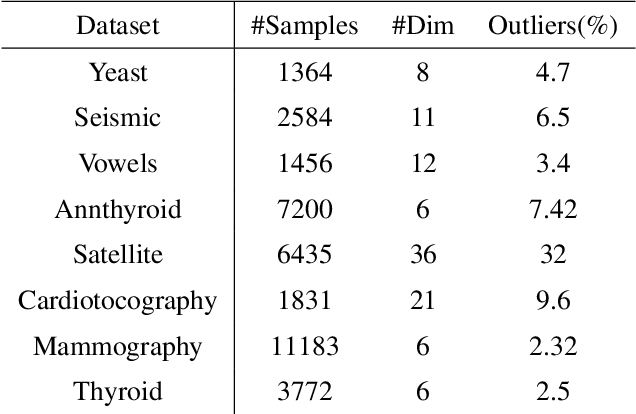

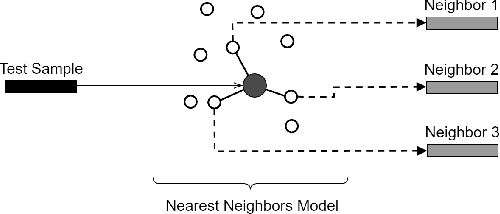

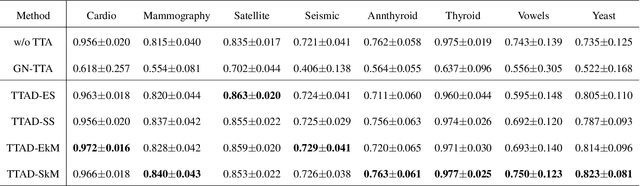

Abstract: Anomaly detection is a well-known task that involves the identification of abnormal events that occur relatively infrequently. Methods for improving anomaly detection performance have been widely studied. However, no studies utilizing test-time augmentation (TTA) for anomaly detection in tabular data have been performed. TTA involves aggregating the predictions of several synthetic versions of a given test sample; TTA produces different points of view for a specific test instance and might decrease its prediction bias. We propose the Test-Time Augmentation for anomaly Detection (TTAD) technique, a TTA-based method aimed at improving anomaly detection performance. TTAD augments a test instance based on its nearest neighbors; various methods, including the k-Means centroid and SMOTE methods, are used to produce the augmentations. Our technique utilizes a Siamese network to learn an advanced distance metric when retrieving a test instance's neighbors. Our experiments show that the anomaly detector that uses our TTA technique achieved significantly higher AUC results on all datasets evaluated.
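To make the test-time augmentation idea concrete, here is a minimal sketch under several simplifying assumptions that are not from the paper: plain Euclidean distance stands in for the learned Siamese metric, a toy k-nearest-neighbor distance serves as the anomaly detector, augmentations are SMOTE-style interpolations toward random neighbors, and the scores are aggregated with a simple mean. All function names are hypothetical.

```python
import math
import random
from statistics import mean


def euclidean(a, b):
    """Euclidean distance (a stand-in for the learned metric in the paper)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_distance_score(point, train, k=3):
    """Toy anomaly score: mean distance to the k nearest training points."""
    dists = sorted(euclidean(point, t) for t in train)
    return mean(dists[:k])


def nearest_neighbors(point, train, k):
    """Return the k training points closest to the test point."""
    return sorted(train, key=lambda t: euclidean(point, t))[:k]


def augment(point, neighbors, n_aug, rng):
    """SMOTE-style augmentation: interpolate toward a randomly chosen neighbor."""
    variants = []
    for _ in range(n_aug):
        nb = rng.choice(neighbors)
        lam = rng.random()
        variants.append([p + lam * (q - p) for p, q in zip(point, nb)])
    return variants


def tta_score(point, train, k=3, n_aug=5, seed=0):
    """Average the anomaly score over the test point and its augmentations."""
    rng = random.Random(seed)
    neighbors = nearest_neighbors(point, train, k)
    variants = [point] + augment(point, neighbors, n_aug, rng)
    return mean(knn_distance_score(v, train, k) for v in variants)


# Usage: a dense grid of "normal" points, one inlier and one obvious outlier.
train = [[x * 0.1, y * 0.1] for x in range(-5, 6) for y in range(-5, 6)]
inlier_score = tta_score([0.0, 0.0], train)
outlier_score = tta_score([5.0, 5.0], train)
```

In this toy setup the outlier still receives the higher aggregated score; whether augmentation improves AUC on real data depends on the detector and the augmentation method, which is what the paper evaluates.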

Enhancing Real-World Adversarial Patches with 3D Modeling Techniques

Feb 10, 2021

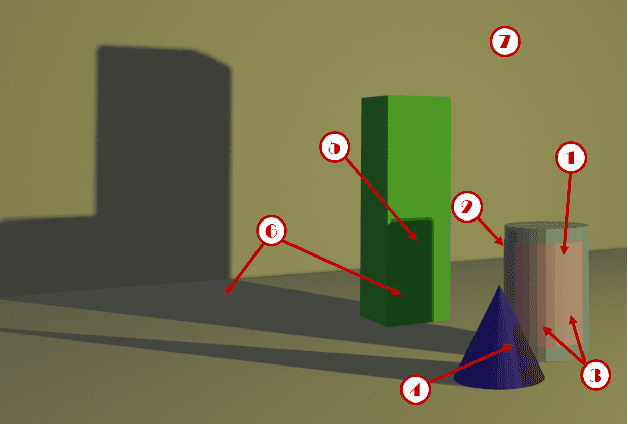

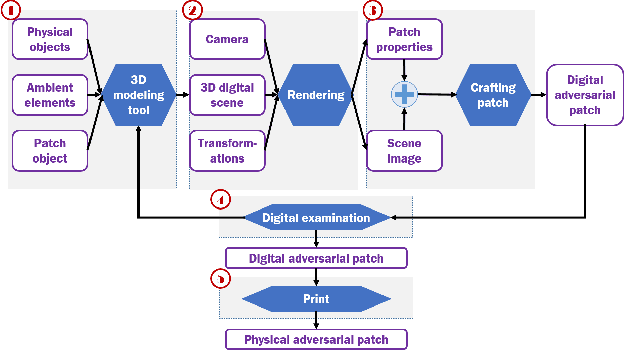

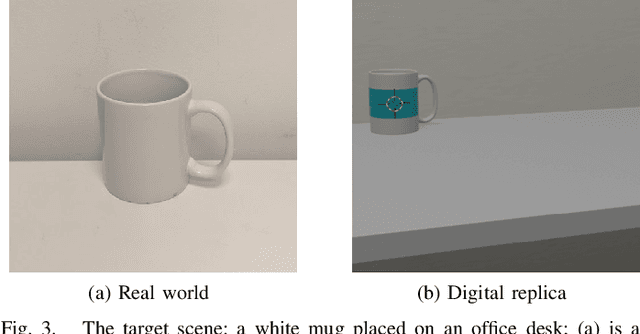

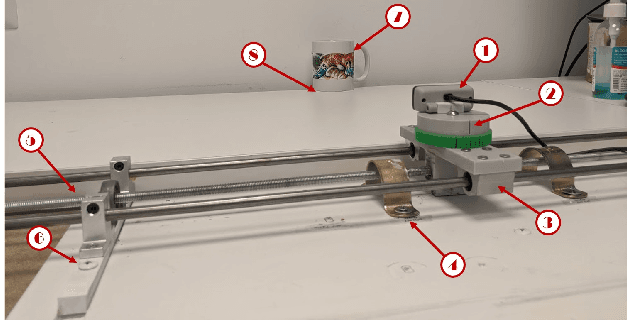

Abstract: Although many studies have examined adversarial examples in the real world, most of them relied on 2D photos of the attack scene; thus, the attacks proposed cannot address realistic environments with 3D objects or varied conditions. Studies that use 3D objects are limited, and in many cases, the real-world evaluation process is not replicable by other researchers, preventing others from reproducing the results. In this study, we present a framework that crafts an adversarial patch for an existing real-world scene. Our approach uses a 3D digital approximation of the scene as a simulation of the real world. With the ability to add and manipulate any element in the digital scene, our framework enables the attacker to improve the patch's robustness in real-world settings. We use the framework to create a patch for an everyday scene and evaluate its performance using a novel evaluation process that ensures that our results are reproducible in both the digital space and the real world. Our evaluation results show that the framework can generate adversarial patches that are robust to different settings in the real world.

Automatic selection of clustering algorithms using supervised graph embedding

Nov 16, 2020

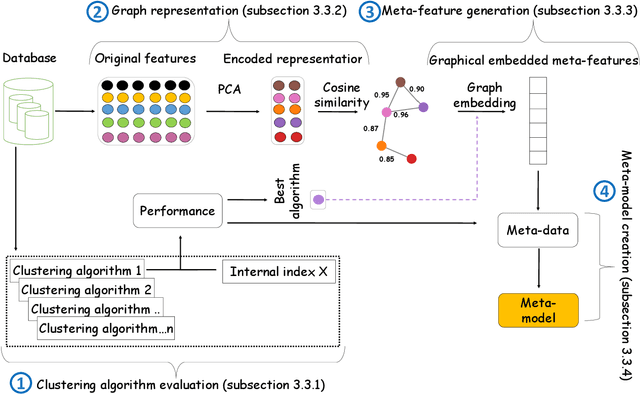

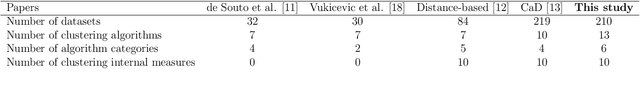

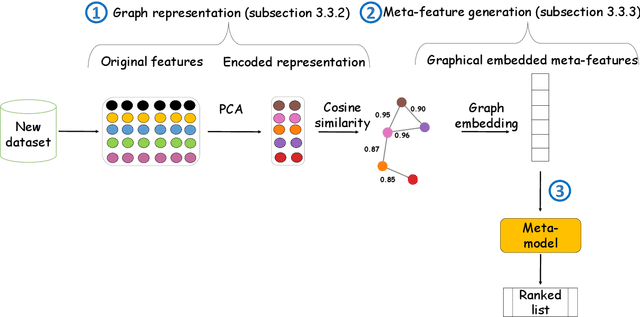

Abstract: The widespread adoption of machine learning (ML) techniques and the extensive expertise required to apply them have led to increased interest in automated ML solutions that reduce the need for human intervention. One of the main challenges in applying ML to previously unseen problems is algorithm selection - the identification of high-performing algorithm(s) for a given dataset, task, and evaluation measure. This study addresses the algorithm selection challenge for data clustering, a fundamental task in data mining that is aimed at grouping similar objects. We present MARCO-GE, a novel meta-learning approach for the automated recommendation of clustering algorithms. MARCO-GE first transforms datasets into graphs and then utilizes a graph convolutional neural network technique to extract their latent representation. Using the embedding representations obtained, MARCO-GE trains a ranking meta-model capable of accurately recommending top-performing algorithms for a new dataset and clustering evaluation measure. Extensive evaluation on 210 datasets, 13 clustering algorithms, and 10 clustering measures demonstrates the effectiveness of our approach and its dominance in terms of predictive and generalization performance over state-of-the-art clustering meta-learning approaches.

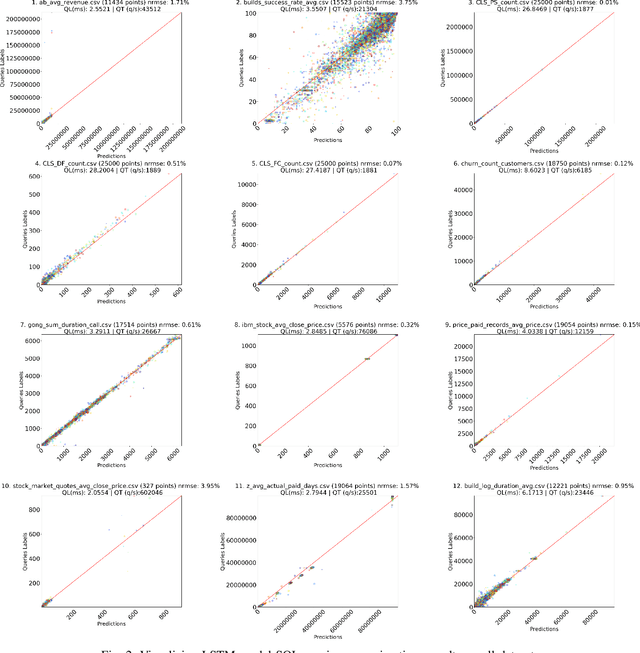

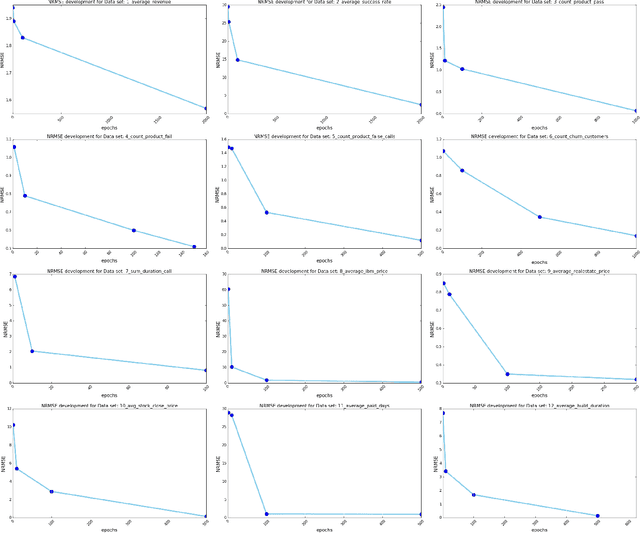

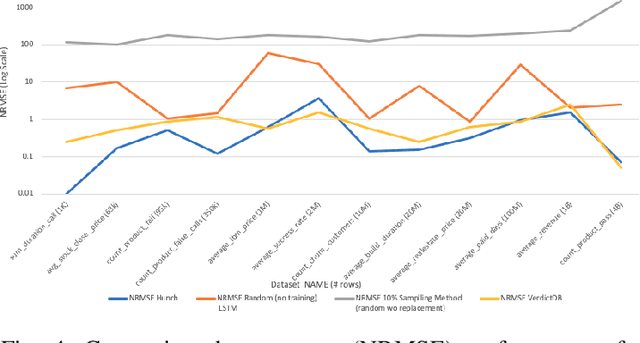

Approximating Aggregated SQL Queries With LSTM Networks

Nov 02, 2020

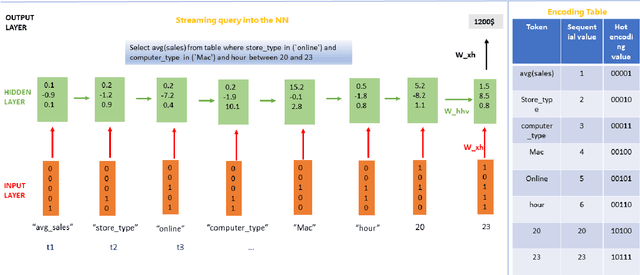

Abstract: Despite continuous investments in data technologies, the latency of querying data still poses a significant challenge. Modern analytic solutions require near real-time responsiveness both to make them interactive and to support automated processing. Current technologies (Hadoop, Spark, Dataflow) scan the dataset to execute queries. They focus on providing scalable data storage to maximize task execution speed. We argue that these solutions fail to offer an adequate level of interactivity since they depend on continual access to data. In this paper, we present a method for query approximation, also known as approximate query processing (AQP), that reduces the need to scan data during inference (query calculation), thus enabling a rapid query processing tool. We use an LSTM network to learn the relationship between queries and their results, and to provide a rapid inference layer for predicting query results. Our method (referred to as "Hunch") produces a lightweight LSTM network which provides a high query throughput. We evaluated our method using 12 datasets. The results show that our method predicted queries' results with a normalized root mean squared error (NRMSE) ranging from approximately 1% to 4%. Moreover, our method was able to predict up to 120,000 queries in a second (streamed together), and with a single query latency of no more than 2ms.
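For readers unfamiliar with the error metric quoted above, the following is a minimal sketch of NRMSE. The abstract does not say which normalization is used; this version assumes normalization by the range of the true values, which is one common convention (dividing by the mean is another). The function name is illustrative.

```python
import math


def nrmse(y_true, y_pred):
    """Root mean squared error divided by the range of the true values.

    Assumption: range normalization. The paper may normalize differently.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    value_range = max(y_true) - min(y_true)
    if value_range == 0:
        raise ValueError("true values have zero range; NRMSE is undefined")
    return math.sqrt(mse) / value_range
```

Under this convention, an NRMSE of 0.01 to 0.04 means the typical prediction error is about 1% to 4% of the spread of the true query results.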

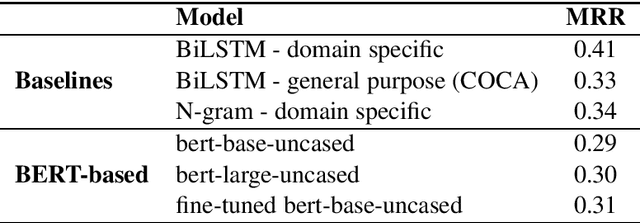

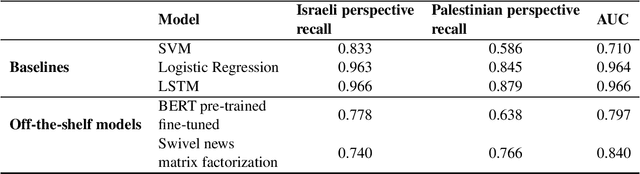

Lessons Learned from Applying off-the-shelf BERT: There is no Silver Bullet

Sep 18, 2020

Abstract: One of the challenges in the NLP field is training large classification models, a task that is both difficult and tedious. It is even harder when GPU hardware is unavailable. The increased availability of pre-trained and off-the-shelf word embeddings, models, and modules aims at easing the process of training large models and achieving competitive performance. We explore the use of off-the-shelf BERT models, share the results of our experiments, and compare them to those of LSTM networks and simpler baselines. We show that the complexity and computational cost of BERT is not a guarantee for enhanced predictive performance in the classification tasks at hand.
