Rami Puzis

FAA Framework: A Large Language Model-Based Approach for Credit Card Fraud Investigations

Jun 13, 2025

Abstract:The continuous growth of the e-commerce industry attracts fraudsters who exploit stolen credit card details. Companies often investigate suspicious transactions in order to retain customer trust and address gaps in their fraud detection systems. However, analysts are overwhelmed with an enormous number of alerts from credit card transaction monitoring systems. Each alert investigation demands careful attention, specialized knowledge, and precise documentation of the outcomes from fraud analysts, leading to alert fatigue. To address this, we propose a fraud analyst assistant (FAA) framework, which employs multi-modal large language models (LLMs) to automate credit card fraud investigations and generate explanatory reports. The FAA framework leverages the reasoning, code execution, and vision capabilities of LLMs to conduct planning, evidence collection, and analysis in each investigation step. A comprehensive empirical evaluation of 500 credit card fraud investigations demonstrates that the FAA framework produces reliable and efficient investigations comprising seven steps on average. We found that the FAA framework can automate large parts of the workload and help reduce the challenges faced by fraud analysts.

The Information Security Awareness of Large Language Models

Nov 20, 2024

Abstract:The popularity of large language models (LLMs) continues to increase, and LLM-based assistants have become ubiquitous, assisting people of diverse backgrounds in many aspects of life. Significant resources have been invested in the safety of LLMs and their alignment with social norms. However, research examining their behavior from the information security awareness (ISA) perspective is lacking. Chatbots and LLM-based assistants may put unwitting users in harm's way by facilitating unsafe behavior. We observe that the ISA inherent in some of today's most popular LLMs varies significantly, with most models requiring user prompts with a clear security context to utilize their security knowledge and provide safe responses to users. Based on this observation, we created a comprehensive set of 30 scenarios to assess the ISA of LLMs. These scenarios benchmark the evaluated models with respect to all focus areas defined in a mobile ISA taxonomy. Among our findings is that ISA is mildly affected by changing the model's temperature, whereas adjusting the system prompt can substantially impact it. This underscores the necessity of setting the right system prompt to mitigate ISA weaknesses. Our findings also highlight the importance of ISA assessment for the development of future LLM-based assistants.

Assessment and manipulation of latent constructs in pre-trained language models using psychometric scales

Sep 29, 2024

Abstract:Human-like personality traits have recently been discovered in large language models, raising the hypothesis that their (known and as yet undiscovered) biases conform with human latent psychological constructs. While large conversational models may be tricked into answering psychometric questionnaires, the latent psychological constructs of thousands of simpler transformers, trained for other tasks, cannot be assessed because appropriate psychometric methods are currently lacking. Here, we show how standard psychological questionnaires can be reformulated into natural language inference prompts, and we provide a code library to support the psychometric assessment of arbitrary models. We demonstrate, using a sample of 88 publicly available models, the existence of human-like mental health-related constructs (including anxiety, depression, and Sense of Coherence) which conform with standard theories in human psychology and show similar correlations and mitigation strategies. The ability to interpret and rectify the performance of language models by using psychological tools can boost the development of more explainable, controllable, and trustworthy models.

GeNet: A Multimodal LLM-Based Co-Pilot for Network Topology and Configuration

Jul 11, 2024

Abstract:Communication network engineering in enterprise environments is traditionally a complex, time-consuming, and error-prone manual process. Most research on network engineering automation has concentrated on configuration synthesis, often overlooking changes in the physical network topology. This paper introduces GeNet, a multimodal co-pilot for enterprise network engineers. GeNet is a novel framework that leverages a large language model (LLM) to streamline network design workflows. It uses visual and textual modalities to interpret and update network topologies and device configurations based on user intents. GeNet was evaluated on enterprise network scenarios adapted from Cisco certification exercises. Our results demonstrate GeNet's ability to interpret network topology images accurately, potentially reducing network engineers' efforts and accelerating network design processes in enterprise environments. Furthermore, we show the importance of precise topology understanding when handling intents that require modifications to the network's topology.

ReMark: Receptive Field based Spatial WaterMark Embedding Optimization using Deep Network

May 11, 2023

Abstract:Watermarking is one of the most important copyright protection tools for digital media. The most challenging type of watermarking is the imperceptible one, which embeds identifying information in the data while retaining the latter's original quality. To fulfill their purpose, watermarks need to withstand various distortions whose goal is to damage their integrity. In this study, we investigate a novel deep learning-based architecture for embedding imperceptible watermarks. The key insight guiding our architecture design is the need to correlate the dimensions of our watermarks with the sizes of the receptive fields (RF) of the modules of our architecture. This adaptation makes our watermarks more robust, while also enabling us to generate them in a way that better maintains image quality. Extensive evaluations on a wide variety of distortions show that the proposed method is robust against the most common distortions applied to watermarks, including collusive distortion.

Cross Version Defect Prediction with Class Dependency Embeddings

Dec 29, 2022

Abstract:Software Defect Prediction aims at predicting which software modules are most likely to contain defects. The idea behind this approach is to save time during the development process by helping find bugs early. Defect Prediction models are based on historical data. Specifically, one can use data collected from past software distributions, or versions, of the same target application under analysis. Defect Prediction based on past versions is called Cross Version Defect Prediction (CVDP). Traditionally, Static Code Metrics are used to predict defects. In this work, we use the Class Dependency Network (CDN) as another predictor for defects, combined with static code metrics. CDN data contains structural information about the target application being analyzed. Usually, CDN data is analyzed using different handcrafted network measures, like Social Network metrics. Our approach uses network embedding techniques to leverage CDN information without having to build the metrics manually. In order to use the embeddings between versions, we incorporate different embedding alignment techniques. To evaluate our approach, we performed experiments on 24 software release pairs and compared it against several benchmark methods. In these experiments, we analyzed the performance of two different graph embedding techniques, three anchor selection approaches, and two alignment techniques. We also built a meta-model based on two different embeddings and achieved a statistically significant improvement in AUC of 4.7% (p < 0.002) over the baseline method.

Can one hear the position of nodes?

Nov 10, 2022

Abstract:Wave propagation through nodes and links of a network forms the basis of spectral graph theory. Nevertheless, the sound emitted by nodes within the resonating chamber formed by a network is not well studied. The sound emitted by vibrations of individual nodes reflects not only the structure of the overall network topology but also the location of the node within the network. In this article, a sound recognition neural network is trained to infer centrality measures from the nodes' waveforms. In addition to advancing network representation learning, sounds emitted by nodes are plausible in most cases. Auralization of the network topology may open new directions in arts, competing with network visualization.

Fake News Data Collection and Classification: Iterative Query Selection for Opaque Search Engines with Pseudo Relevance Feedback

Dec 23, 2020

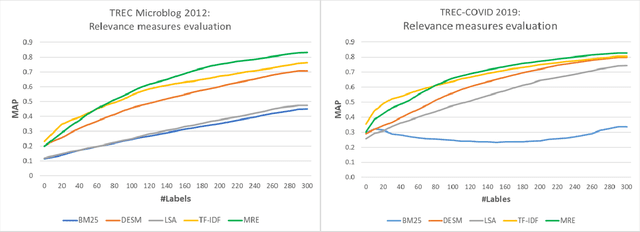

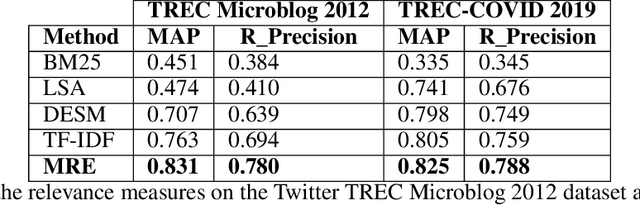

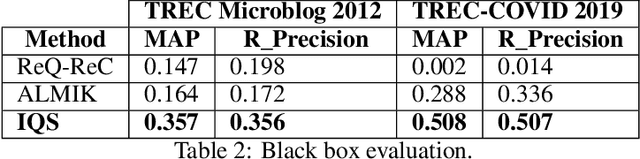

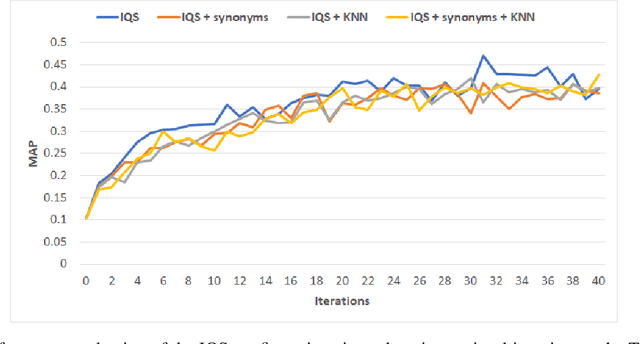

Abstract:Retrieving information from an online search engine is the first and most important step in many data mining tasks. Most of the search engines currently available on the web, including all social media platforms, are black boxes (a.k.a. opaque) supporting short keyword queries. In these settings, automatically retrieving all posts and comments discussing a particular news item at large scale is a challenging task. In this paper, we propose a method for generating short keyword queries given a prototype document. The proposed algorithm interacts with the opaque search engine to iteratively improve the query. It is evaluated on the Twitter TREC Microblog 2012 and TREC-COVID 2019 datasets, showing superior performance compared to the state of the art, and is applied to automatically collect a large-scale dataset for training machine learning classifiers for fake news detection. The classifiers, trained on 70,000 labeled news items and more than 61 million associated tweets automatically collected using the proposed method, obtained an AUC of 0.92 and an accuracy of 0.86.

Predicting traffic overflows on private peering

Oct 03, 2020

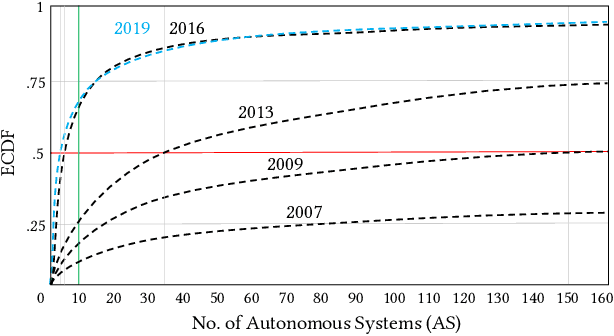

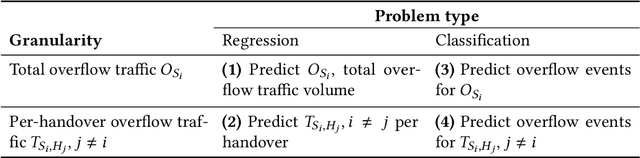

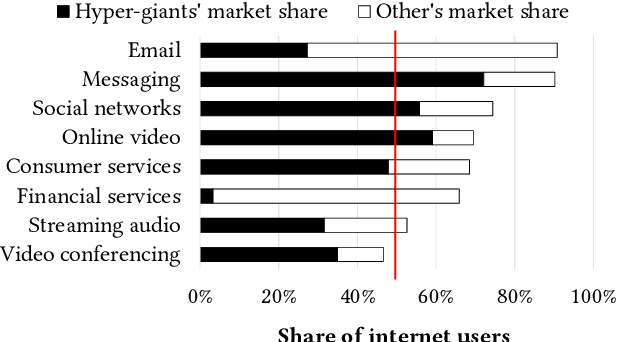

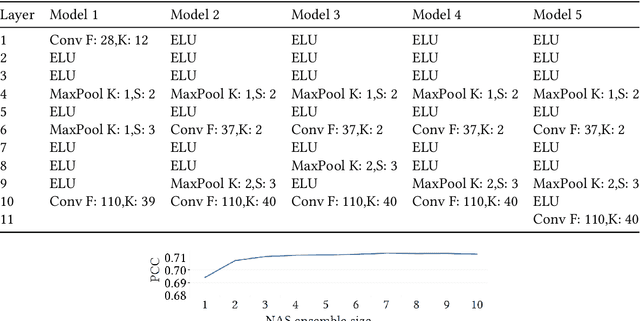

Abstract:Large content providers and content distribution network operators usually connect with large Internet service providers (eyeball networks) through dedicated private peering. The capacity of these private network interconnects is provisioned to match the volume of the real content demand by the users. Unfortunately, in case of a surge in traffic demand, for example due to content trending in a certain country, the capacity of the private interconnect may be depleted and the content provider/distributor would have to reroute the excess traffic through transit providers. Although such overflow events are rare, they have significant negative impacts on content providers, Internet service providers, and end-users. These include unexpected delays and disruptions reducing the user experience quality, as well as direct costs paid by the Internet service provider to the transit providers. If the traffic overflow events could be predicted, the Internet service providers would be able to influence the routes chosen for the excess traffic to reduce the costs and increase user experience quality. In this article, we propose a method based on an ensemble of deep learning models to predict overflow events over a short-term horizon of 2-6 hours and to predict the specific interconnections that will ingress the overflow traffic. The method was evaluated with 2.5 years of traffic measurement data from a large European Internet service provider, resulting in a true-positive rate of 0.8 while maintaining a 0.05 false-positive rate. The lockdown imposed by the COVID-19 pandemic reduced the overflow prediction accuracy. Nevertheless, starting from the end of April 2020 with the gradual lockdown release, the old models trained before the pandemic perform equally well.

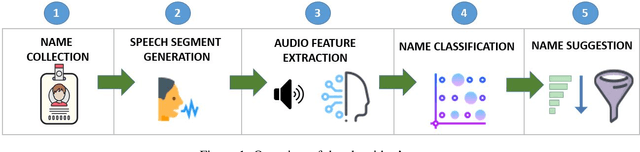

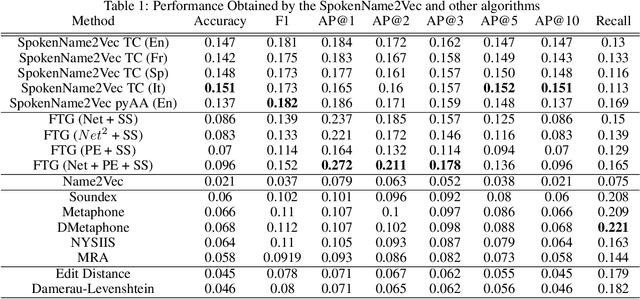

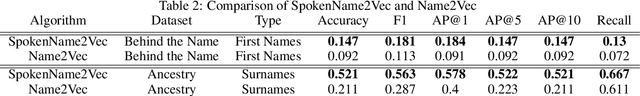

How Does That Sound? Multi-Language SpokenName2Vec Algorithm Using Speech Generation and Deep Learning

May 24, 2020

Abstract:Searching for information about a specific person is an online activity frequently performed by many users. In most cases, users submit queries containing a name to web search engines in order to find what they are looking for. Typically, web search engines provide just a few accurate results associated with a name-containing query. Currently, most solutions for suggesting synonyms in online search are based on pattern matching and phonetic encoding; however, the performance of such solutions is very often less than optimal. In this paper, we propose SpokenName2Vec, a novel and generic approach which addresses the similar name suggestion problem by utilizing automated speech generation and deep learning to produce spoken name embeddings. These embeddings capture the way people pronounce names in any language and accent. Utilizing the name pronunciation can be helpful for both differentiating and detecting names that sound alike but are written differently. The proposed approach was demonstrated on a large-scale dataset consisting of 250,000 forenames and evaluated using a machine learning classifier and 7,399 names with their verified synonyms. The performance of the proposed approach was found to be superior to 12 other algorithms evaluated in this study, including widely used phonetic and string similarity algorithms, and two recently proposed algorithms. The results obtained suggest that the proposed approach could serve as a useful and valuable tool for solving the similar name suggestion problem.
