Lingxue Song

LongVPO: From Anchored Cues to Self-Reasoning for Long-Form Video Preference Optimization

Feb 02, 2026

Abstract: We present LongVPO, a novel two-stage Direct Preference Optimization framework that enables short-context vision-language models to robustly understand ultra-long videos without any long-video annotations. In Stage 1, we synthesize preference triples by anchoring questions to individual short clips, interleaving them with distractors, and applying visual-similarity and question-specificity filtering to mitigate positional bias and ensure unambiguous supervision. We also approximate the reference model's scoring over long contexts by evaluating only the anchor clip, reducing computational overhead. In Stage 2, we employ a recursive captioning pipeline on long videos to generate scene-level metadata, then use a large language model to craft multi-segment reasoning queries and dispreferred responses, aligning the model's preferences through multi-segment reasoning tasks. With only 16K synthetic examples and no costly human labels, LongVPO outperforms the state-of-the-art open-source models on multiple long-video benchmarks, while maintaining strong short-video performance (e.g., on MVBench), offering a scalable paradigm for efficient long-form video understanding.
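The Stage 1 objective can be illustrated with the standard Direct Preference Optimization loss for a single preference triple. This is a minimal sketch, not the authors' implementation: the function name `dpo_loss`, its parameters, and the default `beta` are assumptions. The only LongVPO-specific idea shown is that the two reference log-probabilities are assumed to come from scoring the anchor clip alone, while the policy log-probabilities come from the full interleaved context.

```python
import math


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Standard DPO loss for one (question, preferred, dispreferred) triple.

    All four arguments are summed log-probabilities of a response.
    Assumption for illustration: ref_chosen and ref_rejected were computed
    by the reference model on the anchor clip only, which is cheaper than
    scoring the full long context.
    """
    # Implicit reward margin between preferred and dispreferred responses.
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # -log(sigmoid(margin)), written in a numerically stable form.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

When the policy and the reference agree exactly, the margin is zero and the loss equals log 2; the loss falls as the policy raises the preferred response relative to the dispreferred one.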

Occlusion Robust Face Recognition Based on Mask Learning with Pairwise Differential Siamese Network

Aug 17, 2019

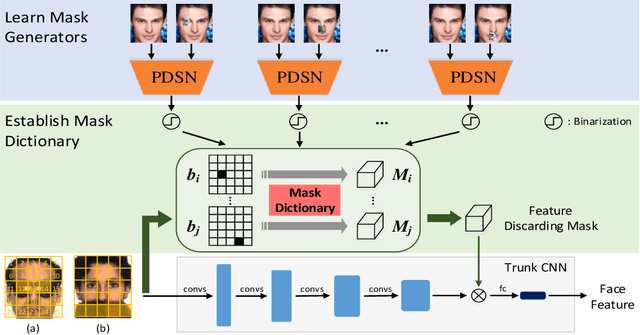

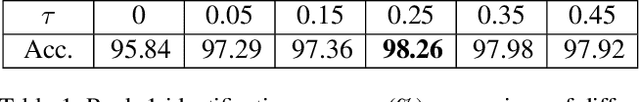

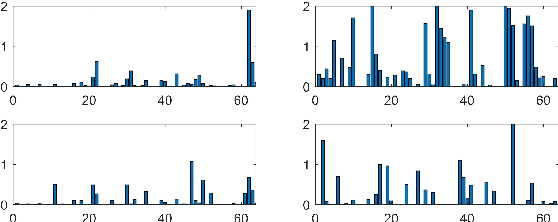

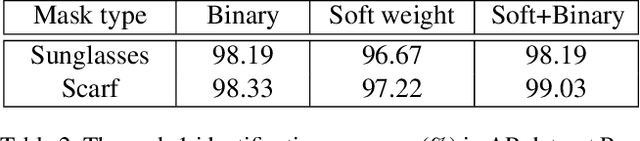

Abstract: Deep Convolutional Neural Networks (CNNs) have been pushing the frontier of face recognition research in the past years. However, existing general CNN face models generalize poorly to the scenario of occlusions on variable facial areas. Inspired by the fact that a human visual system explicitly ignores occlusions and only focuses on non-occluded facial areas, we propose a mask learning strategy to find and discard the corrupted feature elements for face recognition. A mask dictionary is first established by exploiting the differences between the top convolutional features of occluded and occlusion-free face pairs using an innovatively designed Pairwise Differential Siamese Network (PDSN). Each item of this dictionary captures the correspondence between occluded facial areas and corrupted feature elements, and is named a Feature Discarding Mask (FDM). When dealing with a face image with random partial occlusions, we generate its FDM by combining relevant dictionary items and then multiply it with the original features to eliminate those corrupted feature elements. Comprehensive experiments on both synthesized and realistic occluded face datasets show that the proposed approach significantly outperforms state-of-the-art methods.
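The test-time masking step described in the abstract can be sketched as follows. This is a hedged illustration only: the abstract does not say how dictionary items are combined, so the element-wise minimum used here (a feature element survives only if every relevant mask keeps it) is an assumption, as are the function name `apply_fdm`, the block names, and the flat-list feature layout.

```python
def apply_fdm(features, mask_dictionary, occluded_blocks):
    """Zero out feature elements that the mask dictionary marks as corrupted.

    features:         flat list of feature values for one face image
    mask_dictionary:  hypothetical mapping from a facial block name to a
                      0/1 mask with the same length as `features`
    occluded_blocks:  names of the blocks detected as occluded
    """
    # Start from an all-ones mask, i.e. keep every element.
    fdm = [1.0] * len(features)
    for block in occluded_blocks:
        # Assumed combination rule: element-wise minimum of the masks.
        fdm = [min(m, d) for m, d in zip(fdm, mask_dictionary[block])]
    # Multiply the combined mask with the original features.
    return [f * m for f, m in zip(features, fdm)]
```

For example, with masks `{"left_eye": [0, 1, 1, 1], "mouth": [1, 1, 0, 1]}` and both blocks occluded, the first and third feature elements are discarded and the remaining ones pass through unchanged.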
