Ling Shao

Terminus Group, Beijing, China

From Zero-shot Learning to Conventional Supervised Classification: Unseen Visual Data Synthesis

May 04, 2017

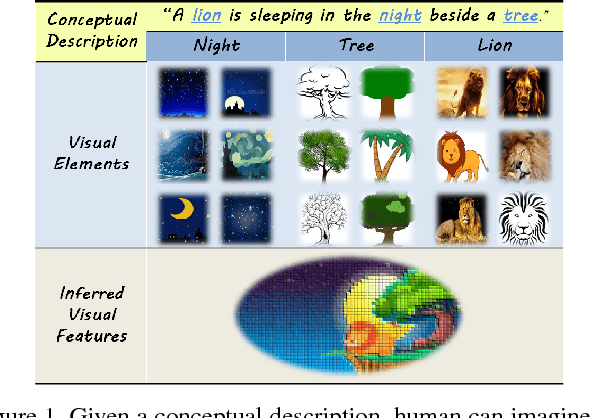

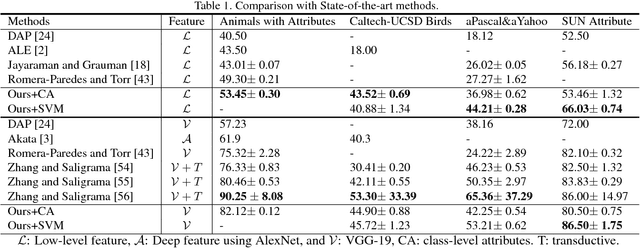

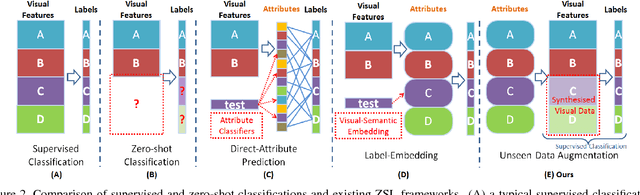

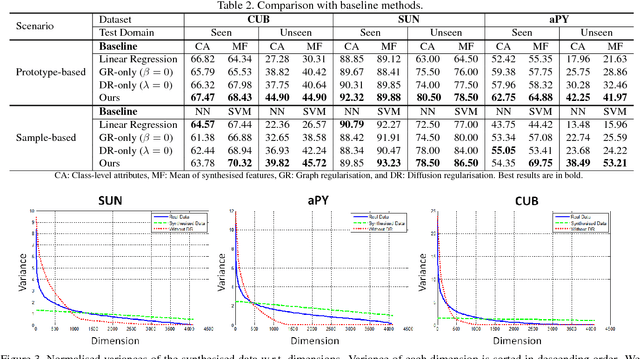

Abstract: Robust object recognition systems usually rely on powerful feature extraction mechanisms from a large number of real images. However, in many realistic applications, collecting sufficient images for ever-growing new classes is unattainable. In this paper, we propose a new zero-shot learning (ZSL) framework that can synthesise visual features for unseen classes without acquiring real images. Using the proposed Unseen Visual Data Synthesis (UVDS) algorithm, semantic attributes are effectively utilised as an intermediate clue to synthesise unseen visual features at the training stage. Thereafter, ZSL recognition is converted into a conventional supervised problem, i.e. the synthesised visual features can be fed directly to typical classifiers such as SVM. On four benchmark datasets, we demonstrate the benefit of using synthesised unseen data. Extensive experimental results suggest that our proposed approach significantly improves on the state-of-the-art results.

Deep Sketch Hashing: Fast Free-hand Sketch-Based Image Retrieval

Mar 16, 2017

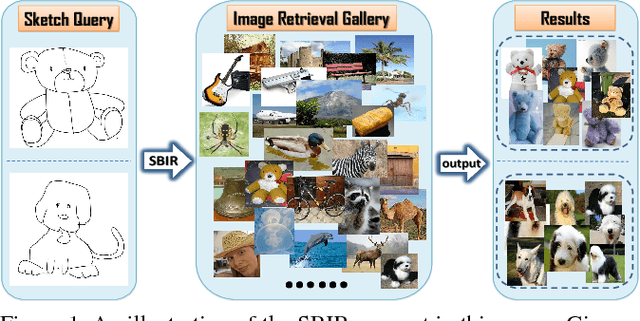

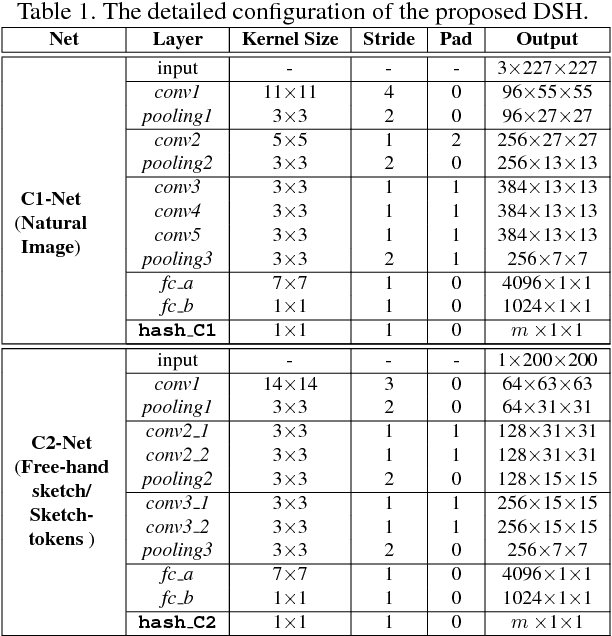

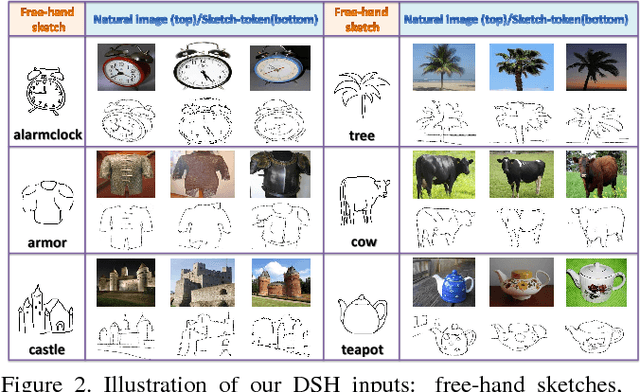

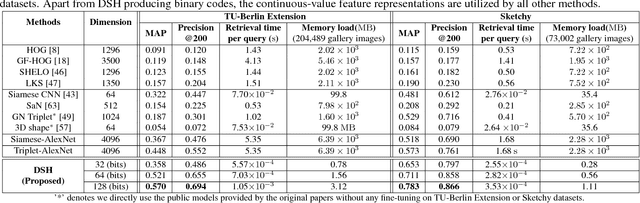

Abstract: Free-hand sketch-based image retrieval (SBIR) is a specific cross-view retrieval task, in which queries are abstract and ambiguous sketches while the retrieval database is formed with natural images. Work in this area mainly focuses on extracting representative and shared features for sketches and natural images. However, these methods neither cope well with the geometric distortion between sketches and images nor scale to large SBIR tasks, because of the heavy continuous-valued distance computation. In this paper, we speed up SBIR by introducing a novel binary coding method, named Deep Sketch Hashing (DSH), where a semi-heterogeneous deep architecture is proposed and incorporated into an end-to-end binary coding framework. Specifically, three convolutional neural networks are utilized to encode free-hand sketches, natural images and, especially, the auxiliary sketch-tokens which are adopted as bridges to mitigate the sketch-image geometric distortion. The learned DSH codes can effectively capture the cross-view similarities as well as the intrinsic semantic correlations between different categories. To the best of our knowledge, DSH is the first hashing work specifically designed for category-level SBIR with an end-to-end deep architecture. The proposed DSH is comprehensively evaluated on two large-scale datasets, TU-Berlin Extension and Sketchy, and the experiments consistently show DSH's superior SBIR accuracies over several state-of-the-art methods, while achieving significantly reduced retrieval time and memory footprint.
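The retrieval-time and memory claim rests on comparing binary codes with Hamming distance instead of continuous-valued distances. The following is a minimal sketch of that retrieval step only, not of the DSH networks themselves. The helper names (`pack_bits`, `hamming`, `rank_by_hamming`) and the 8-bit example codes are hypothetical and chosen for illustration; in practice the codes would come from a trained model.

```python
def pack_bits(bits):
    """Pack a sequence of 0/1 values into a single Python int."""
    code = 0
    for b in bits:
        code = (code << 1) | (1 if b else 0)
    return code


def hamming(a, b):
    """Count the bit positions in which two packed codes differ."""
    return bin(a ^ b).count("1")


def rank_by_hamming(query_code, database_codes):
    """Return database indices sorted by Hamming distance to the query."""
    return sorted(
        range(len(database_codes)),
        key=lambda i: hamming(query_code, database_codes[i]),
    )


# Hypothetical 8-bit codes standing in for hashed natural images.
database = [
    pack_bits([1, 0, 1, 1, 0, 0, 1, 0]),
    pack_bits([0, 1, 0, 0, 1, 1, 0, 1]),
    pack_bits([1, 0, 1, 0, 0, 0, 1, 0]),
]
# Hypothetical code standing in for a hashed sketch query.
query = pack_bits([1, 0, 1, 1, 0, 0, 1, 1])

ranking = rank_by_hamming(query, database)
print(ranking)
```

Each comparison is one XOR and a bit count, and each database item is stored as a few bytes rather than a floating-point vector, which is the general reason hashing-based retrieval tends to be cheaper than nearest-neighbour search over real-valued features.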

DAVE: A Unified Framework for Fast Vehicle Detection and Annotation

Aug 01, 2016

Abstract: Vehicle detection and annotation for streaming video data with complex scenes is an interesting but challenging task for urban traffic surveillance. In this paper, we present a fast framework of Detection and Annotation for Vehicles (DAVE), which effectively combines vehicle detection and attribute annotation. DAVE consists of two convolutional neural networks (CNNs): a fast vehicle proposal network (FVPN) for extracting vehicle-like objects and an attributes learning network (ALN) that verifies each proposal and infers each vehicle's pose, color and type simultaneously. These two nets are jointly optimized so that abundant latent knowledge learned from the ALN can be exploited to guide FVPN training. Once the system is trained, it can achieve efficient vehicle detection and annotation for real-world traffic surveillance data. We evaluate DAVE on a new self-collected UTS dataset and the public PASCAL VOC2007 car and LISA 2010 datasets, with consistent improvements over existing algorithms.

DAP3D-Net: Where, What and How Actions Occur in Videos?

Feb 10, 2016

Abstract: Action parsing in videos with complex scenes is an interesting but challenging task in computer vision. In this paper, we propose a generic 3D convolutional neural network in a multi-task learning manner for effective Deep Action Parsing (DAP3D-Net) in videos. In particular, in the training phase, action localization, classification and attributes learning can be jointly optimized on our appearance-motion data via DAP3D-Net. For an upcoming test video, we can describe each individual action in the video simultaneously as: Where the action occurs, What the action is and How the action is performed. To demonstrate the effectiveness of the proposed DAP3D-Net, we also contribute a new Numerous-category Aligned Synthetic Action dataset, i.e., NASA, which consists of 200,000 action clips of more than 300 categories and with 33 pre-defined action attributes in two hierarchical levels (i.e., low-level attributes of basic body part movements and high-level attributes related to action motion). We learn DAP3D-Net using the NASA dataset and then evaluate it on our collected Human Action Understanding (HAU) dataset. Experimental results show that our approach can accurately localize, categorize and describe multiple actions in realistic videos.

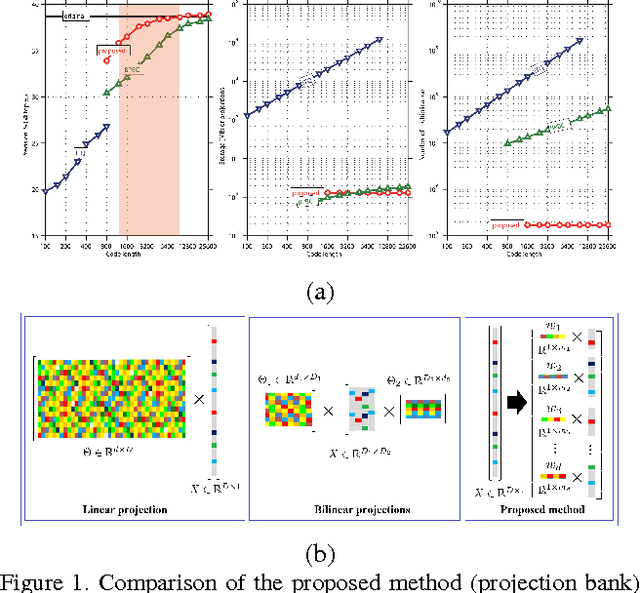

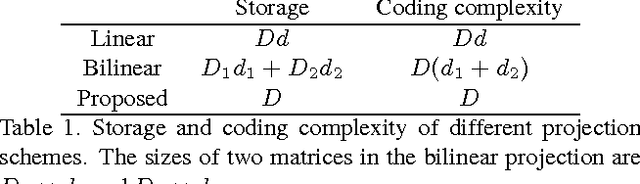

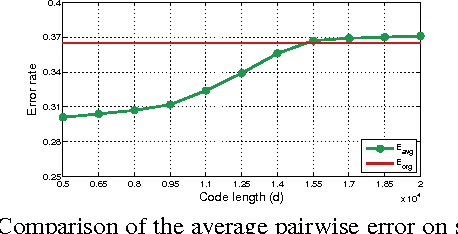

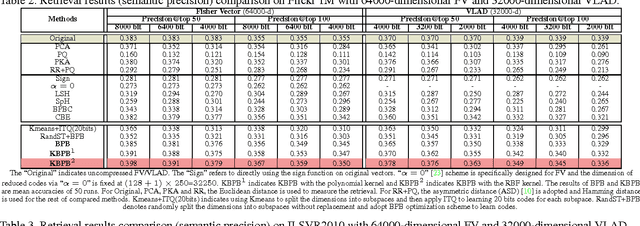

Projection Bank: From High-dimensional Data to Medium-length Binary Codes

Sep 16, 2015

Abstract: Recently, very high-dimensional feature representations, e.g., Fisher Vector, have achieved excellent performance for visual recognition and retrieval. However, these lengthy representations incur extremely heavy computational and storage costs and even become infeasible in some large-scale applications. A few existing techniques can transfer very high-dimensional data into binary codes, but they still require the reduced code length to be relatively long to maintain acceptable accuracies. To target a better balance between computational efficiency and accuracy, in this paper, we propose a novel embedding method called Binary Projection Bank (BPB), which can effectively reduce the very high-dimensional representations to medium-dimensional binary codes without sacrificing accuracy. Instead of using conventional single linear or bilinear projections, the proposed method learns a bank of small projections via the max-margin constraint to optimally preserve the intrinsic data similarity. We have systematically evaluated the proposed method on three datasets: Flickr 1M, ILSVRC2010 and UCF101, showing competitive retrieval and recognition accuracies compared with state-of-the-art approaches, but with a significantly smaller memory footprint and lower coding complexity.
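To make the idea of a "bank of small projections" concrete, here is a rough sketch under strong assumptions. It splits the input dimensions into contiguous groups and lets each group produce one bit through its own small projection. The abstract says the projections are learned under a max-margin constraint; this sketch substitutes random Gaussian weights and a contiguous split, both of which are illustrative assumptions rather than the paper's procedure. The function names `make_projection_bank` and `encode` are hypothetical.

```python
import random


def make_projection_bank(dim, n_bits, seed=0):
    """Build n_bits small projections, each covering one slice of the input.

    Assumption: contiguous slices and random weights stand in for the
    learned, max-margin projections described in the abstract.
    """
    rng = random.Random(seed)
    chunk = dim // n_bits
    bank = []
    for k in range(n_bits):
        start = k * chunk
        # The last slice absorbs any leftover dimensions.
        end = dim if k == n_bits - 1 else start + chunk
        weights = [rng.gauss(0.0, 1.0) for _ in range(end - start)]
        bank.append((start, end, weights))
    return bank


def encode(x, bank):
    """Map a real-valued vector to one bit per small projection."""
    bits = []
    for start, end, weights in bank:
        score = sum(w * v for w, v in zip(weights, x[start:end]))
        bits.append(1 if score >= 0 else 0)
    return bits


dim, n_bits = 12, 4
bank = make_projection_bank(dim, n_bits)
x = [0.5, -1.2, 0.3, 2.0, -0.7, 0.1, 1.5, -0.4, 0.9, -2.1, 0.6, 0.2]
code = encode(x, bank)

# A single dense projection would need dim * n_bits weights;
# the bank needs only dim weights in total.
n_params_bank = sum(len(w) for _, _, w in bank)
print(code, n_params_bank, dim * n_bits)
```

Under these assumptions the bank stores one weight per input dimension, whereas a single full linear projection to the same code length stores one weight per dimension per bit, which is one way to read the claim of a smaller memory footprint and lower coding complexity.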

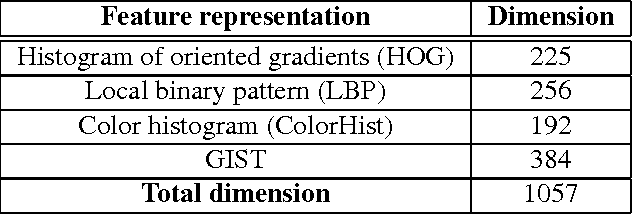

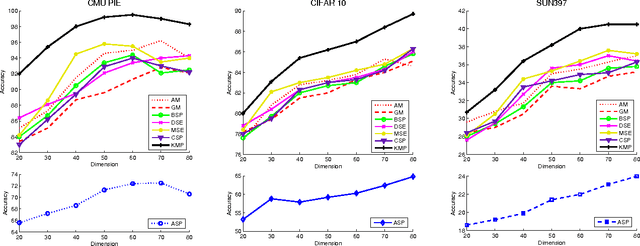

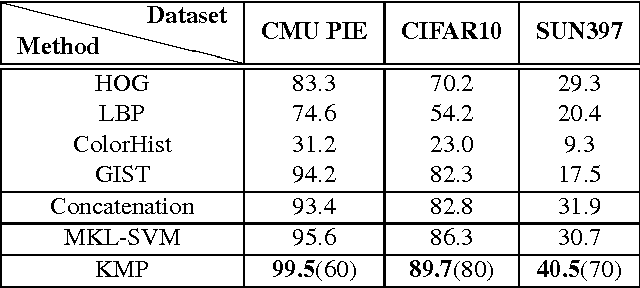

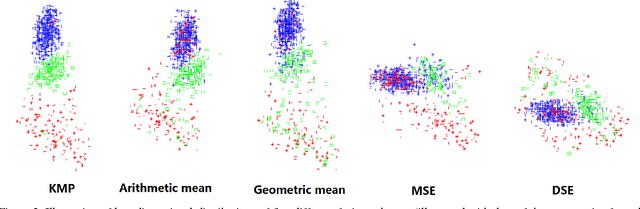

Kernelized Multiview Projection

Aug 04, 2015

Abstract: Conventional vision algorithms adopt a single type of feature or a simple concatenation of multiple features, which is always represented in a high-dimensional space. In this paper, we propose a novel unsupervised spectral embedding algorithm called Kernelized Multiview Projection (KMP) to better fuse and embed different feature representations. Computing the kernel matrices from different features/views, KMP can encode them with the corresponding weights to achieve a low-dimensional and semantically meaningful subspace where the distribution of each view is sufficiently smooth and discriminative. More crucially, KMP is linear in the reproducing kernel Hilbert space (RKHS) and solves the out-of-sample problem, which makes it suitable for various practical applications. Extensive experiments on three popular image datasets demonstrate the effectiveness of our multiview embedding algorithm.
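The fusion step mentioned above, computing one kernel matrix per view and combining them with per-view weights, can be sketched as follows. This covers only the weighted combination, with fixed hypothetical weights and RBF kernels chosen for illustration; the abstract does not specify the kernel type, and KMP's weight learning and subsequent embedding are not shown. The names `rbf_kernel` and `fuse_kernels` are assumptions.

```python
import math


def rbf_kernel(samples, gamma):
    """Compute an RBF kernel matrix for a list of feature vectors."""
    n = len(samples)
    kernel = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            sq_dist = sum((a - b) ** 2 for a, b in zip(samples[i], samples[j]))
            kernel[i][j] = math.exp(-gamma * sq_dist)
    return kernel


def fuse_kernels(kernels, weights):
    """Return the weighted sum of kernel matrices, with weights normalised to 1."""
    if len(kernels) != len(weights):
        raise ValueError("need exactly one weight per kernel")
    total = sum(weights)
    n = len(kernels[0])
    fused = [[0.0] * n for _ in range(n)]
    for kernel, weight in zip(kernels, weights):
        for i in range(n):
            for j in range(n):
                fused[i][j] += (weight / total) * kernel[i][j]
    return fused


# Two hypothetical views of the same three samples.
view_a = [[0.0, 1.0], [0.1, 0.9], [2.0, -1.0]]
view_b = [[1.0], [1.2], [-3.0]]

kernels = [rbf_kernel(view_a, gamma=0.5), rbf_kernel(view_b, gamma=0.1)]
fused = fuse_kernels(kernels, weights=[0.7, 0.3])
```

Because each view enters only through its kernel matrix, views with different dimensionalities (2-D and 1-D here) can be combined without concatenating raw features.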

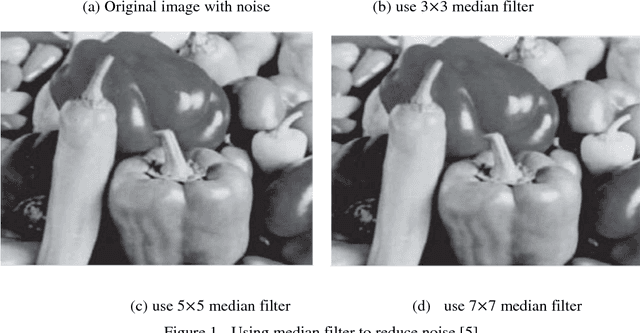

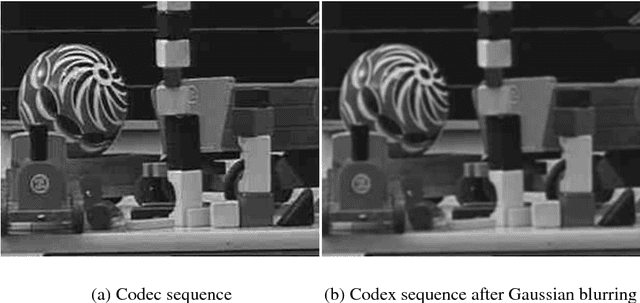

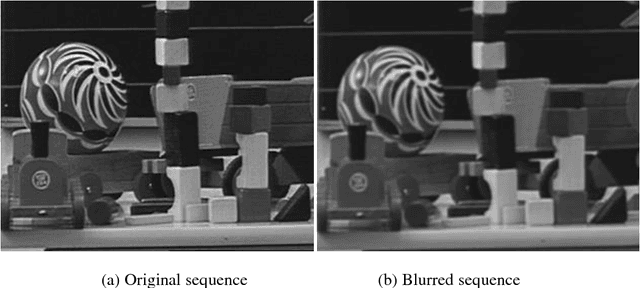

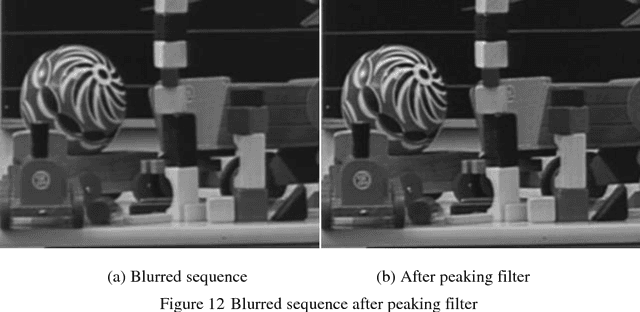

An Algorithm for Repairing Low-Quality Video Enhancement Techniques Based on Trained Filter

Mar 02, 2011

Abstract: Multifarious image enhancement algorithms have been used in different applications. Still, some algorithms or modules are imperfect for practical use. When image enhancement modules are fixed or composed of a series of algorithms, we need to repair them as a whole without changing their internals. This report aims to find an algorithm based on trained filters to repair low-quality image enhancement modules. A brief review of basic image enhancement techniques and pixel classification methods will be presented, and the procedure of trained filters will be described step by step. The experiments and result comparisons for this algorithm will be described in detail.
