Kevin Yu

Tony

Combining Geometric and Information-Theoretic Approaches for Multi-Robot Exploration

Apr 15, 2020

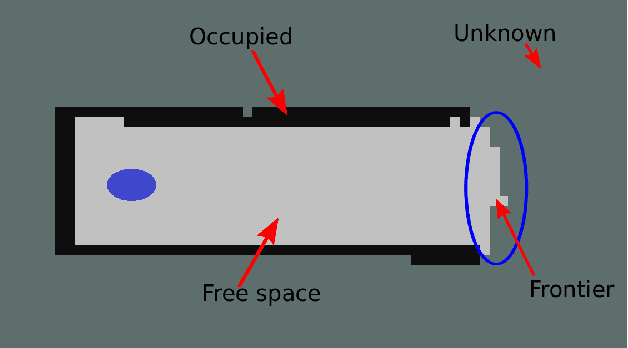

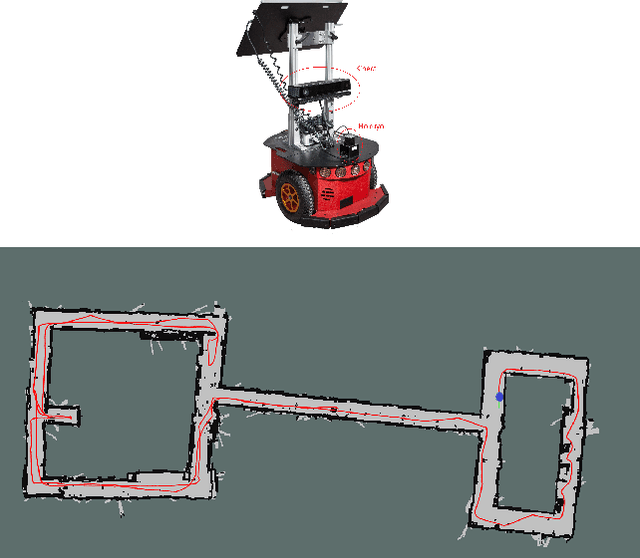

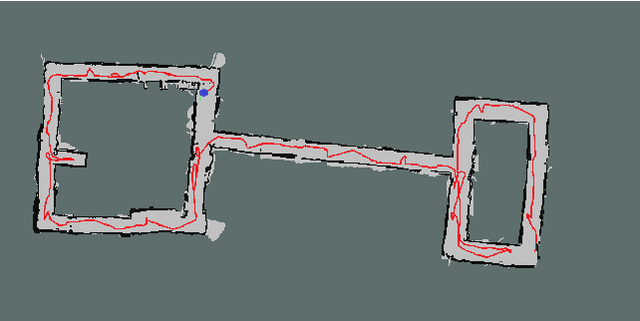

Abstract: We present an algorithm to explore an orthogonal polygon using a team of $p$ robots. This algorithm combines ideas from information-theoretic exploration algorithms and computational-geometry-based exploration algorithms. We show that the exploration time of our algorithm is competitive (as a function of $p$) with respect to the offline optimal exploration algorithm. The algorithm is based on a single-robot polygon exploration algorithm, a tree exploration algorithm for higher-level planning, and a submodular orienteering algorithm for lower-level planning. We discuss how this strategy can be adapted to real-world settings to deal with noisy sensors. In addition to theoretical analysis, we investigate the performance of our algorithm through simulations for multiple robots and experiments with a single robot.

Garbage In, Garbage Out? Do Machine Learning Application Papers in Social Computing Report Where Human-Labeled Training Data Comes From?

Dec 17, 2019

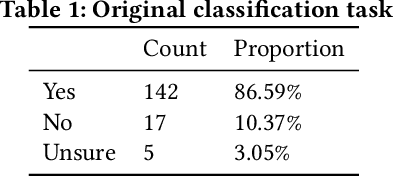

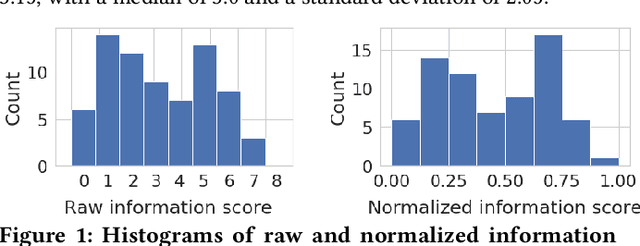

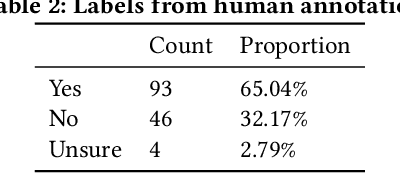

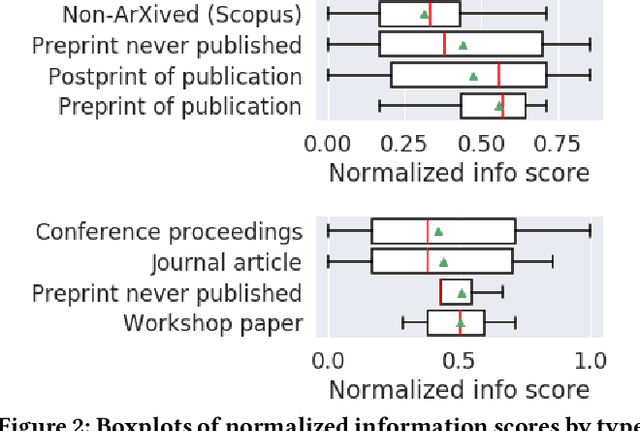

Abstract: Many machine learning projects for new application areas involve teams of humans who label data for a particular purpose, from hiring crowdworkers to the paper's authors labeling the data themselves. Such a task is quite similar to (or a form of) structured content analysis, which is a longstanding methodology in the social sciences and humanities, with many established best practices. In this paper, we investigate to what extent a sample of machine learning application papers in social computing (specifically, papers from ArXiv and traditional publications performing an ML classification task on Twitter data) give specific details about whether such best practices were followed. Our team conducted multiple rounds of structured content analysis of each paper, determining whether the paper reports who the labelers were, what their qualifications were, whether they independently labeled the same items, whether inter-rater reliability metrics were disclosed, what level of training and/or instructions were given to labelers, whether compensation for crowdworkers is disclosed, and whether the training data is publicly available. We find a wide divergence in whether such practices were followed and documented. Much of machine learning research and education focuses on what is done once a "gold standard" of training data is available, but we discuss issues around the equally important aspect of whether such data is reliable in the first place.

* 18 pages, includes appendix
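
The abstract above refers to inter-rater reliability metrics without naming one. As an illustration only, the sketch below computes Cohen's kappa, one widely used metric for two labelers; the choice of metric, the function name `cohens_kappa`, and the example labels are assumptions and are not taken from the paper.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two labelers who rated the same items.

    Illustrative only: the paper does not say which reliability
    metric it looked for; kappa is one common choice.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both labelers must rate the same non-empty item set")
    n = len(labels_a)
    # Observed agreement: fraction of items given the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each labeler's marginal label rates.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    categories = counts_a.keys() | counts_b.keys()
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if expected == 1.0:
        return 1.0  # both labelers used a single identical label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical labels from two annotators on four tweets.
annotator_1 = ["spam", "spam", "ham", "ham"]
annotator_2 = ["spam", "ham", "ham", "ham"]
print(cohens_kappa(annotator_1, annotator_2))
```

Kappa discounts the agreement two labelers would reach by chance, which is why it is often reported alongside or instead of raw percent agreement.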

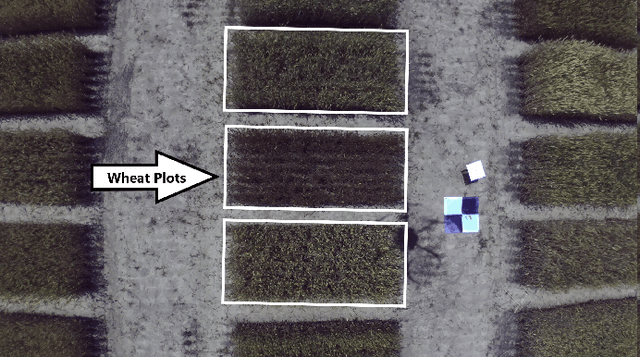

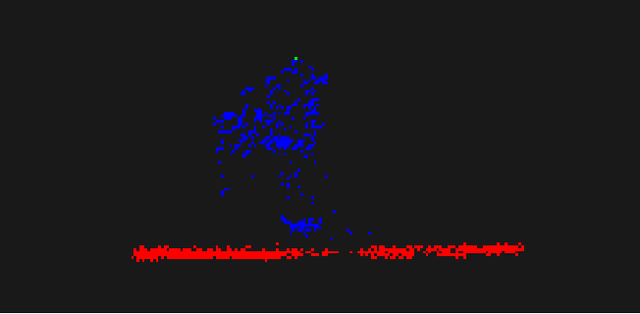

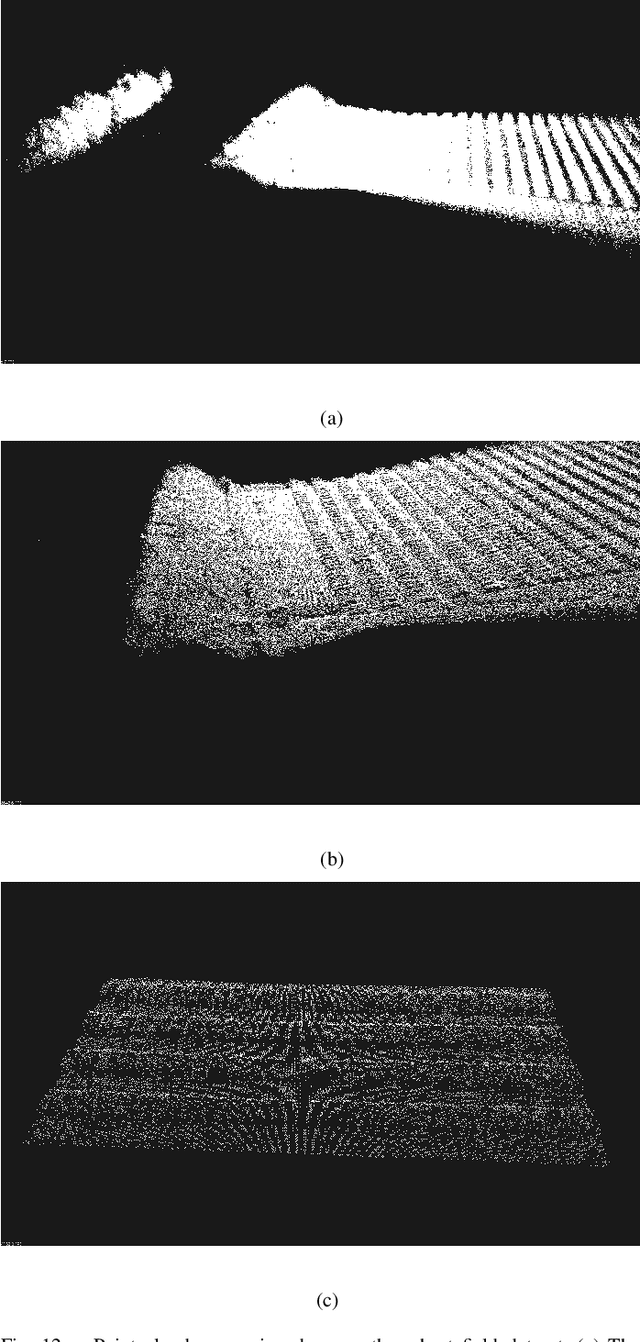

Crop Height and Plot Estimation from Unmanned Aerial Vehicles using 3D LiDAR

Oct 30, 2019

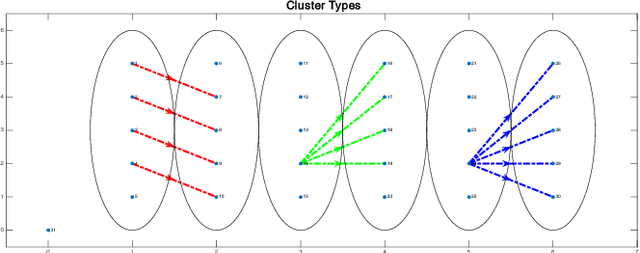

Abstract: In this paper, we present techniques to measure crop heights using a 3D LiDAR mounted on an Unmanned Aerial Vehicle (UAV). Knowing the height of plants is crucial to monitor their overall health and growth cycles, especially for high-throughput plant phenotyping. We present a methodology for extracting plant heights from 3D LiDAR point clouds, specifically focusing on row-crop environments. The key steps in our algorithm are clustering of LiDAR points to semi-automatically detect plots, local ground plane estimation, and height estimation. The plot detection uses a k-means clustering algorithm followed by a voting scheme to find the bounding boxes of individual plots. We conducted a series of experiments in controlled and natural settings. Our algorithm was able to estimate the plant heights in a field with 112 plots within ±5.36%. This is the first such dataset for 3D LiDAR from an airborne robot over a wheat field. The developed code can be found on the GitHub repository located at https://github.com/hsd1121/PointCloudProcessing.
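
To make the last two steps named in the abstract (ground estimation and height estimation) concrete, here is a minimal sketch for a single plot. It is not the authors' method: it replaces the local ground plane fit with a simple low percentile of the z values and takes the canopy as a high percentile. The function names, the percentile thresholds, and the synthetic points are all assumptions.

```python
def nearest_rank(values, pct):
    """Value at an integer percentile `pct` (0-100), using nearest rank."""
    ordered = sorted(values)
    index = (pct * (len(ordered) - 1) + 50) // 100  # integer rounding
    return ordered[index]


def estimate_plot_height(points, ground_pct=5, canopy_pct=95):
    """Rough crop height for one plot from (x, y, z) LiDAR points.

    Assumption: the plot is small and flat enough that a low z
    percentile approximates the ground and a high z percentile
    approximates the canopy top. A fitted ground plane, as the
    abstract describes, would handle sloped terrain better.
    """
    z_values = [z for _, _, z in points]
    if not z_values:
        raise ValueError("plot contains no points")
    ground = nearest_rank(z_values, ground_pct)
    canopy = nearest_rank(z_values, canopy_pct)
    return canopy - ground


# Synthetic plot: 101 points with z spread evenly from 0.00 m to 1.00 m.
plot_points = [(0.0, 0.0, i * 0.01) for i in range(101)]
print(estimate_plot_height(plot_points))
```

Using percentiles rather than the minimum and maximum makes the estimate less sensitive to stray returns below the ground or above the canopy.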

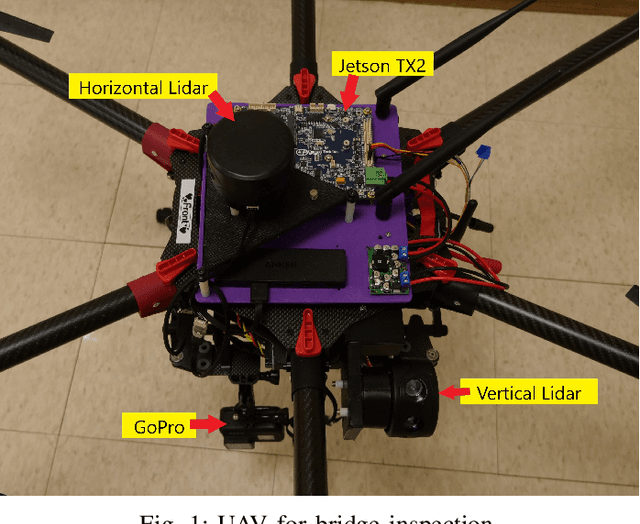

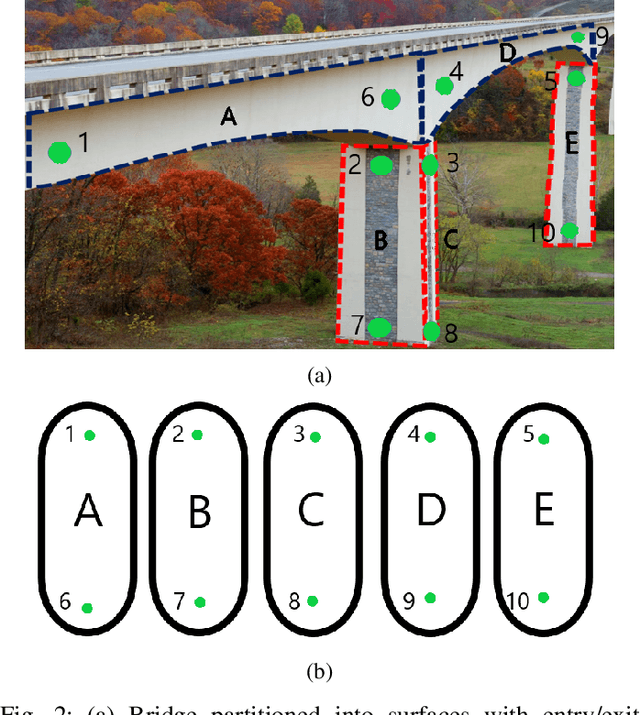

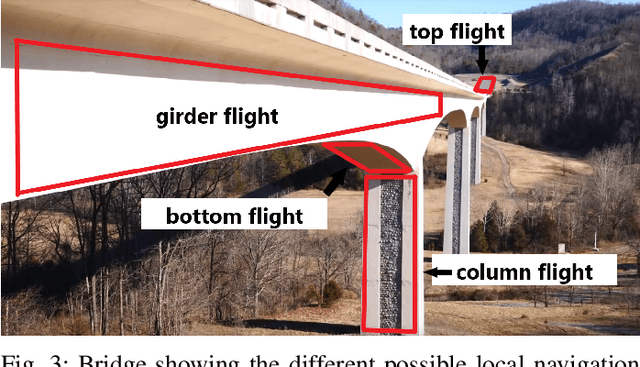

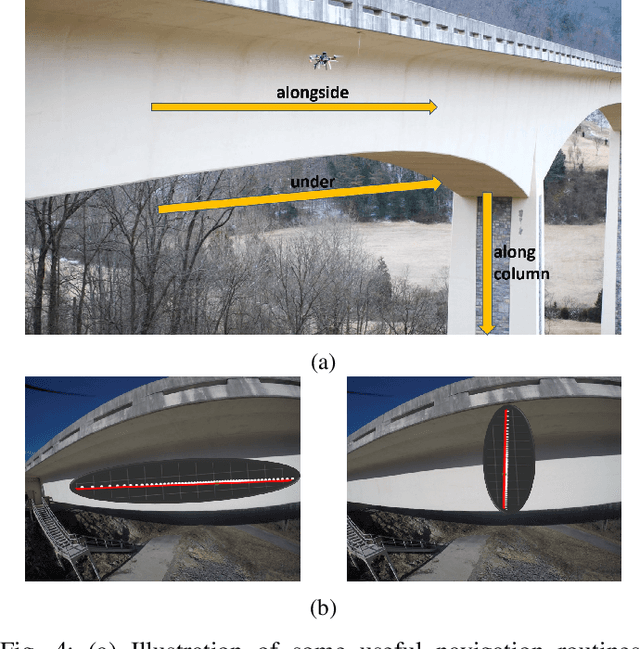

View Planning and Navigation Algorithms for Autonomous Bridge Inspection with UAVs

Oct 03, 2019

Abstract: We study the problem of infrastructure inspection using an Unmanned Aerial Vehicle (UAV) in box girder bridge environments. We consider a scenario where the UAV needs to fully inspect box girder bridges and localize along the bridge surface when standard methods like GPS and optical flow are denied. Our method for overcoming the difficulties of box girder bridges consists of creating local navigation routines, a supervisor, and a planner. The local navigation routines use two 2D Lidars for girder and column flight. To switch between local navigation routines, we implement a supervisor that dictates when the UAV is able to change routines. Lastly, we implement a planner to calculate the path along the box girder bridge that will minimize the flight time of the UAV. With local navigation routines, a supervisor, and a planner, we construct a system that can fully and autonomously inspect box girder bridges when standard methods are unavailable.

Improved visible to IR image transformation using synthetic data augmentation with cycle-consistent adversarial networks

Apr 25, 2019

Abstract: Infrared (IR) images are essential to improve the visibility of dark or camouflaged objects. Object recognition and segmentation based on a neural network using IR images provide more accuracy and insight than color visible images. The bottleneck, however, is the number of relevant IR images available for training. It is difficult to collect real-world IR images for special purposes, including space exploration, military, and fire-fighting applications. To solve this problem, we created color visible and IR images using a Unity-based 3D game editor. These synthetically generated color visible and IR images were used to train cycle-consistent adversarial networks (CycleGAN) to convert visible images to IR images. CycleGAN has the advantage that it does not require precisely matching visible and IR pairs for transformation training. In this study, we discovered that additional synthetic data can help improve CycleGAN performance. Neural network training using real data (N = 20) performed more accurate transformations than training using real (N = 10) and synthetic (N = 10) data combinations. The result indicates that the synthetic data cannot exceed the quality of the real data. Neural network training using real (N = 10) and synthetic (N = 100) data combinations showed almost the same performance as training using real data (N = 20). At least 10 times more synthetic data than real data is required to achieve the same performance. In summary, CycleGAN is used with synthetic data to improve the IR image conversion performance of visible images.

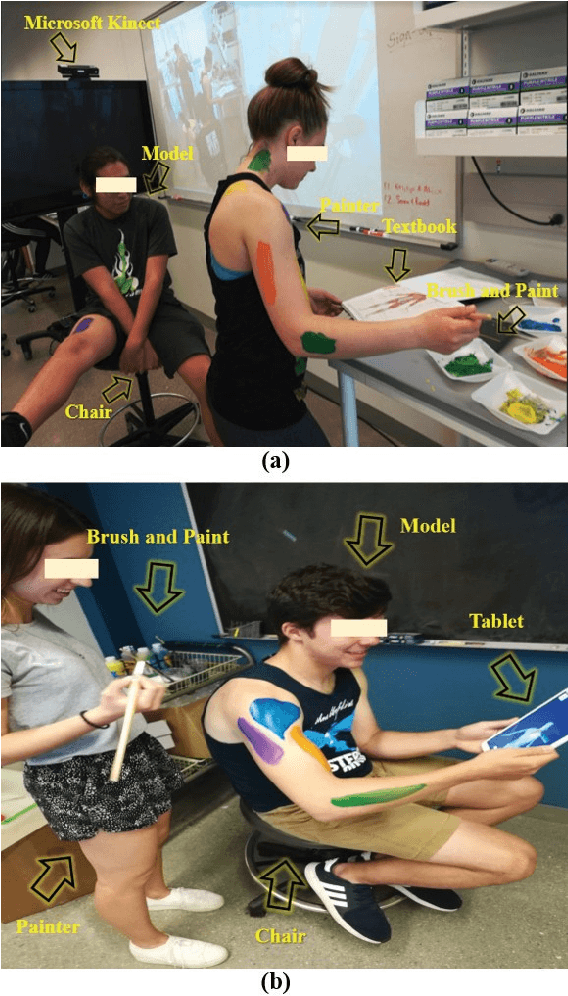

Collaboration Analysis Using Deep Learning

Apr 17, 2019

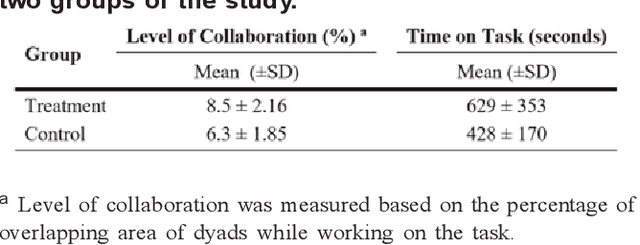

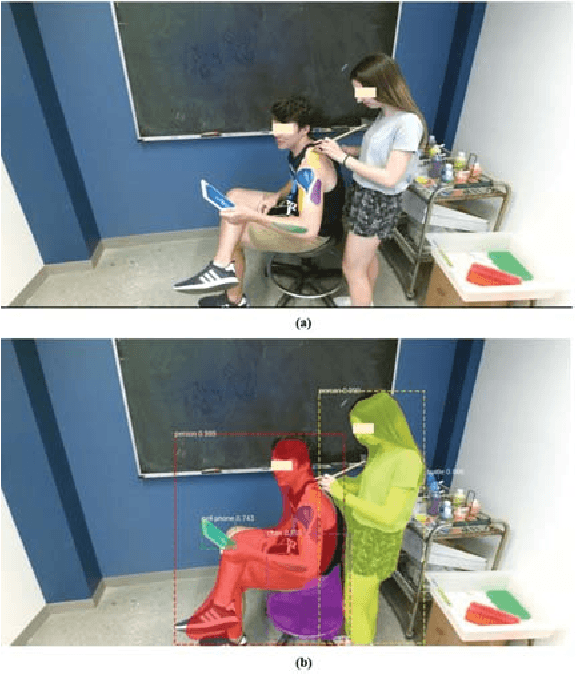

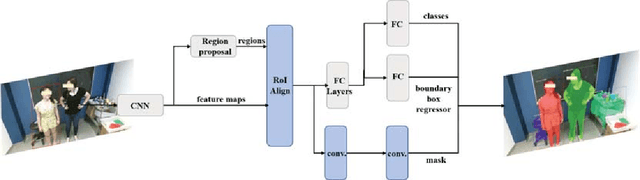

Abstract: The analysis of the collaborative learning process is one of the growing fields of education research, which has many different analytic solutions. In this paper, we provide a new solution to improve automated collaborative learning analyses using deep neural networks. Instead of using self-reported questionnaires, which are subject to bias and noise, we automatically extract group-working information from object recognition results obtained with the Mask R-CNN method. This process is based on detecting the people and other objects in pictures and video clips of the collaborative learning process, then evaluating the mobile learning performance using the collaborative indicators. We tested our approach to automatically evaluate group-work collaboration in a controlled study of thirty-three dyads performing an anatomy body painting intervention. The results indicate that our approach recognizes the differences in collaboration between teams of the treatment and control groups in the case study. This work introduces new methods for automated quality prediction of collaborations among human-human interactions using computer vision techniques.

On-the-fly Augmented Reality for Orthopaedic Surgery Using a Multi-Modal Fiducial

Jan 04, 2018

Abstract: Fluoroscopic X-ray guidance is a cornerstone for percutaneous orthopaedic surgical procedures. However, two-dimensional observations of the three-dimensional anatomy suffer from the effects of projective simplification. Consequently, many X-ray images from various orientations need to be acquired for the surgeon to accurately assess the spatial relations between the patient's anatomy and the surgical tools. In this paper, we present an on-the-fly surgical support system that provides guidance using augmented reality and can be used in quasi-unprepared operating rooms. The proposed system builds upon a multi-modality marker and simultaneous localization and mapping technique to co-calibrate an optical see-through head mounted display to a C-arm fluoroscopy system. Then, annotations on the 2D X-ray images can be rendered as virtual objects in 3D providing surgical guidance. We quantitatively evaluate the components of the proposed system, and finally, design a feasibility study on a semi-anthropomorphic phantom. The accuracy of our system was comparable to the traditional image-guided technique while substantially reducing the number of acquired X-ray images as well as procedure time. Our promising results encourage further research on the interaction between virtual and real objects, which we believe will directly benefit the proposed method. Further, we would like to explore the capabilities of our on-the-fly augmented reality support system in a larger study directed towards common orthopaedic interventions.

* S. Andress, A. Johnson, M. Unberath, and A. Winkler have contributed equally and are listed in alphabetical order

Algorithms for Routing of Unmanned Aerial Vehicles with Mobile Recharging Stations

Sep 18, 2017

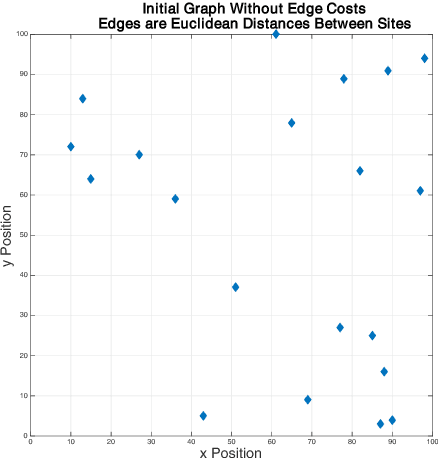

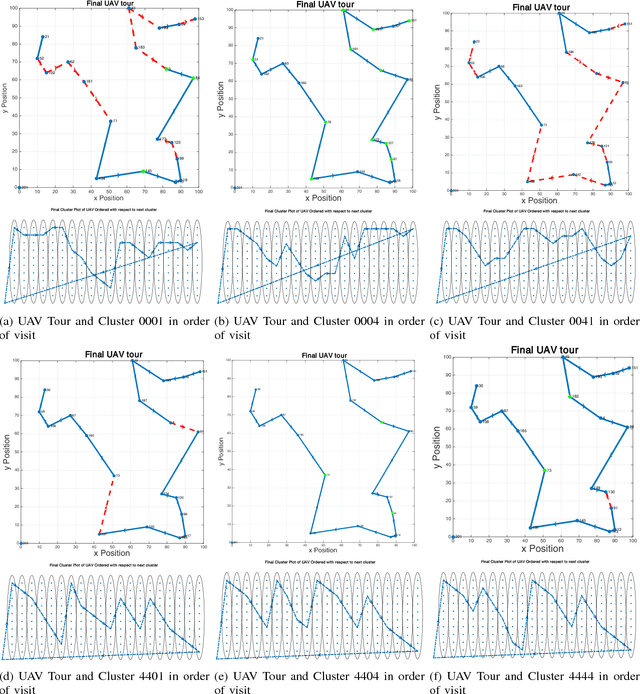

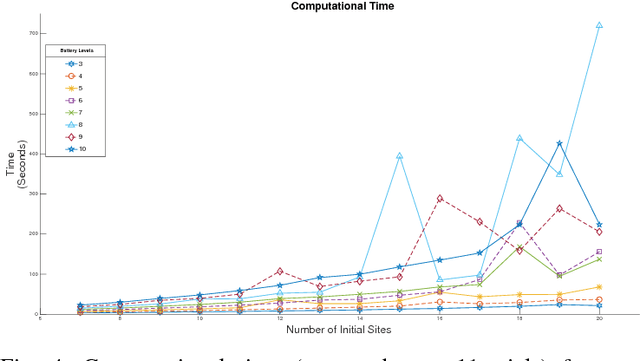

Abstract: We study the problem of planning a tour for an energy-limited Unmanned Aerial Vehicle (UAV) to visit a set of sites in the least amount of time. We envision scenarios where the UAV can be recharged along the way either by landing on stationary recharging stations or on Unmanned Ground Vehicles (UGVs) acting as mobile recharging stations. This leads to a new variant of the Traveling Salesperson Problem (TSP) with mobile recharging stations. We present an algorithm that finds not only the order in which to visit the sites but also when and where to land on the charging stations to recharge. Our algorithm plans tours for the UGVs as well as determines the best locations to place stationary charging stations. While the problems we study are NP-Hard, we present a practical solution using Generalized TSP that finds the optimal solution. If the UGVs are slower, the algorithm also finds the minimum number of UGVs required to support the UAV mission such that the UAV is not required to wait for the UGV. Our simulation results show that the running time is acceptable for reasonably sized instances in practice.
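
The energy constraint in the abstract above can be illustrated with a small feasibility check. This is a sketch under strong assumptions, not the paper's Generalized TSP formulation: chargers are stationary, recharging is instantaneous and complete, energy use is proportional to straight-line distance, and the visiting order is given rather than optimized. The function name `simulate_tour` and the stop format are hypothetical.

```python
import math


def simulate_tour(stops, battery_range):
    """Check whether a fixed visiting order respects the battery limit.

    stops: list of (x, y, is_charger) tuples in visiting order.
    battery_range: distance the UAV can fly on a full charge.
    Returns (feasible, distance_flown); the UAV starts fully charged.
    """
    remaining = battery_range
    flown = 0.0
    for (x0, y0, _), (x1, y1, is_charger) in zip(stops, stops[1:]):
        leg = math.hypot(x1 - x0, y1 - y0)
        if leg > remaining:
            return False, flown  # battery would run out on this leg
        remaining -= leg
        flown += leg
        if is_charger:
            remaining = battery_range  # assumed instantaneous full recharge
    return True, flown


# Hypothetical tour: two sites, a charger, then a final site.
tour = [(0, 0, False), (3, 4, False), (6, 8, True), (9, 12, False)]
print(simulate_tour(tour, battery_range=10))
print(simulate_tour(tour, battery_range=9))
```

A planner of the kind the abstract describes would search over both the site order and the recharging stops; a check like this only validates one candidate tour.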
