Kevin Ros

Interactive Information Need Prediction with Intent and Context

Jan 05, 2025

Abstract: The ability to predict a user's information need would have wide-ranging implications, from saving time and effort to mitigating vocabulary gaps. We study how to interactively predict a user's information need by letting them select a pre-search context (e.g., a paragraph, sentence, or single word) and specify an optional partial search intent (e.g., "how", "why", or "applications"). We examine how various generative language models can explicitly make this prediction by generating a question, as well as how retrieval models can implicitly make this prediction by retrieving an answer. We find that this prediction process is possible in many cases and that user-provided partial search intent can help mitigate large pre-search contexts. We conclude that this framework is promising and suitable for real-world applications.

Translation between Molecules and Natural Language

Apr 26, 2022

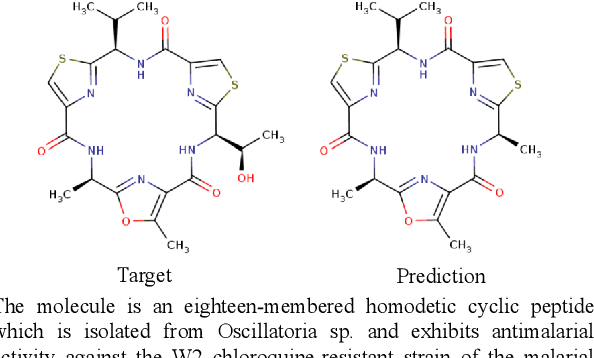

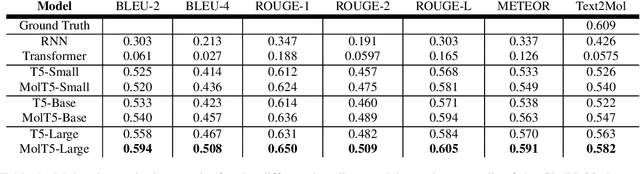

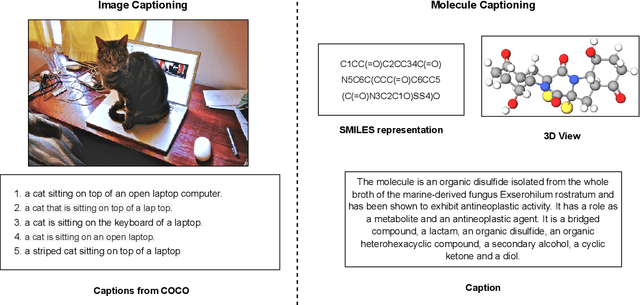

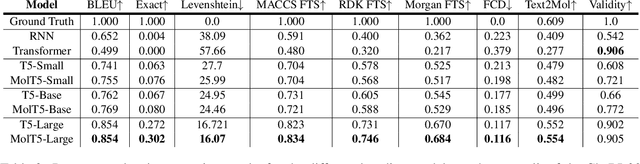

Abstract: Joint representations between images and text have been deeply investigated in the literature. In computer vision, the benefits of incorporating natural language have become clear for enabling semantic-level control of images. In this work, we present $\textbf{MolT5}$, a self-supervised learning framework for pretraining models on a vast amount of unlabeled natural language text and molecule strings. $\textbf{MolT5}$ allows for new, useful, and challenging analogs of traditional vision-language tasks, such as molecule captioning and text-based de novo molecule generation (altogether: translation between molecules and language), which we explore for the first time. Furthermore, since $\textbf{MolT5}$ pretrains models on single-modal data, it helps overcome the chemistry domain shortcoming of data scarcity. Additionally, we consider several metrics, including a new cross-modal embedding-based metric, to evaluate the tasks of molecule captioning and text-based molecule generation. By interfacing molecules with natural language, we enable a higher semantic level of control over molecule discovery and understanding, a critical task for scientific domains such as drug discovery and material design. Our results show that $\textbf{MolT5}$-based models are able to generate outputs, both molecule and text, which in many cases are high quality and match the input modality. On molecule generation, our best model achieves 30% exact matching test accuracy (i.e., it generates the correct structure for about one-third of the captions in our held-out test set).

Comprehension and Knowledge

Dec 11, 2020

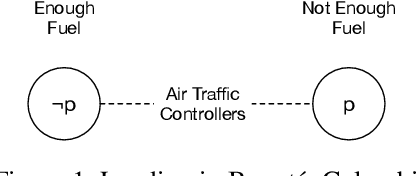

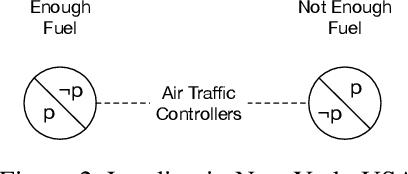

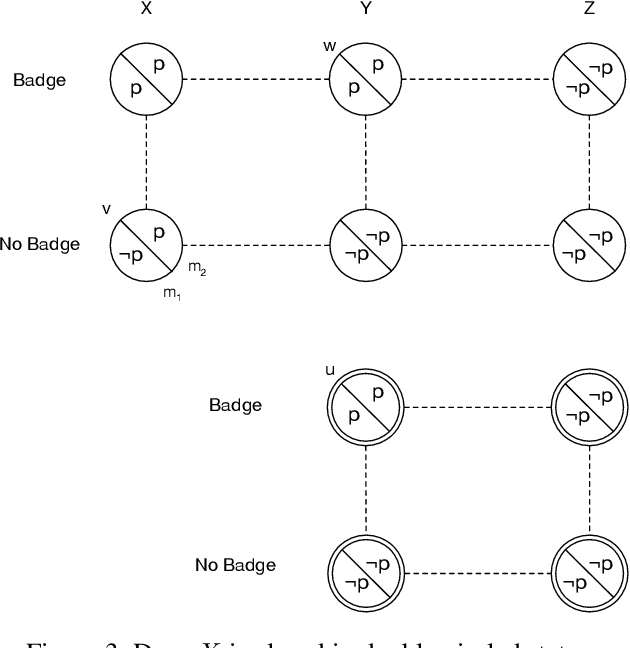

Abstract: The ability of an agent to comprehend a sentence is tightly connected to the agent's prior experiences and background knowledge. The paper suggests interpreting comprehension as a modality and proposes a complete bimodal logical system that describes the interplay between the comprehension and knowledge modalities.

Strategic Coalitions in Stochastic Games

Oct 10, 2019

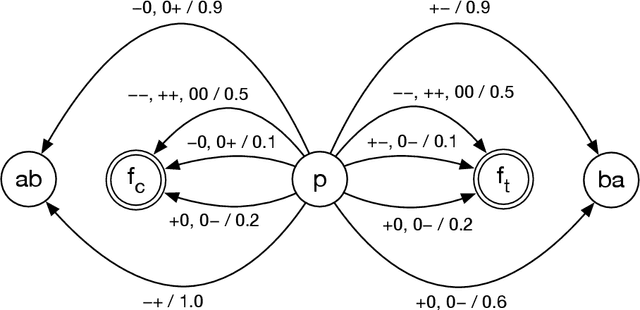

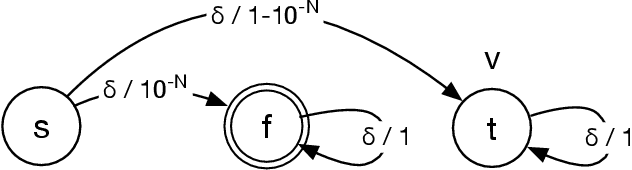

Abstract: The article introduces the notion of a stochastic game with failure states and proposes two logical systems with the modality "coalition has a strategy to transition to a non-failure state with a given probability while achieving a given goal." The logical properties of this modality depend on whether the modal language allows the empty coalition. The main technical results are a completeness theorem for the logical system with the empty coalition, a strong completeness theorem for the logical system without the empty coalition, and an incompleteness theorem showing that there is no strongly complete logical system in the language with the empty coalition.
