Pavel Naumov

Vassar College

A Graph-Theoretical Perspective on Law Design for Multiagent Systems

Nov 09, 2025

Abstract:A law in a multiagent system is a set of constraints imposed on agents' behaviours to avoid undesirable outcomes. The paper considers two types of laws: useful laws that, if followed, completely eliminate the undesirable outcomes and gap-free laws that guarantee that at least one agent can be held responsible each time an undesirable outcome occurs. In both cases, we study the problem of finding a law that achieves the desired result by imposing the minimum restrictions. We prove that, for both types of laws, the minimisation problem is NP-hard even in the simple case of one-shot concurrent interactions. We also show that the approximation algorithm for the vertex cover problem in hypergraphs could be used to efficiently approximate the minimum laws in both cases.
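As a rough illustration of the last sentence of this abstract, the sketch below implements the textbook matching-based approximation for vertex cover in hypergraphs: whenever a hyperedge is still uncovered, all of its vertices are added to the cover, which yields a cover at most d times the optimum when every hyperedge has at most d vertices. The encoding is an assumption made here for illustration, not the paper's construction: each vertex is a hypothetical (agent, action) pair, each hyperedge is an undesirable action profile, and the resulting cover is read as the set of banned actions. The name `approx_vertex_cover` is likewise hypothetical.

```python
from typing import Hashable, Iterable, List, Set, Tuple


def approx_vertex_cover(hyperedges: Iterable[Iterable[Hashable]]) -> Set[Hashable]:
    """Return a vertex cover of the hypergraph using the standard
    matching-based heuristic (a d-approximation for edges of size <= d)."""
    cover: Set[Hashable] = set()
    for edge in hyperedges:
        vertices = set(edge)
        if not vertices:
            # An empty hyperedge can never be covered.
            raise ValueError("empty hyperedge cannot be covered")
        if cover.isdisjoint(vertices):
            # The edge is still uncovered: take all of its vertices.
            cover.update(vertices)
    return cover


# Assumed toy encoding: two agents "a" and "b"; each undesirable
# profile is the set of (agent, action) pairs that produce it.
undesirable_profiles: List[List[Tuple[str, str]]] = [
    [("a", "x"), ("b", "y")],
    [("a", "x"), ("b", "z")],
    [("a", "w"), ("b", "y")],
]

banned = approx_vertex_cover(undesirable_profiles)
print(sorted(banned))
```

Under this assumed encoding, every undesirable profile contains at least one banned pair, so no such profile remains available once the bans are followed. The sketch ignores any further requirements a law might have to satisfy, such as leaving each agent with at least one permitted action.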

An Epistemic Perspective on Agent Awareness

Nov 08, 2025

Abstract:The paper proposes to treat agent awareness as a form of knowledge, breaking the tradition in the existing literature on awareness. It distinguishes the de re and de dicto forms of such knowledge. The work introduces two modalities capturing these forms and formally specifies their meaning using a version of 2D-semantics. The main technical result is a sound and complete logical system describing the interplay between the two proposed modalities and the standard "knowledge of the fact" modality.

Responsibility Gap and Diffusion in Sequential Decision-Making Mechanisms

Jul 03, 2025

Abstract:Responsibility has long been a subject of study in law and philosophy. More recently, it became a focus of AI literature. The article investigates the computational complexity of two important properties of responsibility in collective decision-making: diffusion and gap. It shows that the sets of diffusion-free and gap-free decision-making mechanisms are $\Pi_2$-complete and $\Pi_3$-complete, respectively. At the same time, the intersection of these classes is $\Pi_2$-complete.

Diffusion of Responsibility in Collective Decision Making

Jun 09, 2025

Abstract:The term "diffusion of responsibility" refers to situations in which multiple agents share responsibility for an outcome, obscuring individual accountability. This paper examines this frequently undesirable phenomenon in the context of collective decision-making mechanisms. The work shows that if a decision is made by two agents, then the only way to avoid diffusion of responsibility is for one agent to act as a "dictator", making the decision unilaterally. In scenarios with more than two agents, any diffusion-free mechanism is an "elected dictatorship" where the agents elect a single agent to make a unilateral decision. The technical results are obtained by defining a bisimulation of decision-making mechanisms, proving that bisimulation preserves responsibility-related properties, and establishing the results for a smallest bisimilar mechanism.

Responsibility Gap in Collective Decision Making

May 08, 2025

Abstract:The responsibility gap is a set of outcomes of a collective decision-making mechanism in which no single agent is individually responsible. In general, when designing a decision-making process, it is desirable to minimise the gap. The paper proposes a concept of an elected dictatorship. It shows that, in a perfect information setting, the gap is empty if and only if the mechanism is an elected dictatorship. It also proves that in an imperfect information setting, the class of gap-free mechanisms is positioned strictly between two variations of the class of elected dictatorships.

Uncommon Belief in Rationality

Dec 12, 2024

Abstract:Common knowledge/belief in rationality is the traditional standard assumption in analysing interaction among agents. This paper proposes a graph-based language for capturing significantly more complicated structures of higher-order beliefs that agents might have about the rationality of the other agents. The two main contributions are a solution concept that captures the reasoning process based on a given belief structure and an efficient algorithm for compressing any belief structure into a unique minimal form.

The Logic of Doxastic Strategies

Dec 13, 2023

Abstract:In many real-world situations, there is often not enough information to know that a certain strategy will succeed in achieving the goal, but there is a good reason to believe that it will. The paper introduces the term "doxastic" for such strategies. The main technical contribution is a sound and complete logical system that describes the interplay between doxastic strategy and belief modalities.

De Re and De Dicto Knowledge in Egocentric Setting

Jul 18, 2023

Abstract:Prior proposes the term "egocentric" for logical systems that study properties of agents rather than properties of possible worlds. In such a setting, the paper introduces two different modalities capturing de re and de dicto knowledge and proves that these two modalities are not definable through each other.

Shhh! The Logic of Clandestine Operations

May 10, 2023

Abstract:An operation is called covert if it conceals the identity of the actor; it is called clandestine if the very fact that the operation is conducted is concealed. The paper proposes a formal semantics of clandestine operations and introduces a sound and complete logical system that describes the interplay between the distributed knowledge modality and a modality capturing coalition power to conduct clandestine operations.

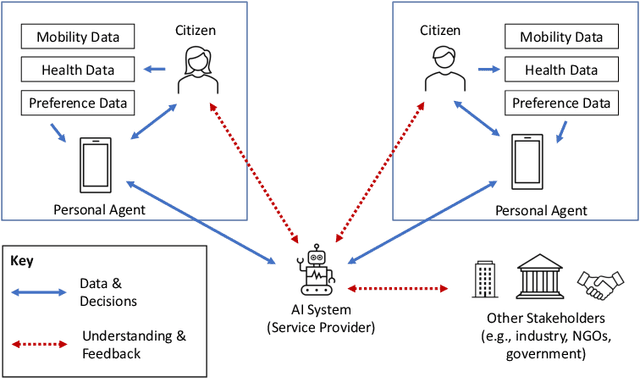

From Intelligent Agents to Trustworthy Human-Centred Multiagent Systems

Oct 05, 2022

Abstract:The Agents, Interaction and Complexity research group at the University of Southampton has a long track record of research in multiagent systems (MAS). We have made substantial scientific contributions across learning in MAS, game-theoretic techniques for coordinating agent systems, and formal methods for representation and reasoning. We highlight key results achieved by the group and elaborate on recent work and open research challenges in developing trustworthy autonomous systems and deploying human-centred AI systems that aim to support societal good.

* Appears in the Special Issue on Multi-Agent Systems Research in the United Kingdom
