Juecen Zhan

State-Aware IoT Scheduling Using Deep Q-Networks and Edge-Based Coordination

Apr 22, 2025

Abstract: This paper addresses the challenge of energy efficiency management faced by intelligent IoT devices in complex application environments. A novel optimization method is proposed, combining Deep Q-Network (DQN) with an edge collaboration mechanism. The method builds a state-action-reward interaction model and introduces edge nodes as intermediaries for state aggregation and policy scheduling. This enables dynamic resource coordination and task allocation among multiple devices. During the modeling process, device status, task load, and network resources are jointly incorporated into the state space. The DQN is used to approximate and learn the optimal scheduling strategy. To enhance the model's ability to perceive inter-device relationships, a collaborative graph structure is introduced to model the multi-device environment and assist in decision optimization. Experiments are conducted using real-world IoT data collected from the FastBee platform. Several comparative and validation tests are performed, including energy efficiency comparisons across different scheduling strategies, robustness analysis under varying task loads, and evaluation of state dimension impacts on policy convergence speed. The results show that the proposed method outperforms existing baseline approaches in terms of average energy consumption, processing latency, and resource utilization. This confirms its effectiveness and practicality in intelligent IoT scenarios.

Context-Aware Adaptive Sampling for Intelligent Data Acquisition Systems Using DQN

Apr 12, 2025

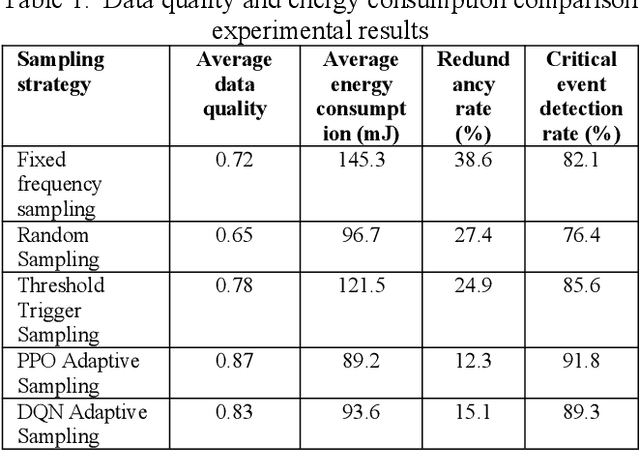

Abstract: Multi-sensor systems are widely used in the Internet of Things, environmental monitoring, and intelligent manufacturing. However, traditional fixed-frequency sampling strategies often lead to severe data redundancy, high energy consumption, and limited adaptability, failing to meet the dynamic sensing needs of complex environments. To address these issues, this paper proposes a DQN-based multi-sensor adaptive sampling optimization method. By leveraging a reinforcement learning framework to learn the optimal sampling strategy, the method balances data quality, energy consumption, and redundancy. We first model the multi-sensor sampling task as a Markov Decision Process (MDP), then employ a Deep Q-Network to optimize the sampling policy. Experiments on the Intel Lab Data dataset confirm that, compared with fixed-frequency sampling, threshold-triggered sampling, and other reinforcement learning approaches, DQN significantly improves data quality while lowering average energy consumption and redundancy rates. Moreover, in heterogeneous multi-sensor environments, DQN-based adaptive sampling shows enhanced robustness, maintaining superior data collection performance even in the presence of interference factors. These findings demonstrate that DQN-based adaptive sampling can enhance overall data acquisition efficiency in multi-sensor systems, providing a new solution for efficient and intelligent sensing.
