Joonbum Lee

What Can Be Predicted from Six Seconds of Driver Glances?

Nov 26, 2016

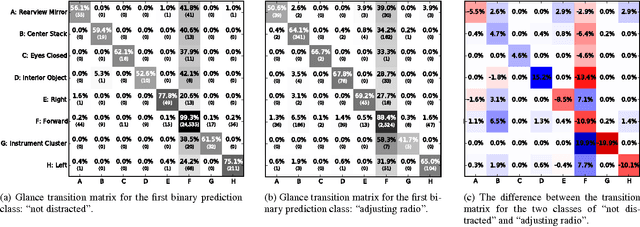

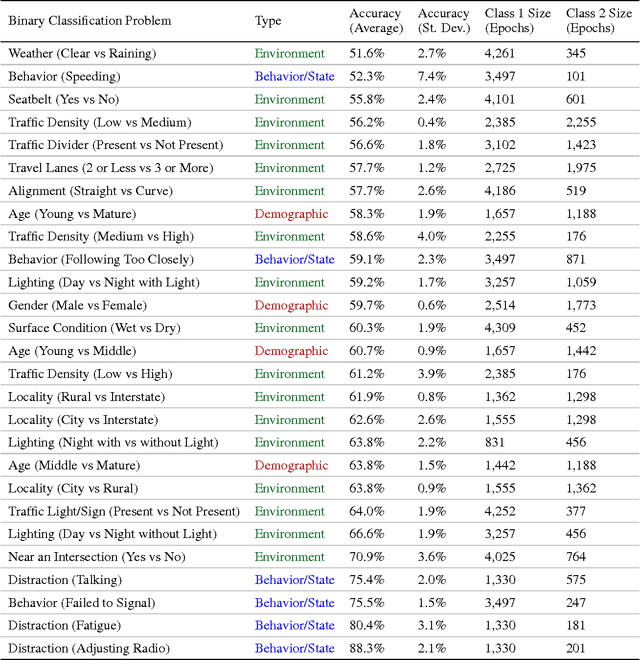

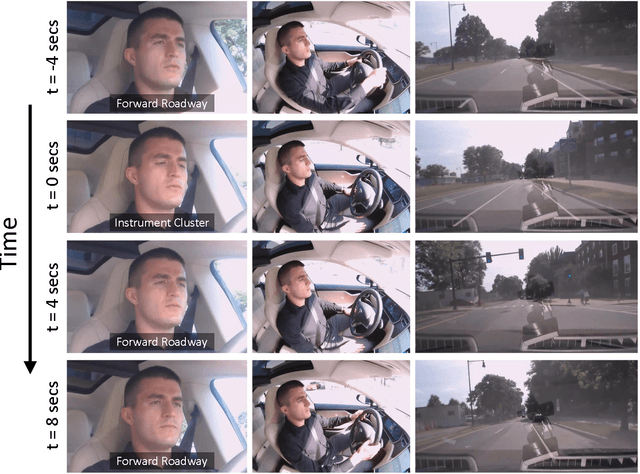

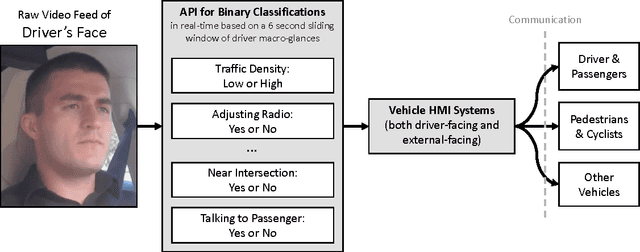

Abstract: We consider a large dataset of real-world, on-road driving from a 100-car naturalistic study to explore the predictive power of driver glances and, specifically, to answer the following question: what can be predicted about the state of the driver and the state of the driving environment from a 6-second sequence of macro-glances? The context-based nature of such glances allows for application of supervised learning to the problem of vision-based gaze estimation, making it robust, accurate, and reliable in messy, real-world conditions. So, it's valuable to ask whether such macro-glances can be used to infer behavioral, environmental, and demographic variables. We analyze 27 binary classification problems based on these variables. The takeaway is that glance can be used as part of a multi-sensor real-time system to predict radio-tuning, fatigue state, failure to signal, talking, and several environment variables.

Owl and Lizard: Patterns of Head Pose and Eye Pose in Driver Gaze Classification

Nov 20, 2016

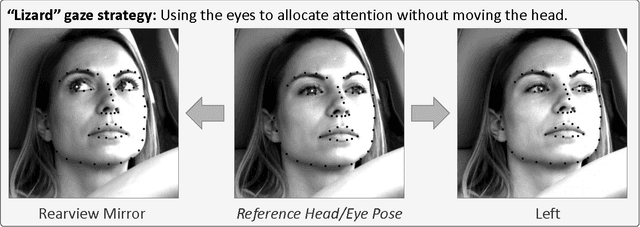

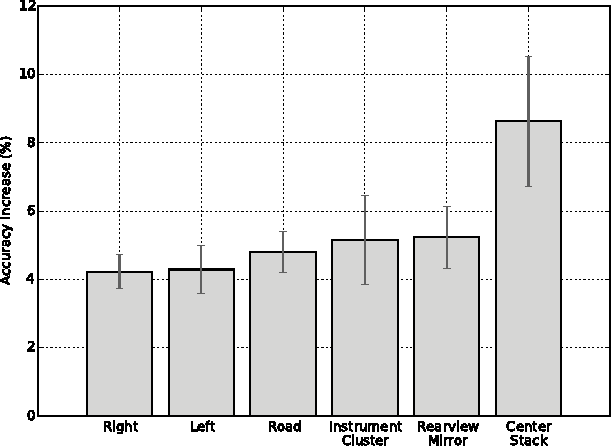

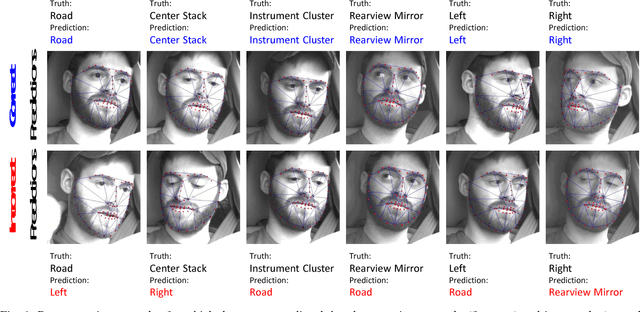

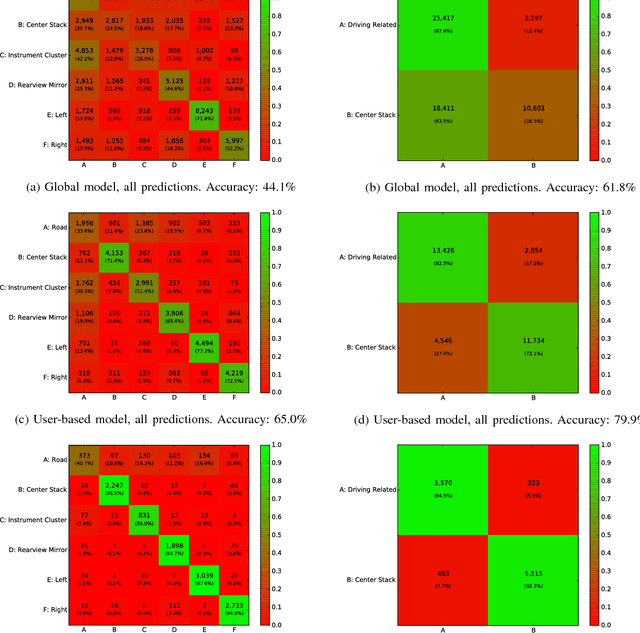

Abstract: Accurate, robust, inexpensive gaze tracking in the car can help keep a driver safe by facilitating the more effective study of how to improve (1) vehicle interfaces and (2) the design of future Advanced Driver Assistance Systems. In this paper, we estimate head pose and eye pose from monocular video using methods developed extensively in prior work and ask two new interesting questions. First, how much better can we classify driver gaze using head and eye pose versus just using head pose? Second, are there individual-specific gaze strategies that strongly correlate with how much gaze classification improves with the addition of eye pose information? We answer these questions by evaluating data drawn from an on-road study of 40 drivers. The main insight of the paper is conveyed through the analogy of an "owl" and "lizard" which describes the degree to which the eyes and the head move when shifting gaze. When the head moves a lot ("owl"), not much classification improvement is attained by estimating eye pose on top of head pose. On the other hand, when the head stays still and only the eyes move ("lizard"), classification accuracy increases significantly from adding in eye pose. We characterize how that accuracy varies between people, gaze strategies, and gaze regions.

Driver Gaze Region Estimation Without Using Eye Movement

Mar 01, 2016

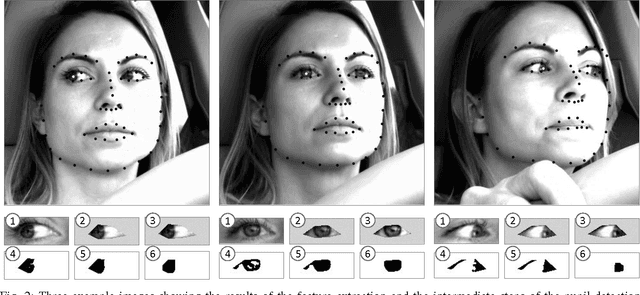

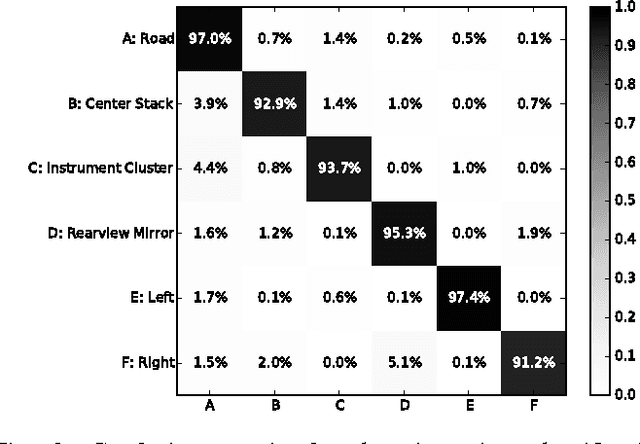

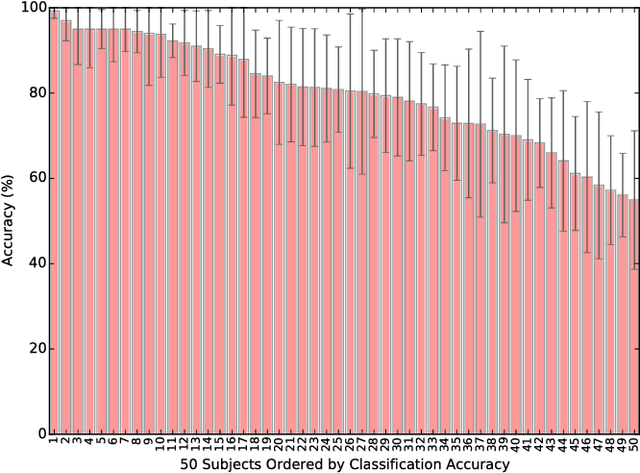

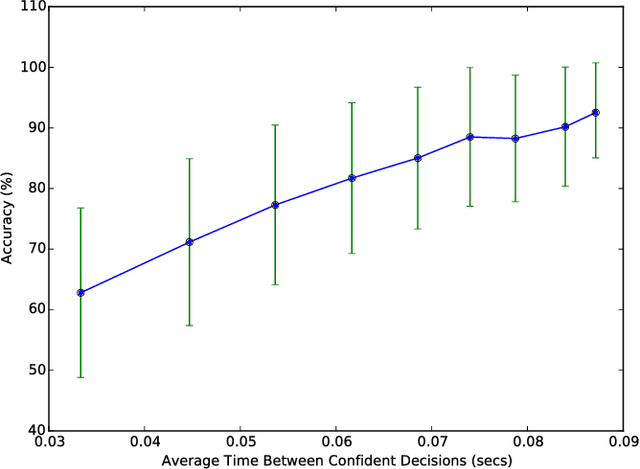

Abstract: Automated estimation of the allocation of a driver's visual attention may be a critical component of future Advanced Driver Assistance Systems. In theory, vision-based tracking of the eye can provide a good estimate of gaze location. In practice, eye tracking from video is challenging because of sunglasses, eyeglass reflections, lighting conditions, occlusions, motion blur, and other factors. Estimation of head pose, on the other hand, is robust to many of these effects, but cannot provide as fine-grained a resolution in localizing the gaze. However, for the purpose of keeping the driver safe, it is sufficient to partition gaze into regions. In this effort, we propose a system that extracts facial features and classifies their spatial configuration into six regions in real time. Our proposed method achieves an average accuracy of 91.4% at an average decision rate of 11 Hz on a dataset of 50 drivers from an on-road study.
