Jennifer Dy

Structured Disentangled Representations

May 29, 2018

Abstract: Deep latent-variable models learn representations of high-dimensional data in an unsupervised manner. A number of recent efforts have focused on learning representations that disentangle statistically independent axes of variation by introducing modifications to the standard objective function. These approaches generally assume a simple diagonal Gaussian prior and as a result are not able to reliably disentangle discrete factors of variation. We propose a two-level hierarchical objective to control the relative degree of statistical independence between blocks of variables and between individual variables within blocks. We derive this objective as a generalization of the evidence lower bound, which allows us to explicitly represent the trade-offs between mutual information between data and representation, KL divergence between representation and prior, and coverage of the support of the empirical data distribution. Experiments on a variety of datasets demonstrate that our objective can not only disentangle discrete variables, but that doing so also improves disentanglement of other variables and, importantly, generalization even to unseen combinations of factors.
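
For reference, the standard evidence lower bound that the abstract describes the proposed objective as generalizing can be written as below. The notation is an assumption made here for illustration ($q(x)$ for the empirical data distribution, $q_\phi(z \mid x)$ for the encoder, $p_\theta(x \mid z)$ for the decoder, $p(z)$ for the prior); this is the usual ELBO, not the paper's two-level hierarchical objective.

$$
\mathcal{L}(\theta, \phi) = \mathbb{E}_{q(x)}\Big[ \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] - \mathrm{KL}\big(q_\phi(z \mid x) \,\|\, p(z)\big) \Big] \le \mathbb{E}_{q(x)}\big[\log p_\theta(x)\big]
$$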

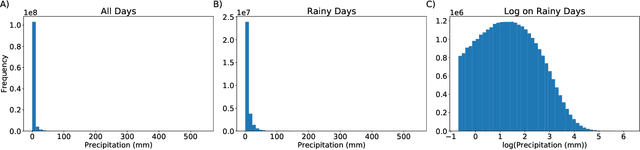

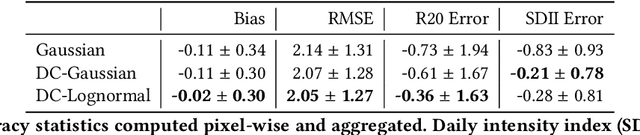

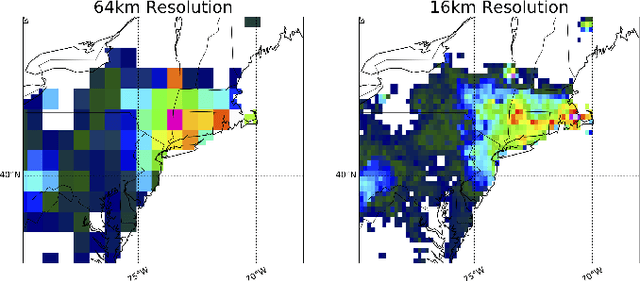

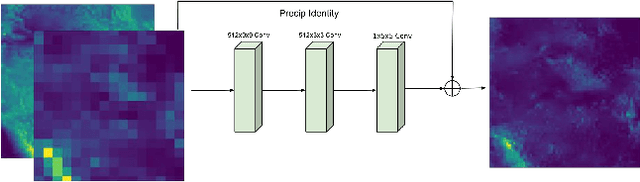

Quantifying Uncertainty in Discrete-Continuous and Skewed Data with Bayesian Deep Learning

May 24, 2018

Abstract: Deep Learning (DL) methods have been transforming computer vision with innovative adaptations to other domains including climate change. For DL to pervade Science and Engineering (S&E) applications where risk management is a core component, well-characterized uncertainty estimates must accompany predictions. However, S&E observations and model simulations often follow heavily skewed distributions and are not well modeled with DL approaches, since they usually optimize a Gaussian, or Euclidean, likelihood loss. Recent developments in Bayesian Deep Learning (BDL), which attempts to capture both aleatoric uncertainty (from noisy observations) and epistemic uncertainty (from unknown model parameters), provide us a foundation. Here we present a discrete-continuous BDL model with Gaussian and lognormal likelihoods for uncertainty quantification (UQ). We demonstrate the approach by developing UQ estimates on DeepSD, a super-resolution based DL model for Statistical Downscaling (SD) in climate applied to precipitation, which follows an extremely skewed distribution. We find that the discrete-continuous models outperform a basic Gaussian distribution in terms of predictive accuracy and uncertainty calibration. Furthermore, we find that the lognormal distribution, which can handle skewed distributions, produces quality uncertainty estimates at the extremes. Such results may be important across S&E, as well as other domains such as finance and economics, where extremes are often of significant interest. Furthermore, to our knowledge, this is the first UQ model in SD where both aleatoric and epistemic uncertainties are characterized.
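
To make the idea of a discrete-continuous likelihood concrete, here is a minimal NumPy sketch of one common way such a loss can be written for a non-negative target like precipitation: a Bernoulli term for whether the value is zero or positive, plus a lognormal density for the positive part. The function name, the parameter names (`p_wet`, `mu`, `sigma`), and the exact form are assumptions for illustration and are not taken from the paper.

```python
import numpy as np

def discrete_continuous_lognormal_nll(y, p_wet, mu, sigma, eps=1e-8):
    """Per-sample negative log-likelihood of a zero/positive mixture.

    Hypothetical parameterization (not from the paper):
      p_wet : predicted probability that y > 0
      mu    : predicted mean of log(y) when y > 0
      sigma : predicted standard deviation of log(y) when y > 0
    """
    y = np.asarray(y, dtype=float)
    p_wet = np.clip(np.asarray(p_wet, dtype=float), eps, 1.0 - eps)
    mu = np.asarray(mu, dtype=float)
    sigma = np.asarray(sigma, dtype=float)

    wet = y > 0
    # Replace zeros by 1.0 so log() is defined; those entries are masked out below.
    log_y = np.log(np.where(wet, y, 1.0))

    # Log-density of a lognormal distribution evaluated at y.
    log_pdf = (-log_y - np.log(sigma) - 0.5 * np.log(2.0 * np.pi)
               - 0.5 * ((log_y - mu) / sigma) ** 2)

    # Positive samples: Bernoulli "wet" term plus lognormal density.
    # Zero samples: Bernoulli "dry" term only.
    return np.where(wet, -(np.log(p_wet) + log_pdf), -np.log(1.0 - p_wet))
```

In a setup like this, a network would output `p_wet`, `mu`, and `sigma` for each location and the mean of the returned values would serve as the training loss; the predicted `sigma` then plays the role of the aleatoric (observation-noise) component.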

* 10 Pages

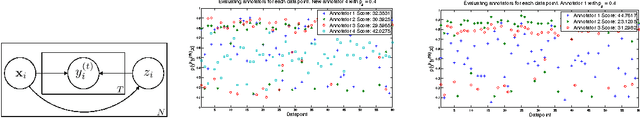

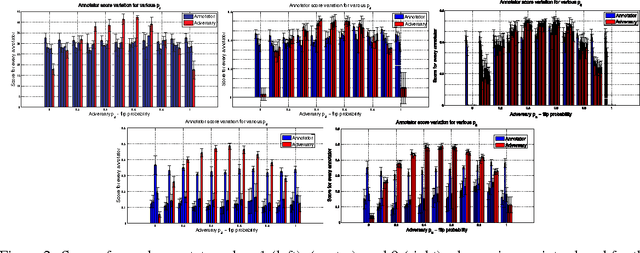

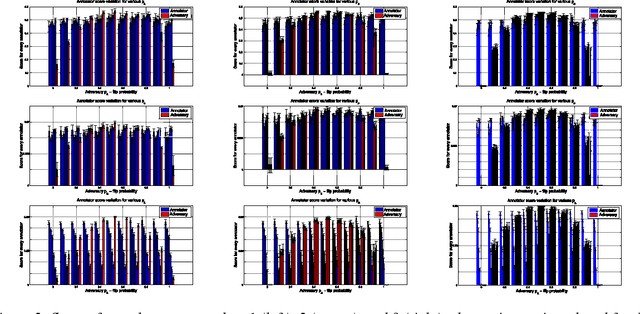

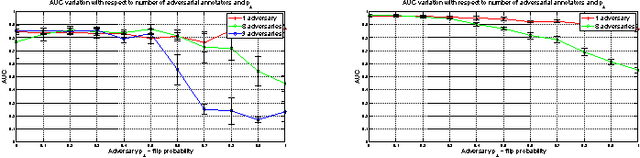

Evaluating Crowdsourcing Participants in the Absence of Ground-Truth

May 30, 2016

Abstract: Given a supervised/semi-supervised learning scenario where multiple annotators are available, we consider the problem of identifying adversarial or unreliable annotators.

Modeling Multiple Annotator Expertise in the Semi-Supervised Learning Scenario

Mar 15, 2012

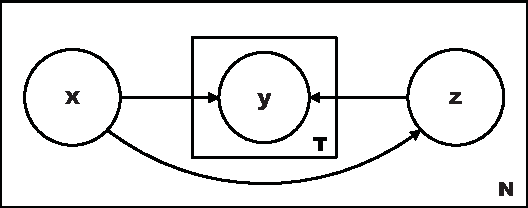

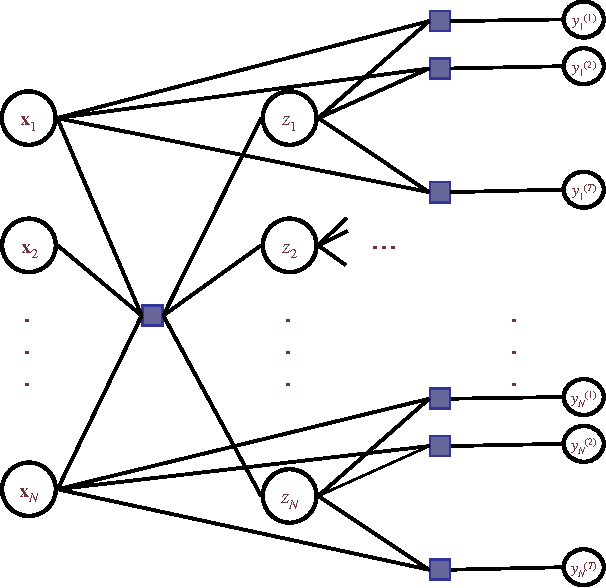

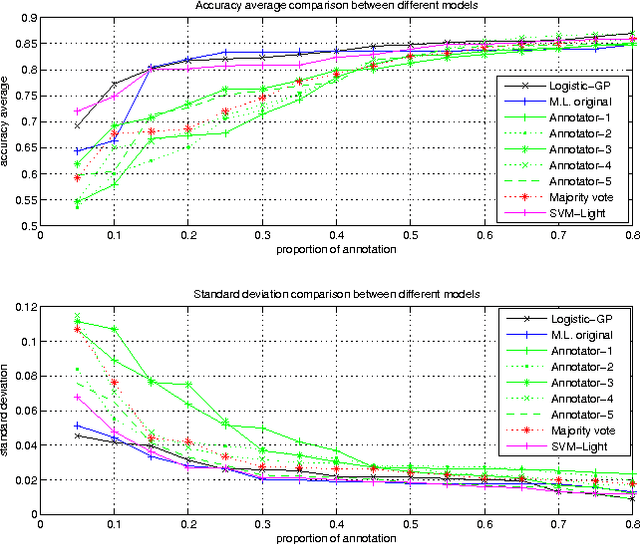

Abstract: Learning algorithms normally assume that there is at most one annotation or label per data point. However, in some scenarios, such as medical diagnosis and on-line collaboration, multiple annotations may be available. In either case, obtaining labels for data points can be expensive and time-consuming (in some circumstances ground-truth may not exist). Semi-supervised learning approaches have shown that utilizing the unlabeled data is often beneficial in these cases. This paper presents a probabilistic semi-supervised model and algorithm that allows for learning from both unlabeled and labeled data in the presence of multiple annotators. We assume that it is known which annotator labeled which data points. The proposed approach produces annotator models that allow us to provide (1) estimates of the true label and (2) estimates of each annotator's variable expertise, for both labeled and unlabeled data. We provide numerical comparisons under various scenarios and with respect to standard semi-supervised learning. Experiments showed that the presented approach provides clear advantages over multi-annotator methods that do not use the unlabeled data and over methods that do not use multi-labeler information.
