Hyunghun Cho

DR.CPO: Diversified and Realistic 3D Augmentation via Iterative Construction, Random Placement, and HPR Occlusion

Mar 20, 2023

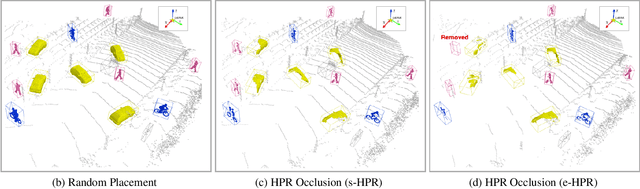

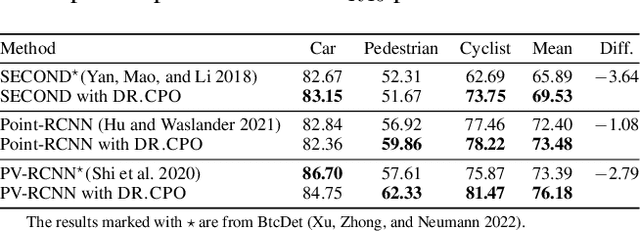

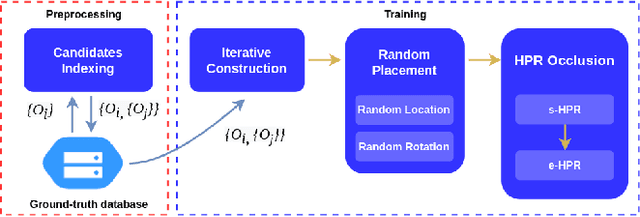

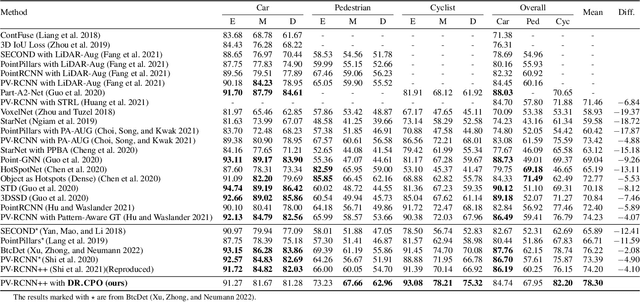

Abstract:In autonomous driving, data augmentation is commonly used for improving 3D object detection. The most basic methods include insertion of copied objects and rotation and scaling of the entire training frame. Numerous variants have been developed as well. The existing methods, however, are considerably limited when compared to the variety of the real world possibilities. In this work, we develop a diversified and realistic augmentation method that can flexibly construct a whole-body object, freely locate and rotate the object, and apply self-occlusion and external-occlusion accordingly. To improve the diversity of the whole-body object construction, we develop an iterative method that stochastically combines multiple objects observed from the real world into a single object. Unlike the existing augmentation methods, the constructed objects can be randomly located and rotated in the training frame because proper occlusions can be reflected to the whole-body objects in the final step. Finally, proper self-occlusion at each local object level and external-occlusion at the global frame level are applied using the Hidden Point Removal (HPR) algorithm that is computationally efficient. HPR is also used for adaptively controlling the point density of each object according to the object's distance from the LiDAR. Experiment results show that the proposed DR.CPO algorithm is data-efficient and model-agnostic without incurring any computational overhead. Also, DR.CPO can improve mAP performance by 2.08% when compared to the best 3D detection result known for KITTI dataset. The code is available at https://github.com/SNU-DRL/DRCPO.git

B2EA: An Evolutionary Algorithm Assisted by Two Bayesian Optimization Modules for Neural Architecture Search

Feb 17, 2022

Abstract:The early pioneering Neural Architecture Search (NAS) works were multi-trial methods applicable to any general search space. The subsequent works took advantage of the early findings and developed weight-sharing methods that assume a structured search space typically with pre-fixed hyperparameters. Despite the amazing computational efficiency of the weight-sharing NAS algorithms, it is becoming apparent that multi-trial NAS algorithms are also needed for identifying very high-performance architectures, especially when exploring a general search space. In this work, we carefully review the latest multi-trial NAS algorithms and identify the key strategies including Evolutionary Algorithm (EA), Bayesian Optimization (BO), diversification, input and output transformations, and lower fidelity estimation. To accommodate the key strategies into a single framework, we develop B2EA that is a surrogate assisted EA with two BO surrogate models and a mutation step in between. To show that B2EA is robust and efficient, we evaluate three performance metrics over 14 benchmarks with general and cell-based search spaces. Comparisons with state-of-the-art multi-trial algorithms reveal that B2EA is robust and efficient over the 14 benchmarks for three difficulty levels of target performance. The B2EA code is publicly available at \url{https://github.com/snu-adsl/BBEA}.

DEEP-BO for Hyperparameter Optimization of Deep Networks

May 23, 2019

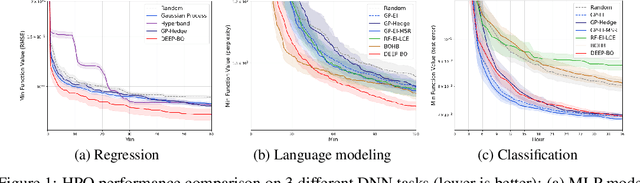

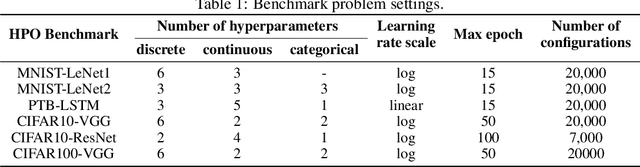

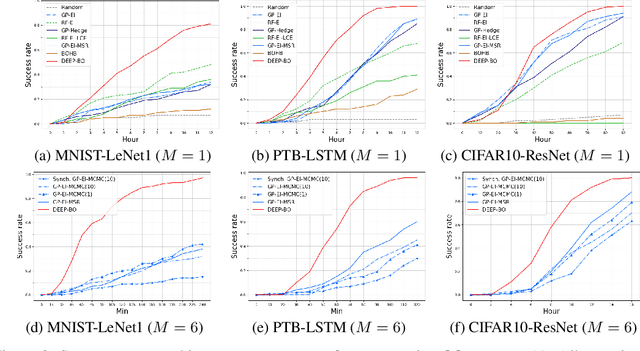

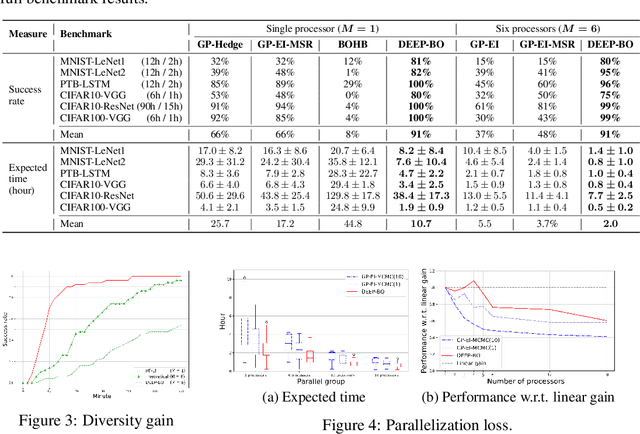

Abstract:The performance of deep neural networks (DNN) is very sensitive to the particular choice of hyper-parameters. To make it worse, the shape of the learning curve can be significantly affected when a technique like batchnorm is used. As a result, hyperparameter optimization of deep networks can be much more challenging than traditional machine learning models. In this work, we start from well known Bayesian Optimization solutions and provide enhancement strategies specifically designed for hyperparameter optimization of deep networks. The resulting algorithm is named as DEEP-BO (Diversified, Early-termination-Enabled, and Parallel Bayesian Optimization). When evaluated over six DNN benchmarks, DEEP-BO easily outperforms or shows comparable performance with some of the well-known solutions including GP-Hedge, Hyperband, BOHB, Median Stopping Rule, and Learning Curve Extrapolation. The code used is made publicly available at https://github.com/snu-adsl/DEEP-BO.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge