Hiroshi Saruwatari

Empirical Study Incorporating Linguistic Knowledge on Filled Pauses for Personalized Spontaneous Speech Synthesis

Oct 14, 2022

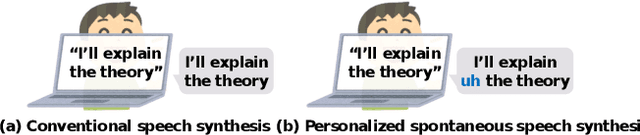

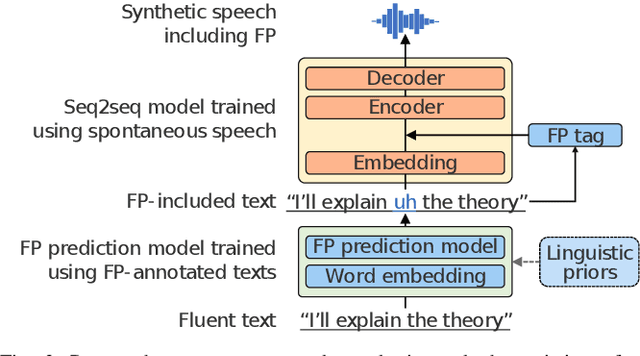

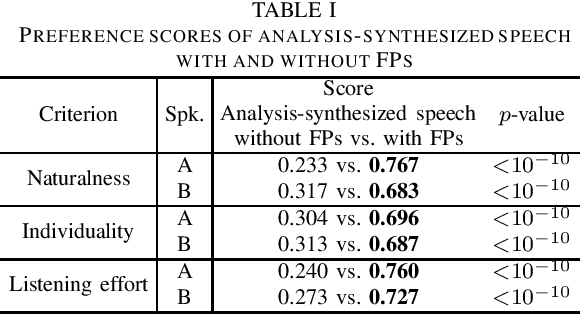

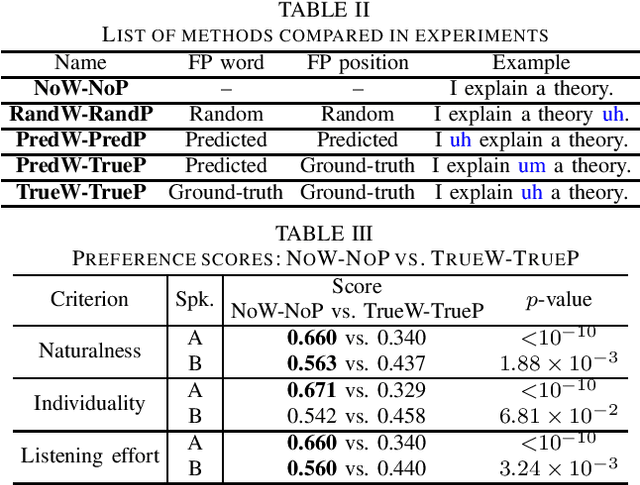

Abstract: We present a comprehensive empirical study for personalized spontaneous speech synthesis on the basis of linguistic knowledge. With the advent of voice cloning for reading-style speech synthesis, a new voice cloning paradigm for human-like and spontaneous speech synthesis is required. We therefore focus on personalized spontaneous speech synthesis that can clone both the individual's voice timbre and speech disfluency. Specifically, we deal with filled pauses, a major source of speech disfluency, which are known in psychology and linguistics to play an important role in speech generation and communication. To comparatively evaluate personalized filled pause insertion and non-personalized filled pause prediction methods, we developed a speech synthesis method with a non-personalized external filled pause predictor trained with a multi-speaker corpus. The results clarify the position-word entanglement of filled pauses, i.e., the necessity of precisely predicting positions for naturalness and the necessity of precisely predicting words for individuality in the evaluation of synthesized speech.

Hyperbolic Timbre Embedding for Musical Instrument Sound Synthesis Based on Variational Autoencoders

Sep 27, 2022

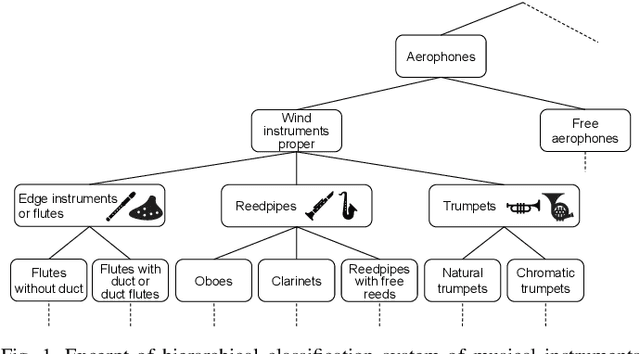

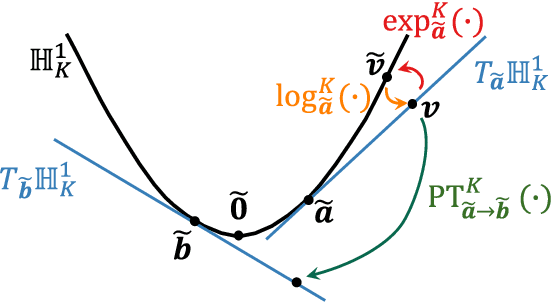

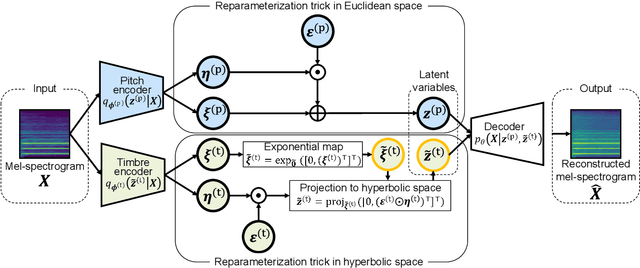

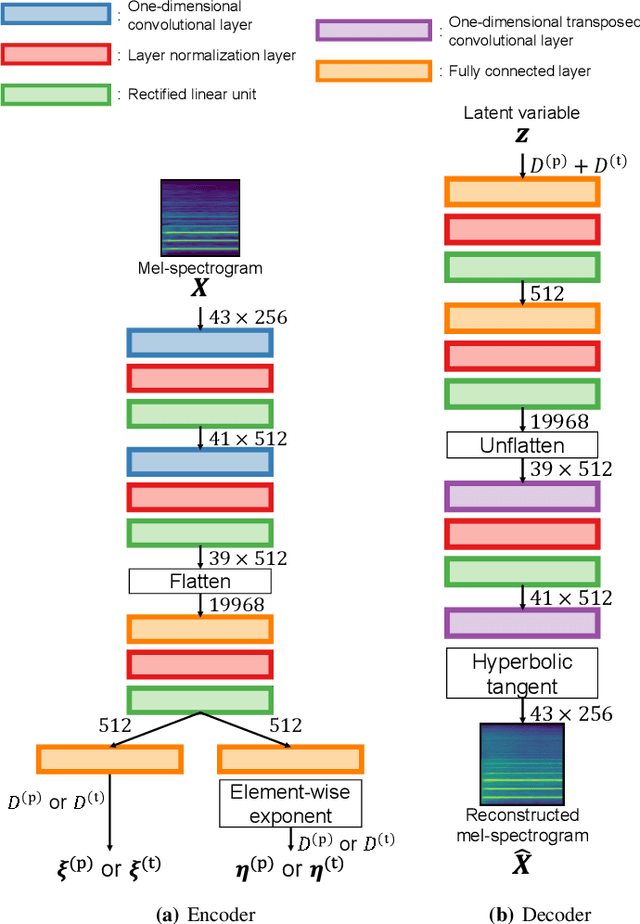

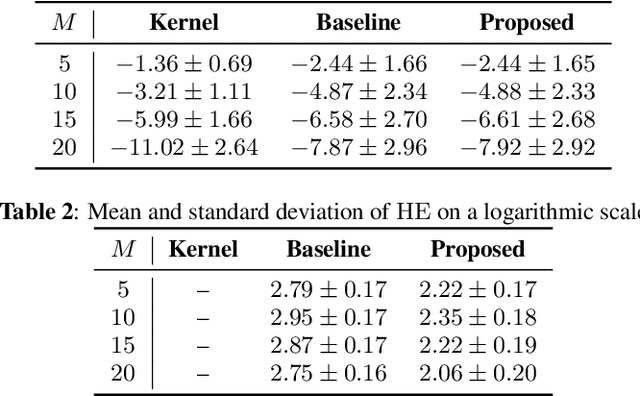

Abstract: In this paper, we propose a musical instrument sound synthesis (MISS) method based on a variational autoencoder (VAE) that has a hierarchy-inducing latent space for timbre. VAE-based MISS methods embed an input signal into a low-dimensional latent representation that captures the characteristics of the input. Adequately manipulating this representation leads to sound morphing and timbre replacement. Although most VAE-based MISS methods seek a disentangled representation of pitch and timbre, how to capture an underlying structure in timbre remains an open problem. To address this problem, we focus on the fact that musical instruments can be hierarchically classified on the basis of their physical mechanisms. Motivated by this hierarchy, we propose a VAE-based MISS method by introducing a hyperbolic space for timbre. The hyperbolic space can represent treelike data more efficiently than the Euclidean space owing to its exponential growth property. Results of experiments show that the proposed method provides an efficient latent representation of timbre compared with the method using the Euclidean space.
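
The "exponential growth property" mentioned in the abstract can be illustrated with a small sketch. It assumes the Poincaré ball model of hyperbolic space, which the abstract does not specify; the function name `poincare_distance` is hypothetical and not taken from the paper.

```python
import math

def poincare_distance(u, v):
    """Geodesic distance between two points strictly inside the unit Poincare ball.

    Assumption: the Poincare ball model; the abstract only says "hyperbolic space".
    """
    sq_norm_u = sum(x * x for x in u)
    sq_norm_v = sum(x * x for x in v)
    if sq_norm_u >= 1.0 or sq_norm_v >= 1.0:
        raise ValueError("points must lie strictly inside the unit ball")
    sq_diff = sum((a - b) ** 2 for a, b in zip(u, v))
    arg = 1.0 + 2.0 * sq_diff / ((1.0 - sq_norm_u) * (1.0 - sq_norm_v))
    return math.acosh(arg)

# The same Euclidean step of 0.1 covers more hyperbolic distance near the boundary.
near_origin = poincare_distance([0.0, 0.0], [0.1, 0.0])
near_boundary = poincare_distance([0.8, 0.0], [0.9, 0.0])
```

Because distances stretch toward the boundary, there is room for many well-separated points at the same depth, which is why tree-like hierarchies tend to fit such a space with few dimensions.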

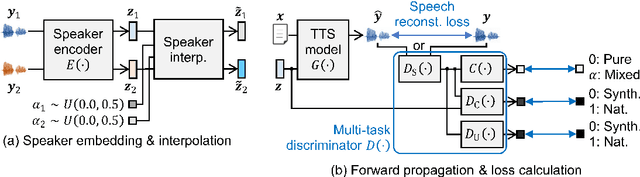

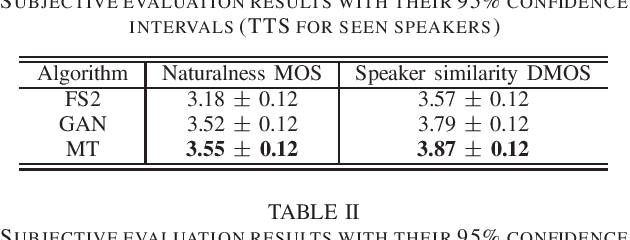

Multi-Task Adversarial Training Algorithm for Multi-Speaker Neural Text-to-Speech

Sep 26, 2022

Abstract: We propose a novel training algorithm for a multi-speaker neural text-to-speech (TTS) model based on multi-task adversarial training. A conventional generative adversarial network (GAN)-based training algorithm significantly improves the quality of synthetic speech by reducing the statistical difference between natural and synthetic speech. However, the algorithm does not guarantee the generalization performance of the trained TTS model in synthesizing voices of unseen speakers who are not included in the training data. Our algorithm alternately trains two deep neural networks: a multi-task discriminator and a multi-speaker neural TTS model (i.e., the generator of the GAN). The discriminator is trained not only to distinguish between natural and synthetic speech but also to verify whether the speaker of the input speech is existent or non-existent (i.e., newly generated by interpolating seen speakers' embedding vectors). Meanwhile, the generator is trained to minimize the weighted sum of the speech reconstruction loss and the adversarial loss for fooling the discriminator, which achieves high-quality multi-speaker TTS even if the target speaker is unseen. Experimental evaluation shows that our algorithm improves the quality of synthetic speech more than the conventional GANSpeech algorithm.
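
As a rough illustration of two ingredients named in the abstract, the sketch below builds a "non-existent" speaker by interpolating two seen speakers' embeddings and forms a weighted sum of generator losses. The function names, the linear interpolation scheme, and the placement of the weight on the adversarial term are all assumptions, not details from the paper.

```python
def interpolate_embeddings(emb_a, emb_b, alpha):
    """Linearly blend two seen speakers' embeddings (assumed scheme); alpha in [0, 1]."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return [(1.0 - alpha) * a + alpha * b for a, b in zip(emb_a, emb_b)]

def generator_loss(reconstruction_loss, adversarial_loss, adv_weight):
    """Weighted sum of the two generator losses (weight placement is an assumption)."""
    return reconstruction_loss + adv_weight * adversarial_loss

# Hypothetical 2-dimensional embeddings, for illustration only.
new_speaker = interpolate_embeddings([0.0, 2.0], [2.0, 0.0], 0.5)
```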

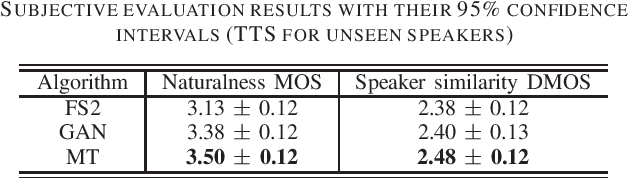

Head-Related Transfer Function Interpolation from Spatially Sparse Measurements Using Autoencoder with Source Position Conditioning

Jul 22, 2022

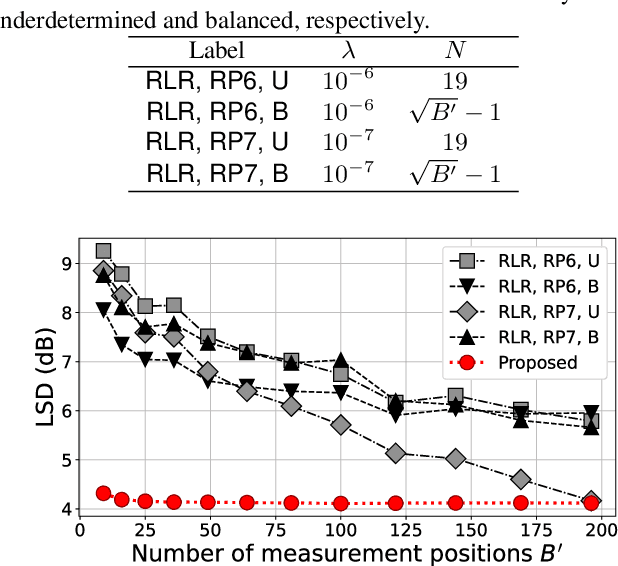

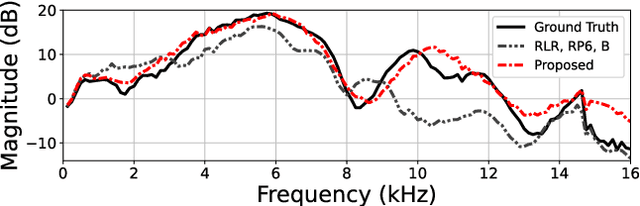

Abstract: We propose a method of head-related transfer function (HRTF) interpolation from sparsely measured HRTFs using an autoencoder with source position conditioning. The proposed method is drawn from an analogy between an HRTF interpolation method based on regularized linear regression (RLR) and an autoencoder. Through this analogy, we found that the key feature of the RLR-based method is that HRTFs are decomposed into source-position-dependent and source-position-independent factors. On the basis of this finding, we design the encoder and decoder so that their weights and biases are generated from source positions. Furthermore, we introduce an aggregation module that reduces the dependence of latent variables on source position for obtaining a source-position-independent representation of each subject. Numerical experiments show that the proposed method works well for unseen subjects and, with only one-eighth of the measurements, achieves an interpolation performance comparable to that of the RLR-based method.

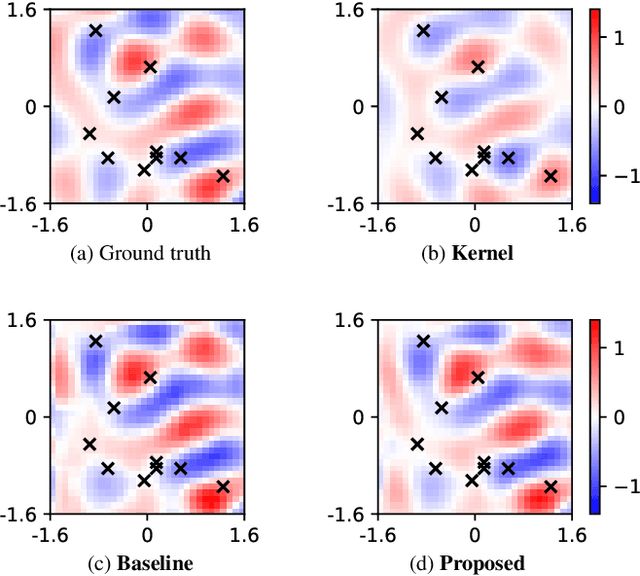

Physics-informed convolutional neural network with bicubic spline interpolation for sound field estimation

Jul 22, 2022

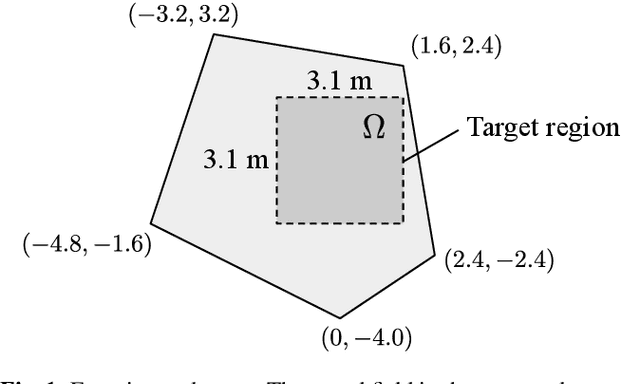

Abstract: A sound field estimation method based on a physics-informed convolutional neural network (PICNN) using spline interpolation is proposed. Most sound field estimation methods are based on wavefunction expansion, making the estimated function satisfy the Helmholtz equation. However, these methods rely only on physical properties; thus, they suffer from a significant deterioration of accuracy when the number of measurements is small. Recent learning-based methods based on neural networks have advantages in estimating from sparse measurements when training data are available. However, since physical properties are not taken into consideration, the estimated function can be a physically infeasible solution. We propose the application of PICNN to the sound field estimation problem by using a loss function that penalizes deviation from the Helmholtz equation. Since the output of the CNN is a spatially discretized pressure distribution, it is difficult to directly evaluate the Helmholtz-equation loss function. Therefore, we incorporate bicubic spline interpolation in the PICNN framework. Experimental results indicate that accurate and physically feasible estimation from sparse measurements can be achieved with the proposed method.
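
To make the idea of a Helmholtz-equation penalty concrete, the sketch below computes the mean squared residual of ∇²p + k²p on a uniform 2D grid. Note the substitution: it approximates the Laplacian with a five-point finite-difference stencil, not the bicubic spline interpolation the paper uses, and it treats the pressure as real-valued. The function name `helmholtz_loss` and the grid setup are hypothetical.

```python
import math

def helmholtz_loss(p, h, k):
    """Mean squared residual of laplacian(p) + k^2 * p over interior grid points.

    p: 2D list of real pressure values on a uniform grid with spacing h.
    The Laplacian uses a five-point stencil (a stand-in for the paper's splines).
    """
    rows, cols = len(p), len(p[0])
    total, count = 0.0, 0
    for i in range(1, rows - 1):
        for j in range(1, cols - 1):
            lap = (p[i + 1][j] + p[i - 1][j] + p[i][j + 1] + p[i][j - 1]
                   - 4.0 * p[i][j]) / (h * h)
            residual = lap + k * k * p[i][j]
            total += residual * residual
            count += 1
    return total / count

h, k, n = 0.01, 2.0, 20
# A plane wave cos(k x) satisfies the Helmholtz equation, so its loss is near zero.
plane_wave = [[math.cos(k * i * h) for _ in range(n)] for i in range(n)]
# A quadratic field does not satisfy it, so its loss is large.
quadratic = [[(i * h) ** 2 for _ in range(n)] for i in range(n)]
```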

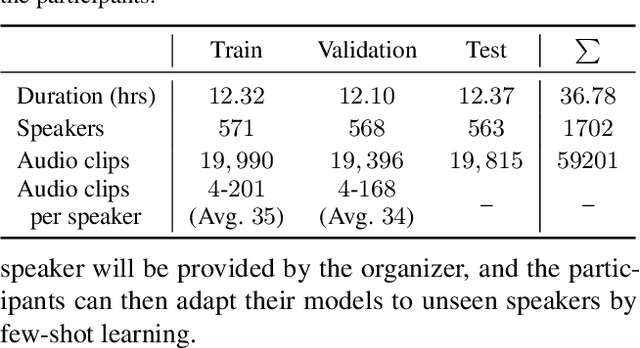

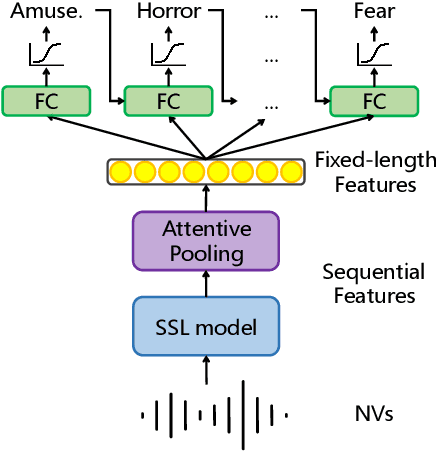

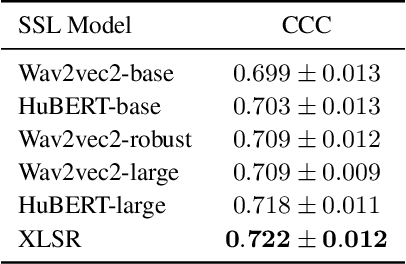

Exploring the Effectiveness of Self-supervised Learning and Classifier Chains in Emotion Recognition of Nonverbal Vocalizations

Jun 21, 2022

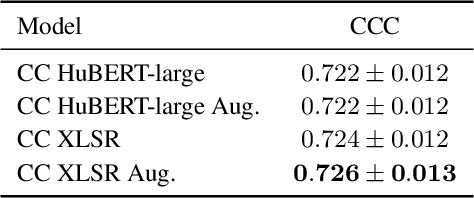

Abstract: We present an emotion recognition system for nonverbal vocalizations (NVs) submitted to the ExVo Few-Shot track of the ICML Expressive Vocalizations Competition 2022. The proposed method uses self-supervised learning (SSL) models to extract features from NVs and uses a classifier chain to model the label dependency between emotions. Experimental results demonstrate that the proposed method can significantly improve the performance of this task compared to several baseline methods. Our proposed method obtained a mean concordance correlation coefficient (CCC) of $0.725$ in the validation set and $0.739$ in the test set, while the best baseline method only obtained $0.554$ in the validation set. We publish our code at https://github.com/Aria-K-Alethia/ExVo to help others reproduce our experimental results.
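
For readers unfamiliar with the metric, here is a minimal sketch of the concordance correlation coefficient between one vector of predictions and one vector of targets, using the standard definition with population variances. The function name is hypothetical, and the sample values are illustrative only, not data from the paper. The "mean CCC" reported above would presumably be this quantity averaged over the emotion outputs.

```python
def concordance_cc(pred, target):
    """CCC = 2*cov / (var_pred + var_target + (mean_pred - mean_target)^2)."""
    n = len(pred)
    mean_p = sum(pred) / n
    mean_t = sum(target) / n
    var_p = sum((p - mean_p) ** 2 for p in pred) / n
    var_t = sum((t - mean_t) ** 2 for t in target) / n
    cov = sum((p - mean_p) * (t - mean_t) for p, t in zip(pred, target)) / n
    return 2.0 * cov / (var_p + var_t + (mean_p - mean_t) ** 2)

# Unlike Pearson correlation, CCC penalizes a constant offset between the two series.
shifted = concordance_cc([1.0, 2.0, 3.0], [2.0, 3.0, 4.0])
```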

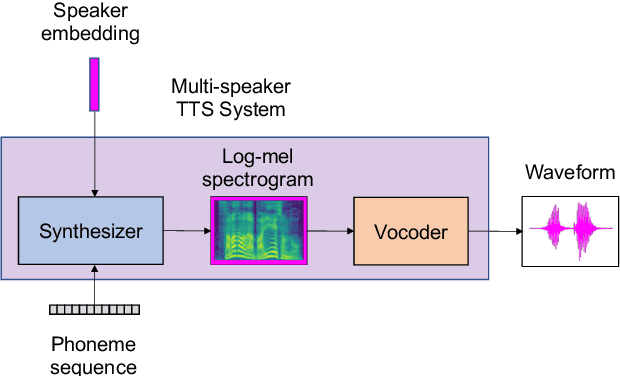

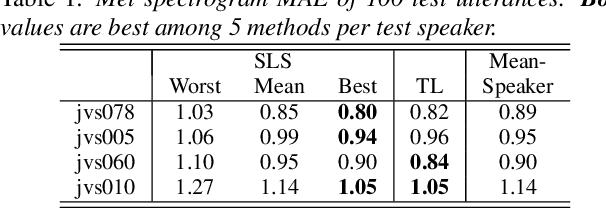

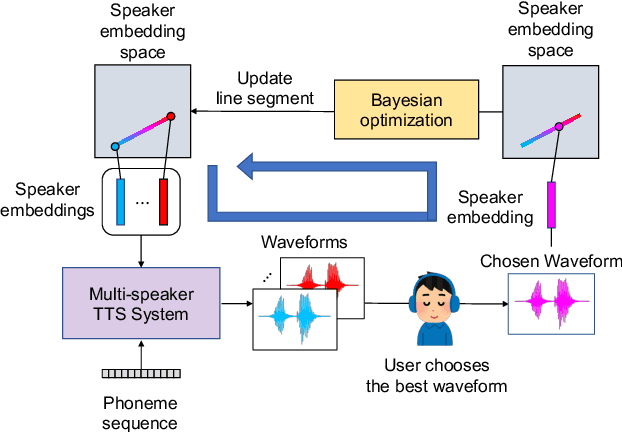

Human-in-the-loop Speaker Adaptation for DNN-based Multi-speaker TTS

Jun 21, 2022

Abstract: This paper proposes a human-in-the-loop speaker-adaptation method for multi-speaker text-to-speech. With a conventional speaker-adaptation method, a target speaker's embedding vector is extracted from his/her reference speech using a speaker encoder trained on a speaker-discriminative task. However, this method cannot obtain an embedding vector for the target speaker when the reference speech is unavailable. Our method is based on a human-in-the-loop optimization framework, in which a user explores the speaker-embedding space to find the target speaker's embedding. The proposed method uses a sequential line search algorithm that repeatedly asks a user to select a point on a line segment in the embedding space. To efficiently choose the best speech sample from multiple stimuli, we also developed a system in which a user can switch between multiple speakers' voices for each phoneme while looping an utterance. Experimental results indicate that the proposed method can achieve performance comparable to that of the conventional one in objective and subjective evaluations even if reference speech is not used directly as the input of a speaker encoder.
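
The loop of "select a point on a line segment, then repeat" can be sketched as follows. This is a toy simulation under strong assumptions: the user is replaced by a function that picks the candidate closest to a hidden target embedding, and each new segment ends at a random point, which is only a placeholder since the abstract does not describe how the lines are chosen. All names are hypothetical.

```python
import random

def point_on_segment(a, b, t):
    """Point at fraction t in [0, 1] along the segment from a to b."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]

def simulated_line_search(target, start, n_rounds=30, n_ticks=11, seed=0):
    """Repeatedly let a simulated user pick the best slider position on a segment."""
    rng = random.Random(seed)

    def sq_dist(p):
        return sum((x - y) ** 2 for x, y in zip(p, target))

    current = list(start)
    for _ in range(n_rounds):
        # Placeholder endpoint choice: a random point in [-1, 1]^d (assumption).
        other = [rng.uniform(-1.0, 1.0) for _ in current]
        candidates = [point_on_segment(current, other, i / (n_ticks - 1))
                      for i in range(n_ticks)]
        # Simulated user: picks the slider tick that sounds closest to the target.
        current = min(candidates, key=sq_dist)
    return current

target = [0.3, -0.2, 0.5]
start = [-0.9, 0.9, -0.9]
found = simulated_line_search(target, start)
```

Because the current point is always one of the slider positions, the simulated user never ends a round farther from the target than it started.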

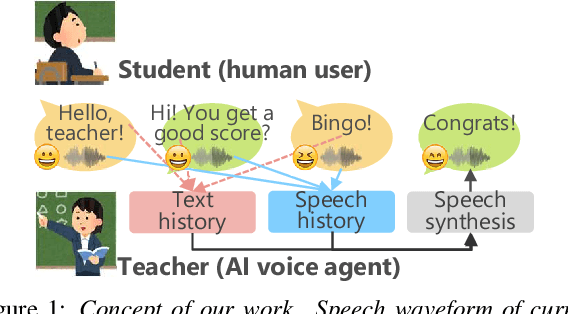

Acoustic Modeling for End-to-End Empathetic Dialogue Speech Synthesis Using Linguistic and Prosodic Contexts of Dialogue History

Jun 16, 2022

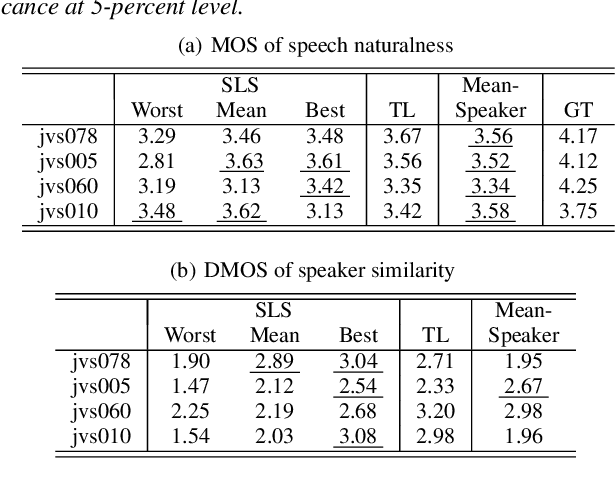

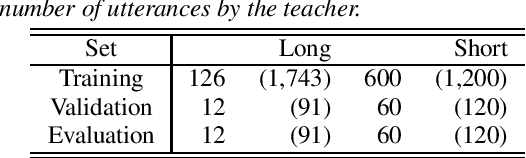

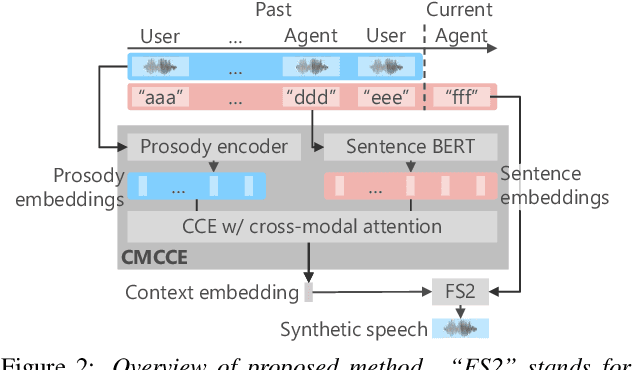

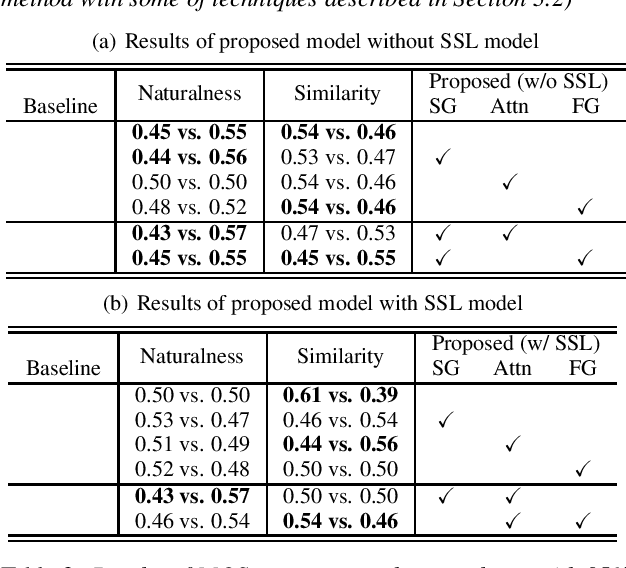

Abstract: We propose an end-to-end empathetic dialogue speech synthesis (DSS) model that considers both the linguistic and prosodic contexts of dialogue history. Empathy is the active attempt by humans to get inside the interlocutor in dialogue, and empathetic DSS is a technology to implement this act in spoken dialogue systems. Our model is conditioned on the history of linguistic and prosody features for predicting appropriate dialogue context. As such, it can be regarded as an extension of the conventional linguistic-feature-based dialogue history modeling. To train the empathetic DSS model effectively, we investigate 1) a self-supervised learning model pretrained with large speech corpora, 2) a style-guided training using a prosody embedding of the current utterance to be predicted by the dialogue context embedding, 3) a cross-modal attention to combine text and speech modalities, and 4) a sentence-wise embedding to achieve fine-grained prosody modeling rather than utterance-wise modeling. The evaluation results demonstrate that 1) simply considering prosodic contexts of the dialogue history does not improve the quality of speech in empathetic DSS and 2) introducing style-guided training and sentence-wise embedding modeling achieves higher speech quality than the conventional method.

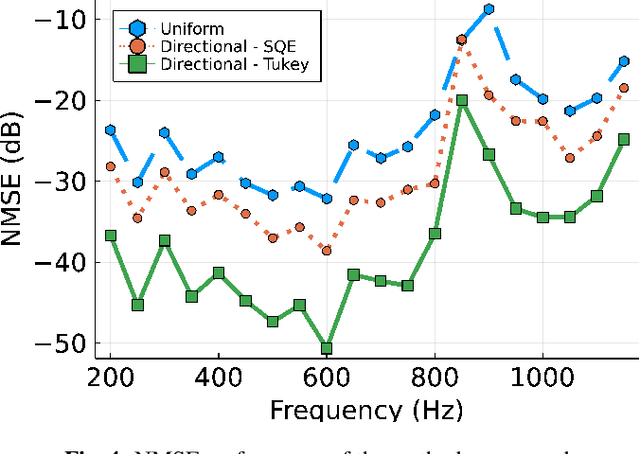

Region-to-region kernel interpolation of acoustic transfer function with directional weighting

May 05, 2022

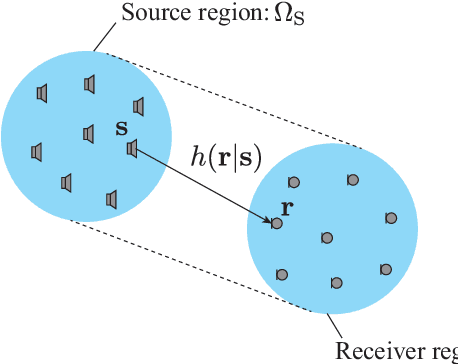

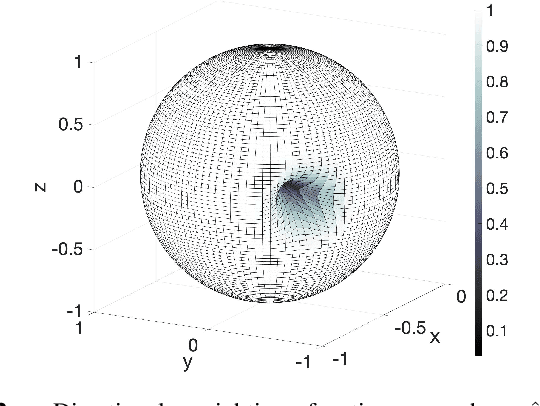

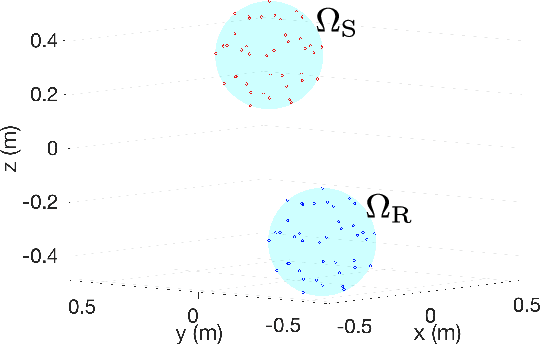

Abstract: A method of interpolating the acoustic transfer function (ATF) between regions that takes into account both the physical properties of the ATF and the directionality of region configurations is proposed. Most spatial ATF interpolation methods are limited to estimation in the region of receivers. A kernel method for region-to-region ATF interpolation makes it possible to estimate the ATFs for both source and receiver regions from a discrete set of ATF measurements. We formulate a new reproducing kernel Hilbert space and an associated kernel function incorporating directional weighting to enhance the interpolation accuracy. We also investigate hyperparameter optimization methods for this kernel function. Numerical experiments indicate that the proposed method outperforms the method without the use of directional weighting.

* To appear in ICASSP 2022 - 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)

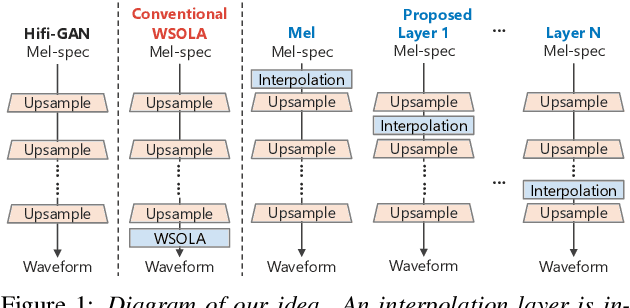

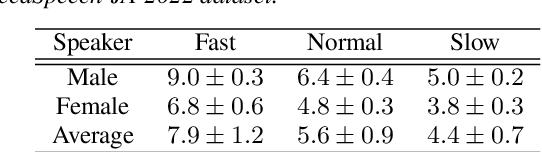

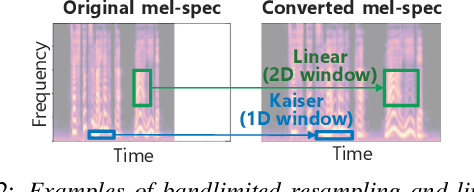

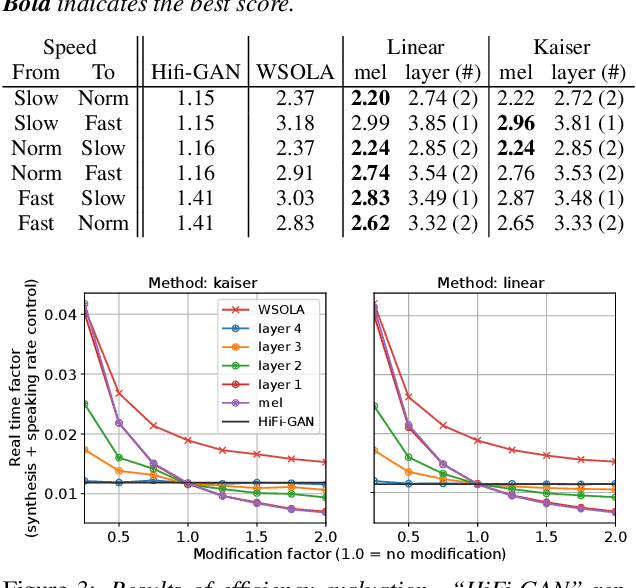

Speaking-Rate-Controllable HiFi-GAN Using Feature Interpolation

Apr 22, 2022

Abstract: This paper presents a speaking-rate-controllable HiFi-GAN neural vocoder. The original HiFi-GAN is a high-fidelity, computationally efficient, and tiny-footprint neural vocoder. We attempt to incorporate a speaking rate control function into HiFi-GAN for improving the accessibility of synthetic speech. The proposed method inserts a differentiable interpolation layer into the HiFi-GAN architecture. A signal resampling method and an image scaling method are implemented in the proposed method to warp the mel-spectrograms or hidden features of the neural vocoder. We also design and open-source a Japanese speech corpus containing three kinds of speaking rates to evaluate the proposed speaking rate control method. Experimental results of comprehensive objective and subjective evaluations demonstrate that 1) the proposed method outperforms a baseline time-scale modification algorithm in speech naturalness, 2) warping mel-spectrograms by image scaling achieves the best performance among all proposed methods, and 3) the proposed speaking rate control method can be incorporated into HiFi-GAN without losing computational efficiency.
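
The core idea of warping features along the time axis can be sketched with plain linear interpolation. This is a simplified stand-in for the signal resampling and image scaling methods named in the abstract, and it is not the paper's differentiable layer; the function name and the convention that `rate > 1` means faster speech are assumptions.

```python
def stretch_frames(frames, rate):
    """Resample a sequence of feature frames along time by linear interpolation.

    frames: list of frames, each a list of floats (e.g. mel bins).
    rate:   assumed convention: > 1 shortens (faster speech), < 1 lengthens.
    """
    if rate <= 0:
        raise ValueError("rate must be positive")
    n_in = len(frames)
    n_out = max(1, round(n_in / rate))
    out = []
    for j in range(n_out):
        # Map output index j onto the input time axis with aligned endpoints.
        pos = j * (n_in - 1) / (n_out - 1) if n_out > 1 else 0.0
        lo = int(pos)
        hi = min(lo + 1, n_in - 1)
        w = pos - lo
        out.append([(1.0 - w) * a + w * b for a, b in zip(frames[lo], frames[hi])])
    return out

# Six one-bin frames; doubling the speaking rate leaves three frames.
frames = [[0.0], [1.0], [2.0], [3.0], [4.0], [5.0]]
faster = stretch_frames(frames, 2.0)
```

In this sketch the warp is applied to the input features before vocoding; the abstract notes that the same kind of warp can also be applied to hidden features inside the vocoder.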
