Kenta Udagawa

Multi-Task Adversarial Training Algorithm for Multi-Speaker Neural Text-to-Speech

Sep 26, 2022

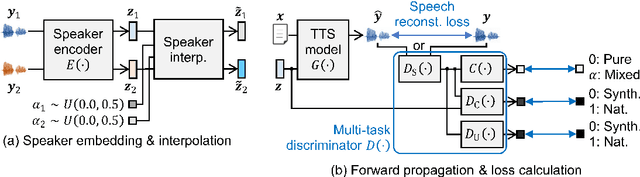

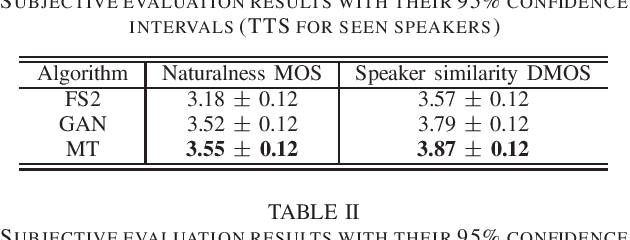

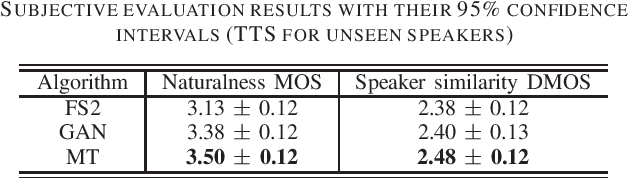

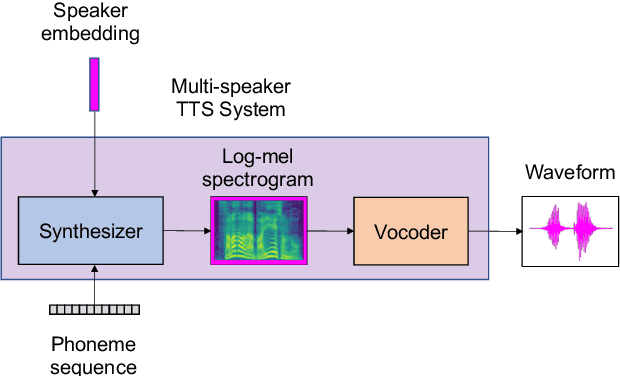

Abstract: We propose a novel training algorithm for a multi-speaker neural text-to-speech (TTS) model based on multi-task adversarial training. A conventional generative adversarial network (GAN)-based training algorithm significantly improves the quality of synthetic speech by reducing the statistical difference between natural and synthetic speech. However, the algorithm does not guarantee the generalization performance of the trained TTS model when synthesizing the voices of unseen speakers, i.e., speakers not included in the training data. Our algorithm alternately trains two deep neural networks: a multi-task discriminator and a multi-speaker neural TTS model (i.e., the generator of the GAN). The discriminator is trained not only to distinguish between natural and synthetic speech but also to verify whether the speaker of the input speech is existent or non-existent (i.e., newly generated by interpolating seen speakers' embedding vectors). Meanwhile, the generator is trained to minimize the weighted sum of the speech reconstruction loss and the adversarial loss for fooling the discriminator, which achieves high-quality multi-speaker TTS even when the target speaker is unseen. Experimental evaluation shows that our algorithm improves the quality of synthetic speech more than the conventional GANSpeech algorithm does.
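
The loss structure described in the abstract can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and is not taken from the paper: the function names, the use of binary cross-entropy for both discriminator tasks, the linear interpolation of embeddings, and the single weight `w_adv`. The discriminator outputs are represented as plain probabilities rather than network outputs.

```python
import math


def interpolate_embeddings(emb_a, emb_b, alpha):
    """Create a 'non-existent' speaker by linearly interpolating two
    seen speakers' embedding vectors (assumed interpolation scheme)."""
    return [(1.0 - alpha) * a + alpha * b for a, b in zip(emb_a, emb_b)]


def bce(prob, target):
    """Binary cross-entropy for one predicted probability."""
    eps = 1e-12
    prob = min(max(prob, eps), 1.0 - eps)  # avoid log(0)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


def discriminator_loss(p_nat_on_natural, p_nat_on_synthetic,
                       p_exist_on_seen, p_exist_on_interpolated):
    """Multi-task discriminator objective (assumed form):
    task 1: natural (1) vs. synthetic (0) speech,
    task 2: existent (1) vs. non-existent, i.e. interpolated (0), speaker."""
    real_fake = bce(p_nat_on_natural, 1.0) + bce(p_nat_on_synthetic, 0.0)
    speaker = bce(p_exist_on_seen, 1.0) + bce(p_exist_on_interpolated, 0.0)
    return real_fake + speaker


def generator_loss(recon_loss, p_nat_on_synthetic, p_exist_on_synthetic,
                   w_adv=1.0):
    """Weighted sum of the reconstruction loss and the adversarial loss.
    The generator tries to make the discriminator output 'natural' and
    'existent' for its synthetic speech. w_adv is a hypothetical weight."""
    adv = bce(p_nat_on_synthetic, 1.0) + bce(p_exist_on_synthetic, 1.0)
    return recon_loss + w_adv * adv


if __name__ == "__main__":
    # Midpoint between two toy 2-dimensional speaker embeddings.
    print(interpolate_embeddings([0.0, 2.0], [2.0, 0.0], 0.5))
    # An undecided discriminator (0.5 everywhere) still penalizes the generator.
    print(generator_loss(recon_loss=1.0, p_nat_on_synthetic=0.5,
                         p_exist_on_synthetic=0.5, w_adv=2.0))
```

In alternating training, one step would reduce `discriminator_loss` with the generator fixed, and the next would reduce `generator_loss` with the discriminator fixed.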

Human-in-the-loop Speaker Adaptation for DNN-based Multi-speaker TTS

Jun 21, 2022

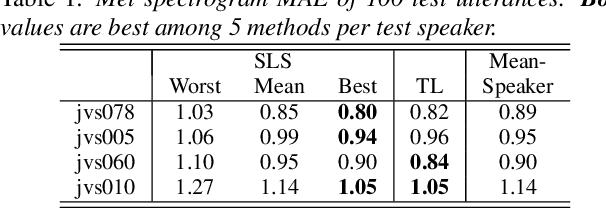

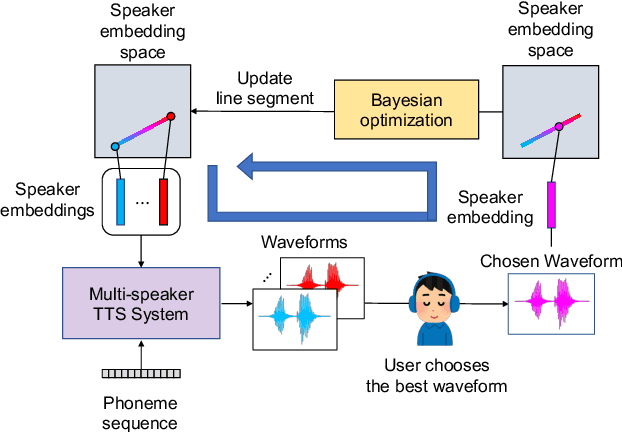

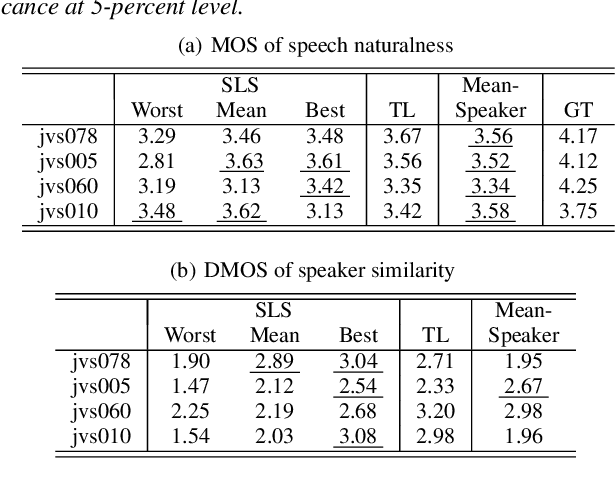

Abstract: This paper proposes a human-in-the-loop speaker-adaptation method for multi-speaker text-to-speech. With a conventional speaker-adaptation method, a target speaker's embedding vector is extracted from the speaker's reference speech using a speaker encoder trained on a speaker-discriminative task. However, this method cannot obtain an embedding vector for the target speaker when reference speech is unavailable. Our method is based on a human-in-the-loop optimization framework, in which a user explores the speaker-embedding space to find the target speaker's embedding. The proposed method uses a sequential line search algorithm that repeatedly asks the user to select a point on a line segment in the embedding space. To help the user efficiently choose the best speech sample from multiple stimuli, we also developed a system in which the user can switch between multiple speakers' voices for each phoneme while looping an utterance. Experimental results indicate that the proposed method can achieve performance comparable to that of the conventional method in objective and subjective evaluations, even when reference speech is not used directly as the input to a speaker encoder.
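
The interaction loop described in the abstract can be sketched as follows. This is a simplified illustration under stated assumptions and is not the authors' implementation: the other end of each line segment is drawn at random here, whereas a real sequential line search would typically pick it with a model of the user's preferences, and the human listener is replaced by a hypothetical simulated user who always picks the candidate closest to a hidden target embedding. All function names are invented for this example.

```python
import math
import random


def points_on_segment(start, end, n_points):
    """Return n_points evenly spaced points from start to end, inclusive."""
    return [[s + (e - s) * i / (n_points - 1) for s, e in zip(start, end)]
            for i in range(n_points)]


def make_simulated_user(target):
    """Stand-in for the human listener (assumption): picks the index of
    the candidate embedding closest to a hidden target embedding."""
    def choose(candidates):
        return min(range(len(candidates)),
                   key=lambda i: math.dist(candidates[i], target))
    return choose


def sequential_line_search(choose, dim, n_iters=20, n_points=11, seed=0):
    """Repeatedly ask `choose` to select a point on a line segment.
    Each segment runs from the current best point to a random point
    (simplification: the random endpoint is an assumption)."""
    rng = random.Random(seed)
    best = [0.0] * dim  # start at the origin of the embedding space
    for _ in range(n_iters):
        other_end = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
        candidates = points_on_segment(best, other_end, n_points)
        best = candidates[choose(candidates)]  # the user's selection
    return best


if __name__ == "__main__":
    target = [0.3, -0.5, 0.8]
    user = make_simulated_user(target)
    found = sequential_line_search(user, dim=3)
    print("distance to target:", math.dist(found, target))
```

Because every segment starts at the current best point, the simulated user can always keep the current embedding, so the distance to the target never increases from one query to the next.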
