Hanzhe Teng

Adaptive LiDAR Odometry and Mapping for Autonomous Agricultural Mobile Robots in Unmanned Farms

Dec 03, 2024

Abstract:Unmanned and intelligent agricultural systems are crucial for enhancing agricultural efficiency and for helping mitigate the effect of labor shortage. However, unlike urban environments, agricultural fields impose distinct and unique challenges on autonomous robotic systems, such as the unstructured and dynamic nature of the environment, the rough and uneven terrain, and the resulting non-smooth robot motion. To address these challenges, this work introduces an adaptive LiDAR odometry and mapping framework tailored for autonomous agricultural mobile robots operating in complex agricultural environments. The proposed framework consists of a robust LiDAR odometry algorithm based on dense Generalized-ICP scan matching, and an adaptive mapping module that considers motion stability and point cloud consistency for selective map updates. The key design principle of this framework is to prioritize the incremental consistency of the map by rejecting motion-distorted points and sparse dynamic objects, which in turn leads to high accuracy in odometry estimated from scan matching against the map. The effectiveness of the proposed method is validated via extensive evaluation against state-of-the-art methods on field datasets collected in real-world agricultural environments featuring various planting types, terrain types, and robot motion profiles. Results demonstrate that our method can achieve accurate odometry estimation and mapping results consistently and robustly across diverse agricultural settings, whereas other methods are sensitive to abrupt robot motion and accumulated drift in unstructured environments. Further, the computational efficiency of our method is competitive compared with other methods. The source code of the developed method and the associated field dataset are publicly available at https://github.com/UCR-Robotics/AG-LOAM.

On-the-Go Tree Detection and Geometric Traits Estimation with Ground Mobile Robots in Fruit Tree Groves

Apr 03, 2024

Abstract:By-tree information gathering is an essential task in precision agriculture achieved by ground mobile sensors, but it can be time- and labor-intensive. In this paper we present an algorithmic framework to perform real-time and on-the-go detection of trees and key geometric characteristics (namely, width and height) with wheeled mobile robots in the field. Our method is based on the fusion of 2D domain-specific data (normalized difference vegetation index [NDVI] acquired via a red-green-near-infrared [RGN] camera) and 3D LiDAR point clouds, via a customized tree landmark association and parameter estimation algorithm. The proposed system features a multi-modal and entropy-based landmark correspondence approach, integrated into an underlying Kalman filter system to recognize the surrounding trees and jointly estimate their spatial and vegetation-based characteristics. Realistic simulated tests are used to evaluate our proposed algorithm's behavior in a variety of settings. Physical experiments in agricultural fields help validate our method's efficacy in acquiring accurate by-tree information on-the-go and in real-time by employing only onboard computational and sensing resources.
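For readers unfamiliar with NDVI, the index mentioned above is conventionally defined per pixel as (NIR - Red) / (NIR + Red). The following is a minimal sketch of that standard formula only, not the paper's pipeline; the function name `ndvi`, the `eps` guard, and the assumption that the red and near-infrared channels arrive as separate arrays of equal shape are all illustrative choices.

```python
import numpy as np


def ndvi(nir, red, eps=1e-6):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red).

    `nir` and `red` are assumed to be same-shaped arrays of reflectance
    or intensity values. Pixels whose denominator is (near) zero are set
    to 0.0 to avoid division by zero; this guard is an assumption of the
    sketch, not something specified in the abstract.
    """
    nir = np.asarray(nir, dtype=np.float64)
    red = np.asarray(red, dtype=np.float64)
    denom = nir + red
    out = np.zeros_like(denom)
    valid = np.abs(denom) > eps
    out[valid] = (nir[valid] - red[valid]) / denom[valid]
    return out
```

Values close to 1 typically indicate dense, healthy vegetation, while values near 0 or below indicate soil, water, or man-made surfaces, which is why the index is useful for separating tree canopy from background.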

Multimodal Dataset for Localization, Mapping and Crop Monitoring in Citrus Tree Farms

Sep 29, 2023

Abstract:In this work we introduce the CitrusFarm dataset, a comprehensive multimodal sensory dataset collected by a wheeled mobile robot operating in agricultural fields. The dataset offers stereo RGB images with depth information, as well as monochrome, near-infrared and thermal images, presenting diverse spectral responses crucial for agricultural research. Furthermore, it provides a range of navigational sensor data encompassing wheel odometry, LiDAR, inertial measurement unit (IMU), and GNSS with Real-Time Kinematic (RTK) as the centimeter-level positioning ground truth. The dataset comprises seven sequences collected in three fields of citrus trees, featuring various tree species at different growth stages, distinctive planting patterns, as well as varying daylight conditions. It spans a total operation time of 1.7 hours, covers a distance of 7.5 km, and constitutes 1.3 TB of data. We anticipate that this dataset can facilitate the development of autonomous robot systems operating in agricultural tree environments, especially for localization, mapping and crop monitoring tasks. Moreover, the rich sensing modalities offered in this dataset can also support research in a range of robotics and computer vision tasks, such as place recognition, scene understanding, object detection and segmentation, and multimodal learning. The dataset, in conjunction with related tools and resources, is made publicly available at https://github.com/UCR-Robotics/Citrus-Farm-Dataset.

Centroid Distance Keypoint Detector for Colored Point Clouds

Oct 04, 2022

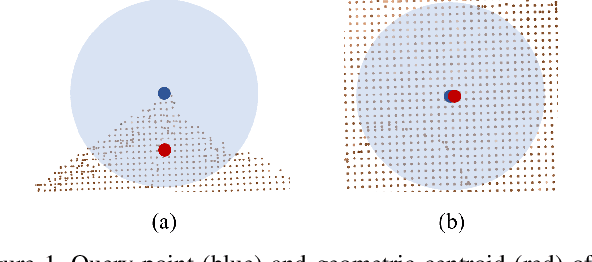

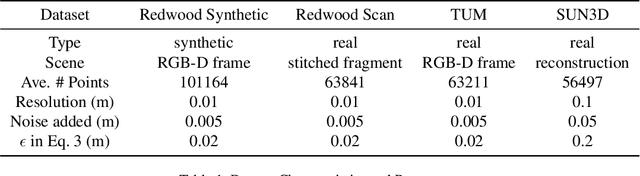

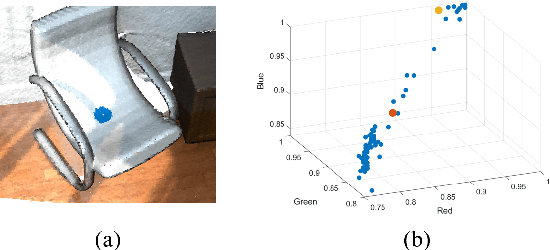

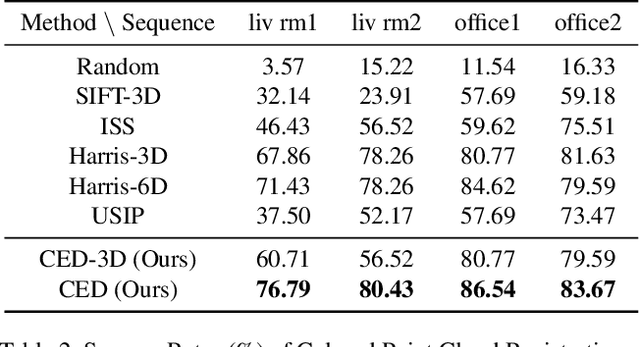

Abstract:Keypoint detection serves as the basis for many computer vision and robotics applications. Despite the fact that colored point clouds can be readily obtained, most existing keypoint detectors extract only geometry-salient keypoints, which can impede the overall performance of systems that intend to (or have the potential to) leverage color information. To promote advances in such systems, we propose an efficient multi-modal keypoint detector that can extract both geometry-salient and color-salient keypoints in colored point clouds. The proposed CEntroid Distance (CED) keypoint detector comprises an intuitive and effective saliency measure, the centroid distance, that can be used in both 3D space and color space, and a multi-modal non-maximum suppression algorithm that can select keypoints with high saliency in two or more modalities. The proposed saliency measure leverages directly the distribution of points in a local neighborhood and does not require normal estimation or eigenvalue decomposition. We evaluate the proposed method in terms of repeatability and computational efficiency (i.e. running time) against state-of-the-art keypoint detectors on both synthetic and real-world datasets. Results demonstrate that our proposed CED keypoint detector requires minimal computational time while attaining high repeatability. To showcase one of the potential applications of the proposed method, we further investigate the task of colored point cloud registration. Results suggest that our proposed CED detector outperforms state-of-the-art handcrafted and learning-based keypoint detectors in the evaluated scenes. The C++ implementation of the proposed method is made publicly available at https://github.com/UCR-Robotics/CED_Detector.
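The idea of a centroid-distance saliency measure can be sketched as follows: for each point, take its neighbors within a radius, compute their centroid, and measure how far the point lies from that centroid. This is a simplified illustration under stated assumptions, not the released C++ implementation; the function name `centroid_distance`, the brute-force neighbor search, the optional `values` argument, and the absence of any normalization are choices of this sketch.

```python
import numpy as np


def centroid_distance(points, radius, values=None):
    """Distance from each point to the centroid of its local neighborhood.

    Neighbors are found in 3D space (all points within `radius`, the
    point itself included). The distance is measured in the space of
    `values`: pass nothing to measure it in 3D, or pass per-point colors
    (e.g. RGB) to measure it in color space. Brute-force O(N^2) search,
    intended only for small illustrative inputs.
    """
    points = np.asarray(points, dtype=np.float64)
    values = points if values is None else np.asarray(values, dtype=np.float64)
    # Pairwise Euclidean distances between all 3D positions.
    dists = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    saliency = np.zeros(len(points))
    for i in range(len(points)):
        mask = dists[i] <= radius  # always contains point i itself
        centroid = values[mask].mean(axis=0)
        saliency[i] = np.linalg.norm(values[i] - centroid)
    return saliency
```

On a flat, uniformly sampled patch an interior point coincides with the centroid of its neighbors and scores zero, whereas a point on a corner or edge is offset from its neighborhood centroid and scores higher. Only means and norms are needed, which is consistent with the abstract's remark that no normal estimation or eigenvalue decomposition is required.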

Development and Testing of a Novel Automated Insect Capture Module for Sample Collection and Transfer

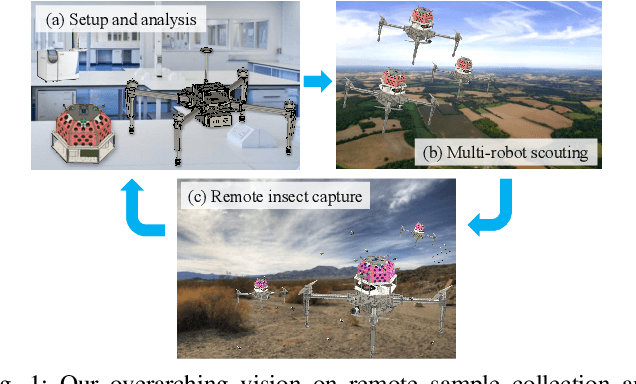

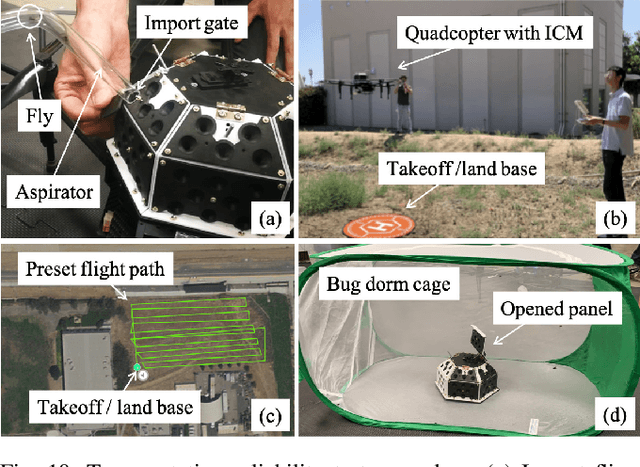

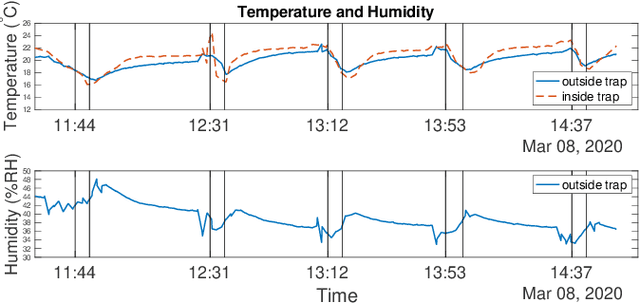

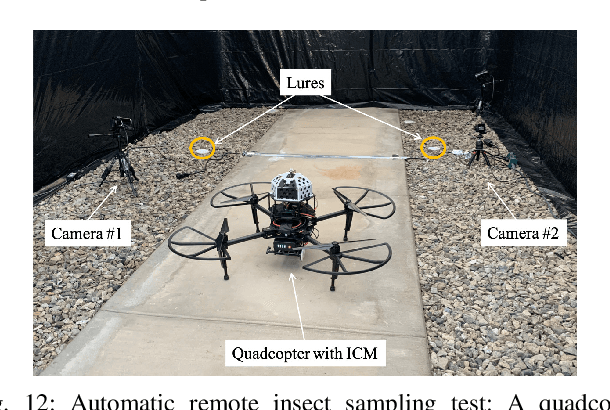

Aug 29, 2020

Abstract:There exists an urgent need for efficient tools in disease surveillance to help model and predict the spread of disease. The transmission of insect-borne diseases poses a serious concern to public health officials and the medical and research community at large. In the modeling of this spread, we face bottlenecks in (1) the frequency at which we are able to sample insect vectors in environments that are prone to propagating disease, (2) the manual labor needed to set up and retrieve surveillance devices like traps, and (3) the return time in analyzing insect samples and determining if an infectious disease is spreading in a region. To help address these bottlenecks, we present in this paper the design, fabrication, and testing of a novel automated insect capture module (ICM) or trap that aims to improve the rate of transferring samples collected from the environment via aerial robots. The ICM features an ultraviolet light attractant, a passive capture mechanism, panels which can open and close for access to insects, and a small onboard computer for automated operation and data logging. At the same time, the ICM is designed to be accessible; it is small-scale, lightweight and low-cost, and can be integrated with commercially available aerial robots. Indoor and outdoor experimentation validates the ICM's feasibility in insect capturing and safe transportation. The device can help bring us one step closer toward achieving fully autonomous and scalable epidemiology by leveraging autonomous robot technology to aid the medical and research community.

Online Exploration and Coverage Planning in Unknown Obstacle-Cluttered Environments

Jun 30, 2020

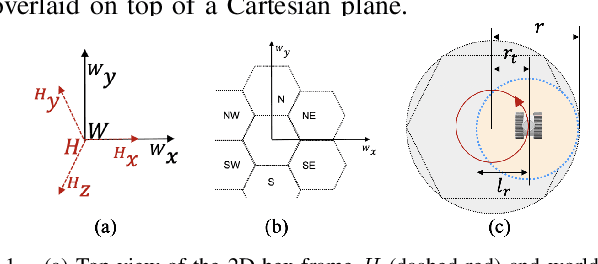

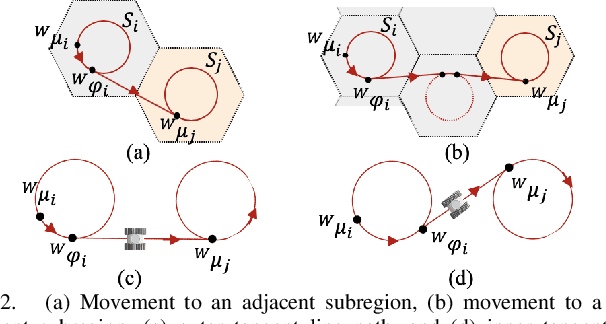

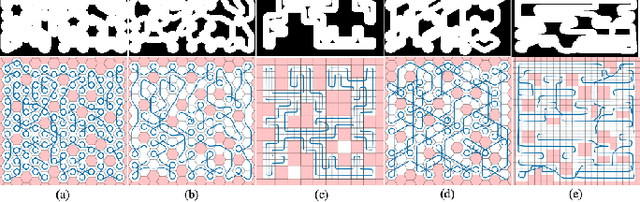

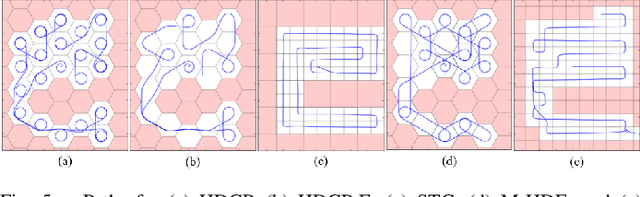

Abstract:Online coverage planning can be useful in applications like field monitoring and search and rescue. Without prior information about the environment, achieving resolution-complete coverage while considering the non-holonomic mobility constraints of commonly used vehicles (e.g., wheeled robots) remains a challenge. In this paper, we propose a hierarchical, hex-decomposition-based coverage planning algorithm for unknown, obstacle-cluttered environments. The proposed approach ensures resolution-complete coverage, can be tuned to achieve fast exploration, and plans smooth paths for Dubins vehicles to follow at constant velocity in real-time. Gazebo simulations and hardware experiments with a non-holonomic wheeled robot show that our approach can successfully trade off between coverage and exploration speed and can outperform existing online coverage algorithms in terms of total covered area or exploration speed, depending on how it is tuned.
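To give a concrete picture of what a hexagonal decomposition looks like, the sketch below generates the centers of a single-level hexagonal tiling over a rectangular area. It illustrates only the basic tiling geometry and says nothing about the paper's hierarchy, obstacle handling, or Dubins path planning; the function name `hex_centers`, the pointy-top orientation, and the rectangular workspace are assumptions made for illustration.

```python
import math


def hex_centers(width, height, cell_radius):
    """Centers of pointy-top hexagonal cells covering [0, width] x [0, height].

    `cell_radius` is the circumradius (center to vertex) of each hexagon.
    Adjacent centers in a row are sqrt(3) * cell_radius apart, rows are
    1.5 * cell_radius apart, and odd rows are shifted by half a column.
    """
    dx = math.sqrt(3.0) * cell_radius  # spacing between centers in a row
    dy = 1.5 * cell_radius             # spacing between rows
    centers = []
    row = 0
    y = 0.0
    while y <= height:
        x = dx / 2.0 if row % 2 else 0.0  # stagger odd rows
        while x <= width:
            centers.append((x, y))
            x += dx
        row += 1
        y = row * dy
    return centers
```

A property of this layout is that every cell center is equidistant from all six of its neighbors, at a distance of sqrt(3) times the cell radius, unlike a square grid where diagonal neighbors are farther away than axis-aligned ones.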
