Dimitrios Chatziparaschis

Adaptive LiDAR Odometry and Mapping for Autonomous Agricultural Mobile Robots in Unmanned Farms

Dec 03, 2024

Abstract:Unmanned and intelligent agricultural systems are crucial for enhancing agricultural efficiency and for helping mitigate the effect of labor shortage. However, unlike urban environments, agricultural fields impose distinct and unique challenges on autonomous robotic systems, such as the unstructured and dynamic nature of the environment, the rough and uneven terrain, and the resulting non-smooth robot motion. To address these challenges, this work introduces an adaptive LiDAR odometry and mapping framework tailored for autonomous agricultural mobile robots operating in complex agricultural environments. The proposed framework consists of a robust LiDAR odometry algorithm based on dense Generalized-ICP scan matching, and an adaptive mapping module that considers motion stability and point cloud consistency for selective map updates. The key design principle of this framework is to prioritize the incremental consistency of the map by rejecting motion-distorted points and sparse dynamic objects, which in turn leads to high accuracy in odometry estimated from scan matching against the map. The effectiveness of the proposed method is validated via extensive evaluation against state-of-the-art methods on field datasets collected in real-world agricultural environments featuring various planting types, terrain types, and robot motion profiles. Results demonstrate that our method can achieve accurate odometry estimation and mapping results consistently and robustly across diverse agricultural settings, whereas other methods are sensitive to abrupt robot motion and accumulated drift in unstructured environments. Further, the computational efficiency of our method is competitive compared with other methods. The source code of the developed method and the associated field dataset are publicly available at https://github.com/UCR-Robotics/AG-LOAM.

Augmented-Reality Enabled Crop Monitoring with Robot Assistance

Nov 05, 2024

Abstract:The integration of augmented reality (AR), extended reality (XR), and virtual reality (VR) technologies in agriculture has shown significant promise in enhancing various agricultural practices. Mobile robots have also been adopted as assessment tools in precision agriculture, improving economic efficiency and productivity, and minimizing undesired effects such as weeds and pests. Despite considerable work on both fronts, the combination of a versatile User Interface (UI) provided by an AR headset with the integration and direct interaction and control of a mobile field robot has not yet been fully explored or standardized. This work aims to address this gap by providing real-time data input and control output of a mobile robot for precision agriculture through a virtual environment enabled by an AR headset interface. The system leverages open-source computational tools and off-the-shelf hardware for effective integration. Distinctive case studies are presented where growers or technicians can interact with a legged robot via an AR headset and a UI. Users can teleoperate the robot to gather information in an area of interest, request real-time graphed status of an area, or have the robot autonomously navigate to selected areas for measurement updates. The proposed system utilizes a custom local navigation method with a fixed holographic coordinate system in combination with QR codes. This step toward fusing AR and robotics in agriculture aims to provide practical solutions for real-time data management and control enabled by human-robot interaction. The implementation can be extended to various robot applications in agriculture and beyond, promoting a unified framework for on-demand and autonomous robot operation in the field.

Adaptive Environment-Aware Robotic Arm Reaching Based on a Bio-Inspired Neurodynamical Computational Framework

Jul 16, 2024

Abstract:Bio-inspired robotic systems are capable of adaptive learning, scalable control, and efficient information processing. Enabling real-time decision-making for such systems is critical to respond to dynamic changes in the environment. We focus on dynamic target tracking in open areas using a robotic six-degree-of-freedom manipulator with a bird-eye view camera for visual feedback, and by deploying the Neurodynamical Computational Framework (NeuCF). NeuCF is a recently developed bio-inspired model for target tracking based on Dynamic Neural Fields (DNFs) and Stochastic Optimal Control (SOC) theory. It has been trained for reaching actions on a planar surface toward localized visual beacons, and it can re-target or generate stop signals on the fly based on changes in the environment (e.g., a new target has emerged, or an existing one has been removed). We evaluated our system over various target-reaching scenarios. In all experiments, NeuCF had high end-effector positional accuracy, generated smooth trajectories, and provided reduced path lengths compared with a baseline cubic polynomial trajectory generator. In all, the developed system offers a robust and dynamic-aware robotic manipulation approach that affords real-time decision-making.
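For readers unfamiliar with the baseline mentioned above, the following is a minimal sketch of a generic rest-to-rest cubic polynomial trajectory for a single coordinate. It is a textbook formulation and not necessarily the exact baseline used in the paper; the function name `cubic_trajectory` and its parameters are hypothetical.

```python
def cubic_trajectory(q0, qf, duration, t):
    """Return (position, velocity) at time t for a rest-to-rest cubic.

    Assumes zero velocity at both ends, which is one common choice
    for a cubic baseline; the paper's boundary conditions may differ.
    """
    if duration <= 0:
        raise ValueError("duration must be positive")
    # Normalized time, clamped to [0, 1] so queries outside the
    # motion window return the start or goal state.
    s = min(max(t / duration, 0.0), 1.0)
    delta = qf - q0
    position = q0 + delta * (3 * s**2 - 2 * s**3)
    velocity = delta * (6 * s - 6 * s**2) / duration
    return position, velocity


if __name__ == "__main__":
    # Sample a 2-second move from 0.0 to 1.0 at five instants.
    for k in range(5):
        t = 0.5 * k
        print(t, cubic_trajectory(0.0, 1.0, 2.0, t))
```

Applying the same function independently to each Cartesian coordinate of the end effector would give a straight-line path with a smooth velocity profile, which is the kind of reference a learned or neurodynamical planner is typically compared against.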

On-the-Go Tree Detection and Geometric Traits Estimation with Ground Mobile Robots in Fruit Tree Groves

Apr 03, 2024

Abstract:By-tree information gathering is an essential task in precision agriculture achieved by ground mobile sensors, but it can be time- and labor-intensive. In this paper we present an algorithmic framework to perform real-time and on-the-go detection of trees and key geometric characteristics (namely, width and height) with wheeled mobile robots in the field. Our method is based on the fusion of 2D domain-specific data (normalized difference vegetation index [NDVI] acquired via a red-green-near-infrared [RGN] camera) and 3D LiDAR point clouds, via a customized tree landmark association and parameter estimation algorithm. The proposed system features a multi-modal and entropy-based landmark correspondences approach, integrated into an underlying Kalman filter system to recognize the surrounding trees and jointly estimate their spatial and vegetation-based characteristics. Realistic simulated tests are used to evaluate our proposed algorithm's behavior in a variety of settings. Physical experiments in agricultural fields help validate our method's efficacy in acquiring accurate by-tree information on-the-go and in real-time by employing only onboard computational and sensing resources.
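As a brief illustration of the 2D vegetation cue mentioned above, NDVI is conventionally computed per pixel as (NIR - Red) / (NIR + Red). The sketch below assumes reflectance-like band values stored as nested lists; the function names and the zero-denominator handling are illustrative assumptions, not the paper's implementation.

```python
def ndvi(nir, red, eps=1e-9):
    """Standard NDVI for one pixel: (NIR - Red) / (NIR + Red).

    Returns 0.0 when both bands are (near) zero to avoid division
    by zero; this fallback is an assumption made for this sketch.
    """
    denom = nir + red
    if abs(denom) < eps:
        return 0.0
    return (nir - red) / denom


def ndvi_image(nir_band, red_band):
    """Apply ndvi() element-wise to two equally sized 2D band arrays."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


if __name__ == "__main__":
    # Hypothetical 2x2 bands: left column vegetation-like, right column soil-like.
    nir_band = [[0.8, 0.3], [0.7, 0.25]]
    red_band = [[0.2, 0.3], [0.1, 0.25]]
    print(ndvi_image(nir_band, red_band))
```

Healthy vegetation generally yields NDVI values well above zero, so a map like this can help separate canopy pixels from soil before associating them with LiDAR points.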

Robot-assisted Soil Apparent Electrical Conductivity Measurements in Orchards

Sep 10, 2023

Abstract:Soil apparent electrical conductivity (ECa) is a vital metric in Precision Agriculture and Smart Farming, as it is used for optimal water content management, geological mapping, and yield prediction. Several existing methods for estimating soil electrical conductivity are available, including physical soil sampling, ground sensor installation and monitoring, and the use of sensors that can obtain proximal ECa estimates. However, such methods can be very laborious, too costly, or both for practical use over larger field canopies. Robot-assisted ECa measurements, in contrast, may offer a scalable and cost-effective solution. In this work, we present one such solution that involves a ground mobile robot equipped with a customized and adjustable platform to hold an Electromagnetic Induction (EMI) sensor to perform semi-autonomous and on-demand ECa measurements under various field conditions. The platform is designed to be easily re-configurable in terms of sensor placement; results from testing for traversability and robot-to-sensor interference across multiple case studies help establish appropriate tradeoffs for sensor placement. Further, a developed simulation software package enables rapid and accessible estimation of terrain traversability in relation to desired EMI sensor placement. Extensive experimental evaluation across different fields demonstrates that the obtained robot-assisted ECa measurements are of high linearity compared with the ground truth (data collected manually by a handheld EMI sensor), scoring more than $90\%$ in Pearson correlation coefficient in both plot measurements and estimated ECa maps generated by kriging interpolation. The proposed robotic solution supports autonomous behavior development in the field since it utilizes the ROS navigation stack along with the RTK GNSS positioning data and features various ranging sensors.
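The linearity metric cited above, the Pearson correlation coefficient, can be computed as in the following minimal sketch. The sample readings are invented purely for illustration and are not data from the paper; the function name `pearson_r` is likewise hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of centered products and squares.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical ECa readings (arbitrary units), NOT from the paper.
    handheld = [10.2, 12.5, 15.1, 18.0, 21.3]
    robot = [10.8, 12.1, 15.9, 17.4, 22.0]
    print(round(pearson_r(handheld, robot), 3))
```

A value above 0.9, as reported in the abstract, would indicate that the robot-mounted readings track the handheld readings nearly linearly, even if a constant offset or scale factor separates the two.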

Centroid Distance Keypoint Detector for Colored Point Clouds

Oct 04, 2022

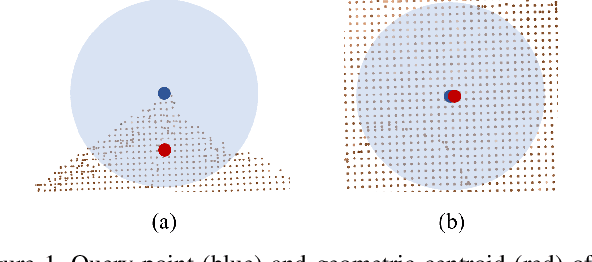

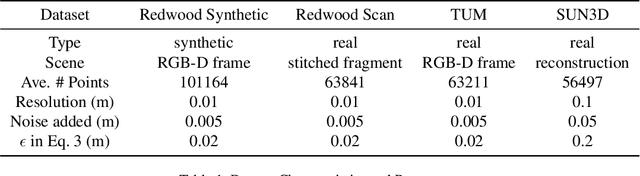

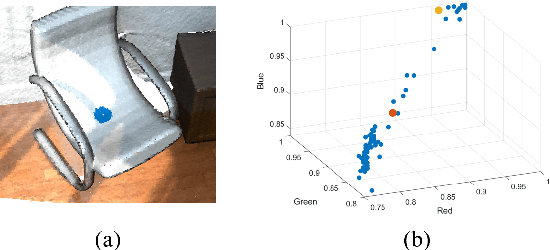

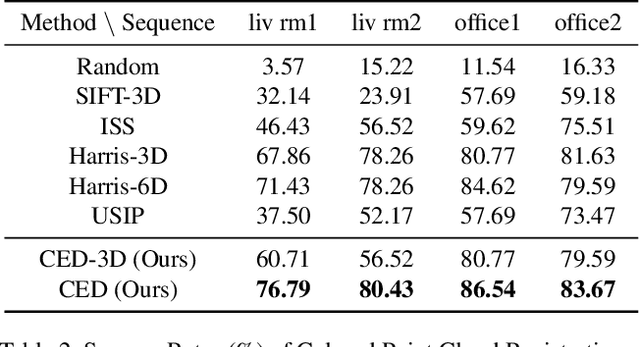

Abstract:Keypoint detection serves as the basis for many computer vision and robotics applications. Despite the fact that colored point clouds can be readily obtained, most existing keypoint detectors extract only geometry-salient keypoints, which can impede the overall performance of systems that intend to (or have the potential to) leverage color information. To promote advances in such systems, we propose an efficient multi-modal keypoint detector that can extract both geometry-salient and color-salient keypoints in colored point clouds. The proposed CEntroid Distance (CED) keypoint detector comprises an intuitive and effective saliency measure, the centroid distance, that can be used in both 3D space and color space, and a multi-modal non-maximum suppression algorithm that can select keypoints with high saliency in two or more modalities. The proposed saliency measure leverages directly the distribution of points in a local neighborhood and does not require normal estimation or eigenvalue decomposition. We evaluate the proposed method in terms of repeatability and computational efficiency (i.e. running time) against state-of-the-art keypoint detectors on both synthetic and real-world datasets. Results demonstrate that our proposed CED keypoint detector requires minimal computational time while attaining high repeatability. To showcase one of the potential applications of the proposed method, we further investigate the task of colored point cloud registration. Results suggest that our proposed CED detector outperforms state-of-the-art handcrafted and learning-based keypoint detectors in the evaluated scenes. The C++ implementation of the proposed method is made publicly available at https://github.com/UCR-Robotics/CED_Detector.
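To make the idea of a centroid-distance saliency measure concrete, here is a minimal sketch under one plausible reading of the abstract: the saliency of a point is its distance to the centroid of its neighbors within a fixed radius. The exact definition, normalization, and neighbor search used by CED may differ; the function name and the brute-force neighbor search are assumptions made for this example.

```python
import math


def centroid_distance(points, index, radius):
    """Distance from points[index] to the centroid of its radius neighborhood.

    `points` is a list of equal-length tuples. Because only distances
    are used, the same function applies to 3D coordinates or to color
    tuples such as (r, g, b). Neighbors are found by brute force here;
    a real implementation would likely use a spatial index.
    """
    p = points[index]
    # The query point is always its own neighbor, so the list is non-empty.
    neighbors = [q for q in points if math.dist(p, q) <= radius]
    centroid = tuple(sum(c) / len(neighbors) for c in zip(*neighbors))
    return math.dist(p, centroid)


if __name__ == "__main__":
    # A corner-like configuration: the origin plus one point along each axis.
    corner = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
    # A symmetric configuration: neighbors balance out around the origin.
    line = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (-1.0, 0.0, 0.0)]
    print(centroid_distance(corner, 0, 1.5))
    print(centroid_distance(line, 0, 1.5))
```

In this toy case the corner point scores higher than the point in the middle of a line, since its neighbors all lie to one side. No normals or eigenvalue decompositions are needed, which is consistent with the efficiency argument in the abstract.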
