Haichao Du

Focusing on Language: Revealing and Exploiting Language Attention Heads in Multilingual Large Language Models

Nov 10, 2025

Abstract: Large language models (LLMs) increasingly support multilingual understanding and generation. Meanwhile, efforts to interpret their internal mechanisms have emerged, offering insights to enhance multilingual performance. While multi-head self-attention (MHA) has proven critical in many areas, its role in multilingual capabilities remains underexplored. In this work, we study the contribution of MHA in supporting multilingual processing in LLMs. We propose Language Attention Head Importance Scores (LAHIS), an effective and efficient method that identifies attention head importance for multilingual capabilities via a single forward and backward pass through the LLM. Applying LAHIS to Aya-23-8B, Llama-3.2-3B, and Mistral-7B-v0.1, we reveal the existence of both language-specific and language-general heads. Language-specific heads enable cross-lingual attention transfer to guide the model toward target language contexts and mitigate the off-target language generation issue, helping address challenges in multilingual LLMs. We also introduce a lightweight adaptation that learns a soft head mask to modulate attention outputs over language heads, requiring only 20 tunable parameters to improve XQuAD accuracy. Overall, our work enhances both the interpretability and multilingual capabilities of LLMs from the perspective of MHA.

EG-GAN: Cross-Language Emotion Gain Synthesis based on Cycle-Consistent Adversarial Networks

May 27, 2019

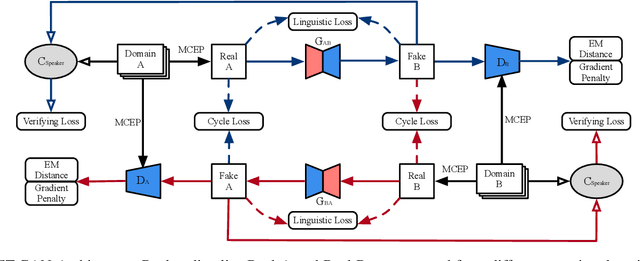

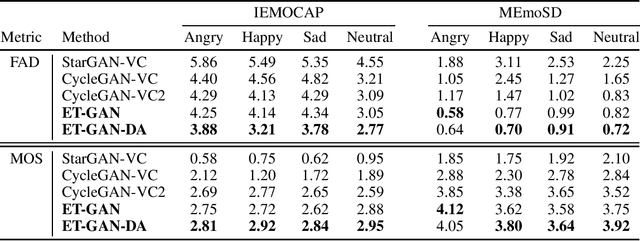

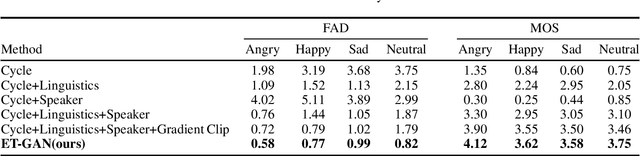

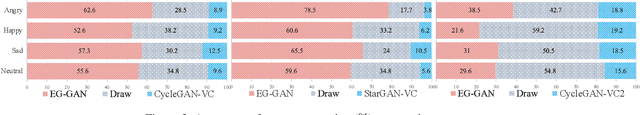

Abstract: Despite remarkable contributions from existing emotional speech synthesizers, we find that these methods are based on Text-to-Speech systems or limited by aligned speech pairs, which hinders pure emotion gain synthesis. Meanwhile, few studies have discussed the cross-language generalization ability of these methods for emotional speech synthesis in various languages. We propose a cross-language emotion gain synthesis method named EG-GAN, which can learn a language-independent mapping from the source emotion domain to the target emotion domain in the absence of paired speech samples. EG-GAN is based on a cycle-consistent generative adversarial network with a gradient penalty and an auxiliary speaker discriminator. Domain adaptation is introduced to enable rapid migration and sharing of emotional gains among different languages. The experimental results show that our method can efficiently synthesize high-quality emotional speech from any source speech for given emotion categories, without the limitation of language differences and aligned speech pairs.
