Gyuseok Lee

Leveraging Historical and Current Interests for Continual Sequential Recommendation

Jun 09, 2025

Abstract: Sequential recommendation models based on the Transformer architecture show superior performance in harnessing long-range dependencies within user behavior via self-attention. However, naively updating them on continuously arriving non-stationary data streams incurs prohibitive computation costs or leads to catastrophic forgetting. To address this, we propose Continual Sequential Transformer for Recommendation (CSTRec) that effectively leverages well-preserved historical user interests while capturing current interests. At its core is Continual Sequential Attention (CSA), a linear attention mechanism that retains past knowledge without direct access to old data. CSA integrates two key components: (1) Cauchy-Schwarz Normalization that stabilizes training under uneven interaction frequencies, and (2) Collaborative Interest Enrichment that mitigates forgetting through shared, learnable interest pools. We further introduce a technique that facilitates learning for cold-start users by transferring historical knowledge from behaviorally similar existing users. Extensive experiments on three real-world datasets indicate that CSTRec outperforms state-of-the-art baselines in both knowledge retention and acquisition.
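To illustrate how a linear attention mechanism can retain past knowledge without revisiting old data, here is a minimal sketch of generic causal linear attention with a carried-over running state. This is not the paper's CSA: it omits Cauchy-Schwarz Normalization and Collaborative Interest Enrichment, and the names `feature_map` and `linear_attention` as well as the `elu(x) + 1` feature map are assumptions chosen for illustration.

```python
import math

def feature_map(x):
    # elu(x) + 1: a common positive feature map for linear attention (assumed here)
    return [xi + 1.0 if xi > 0 else math.exp(xi) for xi in x]

def linear_attention(queries, keys, values, state=None):
    """Causal linear attention over one block of interactions.

    `state` is (S, z): S accumulates phi(k) v^T and z accumulates phi(k).
    Passing the state from an earlier block stands in for the old data.
    """
    d_k, d_v = len(keys[0]), len(values[0])
    if state is None:
        S = [[0.0] * d_v for _ in range(d_k)]
        z = [0.0] * d_k
    else:
        # Copy so the caller's state is not mutated
        S = [row[:] for row in state[0]]
        z = state[1][:]
    outputs = []
    for q, k, v in zip(queries, keys, values):
        fq, fk = feature_map(q), feature_map(k)
        # Fold the current key/value pair into the running summary
        for i in range(d_k):
            z[i] += fk[i]
            for j in range(d_v):
                S[i][j] += fk[i] * v[j]
        # Normalized read-out for the current query
        norm = sum(fq[i] * z[i] for i in range(d_k))
        outputs.append(
            [sum(fq[i] * S[i][j] for i in range(d_k)) / norm for j in range(d_v)]
        )
    return outputs, (S, z)
```

Processing an old block, keeping only the returned `(S, z)`, and then processing a new block with that state gives the same outputs as processing the full sequence at once, so the summary is all that needs to be stored between updates.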

Collaborative Diffusion Model for Recommender System

Jan 31, 2025

Abstract: Diffusion-based recommender systems (DR) have gained increasing attention for their advanced generative and denoising capabilities. However, existing DR face two central limitations: (i) a trade-off between enhancing generative capacity via noise injection and retaining personalized information, and (ii) the underutilization of rich item-side information. To address these challenges, we present a Collaborative Diffusion model for Recommender System (CDiff4Rec). Specifically, CDiff4Rec generates pseudo-users from item features and leverages collaborative signals from both real and pseudo personalized neighbors identified through behavioral similarity, thereby effectively reconstructing nuanced user preferences. Experimental results on three public datasets show that CDiff4Rec outperforms competitors by effectively mitigating the loss of personalized information through the integration of item content and collaborative signals.

Continual Collaborative Distillation for Recommender System

May 29, 2024

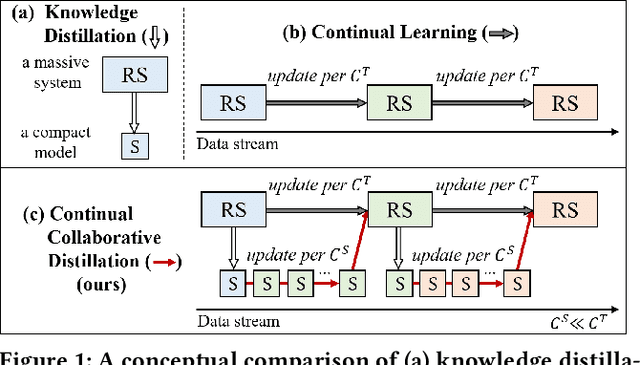

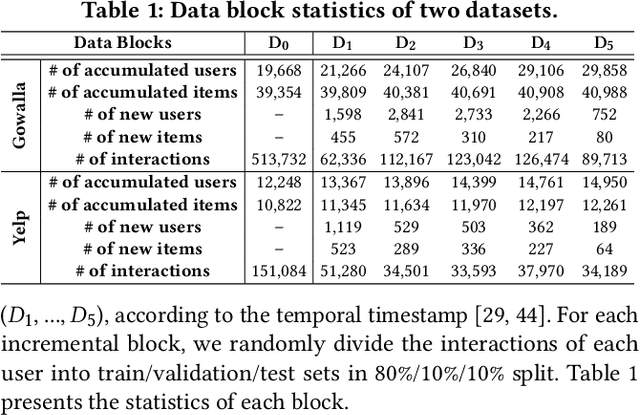

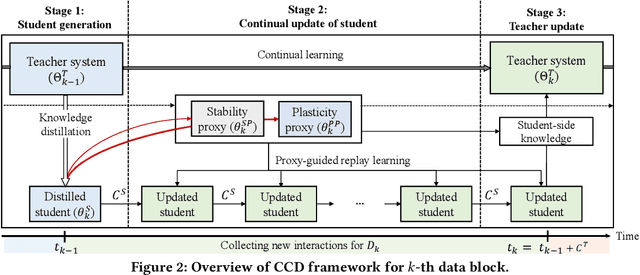

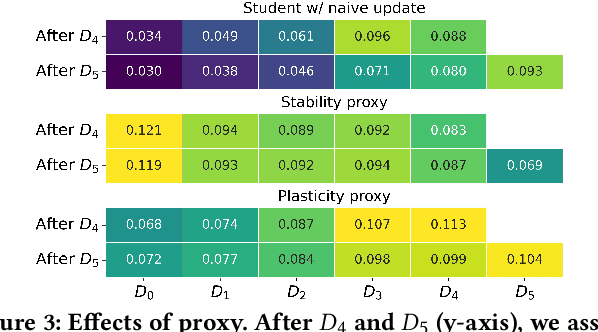

Abstract: Knowledge distillation (KD) has emerged as a promising technique for addressing the computational challenges associated with deploying large-scale recommender systems. KD transfers the knowledge of a massive teacher system to a compact student model, reducing the heavy computational burden of inference while retaining high accuracy. Existing KD studies primarily focus on one-time distillation in static environments, leaving a substantial gap in their applicability to real-world scenarios with continuously incoming users, items, and interactions. In this work, we develop a systematic approach to operating teacher-student KD on a non-stationary data stream. Our goal is to enable efficient deployment through a compact student that preserves the high performance of the massive teacher while effectively adapting to continuously incoming data. We propose the Continual Collaborative Distillation (CCD) framework, in which both the teacher and the student continually and collaboratively evolve along the data stream. CCD helps the student adapt effectively to new data, while also enabling the teacher to fully leverage accumulated knowledge. We validate the effectiveness of CCD through extensive quantitative, ablative, and exploratory experiments on two real-world datasets. We expect this research direction to help narrow the gap between existing KD studies and practical applications, thereby enhancing the applicability of KD in real-world systems.
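As a point of reference for the teacher-to-student transfer described above, the following is a minimal sketch of a generic soft-target distillation loss: the KL divergence between temperature-softened teacher and student score distributions over the same items. It is not the CCD framework and does not model the continual, collaborative updates; the function names and the default temperature are assumptions for illustration.

```python
import math

def softmax(scores, temperature=1.0):
    # Subtract the max score for numerical stability before exponentiating
    m = max(scores)
    exps = [math.exp((s - m) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_scores, student_scores, temperature=2.0):
    """KL(teacher || student) over temperature-softened item distributions."""
    p = softmax(teacher_scores, temperature)
    q = softmax(student_scores, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
```

The loss is zero when the student reproduces the teacher's scores and grows as the student's item preferences diverge from the teacher's.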
