Guanyu Zhang

Dual-Forecaster: A Multimodal Time Series Model Integrating Descriptive and Predictive Texts

May 02, 2025

Abstract: Most existing single-modal time series models rely solely on numerical series and are therefore limited by insufficient information. Recent studies have shown that multimodal models can address this core issue by integrating textual information. However, these models focus on either historical or future textual information, overlooking the distinct contribution each makes to time series forecasting. Moreover, their moderate capacity for multimodal comprehension prevents them from grasping the intricate relationships between textual and time series data. To tackle these challenges, we propose Dual-Forecaster, a pioneering multimodal time series model that combines descriptive historical textual information with predictive textual insights and leverages advanced multimodal comprehension enabled by three well-designed cross-modality alignment techniques. Comprehensive evaluations on fifteen multimodal time series datasets demonstrate that Dual-Forecaster is a distinctly effective multimodal time series model that outperforms or is comparable to other state-of-the-art models, highlighting the value of integrating textual information for time series forecasting. This work opens new avenues for integrating textual information with numerical time series data in multimodal time series analysis.
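The abstract does not name the three cross-modality alignment techniques. As a hedged illustration only, one widely used way to align paired text and time-series embeddings is a symmetric contrastive (InfoNCE) loss. The function name `info_nce`, the temperature of 0.07, and the assumption that each series window in a batch has exactly one paired text embedding are ours, not Dual-Forecaster's.

```python
import numpy as np


def _log_softmax_rows(x):
    # Numerically stable row-wise log-softmax.
    x = x - x.max(axis=1, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=1, keepdims=True))


def info_nce(ts_emb, text_emb, temperature=0.07):
    """Symmetric contrastive loss between paired embeddings (hypothetical sketch).

    ts_emb, text_emb: arrays of shape (N, d); row i of each forms a matched pair.
    """
    # L2-normalise so the dot product is a cosine similarity.
    ts = ts_emb / np.linalg.norm(ts_emb, axis=1, keepdims=True)
    tx = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    logits = ts @ tx.T / temperature  # (N, N); matched pairs lie on the diagonal
    loss_ts_to_text = -np.mean(np.diag(_log_softmax_rows(logits)))
    loss_text_to_ts = -np.mean(np.diag(_log_softmax_rows(logits.T)))
    return 0.5 * (loss_ts_to_text + loss_text_to_ts)
```

Because matched pairs sit on the diagonal of the similarity matrix, the loss falls as each series embedding becomes closer to its own text embedding than to the other texts in the batch.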

Probabilistic Forecast Reconciliation with Kullback-Leibler Divergence Regularization

Nov 21, 2023

Abstract: As hierarchical point forecast reconciliation methods grow in popularity, there is increasing interest in probabilistic forecast reconciliation. Many studies have used machine learning or deep learning techniques to implement probabilistic forecast reconciliation and have made notable progress. However, these methods treat reconciliation as a fixed, hard post-processing step, which leads to a trade-off between accuracy and coherency. In this paper, we propose a new approach to probabilistic forecast reconciliation. Unlike existing approaches, our approach fuses the prediction and reconciliation steps into a single deep learning framework, making reconciliation more flexible and soft by adding a Kullback-Leibler divergence regularization term to the loss function. Evaluations on three hierarchical time series datasets show the advantages of our approach over other probabilistic forecast reconciliation methods.
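A minimal sketch of a loss with a soft KL coherency penalty, for a two-level hierarchy with one parent and several children. It assumes Gaussian forecasts, independent children (so their sum is Gaussian with summed means and variances), and a penalty of KL(aggregated children ‖ parent) weighted by a hypothetical `lam`. The paper's actual distributions, KL direction, and network are not given in the abstract, so every name here is illustrative.

```python
import math


def gaussian_nll(y, mu, sigma):
    # Negative log-likelihood of y under N(mu, sigma^2).
    return 0.5 * math.log(2 * math.pi * sigma ** 2) + (y - mu) ** 2 / (2 * sigma ** 2)


def gaussian_kl(mu_p, sigma_p, mu_q, sigma_q):
    # Closed-form KL(N(mu_p, sigma_p^2) || N(mu_q, sigma_q^2)).
    return (math.log(sigma_q / sigma_p)
            + (sigma_p ** 2 + (mu_p - mu_q) ** 2) / (2 * sigma_q ** 2)
            - 0.5)


def reconciled_loss(y_parent, y_children, parent_pred, child_preds, lam=1.0):
    """Accuracy term plus a soft coherency term (hypothetical sketch).

    parent_pred: (mu, sigma) for the parent series.
    child_preds: list of (mu, sigma), one per child series.
    """
    if len(y_children) != len(child_preds):
        raise ValueError("one prediction per child observation is required")
    nll = gaussian_nll(y_parent, *parent_pred)
    nll += sum(gaussian_nll(y, mu, s) for y, (mu, s) in zip(y_children, child_preds))
    # Bottom-up aggregate of the children, assuming independence.
    agg_mu = sum(mu for mu, _ in child_preds)
    agg_sigma = math.sqrt(sum(s ** 2 for _, s in child_preds))
    kl = gaussian_kl(agg_mu, agg_sigma, *parent_pred)
    return nll + lam * kl
```

When the parent forecast already equals the aggregated child distribution, the KL term is zero and only the accuracy term remains; `lam` controls how strongly incoherent forecasts are pulled together rather than forcing exact coherency as a hard post-processing step would.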

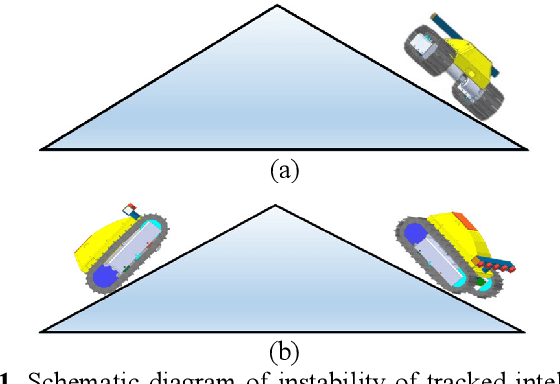

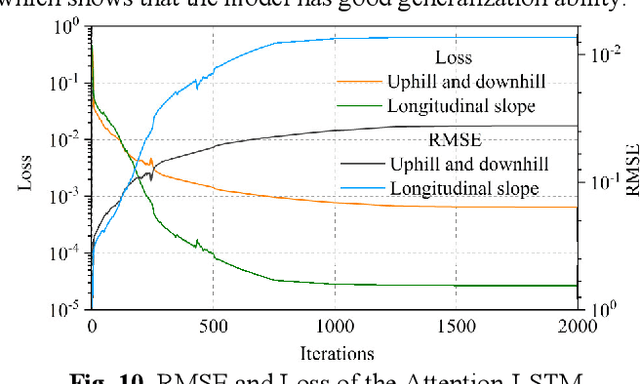

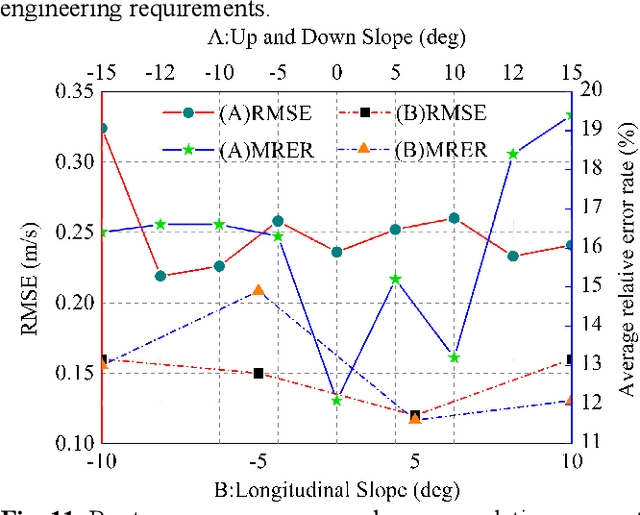

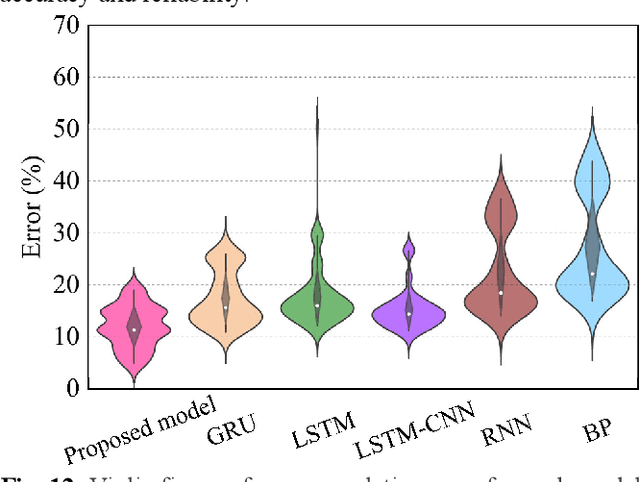

Research on Stable Obstacle Avoidance Control Strategy for Tracked Intelligent Transportation Vehicles in Non-structural Environment Based on Deep Learning

Jul 30, 2022

Abstract: Existing intelligent driving technology often struggles to balance smooth driving with fast obstacle avoidance, especially when the vehicle is in a non-structural environment, and vehicles are prone to instability in emergency situations. Therefore, this study proposed an autonomous obstacle avoidance control strategy that effectively guarantees vehicle stability, based on an attention long short-term memory (Attention-LSTM) deep learning model and the idea of humanoid driving. First, we designed autonomous obstacle avoidance control rules to guarantee the safety of unmanned vehicles. Second, we improved the autonomous obstacle avoidance control strategy by combining it with a stability analysis of special vehicles. Third, we constructed a deep learning obstacle avoidance controller through experiments; its average relative error was 15%. Finally, the stability and accuracy of this control strategy were verified numerically and experimentally. The proposed method enables the unmanned vehicle to avoid obstacles successfully while driving smoothly.
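The abstract reports a 15% average relative error without defining the metric. A common definition is the mean of |ŷ − y| / |y| over all samples; the sketch below assumes that definition, and the `eps` guard for zero targets and the sample values are hypothetical.

```python
def mean_relative_error(y_true, y_pred, eps=1e-12):
    """Mean of |y_pred - y_true| / |y_true| over paired samples.

    `eps` guards against division by zero when a true value is 0 (an
    assumption; the paper does not say how zero targets are handled).
    """
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and equal in length")
    total = 0.0
    for t, p in zip(y_true, y_pred):
        total += abs(p - t) / max(abs(t), eps)
    return total / len(y_true)


# Hypothetical target and predicted values, for illustration only.
error = mean_relative_error([10.0, 20.0], [11.5, 17.0])
print(f"{error:.0%}")  # 15%
```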

Visible and Near Infrared Image Fusion Based on Texture Information

Jul 22, 2022

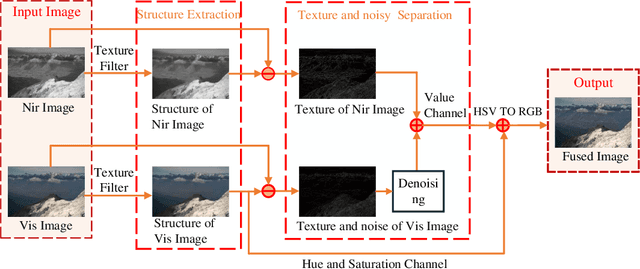

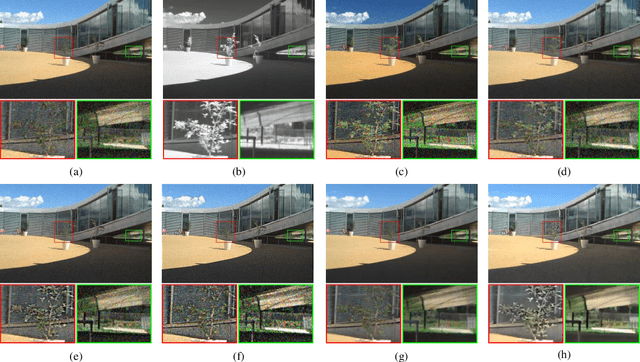

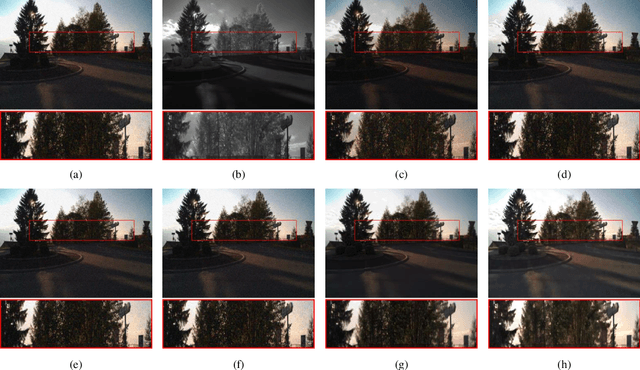

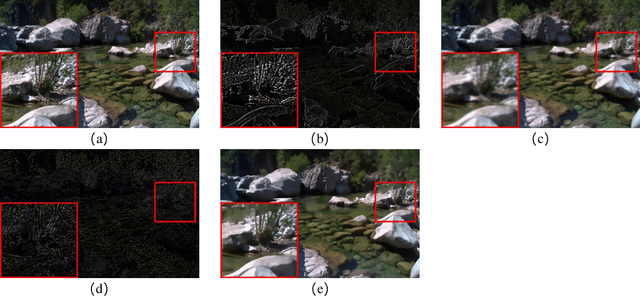

Abstract: Multi-sensor fusion is widely used in the environment perception systems of autonomous vehicles. It reduces the interference caused by environmental changes and makes the whole driving system safer and more reliable. In this paper, a novel visible and near-infrared fusion method based on texture information is proposed to enhance images of unstructured environments. It targets the artifacts, information loss, and noise that affect traditional visible and near-infrared image fusion methods. First, the structure information of the visible (RGB) image and the near-infrared (NIR) image after texture removal is obtained by relative total variation (RTV) calculation and used as the base layer of the fused image; second, a Bayesian classification model is established to calculate the noise weight, and the noise information in the visible image is adaptively filtered by a joint bilateral filter; finally, the fused image is obtained by color space conversion. The experimental results demonstrate that the proposed algorithm preserves the spectral characteristics and the unique information of the visible and near-infrared images without artifacts or color distortion, retains their distinctive textures, and shows good robustness.
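For readers unfamiliar with the joint bilateral filter mentioned above, here is a minimal single-channel sketch in NumPy. It weights each neighbour by spatial distance and by intensity similarity in a separate guide image, so edges present in the guide are preserved while noise in the source is smoothed. Using the NIR image as the guide, the parameter values, and intensities scaled to [0, 1] are assumptions for illustration; the paper's Bayesian noise weighting is not reproduced here.

```python
import numpy as np


def joint_bilateral_filter(src, guide, radius=2, sigma_s=2.0, sigma_r=0.1):
    """Smooth `src` using range weights computed from `guide` (sketch).

    src, guide: 2-D float arrays of equal shape, intensities roughly in [0, 1].
    """
    if src.shape != guide.shape:
        raise ValueError("src and guide must have the same shape")
    h, w = src.shape
    src_p = np.pad(src, radius, mode="edge")
    guide_p = np.pad(guide, radius, mode="edge")
    num = np.zeros((h, w), dtype=float)
    den = np.zeros((h, w), dtype=float)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Spatial Gaussian weight for this offset.
            spatial = np.exp(-(dy * dy + dx * dx) / (2 * sigma_s ** 2))
            ys, xs = radius + dy, radius + dx
            shifted_src = src_p[ys:ys + h, xs:xs + w]
            shifted_guide = guide_p[ys:ys + h, xs:xs + w]
            # Range weight from the guide image, not from the noisy source.
            rng_w = np.exp(-((shifted_guide - guide) ** 2) / (2 * sigma_r ** 2))
            weight = spatial * rng_w
            num += weight * shifted_src
            den += weight  # the centre pixel contributes 1, so den >= 1
    return num / den


# Hypothetical example: a clean edge in the NIR guide, noise in the visible channel.
rng = np.random.default_rng(0)
nir = np.zeros((8, 8))
nir[:, 4:] = 1.0
visible = nir + 0.05 * rng.standard_normal(nir.shape)
denoised = joint_bilateral_filter(visible, nir, radius=2, sigma_s=1.5, sigma_r=0.1)
```

A smaller `sigma_r` makes the filter stop more sharply at guide edges; a larger `sigma_s` widens the spatial smoothing within flat regions.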
