Fei Tong

Block-Weighted Lasso for Joint Optimization of Memory Depth and Kernels in Wideband DPD

Apr 18, 2025

Abstract: Optimizing both the memory depth and the kernel functions is critical for wideband digital pre-distortion (DPD). However, the memory depth is usually determined via an exhaustive search over a wide range for the sake of linearization optimality, followed by kernel selection for each memory depth, which incurs excessive computational cost. In this letter, we aim to provide an efficient solution that jointly optimizes the memory depth and kernels while preserving reasonable linearization performance. Specifically, we propose to formulate this optimization as a block-weighted least absolute shrinkage and selection operator (Lasso) problem, where kernels are assigned regularization weights based on their polynomial orders. A block coordinate descent algorithm is then introduced to solve the block-weighted Lasso problem. Measurement results on a generalized memory polynomial (GMP) model demonstrate that our proposed solution reduces the memory depth by 31.6% and the kernel count by 85% compared to the full GMP, while achieving an error vector magnitude (EVM) of -46.4 dB for signals of 80 MHz bandwidth. In addition, the proposed solution outperforms both the full GMP and the GMP pruned by standard Lasso by at least 0.7 dB in EVM.
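The abstract does not spell out the exact objective or weighting rule, so the following Python sketch only illustrates the general idea under stated assumptions: a memory-polynomial basis (the aligned-term subset of a GMP, without its lagging and leading cross terms) whose columns are grouped into blocks by polynomial order, an ℓ1 penalty whose weight grows with the order as `alpha**k` (a hypothetical rule), and cyclic coordinate descent that sweeps the order blocks in turn. The memory depth is then read off as one plus the largest delay that keeps a nonzero coefficient. The function names (`gmp_aligned_basis`, `block_weighted_lasso`, `memory_depth`) and the values of `lam` and `alpha` are illustrative, not the authors' implementation.

```python
import numpy as np


def gmp_aligned_basis(x, K, M):
    """Hypothetical helper: aligned GMP terms x[n-m] * |x[n-m]|**k (memory
    polynomial part only, no lagging/leading cross terms).
    Returns the basis matrix and the (k, m) pair of each column."""
    N = len(x)
    cols, idx = [], []
    for k in range(K):
        for m in range(M):
            xm = np.concatenate([np.zeros(m, dtype=complex), x[:N - m]])  # delay by m
            cols.append(xm * np.abs(xm) ** k)
            idx.append((k, m))
    return np.column_stack(cols), np.array(idx)


def soft(z, t):
    """Complex soft-thresholding: shrink |z| by t and keep the phase."""
    mag = np.abs(z)
    return 0j if mag <= t else z * (1 - t / mag)


def block_weighted_lasso(X, y, idx, lam, alpha=5.0, sweeps=300):
    """Minimise 0.5*||y - X c||^2 + lam * sum_j alpha**k_j * |c_j| by cyclic
    coordinate descent, sweeping one polynomial-order block at a time.
    The weight rule alpha**k is an assumption for illustration."""
    w = alpha ** idx[:, 0]                       # order-dependent weights
    c = np.zeros(X.shape[1], dtype=complex)
    r = np.array(y, dtype=complex)               # residual y - X c (c starts at 0)
    col_norm2 = np.sum(np.abs(X) ** 2, axis=0)
    blocks = [np.flatnonzero(idx[:, 0] == k) for k in np.unique(idx[:, 0])]
    for _ in range(sweeps):
        for block in blocks:
            for j in block:
                rho = np.vdot(X[:, j], r) + col_norm2[j] * c[j]
                new = soft(rho, lam * w[j]) / col_norm2[j]
                r -= X[:, j] * (new - c[j])      # keep the residual in sync
                c[j] = new
    return c


def memory_depth(c, idx):
    """1 + largest delay that still has a nonzero coefficient (0 if none)."""
    taps = idx[np.abs(c) > 0, 1]
    return int(taps.max()) + 1 if taps.size else 0


# Toy check: a synthetic nonlinearity that only uses delays 0 and 1.
rng = np.random.default_rng(0)
N = 8000
x = (rng.standard_normal(N) + 1j * rng.standard_normal(N)) / np.sqrt(2)
X, idx = gmp_aligned_basis(x, K=2, M=3)          # column j = k*M + m
c_true = np.zeros(X.shape[1], dtype=complex)
c_true[0], c_true[1], c_true[3] = 1.0, 0.3j, -0.3j   # (k, m) = (0,0), (0,1), (1,0)
noise = 0.01 * (rng.standard_normal(N) + 1j * rng.standard_normal(N)) / np.sqrt(2)
y = X @ c_true + noise
c_hat = block_weighted_lasso(X, y, idx, lam=50.0)
print(memory_depth(c_hat, idx), np.count_nonzero(c_hat))
```

Raising `alpha` prunes high-order kernels more aggressively than low-order ones, which is the intuition behind order-dependent weights; with `alpha = 1` the problem reduces to a standard (unweighted) Lasso.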

Exploiting Non-uniform Quantization for Enhanced ILC in Wideband Digital Pre-distortion

Feb 12, 2025

Abstract: In this paper, we identify that lowering the reference level of the vector signal analyzer can significantly improve the performance of iterative learning control (ILC). We present a mathematical explanation for this phenomenon: the signals undergo a logarithmic transform prior to analogue-to-digital conversion, resulting in non-uniform quantization. This process reduces the quantization noise of low-amplitude signals, which constitute a substantial portion of orthogonal frequency division multiplexing (OFDM) signals, thereby improving ILC performance. Measurement results show that, compared with setting the reference level to the peak amplitude, lowering the reference level achieves a 3 dB improvement in error vector magnitude (EVM) and a 15 dB improvement in normalized mean square error (NMSE) for 320 MHz WiFi OFDM signals.
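The quantization argument can be illustrated numerically. The sketch below is a simplification built on assumptions: it uses μ-law companding as a stand-in for the analyzer's logarithmic transform, a Gaussian sequence as a stand-in for one I/Q rail of an OFDM signal, and an 8-bit quantizer whose full scale equals the peak amplitude. It compares the mean absolute quantization error on low-amplitude samples for a uniform quantizer and for the log-then-quantize chain. The parameters (`bits`, `mu`, the 10% threshold) and helper names are hypothetical, and the sketch models neither the reference-level setting nor the ILC loop itself.

```python
import numpy as np


def quantize(v, bits):
    """Uniform mid-rise quantiser for values in [-1, 1]."""
    levels = 2 ** bits
    step = 2.0 / levels
    code = np.clip(np.floor((v + 1.0) / step), 0, levels - 1)
    return -1.0 + (code + 0.5) * step


def mu_compress(v, mu):
    """Logarithmic (mu-law) compression of values in [-1, 1]."""
    return np.sign(v) * np.log1p(mu * np.abs(v)) / np.log1p(mu)


def mu_expand(u, mu):
    """Inverse of mu_compress."""
    return np.sign(u) * np.expm1(np.abs(u) * np.log1p(mu)) / mu


rng = np.random.default_rng(1)
x = rng.standard_normal(100_000)   # Gaussian stand-in for one I/Q rail of an OFDM signal
A = np.max(np.abs(x))              # full scale set to the peak amplitude
bits, mu = 8, 255.0

x_uni = A * quantize(x / A, bits)                                  # uniform ADC
x_log = A * mu_expand(quantize(mu_compress(x / A, mu), bits), mu)  # log transform, then ADC

small = np.abs(x) < 0.1 * A        # low-amplitude samples
err_uni = np.mean(np.abs(x - x_uni)[small])
err_log = np.mean(np.abs(x - x_log)[small])
print(f"{small.mean():.0%} of samples are below 10% of peak")
print(f"mean |error| on them: uniform {err_uni:.2e}, log {err_log:.2e}")
```

The log transform spends more quantization levels near zero, so the effective step size for small samples shrinks at the cost of coarser steps near the peak, which is rarely reached by high-PAPR signals.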

Constellation-Oriented Perturbation for Scalable-Complexity MIMO Nonlinear Precoding

Aug 04, 2022

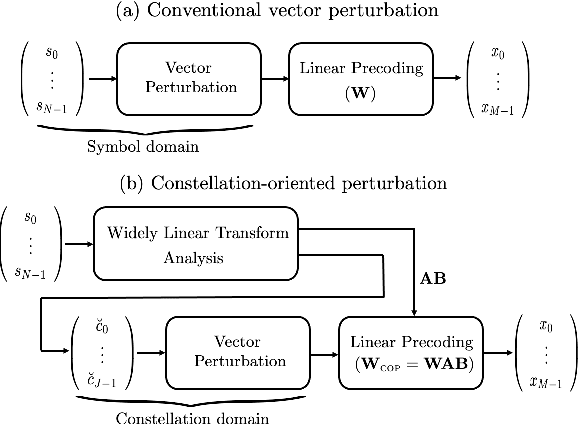

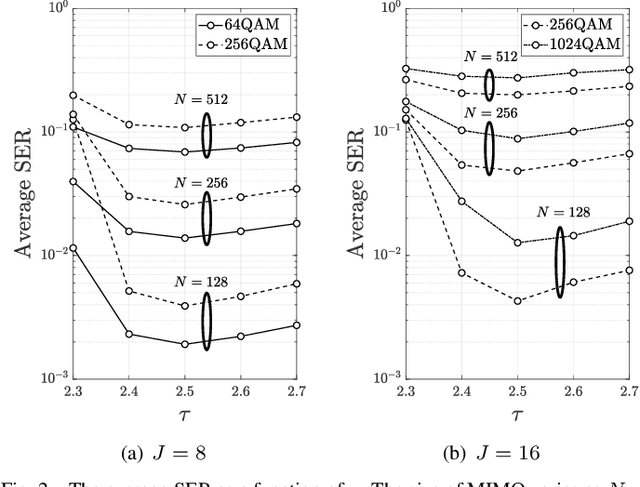

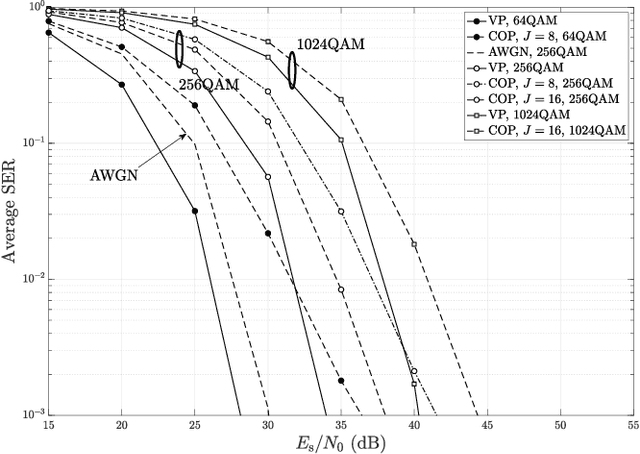

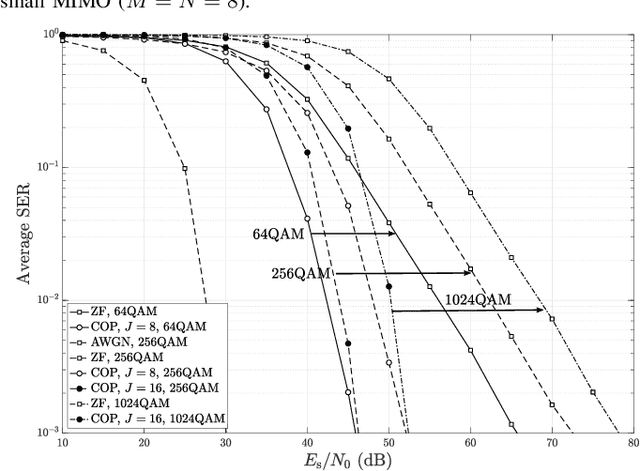

Abstract: In this paper, a novel nonlinear precoding (NLP) technique, namely constellation-oriented perturbation (COP), is proposed to tackle the scalability problem inherent in conventional NLP techniques. The basic concept of COP is to apply vector perturbation (VP) in the constellation domain instead of the symbol domain, as is common in conventional techniques. In this way, the computational complexity of COP is made independent of the size of the multi-antenna (i.e., MIMO) network; instead, it depends on the size of the symbol constellation. Through a widely linear transform, it is shown that the complexity of COP can be flexibly scaled in the constellation domain to achieve a good complexity-performance tradeoff. Our computer simulations show that COP offers performance comparable to that of the optimum VP in small MIMO systems. Moreover, it significantly outperforms current sub-optimum VP approaches (such as degree-2 VP) in large MIMO systems whilst maintaining much lower computational complexity.
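To make the scalability problem concrete, the following Python sketch implements conventional symbol-domain VP with a brute-force search; it is the baseline, not COP itself. It assumes a zero-forcing precoder, 4-QAM symbols on {±1 ± j}, the commonly used modulo constant τ = 2(|c|max + Δ/2) = 4 for that alphabet, and perturbation entries whose real and imaginary parts lie in {-1, 0, 1}. Because the candidate set has 9^K elements, the search cost grows exponentially with the number of users K, which is the dependence on network size that COP avoids. The function names are hypothetical.

```python
import itertools
import numpy as np


def zf_precoder(H):
    """Zero-forcing precoder P = H^H (H H^H)^{-1} for a K x Nt channel H."""
    return H.conj().T @ np.linalg.inv(H @ H.conj().T)


def vector_perturbation(H, s, tau, radius=1):
    """Exhaustive symbol-domain VP: pick the Gaussian-integer vector l that
    minimises the transmit energy ||P (s + tau*l)||^2.
    The search visits (2*radius + 1)**(2K) candidates, i.e. it grows
    exponentially with the number of users K."""
    P = zf_precoder(H)
    grid = np.arange(-radius, radius + 1)
    per_user = [a + 1j * b for a in grid for b in grid]
    best_l, best_e = None, np.inf
    for cand in itertools.product(per_user, repeat=len(s)):
        l = np.array(cand)
        e = np.linalg.norm(P @ (s + tau * l)) ** 2
        if e < best_e:
            best_l, best_e = l, e
    return best_l, best_e


def modulo(z, tau):
    """Receiver modulo: folds real and imaginary parts into [-tau/2, tau/2)."""
    def fold(v):
        return (v + tau / 2) % tau - tau / 2
    return fold(z.real) + 1j * fold(z.imag)


rng = np.random.default_rng(2)
K, Nt = 2, 4
H = (rng.standard_normal((K, Nt)) + 1j * rng.standard_normal((K, Nt))) / np.sqrt(2)
s = rng.choice([-1.0, 1.0], K) + 1j * rng.choice([-1.0, 1.0], K)  # 4-QAM symbols
tau = 4.0  # 2 * (max |Re| + spacing/2) = 2 * (1 + 1) for this alphabet

l, e_vp = vector_perturbation(H, s, tau)
e_zf = np.linalg.norm(zf_precoder(H) @ s) ** 2
y = H @ zf_precoder(H) @ (s + tau * l)   # noiseless received signal
print(e_zf, e_vp, modulo(y, tau))
```

Since l = 0 is among the candidates, the VP energy never exceeds the zero-forcing energy, and the receiver's modulo operation removes τl to recover the transmitted symbols.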
