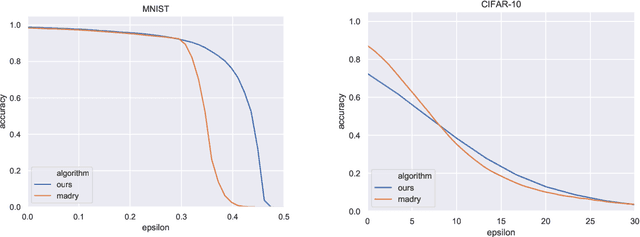

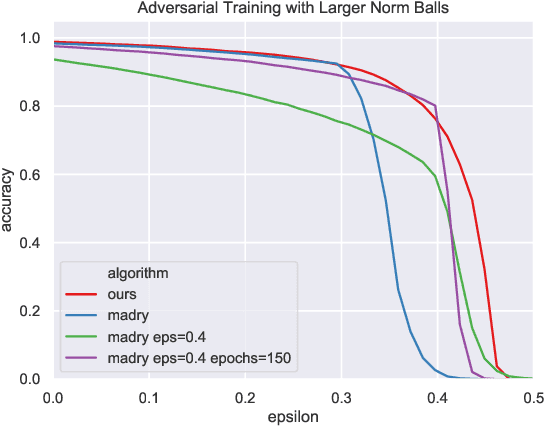

Adversarial Training with Voronoi Constraints

May 02, 2019 · Marc Khoury, Dylan Hadfield-Menell

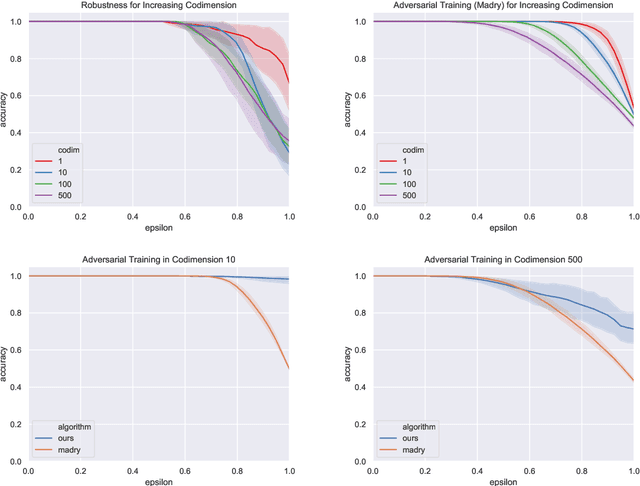

Adversarial examples are a pervasive phenomenon of machine learning models where seemingly imperceptible perturbations to the input lead to misclassifications for otherwise statistically accurate models. We propose a geometric framework, drawing on tools from the manifold reconstruction literature, to analyze the high-dimensional geometry of adversarial examples. In particular, we highlight the importance of codimension: for low-dimensional data manifolds embedded in high-dimensional space there are many directions off the manifold in which an adversary could construct adversarial examples. Adversarial examples are a natural consequence of learning a decision boundary that classifies the low-dimensional data manifold well, but classifies points near the manifold incorrectly. Using our geometric framework we prove that adversarial training is sample inefficient, and show sufficient sampling conditions under which nearest neighbor classifiers and ball-based adversarial training are robust. Finally we introduce adversarial training with Voronoi constraints, which replaces the norm ball constraint with the Voronoi cell for each point in the training set. We show that adversarial training with Voronoi constraints produces robust models which significantly improve over the state-of-the-art on MNIST and are competitive on CIFAR-10.
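To make the difference between the two constraint sets concrete, here is a minimal sketch of the membership tests. It assumes Euclidean distance and a toy 2D training set; the function names `in_voronoi_cell` and `in_norm_ball` are hypothetical and do not come from the paper, and the sketch covers only the constraint check, not the training procedure.

```python
import math

def in_voronoi_cell(x, i, train):
    """Return True if x lies in the Voronoi cell of train[i],
    i.e. no training point is strictly closer to x than train[i]."""
    d_i = math.dist(x, train[i])
    return all(d_i <= math.dist(x, p) for p in train)

def in_norm_ball(x, i, train, eps):
    """Return True if x lies in the L2 ball of radius eps around train[i]."""
    return math.dist(x, train[i]) <= eps

# Toy data (assumed): one far neighbor and one near neighbor of train[0].
train = [(0.0, 0.0), (4.0, 0.0), (0.0, 1.0)]

# Far from train[0], but still closer to it than to any other sample.
print(in_voronoi_cell((1.5, 0.0), 0, train))
print(in_norm_ball((1.5, 0.0), 0, train, eps=0.4))

# Close to train[0], but closer still to train[2].
print(in_voronoi_cell((0.0, 0.6), 0, train))
```

In this toy setup the Voronoi cell reaches far in directions where the data is sparse and stays tight where another sample is nearby, whereas a fixed-radius ball treats every direction the same.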

Conservative Agency via Attainable Utility Preservation

Feb 26, 2019 · Alexander Matt Turner, Dylan Hadfield-Menell, Prasad Tadepalli

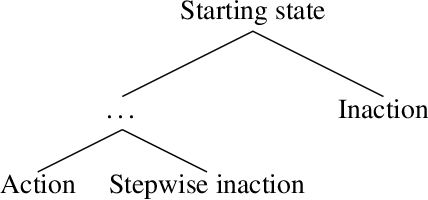

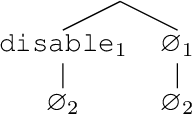

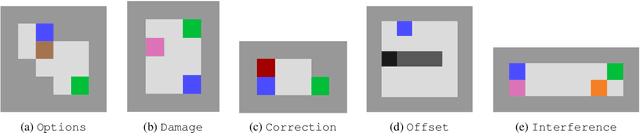

Reward functions are often misspecified. An agent optimizing an incorrect reward function can change its environment in large, undesirable, and potentially irreversible ways. Work on impact measurement seeks a means of identifying (and thereby avoiding) large changes to the environment. We propose a novel impact measure which induces conservative, effective behavior across a range of situations. The approach attempts to preserve the attainable utility of auxiliary objectives. We evaluate our proposal on an array of benchmark tasks and show that it matches or outperforms relative reachability, the state-of-the-art in impact measurement.
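The abstract does not state the penalty formula, so the following is only one possible reading of "preserving attainable utility". It assumes tabulated Q-values for each auxiliary objective, a designated no-op action, and a penalty equal to the average absolute change in auxiliary Q-value relative to the no-op. The names `aup_penalty`, `shaped_reward`, and the weight `lam` are hypothetical.

```python
def aup_penalty(aux_q, state, action, noop):
    """Average absolute change in attainable auxiliary value
    when taking `action` instead of the no-op (assumed form)."""
    diffs = [abs(q[(state, action)] - q[(state, noop)]) for q in aux_q]
    return sum(diffs) / len(diffs)

def shaped_reward(reward, aux_q, state, action, noop, lam):
    """Primary reward minus the weighted impact penalty."""
    return reward - lam * aup_penalty(aux_q, state, action, noop)

# Two auxiliary objectives with made-up Q-values in a single state "s".
aux_q = [
    {("s", "noop"): 1.0, ("s", "step"): 1.0, ("s", "break"): 0.0},
    {("s", "noop"): 0.5, ("s", "step"): 0.75, ("s", "break"): 0.5},
]

# "break" earns more primary reward but destroys attainable auxiliary value.
r_step = shaped_reward(1.0, aux_q, "s", "step", "noop", lam=2.0)
r_break = shaped_reward(1.5, aux_q, "s", "break", "noop", lam=2.0)
print(r_step, r_break)
```

Under these assumed numbers the penalized agent prefers the low-impact action even though the raw reward favors the destructive one.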

The Assistive Multi-Armed Bandit

Jan 24, 2019 · Lawrence Chan, Dylan Hadfield-Menell, Siddhartha Srinivasa, Anca Dragan

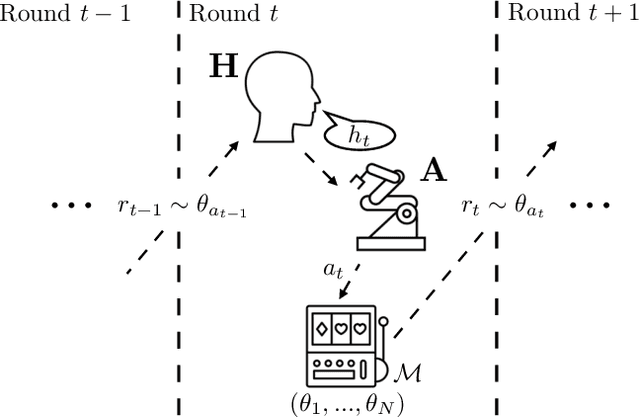

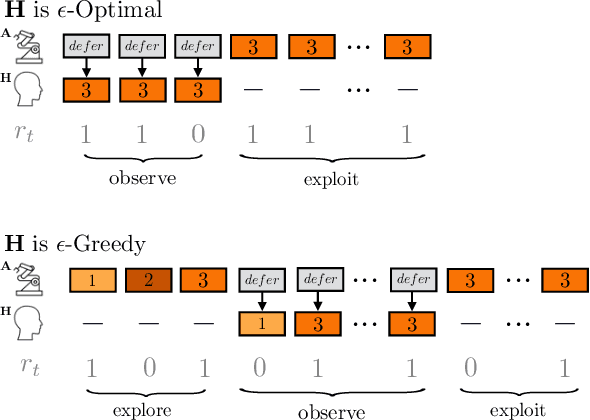

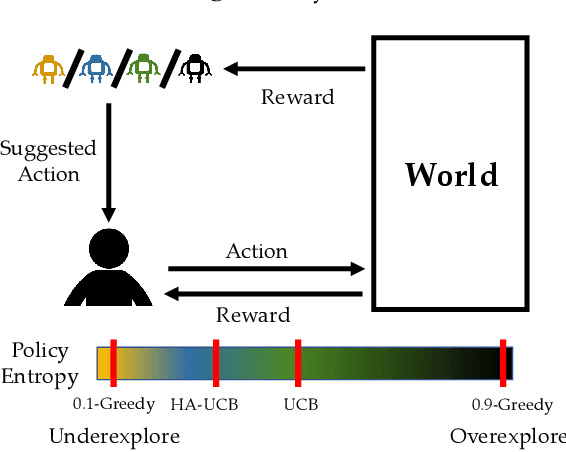

Learning preferences implicit in the choices humans make is a well studied problem in both economics and computer science. However, most work makes the assumption that humans are acting (noisily) optimally with respect to their preferences. Such approaches can fail when people are themselves learning about what they want. In this work, we introduce the assistive multi-armed bandit, where a robot assists a human playing a bandit task to maximize cumulative reward. In this problem, the human does not know the reward function but can learn it through the rewards received from arm pulls; the robot only observes which arms the human pulls but not the reward associated with each pull. We offer sufficient and necessary conditions for successfully assisting the human in this framework. Surprisingly, better human performance in isolation does not necessarily lead to better performance when assisted by the robot: a human policy can do better by effectively communicating its observed rewards to the robot. We conduct proof-of-concept experiments that support these results. We see this work as contributing towards a theory behind algorithms for human-robot interaction.
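The point about a human policy communicating its observed rewards can be illustrated with a small sketch. It assumes binary rewards and a deterministic win-stay, lose-shift human, chosen here purely for illustration; the function names are hypothetical. Under those assumptions the robot can recover each reward from the arm sequence alone.

```python
def wsls_next_arm(arm, reward, n_arms):
    """Win-stay, lose-shift: repeat the arm after reward 1,
    otherwise move to the next arm (requires n_arms >= 2)."""
    return arm if reward == 1 else (arm + 1) % n_arms

def infer_rewards(pulls):
    """Robot side: recover the reward of every pull except the last,
    using only the observed sequence of arms."""
    return [1 if nxt == cur else 0 for cur, nxt in zip(pulls, pulls[1:])]

# Rewards seen by the human but hidden from the robot (made-up values).
hidden_rewards = [0, 1, 1, 0, 0, 1]

# Simulate the human's pulls, starting from arm 0.
pulls = [0]
for r in hidden_rewards:
    pulls.append(wsls_next_arm(pulls[-1], r, n_arms=3))

print(pulls)
print(infer_rewards(pulls) == hidden_rewards)
```

A policy like this may be far from optimal for the human alone, yet it leaks every reward to the observer, which is the kind of effect the abstract describes.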

On the Utility of Model Learning in HRI

Jan 04, 2019 · Rohan Choudhury*, Gokul Swamy*, Dylan Hadfield-Menell, Anca Dragan

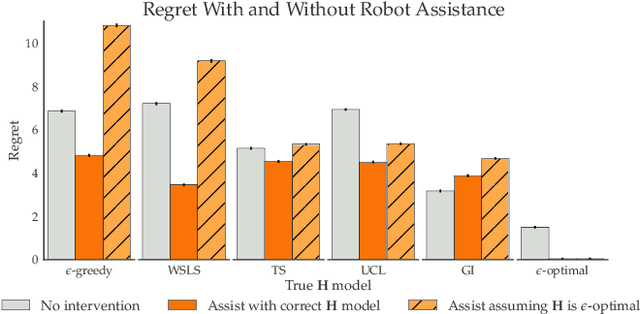

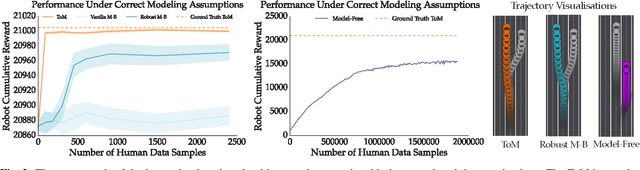

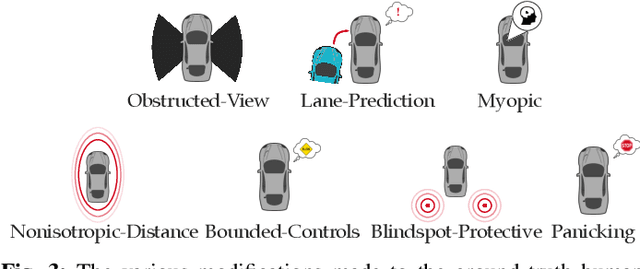

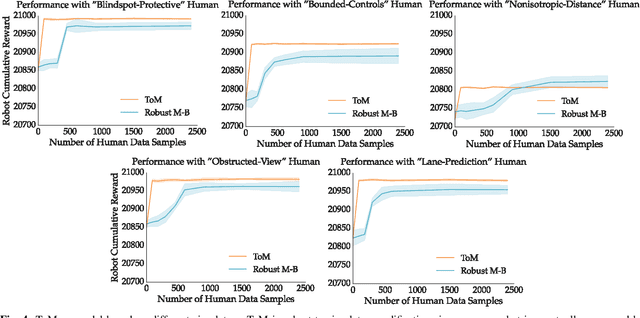

Fundamental to robotics is the debate between model-based and model-free learning: should the robot build an explicit model of the world, or learn a policy directly? In the context of HRI, part of the world to be modeled is the human. One option is for the robot to treat the human as a black box and learn a policy for how they act directly. But it can also model the human as an agent, and rely on a "theory of mind" to guide or bias the learning (grey box). We contribute a characterization of the performance of these methods under the optimistic case of having an ideal theory of mind, as well as under different scenarios in which the assumptions behind the robot's theory of mind for the human are wrong, as they inevitably will be in practice. We find that there is a significant sample complexity advantage to theory of mind methods and that they are more robust to covariate shift, but that when enough interaction data is available, black box approaches eventually dominate.

Human-AI Learning Performance in Multi-Armed Bandits

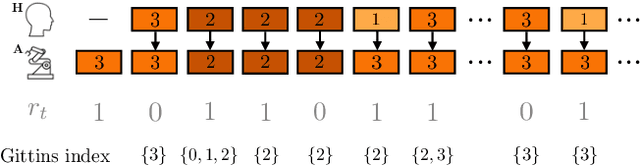

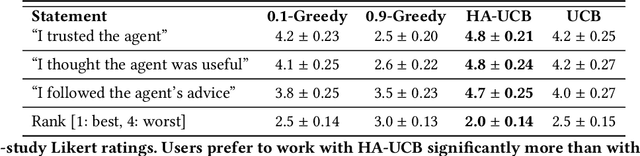

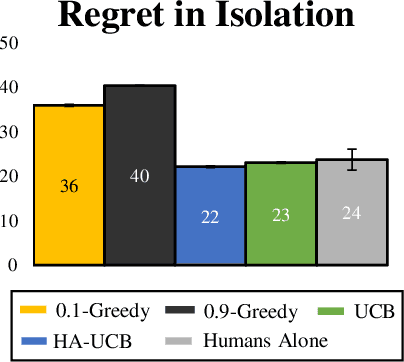

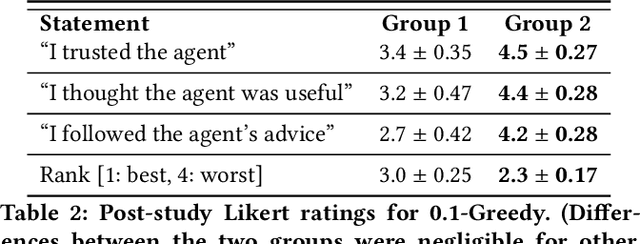

Dec 21, 2018 · Ravi Pandya, Sandy H. Huang, Dylan Hadfield-Menell, Anca D. Dragan

People frequently face challenging decision-making problems in which outcomes are uncertain or unknown. Artificial intelligence (AI) algorithms exist that can outperform humans at learning such tasks. Thus, there is an opportunity for AI agents to assist people in learning these tasks more effectively. In this work, we use a multi-armed bandit as a controlled setting in which to explore this direction. We pair humans with a selection of agents and observe how well each human-agent team performs. We find that team performance can beat both human and agent performance in isolation. Interestingly, we also find that an agent's performance in isolation does not necessarily correlate with the human-agent team's performance. A drop in agent performance can lead to a disproportionately large drop in team performance, or in some settings can even improve team performance. Pairing a human with an agent that performs slightly better than them can make them perform much better, while pairing them with an agent that performs the same can make them perform much worse. Further, our results suggest that people have different exploration strategies and might perform better with agents that match their strategy. Overall, optimizing human-agent team performance requires going beyond optimizing agent performance, to understanding how the agent's suggestions will influence human decision-making.

Legible Normativity for AI Alignment: The Value of Silly Rules

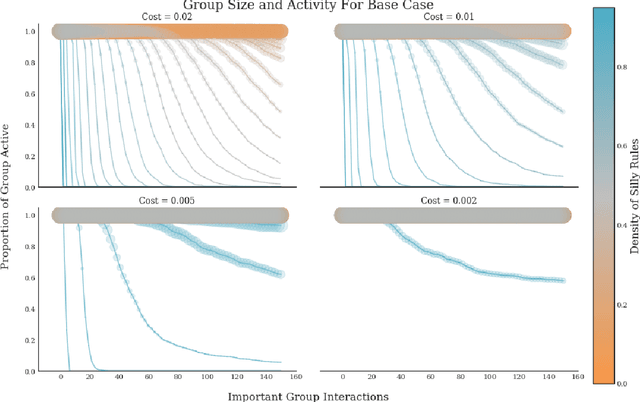

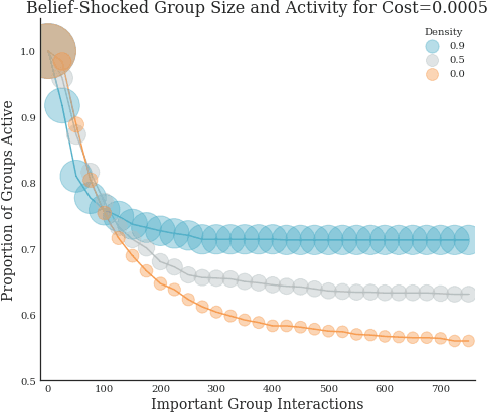

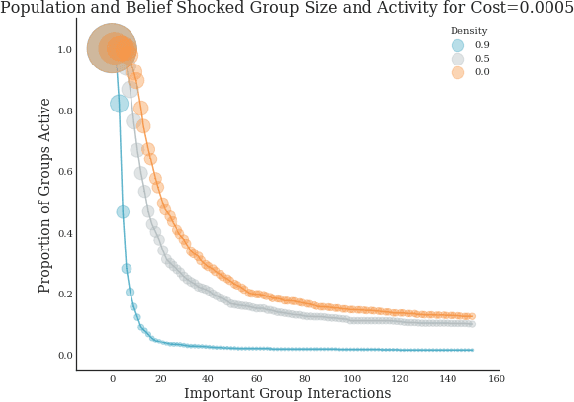

Nov 03, 2018 · Dylan Hadfield-Menell, McKane Andrus, Gillian K. Hadfield

It has become commonplace to assert that autonomous agents will have to be built to follow human rules of behavior: social norms and laws. But human laws and norms are complex and culturally varied systems; in many cases agents will have to learn the rules. This requires autonomous agents to have models of how human rule systems work so that they can make reliable predictions about rules. In this paper we contribute to the building of such models by analyzing an overlooked distinction between important rules and what we call silly rules: rules with no discernible direct impact on welfare. We show that silly rules render a normative system both more robust and more adaptable in response to shocks to perceived stability. They make normativity more legible for humans, and can increase legibility for AI systems as well. For AI systems to integrate into human normative systems, we suggest, it may be important for them to have models that include representations of silly rules.

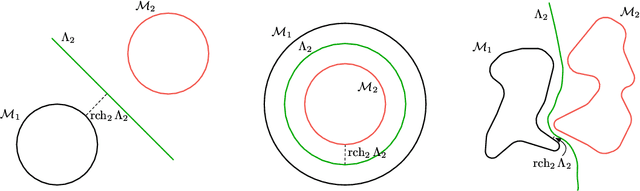

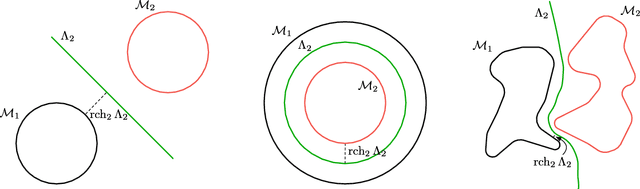

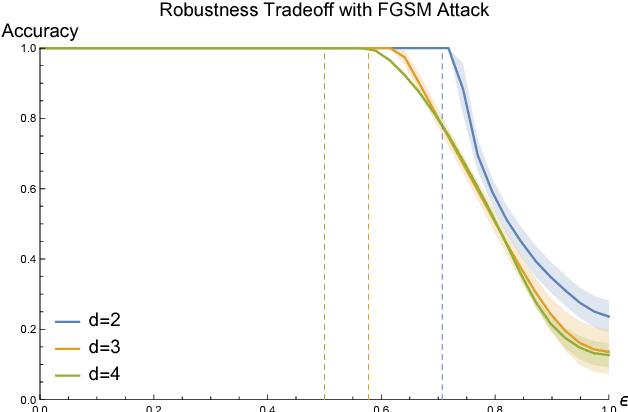

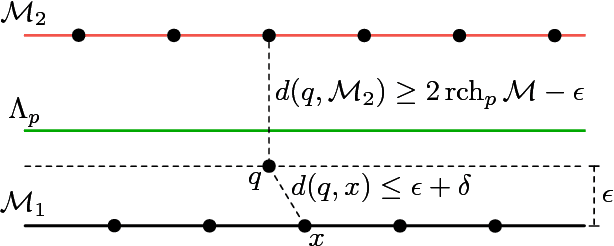

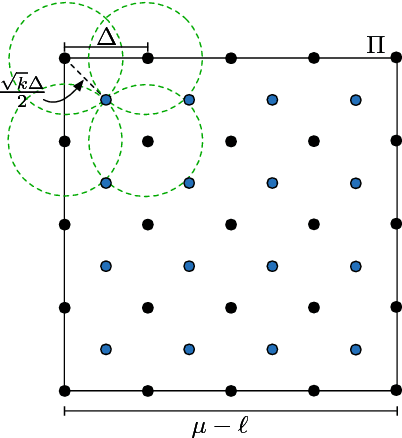

On the Geometry of Adversarial Examples

Nov 01, 2018 · Marc Khoury, Dylan Hadfield-Menell

Adversarial examples are a pervasive phenomenon of machine learning models where seemingly imperceptible perturbations to the input lead to misclassifications for otherwise statistically accurate models. We propose a geometric framework, drawing on tools from the manifold reconstruction literature, to analyze the high-dimensional geometry of adversarial examples. In particular, we highlight the importance of codimension: for low-dimensional data manifolds embedded in high-dimensional space there are many directions off the manifold in which to construct adversarial examples. Adversarial examples are a natural consequence of learning a decision boundary that classifies the low-dimensional data manifold well, but classifies points near the manifold incorrectly. Using our geometric framework we prove (1) a tradeoff between robustness under different norms, (2) that adversarial training in balls around the data is sample inefficient, and (3) sufficient sampling conditions under which nearest neighbor classifiers and ball-based adversarial training are robust.

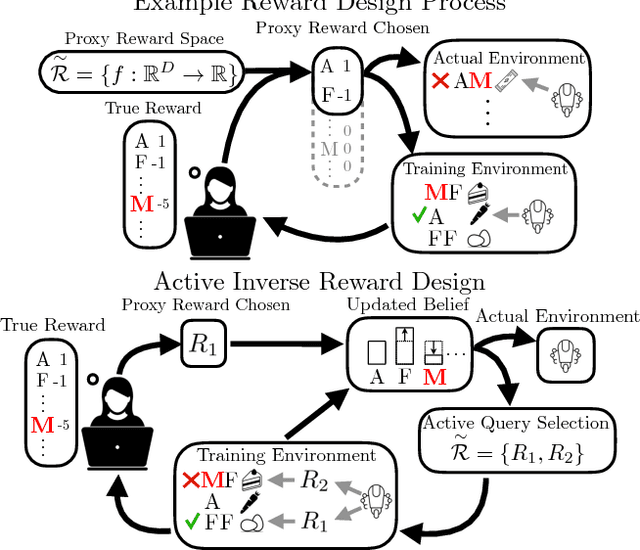

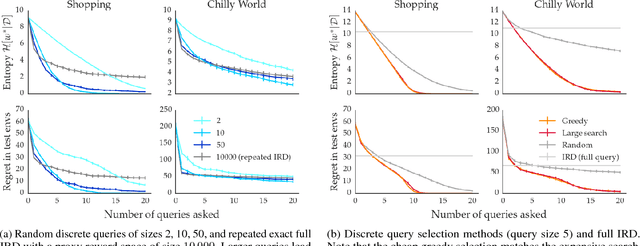

Active Inverse Reward Design

Sep 09, 2018 · Sören Mindermann, Rohin Shah, Adam Gleave, Dylan Hadfield-Menell

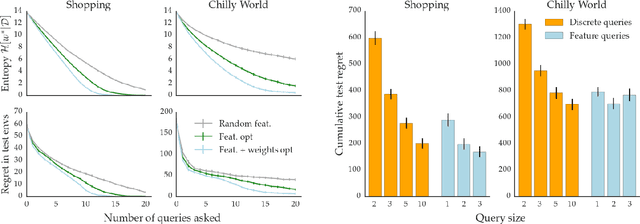

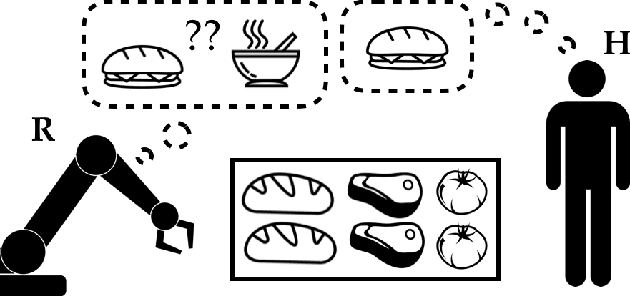

Reward design, the problem of selecting an appropriate reward function for an AI system, is both critically important, as it encodes the task the system should perform, and challenging, as it requires reasoning about and understanding the agent's environment in detail. AI practitioners often iterate on the reward function for their systems in a trial-and-error process to get their desired behavior. Inverse reward design (IRD) is a preference inference method that infers a true reward function from an observed, possibly misspecified, proxy reward function. This allows the system to determine when it should trust its observed reward function and respond appropriately. This has been shown to avoid problems in reward design such as negative side-effects (omitting a seemingly irrelevant but important aspect of the task) and reward hacking (learning to exploit unanticipated loopholes). In this paper, we actively select the *set of proxy reward functions* available to the designer. This improves the quality of inference and simplifies the associated reward design problem. We present two types of queries: discrete queries, where the system designer chooses from a discrete set of reward functions, and feature queries, where the system queries the designer for weights on a small set of features. We evaluate this approach with experiments in a personal shopping assistant domain and a 2D navigation domain. We find that our approach leads to reduced regret at test time compared with vanilla IRD. Our results indicate that actively selecting the set of available reward functions is a promising direction to improve the efficiency and effectiveness of reward design.

An Efficient, Generalized Bellman Update For Cooperative Inverse Reinforcement Learning

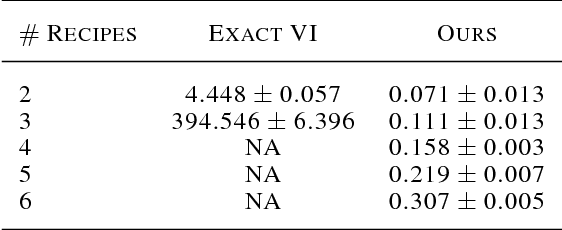

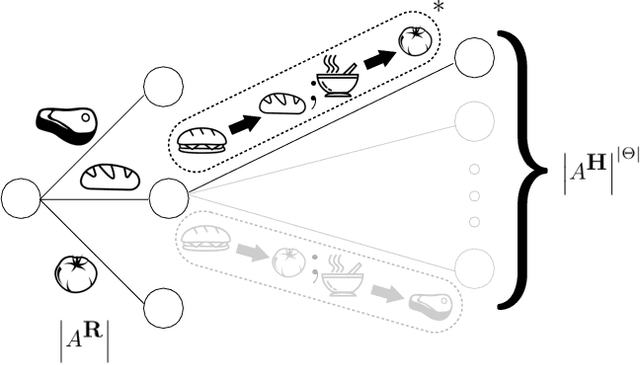

Jun 11, 2018 · Dhruv Malik, Malayandi Palaniappan, Jaime F. Fisac, Dylan Hadfield-Menell, Stuart Russell, Anca D. Dragan

Our goal is for AI systems to correctly identify and act according to their human user's objectives. Cooperative Inverse Reinforcement Learning (CIRL) formalizes this value alignment problem as a two-player game between a human and robot, in which only the human knows the parameters of the reward function: the robot needs to learn them as the interaction unfolds. Previous work showed that CIRL can be solved as a POMDP, but with an action space size exponential in the size of the reward parameter space. In this work, we exploit a specific property of CIRL, namely that the human is a full information agent, to derive an optimality-preserving modification to the standard Bellman update; this reduces the complexity of the problem by an exponential factor and allows us to relax CIRL's assumption of human rationality. We apply this update to a variety of POMDP solvers and find that it enables us to scale CIRL to non-trivial problems, with larger reward parameter spaces, and larger action spaces for both robot and human. In solutions to these larger problems, the human exhibits pedagogic (teaching) behavior, while the robot interprets it as such and attains higher value for the human.

Simplifying Reward Design through Divide-and-Conquer

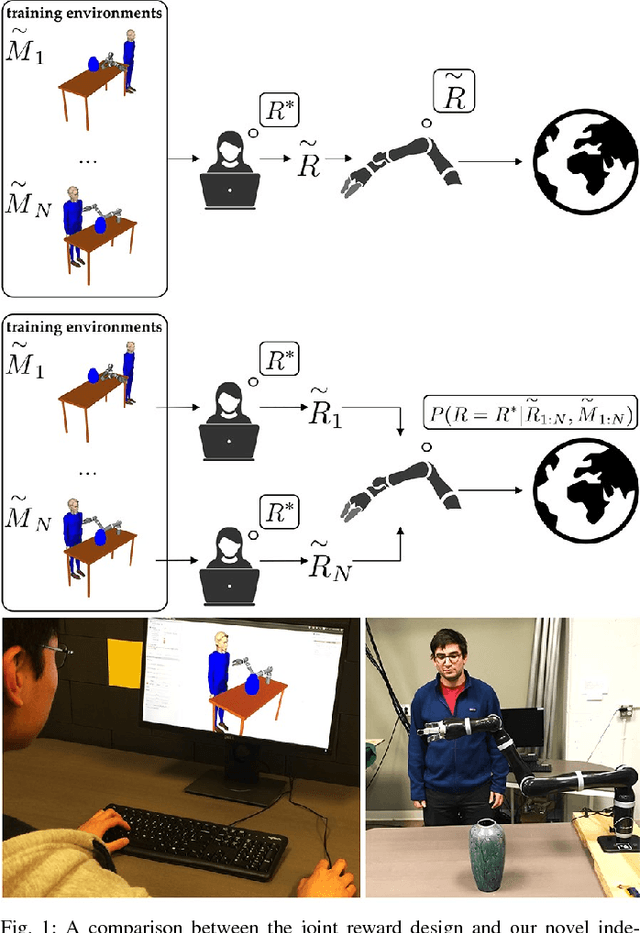

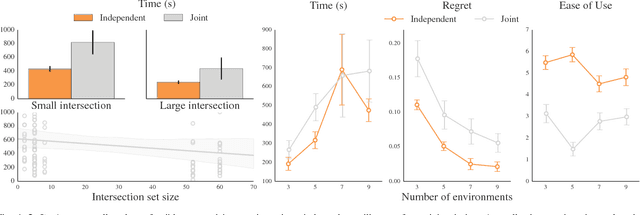

Jun 07, 2018 · Ellis Ratner, Dylan Hadfield-Menell, Anca D. Dragan

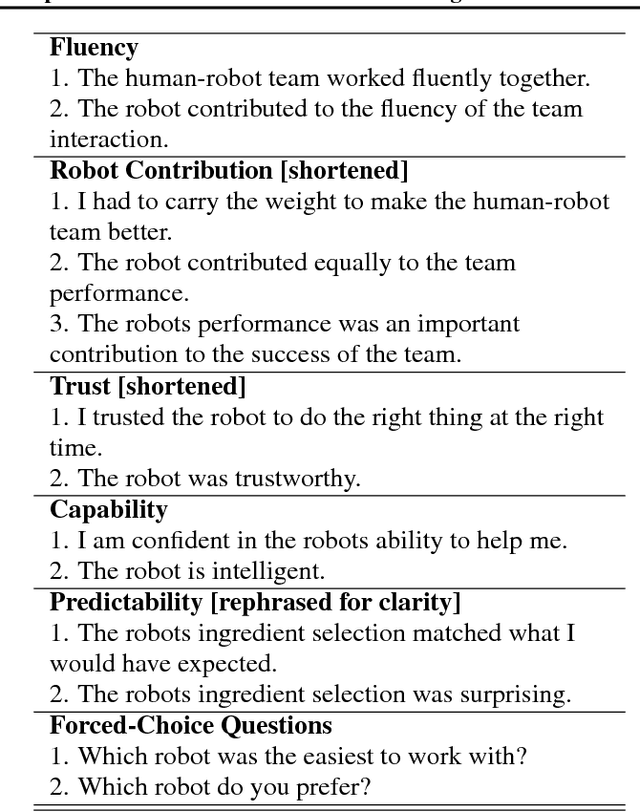

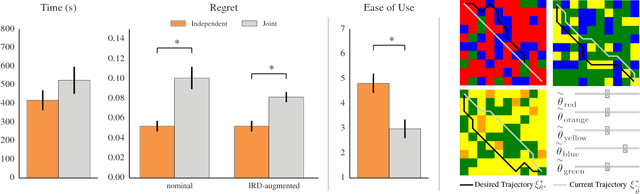

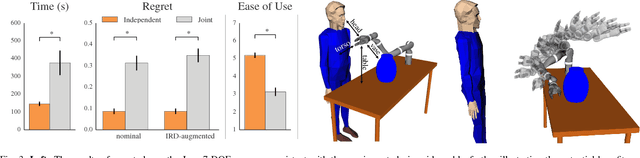

Designing a good reward function is essential to robot planning and reinforcement learning, but it can also be challenging and frustrating. The reward needs to work across multiple different environments, and that often requires many iterations of tuning. We introduce a novel divide-and-conquer approach that enables the designer to specify a reward separately for each environment. By treating these separate reward functions as observations about the underlying true reward, we derive an approach to infer a common reward across all environments. We conduct user studies in an abstract grid world domain and in a motion planning domain for a 7-DOF manipulator that measure user effort and solution quality. We show that our method is faster, easier to use, and produces a higher quality solution than the typical method of designing a reward jointly across all environments. We additionally conduct a series of experiments that measure the sensitivity of these results to different properties of the reward design task, such as the number of environments, the number of feasible solutions per environment, and the fraction of the total features that vary within each environment. We find that independent reward design outperforms the standard, joint, reward design process but works best when the design problem can be divided into simpler subproblems.
