Dongxiao Zhu

Auto-Prompting SAM for Mobile Friendly 3D Medical Image Segmentation

Aug 28, 2023

Abstract:The Segment Anything Model (SAM) has rapidly been adopted for segmenting a wide range of natural images. However, recent studies have indicated that SAM exhibits subpar performance on 3D medical image segmentation tasks. In addition to the domain gaps between natural and medical images, disparities in the spatial arrangement between 2D and 3D images, the substantial computational burden imposed by powerful GPU servers, and the time-consuming manual prompt generation impede the extension of SAM to a broader spectrum of medical image segmentation applications. To address these challenges, in this work, we introduce a novel method, AutoSAM Adapter, designed specifically for 3D multi-organ CT-based segmentation. We employ parameter-efficient adaptation techniques in developing an automatic prompt learning paradigm to facilitate the transformation of the SAM model's capabilities to 3D medical image segmentation, eliminating the need for manually generated prompts. Furthermore, we effectively transfer the acquired knowledge of the AutoSAM Adapter to other lightweight models specifically tailored for 3D medical image analysis, achieving state-of-the-art (SOTA) performance on medical image segmentation tasks. Through extensive experimental evaluation, we demonstrate the AutoSAM Adapter as a critical foundation for effectively leveraging the emerging ability of foundation models in 2D natural image segmentation for 3D medical image segmentation.

Fairness-aware Vision Transformer via Debiased Self-Attention

Jan 31, 2023

Abstract:Vision Transformer (ViT) has recently gained significant interest in solving computer vision (CV) problems due to its capability of extracting informative features and modeling long-range dependencies through the self-attention mechanism. To fully realize the advantages of ViT in real-world applications, recent works have explored the trustworthiness of ViT, including its robustness and explainability. However, another desideratum, fairness, has not yet been adequately addressed in the literature. We establish that the existing fairness-aware algorithms (primarily designed for CNNs) do not perform well on ViT. This necessitates developing a novel framework via Debiased Self-Attention (DSA). DSA is a fairness-through-blindness approach that forces ViT to eliminate spurious features correlated with the sensitive attributes for bias mitigation. Notably, adversarial examples are leveraged to locate and mask the spurious features in the input image patches. In addition, DSA utilizes an attention weights alignment regularizer in the training objective to encourage learning informative features for target prediction. Importantly, our DSA framework leads to improved fairness guarantees over prior works on multiple prediction tasks without compromising target prediction performance.

Negative Flux Aggregation to Estimate Feature Attributions

Jan 17, 2023

Abstract:There are increasing demands for understanding deep neural networks' (DNNs) behavior spurred by growing security and/or transparency concerns. Due to the multi-layer nonlinearity of deep neural network architectures, explaining DNN predictions remains an open problem, preventing us from gaining a deeper understanding of the mechanisms. To enhance the explainability of DNNs, we estimate the input features' attributions to the prediction task using divergence and flux. Inspired by the divergence theorem in vector analysis, we develop a novel Negative Flux Aggregation (NeFLAG) formulation and an efficient approximation algorithm to estimate the attribution map. Unlike previous techniques, ours neither relies on fitting a surrogate model nor needs any path integration of gradients. Both qualitative and quantitative experiments demonstrate the superior performance of NeFLAG in generating more faithful attribution maps than the competing methods.
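
For reference, the divergence theorem mentioned in the abstract can be stated as follows. This is the standard textbook form only; the symbols $\mathbf{F}$ (a vector field), $V$ (a volume), $\partial V$ (its closed boundary surface), and $\mathbf{n}$ (the outward unit normal) are generic notation chosen here, and the abstract does not say how NeFLAG defines the field or the surface.

```latex
\oint_{\partial V} \mathbf{F} \cdot \mathbf{n} \, dS
  \;=\; \int_{V} \nabla \cdot \mathbf{F} \, dV
```

The left-hand side is the net flux of $\mathbf{F}$ through the surface and the right-hand side is the divergence accumulated inside the volume, which is why flux and divergence can stand in for each other.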

Learning Compact Features via In-Training Representation Alignment

Nov 23, 2022

Abstract:Deep neural networks (DNNs) for supervised learning can be viewed as a pipeline of the feature extractor (i.e., last hidden layer) and a linear classifier (i.e., output layer) that are trained jointly with stochastic gradient descent (SGD) on the loss function (e.g., cross-entropy). In each epoch, the true gradient of the loss function is estimated using a mini-batch sampled from the training set and model parameters are then updated with the mini-batch gradients. Although the latter provides an unbiased estimation of the former, they are subject to substantial variances derived from the size and number of sampled mini-batches, leading to noisy and jumpy updates. To stabilize such undesirable variance in estimating the true gradients, we propose In-Training Representation Alignment (ITRA) that explicitly aligns feature distributions of two different mini-batches with a matching loss in the SGD training process. We also provide a rigorous analysis of the desirable effects of the matching loss on feature representation learning: (1) extracting compact feature representation; (2) reducing over-adaptation on mini-batches via an adaptive weighting mechanism; and (3) accommodating multi-modalities. Finally, we conduct large-scale experiments on both image and text classification to demonstrate its superior performance over strong baselines.
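
The idea of aligning the feature distributions of two mini-batches with a matching loss can be sketched as below. This is a minimal illustration, not the authors' implementation: the abstract does not name the matching loss, so a Gaussian-kernel maximum mean discrepancy (MMD) is assumed here, and the function names, the `bandwidth` parameter, and the `weight` parameter are all hypothetical.

```python
import numpy as np


def rbf_kernel(a, b, bandwidth=1.0):
    """Gaussian kernel matrix between the rows of a and the rows of b."""
    sq_dists = (
        np.sum(a ** 2, axis=1)[:, None]
        + np.sum(b ** 2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    # Clamp tiny negative values caused by floating-point round-off.
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * bandwidth ** 2))


def mmd_matching_loss(feats_a, feats_b, bandwidth=1.0):
    """Biased squared-MMD estimate between two mini-batches of features.

    Assumption: MMD stands in for the unspecified matching loss.
    """
    k_aa = rbf_kernel(feats_a, feats_a, bandwidth).mean()
    k_bb = rbf_kernel(feats_b, feats_b, bandwidth).mean()
    k_ab = rbf_kernel(feats_a, feats_b, bandwidth).mean()
    return k_aa + k_bb - 2.0 * k_ab


def total_loss(classification_loss, feats_a, feats_b, weight=0.1):
    """Classification loss plus a weighted matching term (weight is hypothetical)."""
    return classification_loss + weight * mmd_matching_loss(feats_a, feats_b)
```

In a training loop, `feats_a` and `feats_b` would be the last-hidden-layer features of two independently sampled mini-batches, and the combined loss would be minimized with SGD in place of the classification loss alone.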

Coupling User Preference with External Rewards to Enable Driver-centered and Resource-aware EV Charging Recommendation

Oct 23, 2022

Abstract:Electric Vehicle (EV) charging recommendation that both accommodates user preference and adapts to the ever-changing external environment arises as a cost-effective strategy to alleviate the range anxiety of private EV drivers. Previous studies focus on centralized strategies to achieve optimized resource allocation, particularly useful for privacy-indifferent taxi fleets and fixed-route public transit. However, private EV drivers seek a more personalized and resource-aware charging recommendation that is tailor-made to accommodate the user preference (when and where to charge) yet sufficiently adaptive to the spatiotemporal mismatch between charging supply and demand. Here we propose a novel Regularized Actor-Critic (RAC) charging recommendation approach that would allow each EV driver to strike an optimal balance between the user preference (historical charging pattern) and the external reward (driving distance and wait time). Experimental results on two real-world datasets demonstrate the unique features and superior performance of our approach over the competing methods.

FocalUNETR: A Focal Transformer for Boundary-aware Segmentation of CT Images

Oct 06, 2022

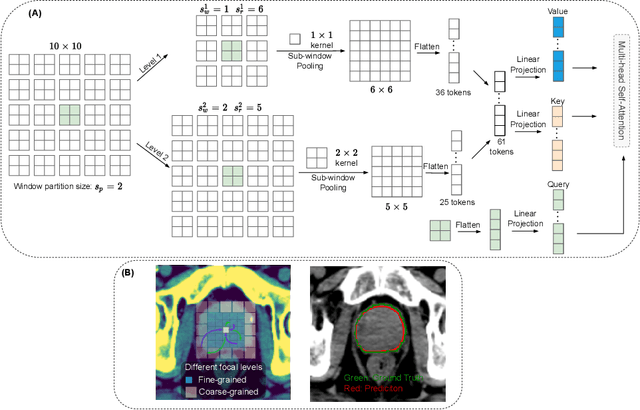

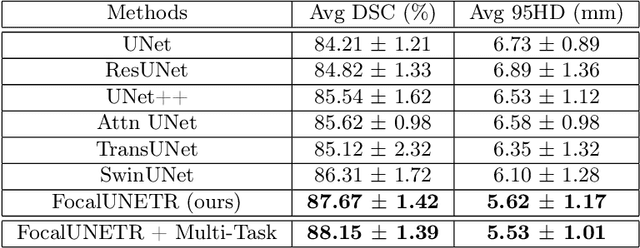

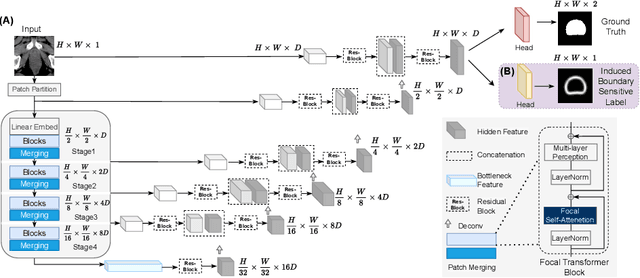

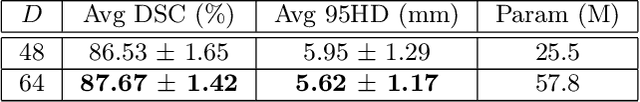

Abstract:Computed Tomography (CT) based precise prostate segmentation for treatment planning is challenging due to (1) the unclear boundary of the prostate derived from CT's poor soft-tissue contrast, and (2) the limitation of convolutional neural network based models in capturing long-range global context. Here we propose a focal transformer based image segmentation architecture to effectively and efficiently extract local visual features and global context from CT images. Furthermore, we design a main segmentation task and an auxiliary boundary-induced label regression task as regularization to simultaneously optimize segmentation results and mitigate the unclear boundary effect, particularly on unseen data sets. Extensive experiments on a large data set of 400 prostate CT scans demonstrate the superior performance of our focal transformer over the competing methods on the prostate segmentation task.

Saliency Guided Adversarial Training for Learning Generalizable Features with Applications to Medical Imaging Classification System

Sep 09, 2022

Abstract:This work tackles a central machine learning problem of performance degradation on out-of-distribution (OOD) test sets. The problem is particularly salient in medical imaging based diagnosis systems that appear to be accurate but fail when tested in new hospitals/datasets. Recent studies indicate that such a system might learn shortcut and non-relevant features instead of generalizable features, so-called good features. We hypothesize that adversarial training can eliminate shortcut features whereas saliency guided training can filter out non-relevant features; both are nuisance features accounting for the performance degradation on OOD test sets. With that, we formulate a novel model training scheme for the deep neural network to learn good features for classification and/or detection tasks, ensuring a consistent generalization performance on OOD test sets. The experimental results qualitatively and quantitatively demonstrate the superior performance of our method using the benchmark CXR image data sets on classification tasks.

* 9 pages, 3 figures

Adversarially Robust and Explainable Model Compression with On-Device Personalization for Text Classification

Jan 20, 2021

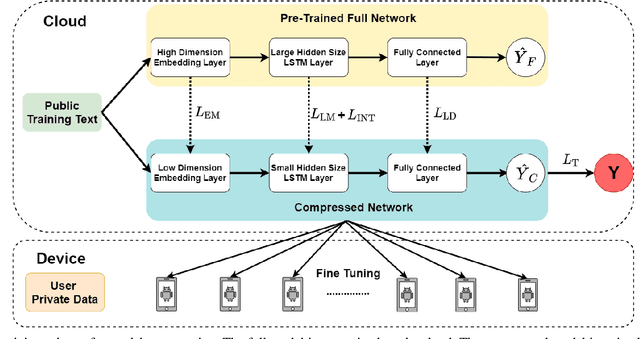

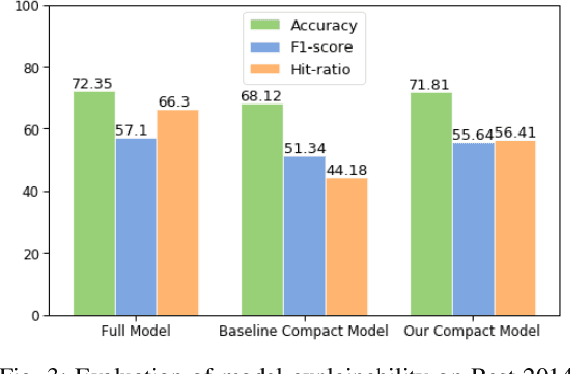

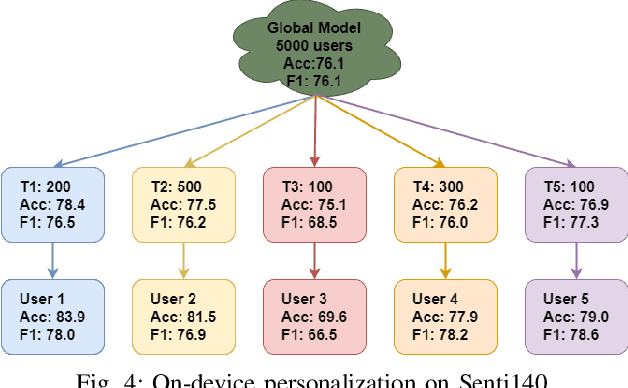

Abstract:On-device Deep Neural Networks (DNNs) have recently gained more attention due to the increasing computing power of mobile devices and the number of applications in Computer Vision (CV), Natural Language Processing (NLP), and Internet of Things (IoT). Unfortunately, the existing efficient convolutional neural network (CNN) architectures designed for CV tasks are not directly applicable to NLP tasks, and the tiny Recurrent Neural Network (RNN) architectures have been designed primarily for IoT applications. In NLP applications, although model compression has seen initial success in on-device text classification, there are at least three major challenges yet to be addressed: adversarial robustness, explainability, and personalization. Here we attempt to tackle these challenges by designing a new training scheme for model compression and adversarial robustness, including the optimization of an explainable feature mapping objective, a knowledge distillation objective, and an adversarial robustness objective. The resulting compressed model is personalized using on-device private training data via fine-tuning. We perform extensive experiments to compare our approach with both compact RNN (e.g., FastGRNN) and compressed RNN (e.g., PRADO) architectures in both natural and adversarial NLP test settings.

Improving Adversarial Robustness via Probabilistically Compact Loss with Logit Constraints

Dec 14, 2020

Abstract:Convolutional neural networks (CNNs) have achieved state-of-the-art performance on various tasks in computer vision. However, recent studies demonstrate that these models are vulnerable to carefully crafted adversarial samples and suffer from a significant performance drop when predicting them. Many methods have been proposed to improve adversarial robustness (e.g., adversarial training and new loss functions to learn adversarially robust feature representations). Here we offer a unique insight into the predictive behavior of CNNs: they tend to misclassify adversarial samples into the most probable false classes. This inspires us to propose a new Probabilistically Compact (PC) loss with logit constraints, which can be used as a drop-in replacement for cross-entropy (CE) loss to improve CNNs' adversarial robustness. Specifically, PC loss enlarges the probability gaps between the true class and false classes, while the logit constraints prevent the gaps from being melted by a small perturbation. We extensively compare our method with the state of the art using large-scale datasets under both white-box and black-box attacks to demonstrate its effectiveness. The source code is available at https://github.com/xinli0928/PC-LC.
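
To make "enlarging the probability gaps between the true class and false classes" concrete, here is a minimal hinge-style sketch. It is an assumption-laden illustration rather than the paper's exact formulation: the function name `probability_gap_loss`, the `margin` parameter, and the hinge form are chosen here for clarity, and the logit constraints mentioned in the abstract are not implemented. The authors' repository linked above is the authoritative source.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax with the usual max-subtraction for stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def probability_gap_loss(logits, labels, margin=0.5):
    """Penalize every false class whose probability comes within `margin`
    of the true-class probability (hypothetical hinge-style sketch)."""
    probs = softmax(logits)
    n = probs.shape[0]
    true_probs = probs[np.arange(n), labels][:, None]
    hinge = np.maximum(0.0, probs + margin - true_probs)
    hinge[np.arange(n), labels] = 0.0  # the true class is not compared with itself
    return hinge.sum() / n
```

With this form, a confidently correct prediction contributes nothing to the loss, while a prediction whose false-class probabilities sit close to the true-class probability is penalized in proportion to how far each gap falls short of the margin.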

Explainable Recommendation via Interpretable Feature Mapping and Evaluation of Explainability

Jul 12, 2020

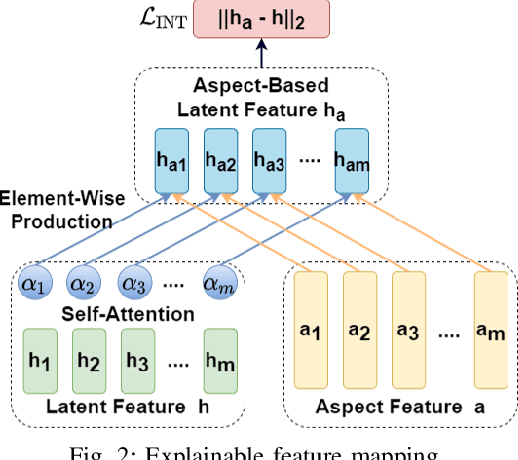

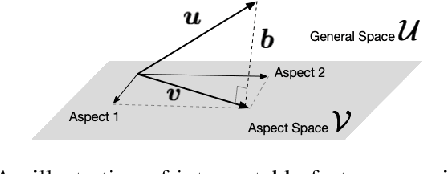

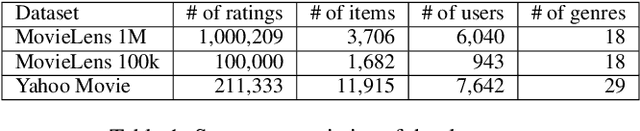

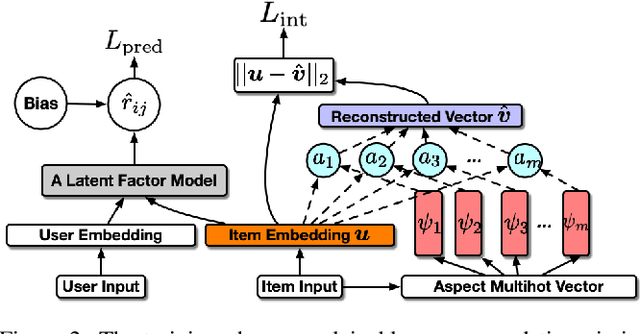

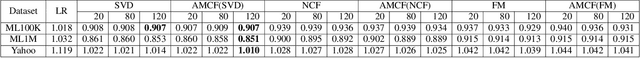

Abstract:Latent factor collaborative filtering (CF) has been a widely used technique for recommender systems, learning the semantic representations of users and items. Recently, explainable recommendation has attracted much attention from the research community. However, a trade-off exists between explainability and performance of the recommendation, where metadata is often needed to alleviate the dilemma. We present a novel feature mapping approach that maps the uninterpretable general features onto the interpretable aspect features, achieving both satisfactory accuracy and explainability in the recommendations by simultaneous minimization of rating prediction loss and interpretation loss. To evaluate the explainability, we propose two new evaluation metrics specifically designed for aspect-level explanation using surrogate ground truth. Experimental results demonstrate strong performance in both recommendation and explanation, eliminating the need for metadata. Code is available from https://github.com/pd90506/AMCF.

* Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence (IJCAI)
