Donghai Guan

ViEEG: Hierarchical Neural Coding with Cross-Modal Progressive Enhancement for EEG-Based Visual Decoding

May 18, 2025

Abstract: Understanding and decoding brain activity into visual representations is a fundamental challenge at the intersection of neuroscience and artificial intelligence. While EEG-based visual decoding has shown promise due to its non-invasive, low-cost nature and millisecond-level temporal resolution, existing methods are limited by their reliance on flat neural representations that overlook the brain's inherent visual hierarchy. In this paper, we introduce ViEEG, a biologically inspired hierarchical EEG decoding framework that aligns with the Hubel-Wiesel theory of visual processing. ViEEG decomposes each visual stimulus into three biologically aligned components (contour, foreground object, and contextual scene), which serve as anchors for a three-stream EEG encoder. These EEG features are progressively integrated via cross-attention routing, simulating cortical information flow from V1 to IT to the association cortex. We further adopt hierarchical contrastive learning to align EEG representations with CLIP embeddings, enabling zero-shot object recognition. Extensive experiments on the THINGS-EEG dataset demonstrate that ViEEG achieves state-of-the-art performance, with 40.9% Top-1 accuracy in subject-dependent and 22.9% Top-1 accuracy in cross-subject settings, surpassing existing methods by over 45%. Our framework not only advances the performance frontier but also sets a new paradigm for biologically grounded brain decoding in AI.
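
The contrastive alignment between EEG features and CLIP image embeddings mentioned in the abstract can be illustrated with a single-level, CLIP-style symmetric InfoNCE loss. This is only a minimal sketch: the function names, the temperature of 0.07, and the use of one loss level rather than the paper's hierarchical scheme are all assumptions made for illustration, not the authors' implementation.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def symmetric_info_nce(eeg_embs, img_embs, temperature=0.07):
    """Average of EEG->image and image->EEG cross-entropy over matched pairs.

    Row i of eeg_embs is assumed to be paired with row i of img_embs.
    The temperature value is an illustrative assumption.
    """
    eeg = [l2_normalize(v) for v in eeg_embs]
    img = [l2_normalize(v) for v in img_embs]
    # logits[i][j] compares EEG sample i with image j.
    logits = [
        [sum(a * b for a, b in zip(e, m)) / temperature for m in img]
        for e in eeg
    ]

    def cross_entropy(rows):
        # The correct class for row i is column i (the matched pair).
        total = 0.0
        for i, row in enumerate(rows):
            mx = max(row)  # subtract the max for numerical stability
            log_z = mx + math.log(sum(math.exp(x - mx) for x in row))
            total += log_z - row[i]
        return total / len(rows)

    transposed = [list(col) for col in zip(*logits)]
    return 0.5 * (cross_entropy(logits) + cross_entropy(transposed))
```

With matched pairs pointing in the same direction the loss approaches zero, and it grows when the pairing is shuffled, which is the behaviour that pulls EEG features toward their corresponding image embeddings.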

WTCL-Dehaze: Rethinking Real-world Image Dehazing via Wavelet Transform and Contrastive Learning

Oct 07, 2024

Abstract: Images captured in hazy outdoor conditions often suffer from colour distortion, low contrast, and loss of detail, which impair high-level vision tasks. Single image dehazing, which aims to restore image clarity, is essential for applications such as autonomous driving and surveillance. In this work, we propose WTCL-Dehaze, an enhanced semi-supervised dehazing network that integrates Contrastive Loss and Discrete Wavelet Transform (DWT). We incorporate contrastive regularization to enhance feature representation by contrasting hazy and clear image pairs. Additionally, we utilize DWT for multi-scale feature extraction, effectively capturing high-frequency details and global structures. Our approach leverages both labelled and unlabelled data to mitigate the domain gap and improve generalization. The model is trained on a combination of synthetic and real-world datasets, ensuring robust performance across different scenarios. Extensive experiments demonstrate that our proposed algorithm achieves superior performance and improved robustness compared to state-of-the-art single image dehazing methods on both benchmark datasets and real-world images.
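
To make the role of the DWT concrete, the sketch below computes one level of a 2D Haar transform, splitting an image into a coarse approximation and three detail sub-bands. The abstract does not say which wavelet the network uses, so the Haar basis, the function name, and the sub-band names here are assumptions chosen for simplicity.

```python
def haar_dwt2(image):
    """One level of an orthonormal 2D Haar transform.

    `image` is a list of rows with even height and width. Returns four
    half-resolution sub-bands: (approx, col_diff, row_diff, diag).
    """
    height, width = len(image), len(image[0])
    approx, col_diff, row_diff, diag = [], [], [], []
    for r in range(0, height, 2):
        row_a, row_c, row_r, row_d = [], [], [], []
        for c in range(0, width, 2):
            # 2x2 block: a b
            #            d e
            a, b = image[r][c], image[r][c + 1]
            d, e = image[r + 1][c], image[r + 1][c + 1]
            row_a.append((a + b + d + e) / 2.0)  # local average: global structure
            row_c.append((a - b + d - e) / 2.0)  # difference between adjacent columns
            row_r.append((a + b - d - e) / 2.0)  # difference between adjacent rows
            row_d.append((a - b - d + e) / 2.0)  # diagonal detail
        approx.append(row_a)
        col_diff.append(row_c)
        row_diff.append(row_r)
        diag.append(row_d)
    return approx, col_diff, row_diff, diag
```

Applying the function again to `approx` yields the next coarser scale, which is one simple way to obtain multi-scale features; the three difference bands carry the high-frequency detail that haze tends to suppress.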

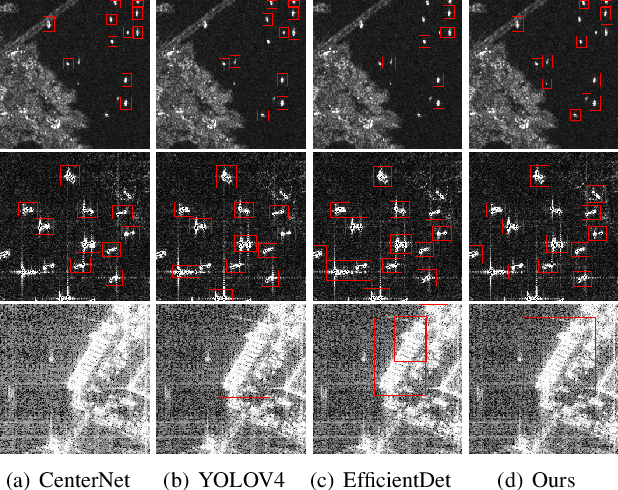

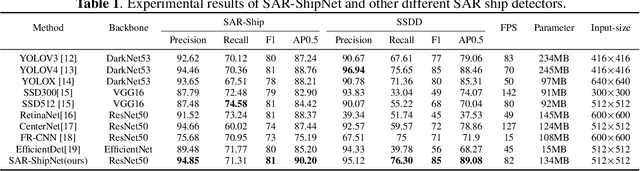

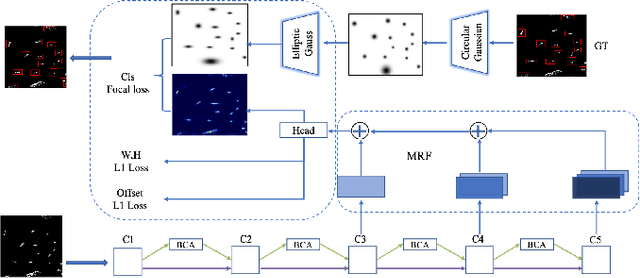

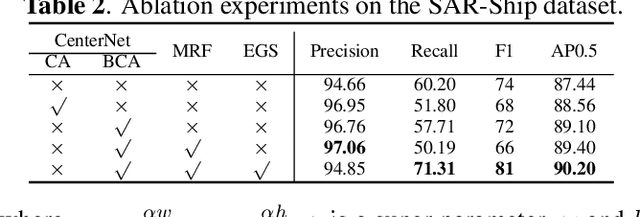

SAR-ShipNet: SAR-Ship Detection Neural Network via Bidirectional Coordinate Attention and Multi-resolution Feature Fusion

Mar 29, 2022

Abstract: This paper studies a practically meaningful problem: ship detection in synthetic aperture radar (SAR) images using neural networks. We broadly extract different types of SAR image features and raise the question of whether these extracted features help to (1) suppress data variations (e.g., complex land-sea backgrounds, scattered noise) in real-world SAR images, and (2) enhance the features of ships, which are small objects with varying aspect (length-width) ratios, thereby improving ship detection. To answer this question, we propose a SAR-ship detection neural network (called SAR-ShipNet for short), built on CenterNet with newly developed Bidirectional Coordinate Attention (BCA) and Multi-resolution Feature Fusion (MRF) modules. Moreover, considering the varying length-width ratios of arbitrary ships, we adopt an elliptical Gaussian probability distribution in CenterNet to improve the performance of the base detector. Experimental results on the public SAR-Ship dataset show that SAR-ShipNet achieves competitive advantages in both speed and accuracy.
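
The elliptical Gaussian idea can be sketched as a centre-point heatmap target whose spread along each axis follows the box width and height, instead of the single radius used for a circular Gaussian. The rule `sigma = side / scale` with `scale=6.0`, along with the function and parameter names, is an assumption for illustration; the abstract does not give the paper's exact formula.

```python
import math


def elliptical_gaussian_heatmap(height, width, cx, cy, box_w, box_h, scale=6.0):
    """Render an axis-aligned elliptical Gaussian centred at (cx, cy).

    sigma_x and sigma_y are tied to the box width and height, so elongated
    ships get elongated targets. `scale` is a hypothetical tuning constant.
    """
    sigma_x = max(box_w / scale, 1e-6)  # guard against zero-sized boxes
    sigma_y = max(box_h / scale, 1e-6)
    heatmap = []
    for y in range(height):
        row = []
        for x in range(width):
            exponent = ((x - cx) ** 2) / (2 * sigma_x ** 2) \
                     + ((y - cy) ** 2) / (2 * sigma_y ** 2)
            row.append(math.exp(-exponent))
        heatmap.append(row)
    return heatmap
```

For a box that is three times wider than it is tall, the target decays slowly along the x axis and quickly along the y axis, so positive supervision stays on the ship body rather than spilling onto the surrounding sea clutter.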
