Dongbo Zhang

IDCNet: Guided Video Diffusion for Metric-Consistent RGBD Scene Generation with Precise Camera Control

Aug 06, 2025

Abstract: We present IDC-Net (Image-Depth Consistency Network), a novel framework designed to generate RGB-D video sequences under explicit camera trajectory control. Unlike approaches that treat RGB and depth generation separately, IDC-Net jointly synthesizes both RGB images and corresponding depth maps within a unified geometry-aware diffusion model. The joint learning framework strengthens spatial and geometric alignment across frames, enabling more precise camera control in the generated sequences. To support the training of this camera-conditioned model and ensure high geometric fidelity, we construct a camera-image-depth consistent dataset with metric-aligned RGB videos, depth maps, and accurate camera poses, which provides precise geometric supervision with notably improved inter-frame geometric consistency. Moreover, we introduce a geometry-aware transformer block that enables fine-grained camera control, enhancing control over the generated sequences. Extensive experiments show that IDC-Net achieves improvements over state-of-the-art approaches in both visual quality and geometric consistency of generated scene sequences. Notably, the generated RGB-D sequences can be fed directly into downstream 3D scene reconstruction tasks without extra post-processing steps, showcasing the practical benefits of our joint learning framework. See more at https://idcnet-scene.github.io.

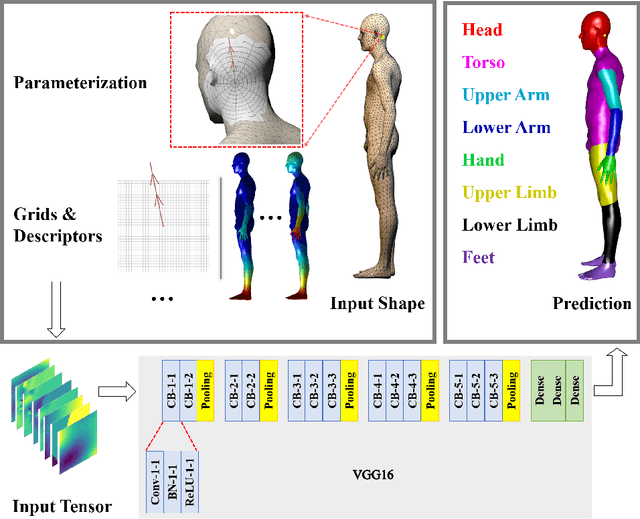

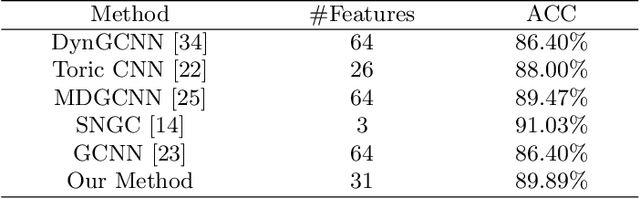

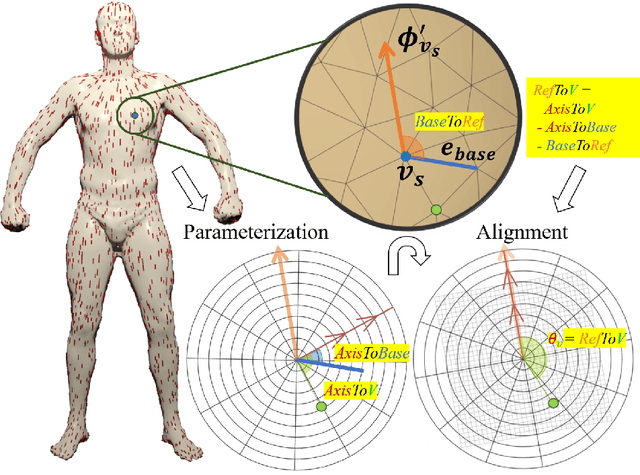

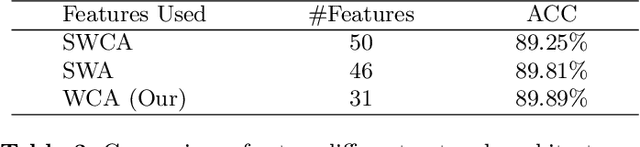

Deep Patch-based Human Segmentation

Jul 11, 2020

Abstract: 3D human segmentation has seen noticeable progress in recent years. It, however, still remains a challenge to date. In this paper, we introduce a deep patch-based method for 3D human segmentation. We first extract a local surface patch for each vertex and then parameterize it into a 2D grid (or image). We then embed identified shape descriptors into the 2D grids which are further fed into the powerful 2D Convolutional Neural Network for regressing corresponding semantic labels (e.g., head, torso). Experiments demonstrate that our method is effective in human segmentation, and achieves state-of-the-art accuracy.
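The patch-to-grid step described in this abstract can be illustrated with a minimal sketch. The abstract does not say how patches are extracted or parameterized, nor which shape descriptors are used, so everything here is an assumption made for illustration: the function name `patch_to_grid` is hypothetical, the patch is taken as the k nearest vertices by Euclidean distance, the parameterization is a PCA projection onto an estimated tangent plane, and the embedded descriptor is simply the height above that plane.

```python
import numpy as np

def patch_to_grid(vertices, center_idx, k=32, grid_size=8):
    """Rasterize the k-nearest-neighbor patch around one vertex into a 2D grid.

    Hypothetical illustration only; not the method from the paper.
    """
    center = vertices[center_idx]
    # Local patch: the k vertices closest to the center (Euclidean, not geodesic).
    dists = np.linalg.norm(vertices - center, axis=1)
    patch = vertices[np.argsort(dists)[:k]] - center

    # PCA via SVD: the first two right-singular vectors span the estimated
    # tangent plane, the third approximates the surface normal.
    _, _, vt = np.linalg.svd(patch - patch.mean(axis=0), full_matrices=False)
    uv = patch @ vt[:2].T      # (k, 2) in-plane coordinates
    height = patch @ vt[2]     # (k,) offset along the estimated normal

    # Map in-plane coordinates from [-radius, radius] to integer cell indices.
    radius = np.abs(uv).max() + 1e-9
    cells = ((uv / radius + 1.0) * 0.5 * (grid_size - 1)).round().astype(int)

    # Average the per-vertex descriptor (assumed: height) within each cell.
    grid = np.zeros((grid_size, grid_size))
    count = np.zeros((grid_size, grid_size))
    np.add.at(grid, (cells[:, 0], cells[:, 1]), height)
    np.add.at(count, (cells[:, 0], cells[:, 1]), 1)
    return grid / np.maximum(count, 1)
```

Calling `patch_to_grid` once per vertex and stacking the results would give a batch of single-channel images that a 2D CNN could classify into part labels; additional descriptors would become additional channels.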
