Doksoo Lee

Wayne

An Attention-based Spatio-Temporal Neural Operator for Evolving Physics

Jun 12, 2025

Abstract: In scientific machine learning (SciML), a key challenge is learning unknown, evolving physical processes and making predictions across spatio-temporal scales. For example, in real-world manufacturing problems like additive manufacturing, users adjust known machine settings while unknown environmental parameters simultaneously fluctuate. To make reliable predictions, a model should not only capture long-range spatio-temporal interactions from data but also adapt to new and unknown environments; traditional machine learning models excel at the first task but often lack physical interpretability and struggle to generalize under varying environmental conditions. To tackle these challenges, we propose the Attention-based Spatio-Temporal Neural Operator (ASNO), a novel architecture that combines separable attention mechanisms for spatial and temporal interactions and adapts to unseen physical parameters. Inspired by the backward differentiation formula (BDF), ASNO learns a transformer for temporal prediction and extrapolation and an attention-based neural operator for handling varying external loads. Isolating historical state contributions from external forces enhances interpretability, enabling the discovery of underlying physical laws and generalization to unseen physical environments. Empirical results on SciML benchmarks demonstrate that ASNO outperforms existing models, establishing its potential for engineering applications, physics discovery, and interpretable machine learning.
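For readers unfamiliar with the backward differentiation formula mentioned in this abstract, the standard textbook form is sketched below for an ODE $\dot{u} = f(t, u)$ with step size $h$. The symbols $u_n$, $a_k$, $\beta$, and $s$ are notation assumed here for illustration; the abstract does not state how ASNO's components map onto these terms, so the closing comment is only an informal reading.

```latex
% General s-step BDF: a weighted sum of the states equals one implicit
% evaluation of the right-hand side at the newest time level.
\sum_{k=0}^{s} a_k \, u_{n+k} = h \, \beta \, f(t_{n+s}, u_{n+s})

% First order (backward Euler):
u_{n+1} - u_n = h \, f(t_{n+1}, u_{n+1})

% Second order (BDF2):
u_{n+2} - \tfrac{4}{3} u_{n+1} + \tfrac{1}{3} u_n
  = \tfrac{2}{3} \, h \, f(t_{n+2}, u_{n+2})

% Informal reading: the terms in u_n, u_{n+1}, ... carry the history of the
% state, while the f term carries the current dynamics and forcing.
```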

Data-Driven Design for Metamaterials and Multiscale Systems: A Review

Jul 01, 2023

Abstract: Metamaterials are artificial materials designed to exhibit effective material parameters that go beyond those found in nature. Composed of unit cells with rich designability that are assembled into multiscale systems, they hold great promise for realizing next-generation devices with exceptional, often exotic, functionalities. However, the vast design space and intricate structure-property relationships pose significant challenges in their design. A compelling paradigm that could bring the full potential of metamaterials to fruition is emerging: data-driven design. In this review, we provide a holistic overview of this rapidly evolving field, emphasizing the general methodology instead of specific domains and deployment contexts. We organize existing research into data-driven modules, encompassing data acquisition, machine learning-based unit cell design, and data-driven multiscale optimization. We further categorize the approaches within each module based on shared principles, analyze and compare strengths and applicability, explore connections between different modules, and identify open research questions and opportunities.

Hierarchical Deep Generative Models for Design Under Free-Form Geometric Uncertainty

Mar 09, 2022

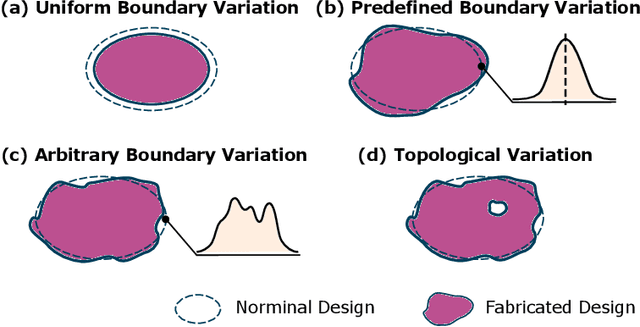

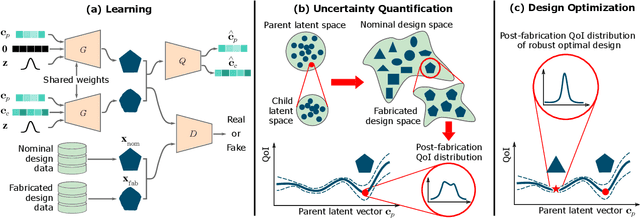

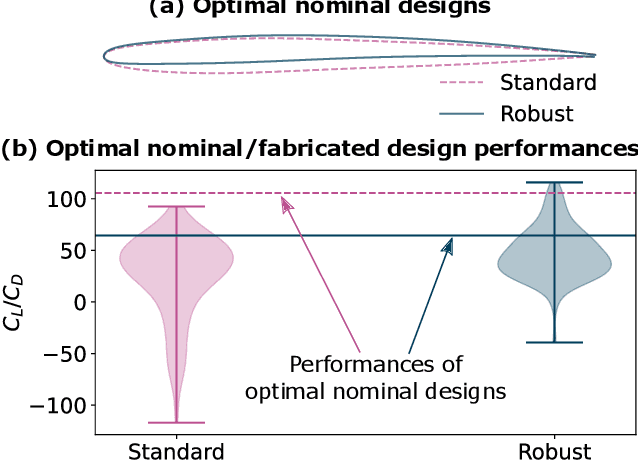

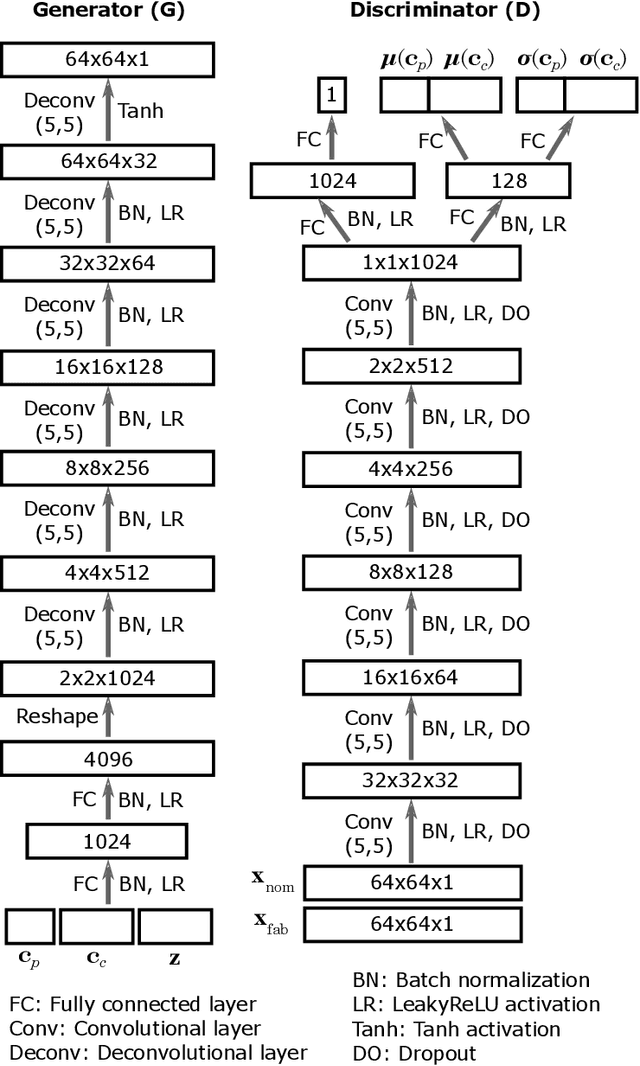

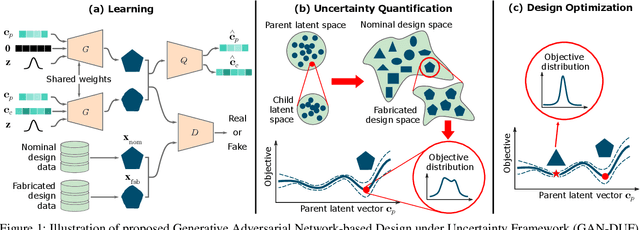

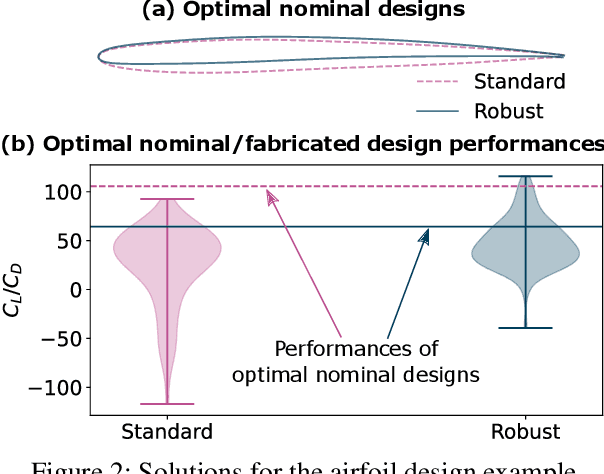

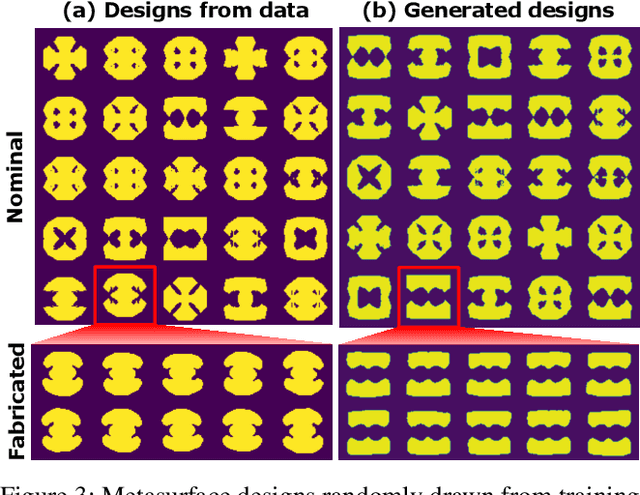

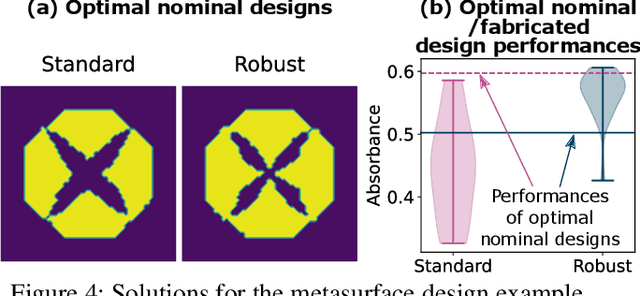

Abstract: Deep generative models have demonstrated effectiveness in learning compact and expressive design representations that significantly improve geometric design optimization. However, these models do not consider the uncertainty introduced by manufacturing or fabrication. Past work that quantifies such uncertainty often makes simplifying assumptions on geometric variations, while the "real-world", "free-form" uncertainty and its impact on design performance are difficult to quantify due to the high dimensionality. To address this issue, we propose a Generative Adversarial Network-based Design under Uncertainty Framework (GAN-DUF), which contains a deep generative model that simultaneously learns a compact representation of nominal (ideal) designs and the conditional distribution of fabricated designs given any nominal design. This opens up new possibilities of 1) building a universal uncertainty quantification model compatible with both shape and topological designs, 2) modeling free-form geometric uncertainties without the need to make any assumptions on the distribution of geometric variability, and 3) allowing fast prediction of uncertainties for new nominal designs. We can combine the proposed deep generative model with robust design optimization or reliability-based design optimization for design under uncertainty. We demonstrated the framework on two real-world engineering design examples and showed its capability of finding solutions that perform better after fabrication.

T-METASET: Task-Aware Generation of Metamaterial Datasets by Diversity-Based Active Learning

Feb 21, 2022

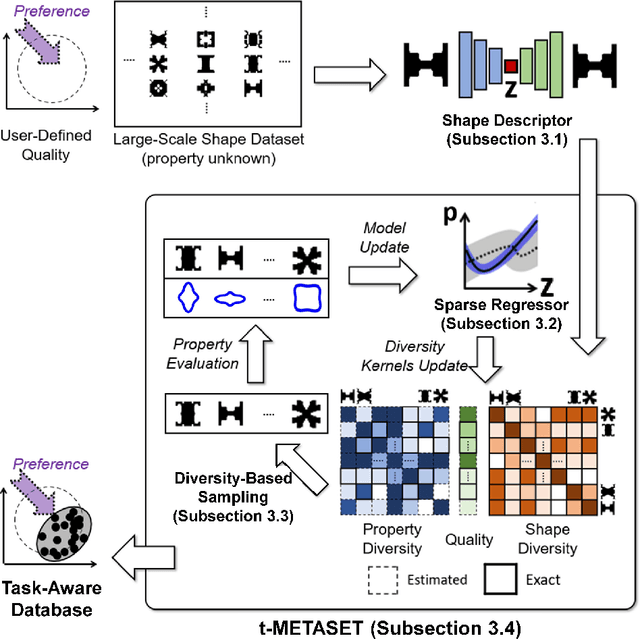

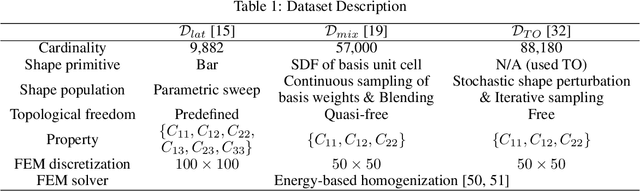

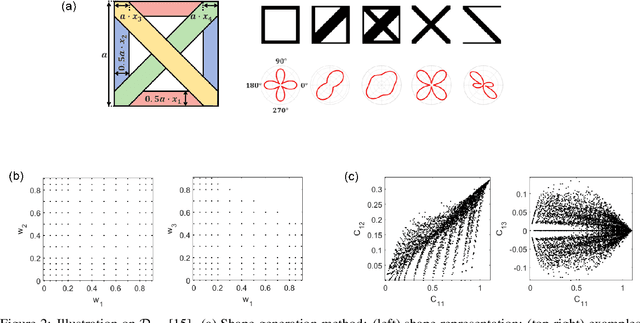

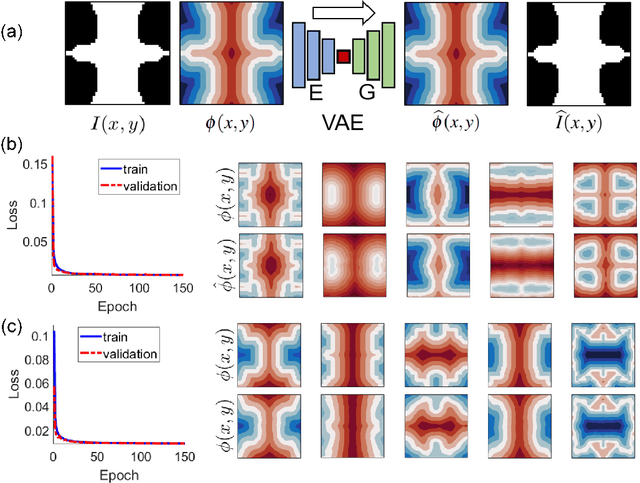

Abstract: Inspired by the recent success of deep learning in diverse domains, data-driven metamaterials design has emerged as a compelling design paradigm to unlock the potential of multiscale architecture. However, existing model-centric approaches lack principled methodologies dedicated to high-quality data generation. Resorting to space-filling design in shape descriptor space, existing metamaterial datasets suffer from property distributions that are either highly imbalanced or at odds with design tasks of interest. To this end, we propose t-METASET: an intelligent data acquisition framework for task-aware dataset generation. We seek a solution to a commonplace yet frequently overlooked scenario at early design stages: when a massive ($\sim O(10^4)$) shape library has been prepared with no properties evaluated. The key idea is to exploit a data-driven shape descriptor learned from generative models, fit a sparse regressor as the start-up agent, and leverage diversity-related metrics to drive data acquisition to areas that help designers fulfill design goals. We validate the proposed framework in three hypothetical deployment scenarios, which encompass general use, task-aware use, and tailorable use. Two large-scale shape-only mechanical metamaterial datasets are used as test datasets. The results demonstrate that t-METASET can incrementally grow task-aware datasets. Applicable to general design representations, t-METASET can boost future advancements of not only metamaterials but data-driven design in other domains.
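To make the idea of diversity-driven data acquisition concrete, here is a minimal sketch that picks a spread-out subset of shapes from a descriptor matrix. It uses greedy farthest-point (max-min distance) selection as a simple stand-in; this is not the acquisition rule of t-METASET, whose specific diversity metric is not given in the abstract. The function name `greedy_diverse_subset` and the toy descriptors are hypothetical.

```python
import numpy as np


def greedy_diverse_subset(features, k, seed_index=0):
    """Pick k row indices by greedy farthest-point (max-min distance) selection.

    Hypothetical stand-in for a diversity-driven acquisition step; it is not
    the method described in the abstract.
    """
    features = np.asarray(features, dtype=float)
    n = features.shape[0]
    if not 0 < k <= n:
        raise ValueError("k must satisfy 0 < k <= number of rows")

    selected = [seed_index]
    # Distance from every point to its nearest already-selected point.
    min_dist = np.linalg.norm(features - features[seed_index], axis=1)

    while len(selected) < k:
        # The point farthest from the current selection adds the most spread.
        nxt = int(np.argmax(min_dist))
        selected.append(nxt)
        dist_to_new = np.linalg.norm(features - features[nxt], axis=1)
        min_dist = np.minimum(min_dist, dist_to_new)

    return selected


# Toy 2-D "shape descriptors" (made-up values for illustration only).
descriptors = [[0.0, 0.0], [0.1, 0.0], [10.0, 0.0], [5.0, 0.0]]
print(greedy_diverse_subset(descriptors, k=3))
```

In this toy run the near-duplicate of the seed point (row 1) is skipped in favor of the two distant points, which is the behavior a diversity criterion is meant to encourage when labeling budget is limited.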

Deep Generative Models for Geometric Design Under Uncertainty

Dec 15, 2021

Abstract: Deep generative models have demonstrated effectiveness in learning compact and expressive design representations that significantly improve geometric design optimization. However, these models do not consider the uncertainty introduced by manufacturing or fabrication. Past work that quantifies such uncertainty often makes simplified assumptions on geometric variations, while the "real-world" uncertainty and its impact on design performance are difficult to quantify due to the high dimensionality. To address this issue, we propose a Generative Adversarial Network-based Design under Uncertainty Framework (GAN-DUF), which contains a deep generative model that simultaneously learns a compact representation of nominal (ideal) designs and the conditional distribution of fabricated designs given any nominal design. We demonstrated the framework on two real-world engineering design examples and showed its capability of finding solutions that perform better after fabrication.
