Dian Sheng

BrainSegNet: A Novel Framework for Whole-Brain MRI Parcellation Enhanced by Large Models

Jan 14, 2026

Abstract: Whole-brain parcellation from MRI is a critical yet challenging task because the brain must be subdivided into numerous small, irregularly shaped regions. Traditionally, template-registration methods were used, but recent advances have shifted to deep learning for faster workflows. While large models like the Segment Anything Model (SAM) offer transferable feature representations, they are not tailored for the high precision required in brain parcellation. To address this, we propose BrainSegNet, a novel framework that adapts SAM for accurate whole-brain parcellation into 95 regions. We enhance SAM by integrating U-Net skip connections and specialized modules into its encoder and decoder, enabling fine-grained anatomical precision. Key components include a hybrid encoder combining U-Net skip connections with SAM's transformer blocks, a multi-scale attention decoder with pyramid pooling for structures of varying size, and a boundary refinement module to sharpen edges. Experimental results on the Human Connectome Project (HCP) dataset demonstrate that BrainSegNet outperforms several state-of-the-art methods, achieving higher accuracy and robustness in complex, multi-label parcellation.

Convolutional neural networks with fractional order gradient method

May 14, 2019

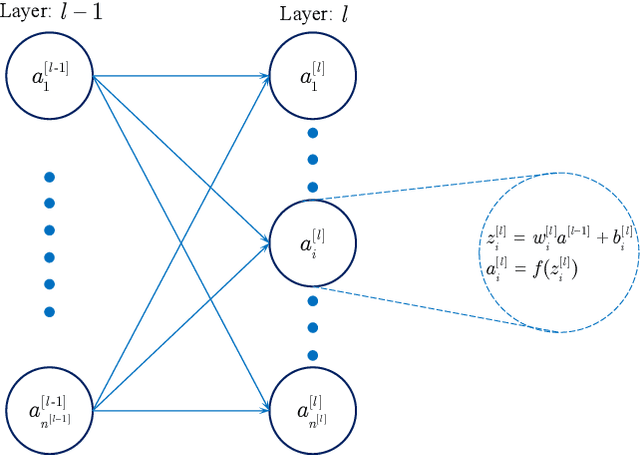

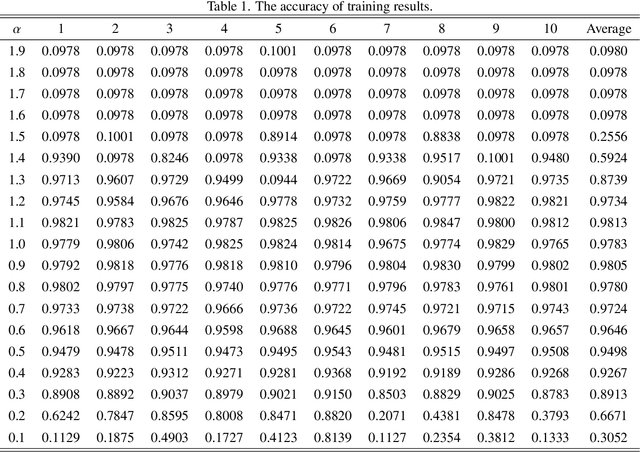

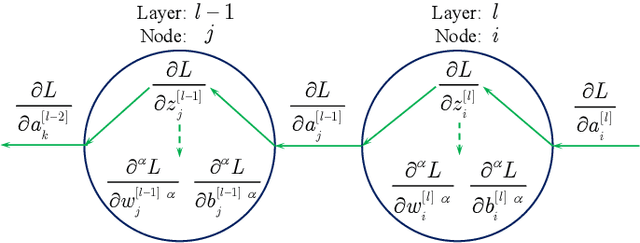

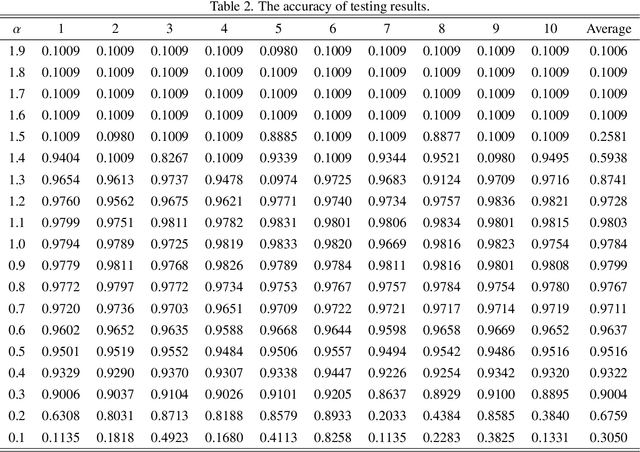

Abstract: This paper proposes a fractional order gradient method for the backward propagation of convolutional neural networks. To overcome the problem that the fractional order gradient method cannot converge to the real extreme point, a simplified fractional order gradient method is designed based on Caputo's definition. The parameters within layers are updated by the designed gradient method, but the propagation between layers still uses integer order gradients; thus the complicated derivatives of composite functions are avoided and the chain rule is preserved. By connecting all layers in series and adding loss functions, the proposed convolutional neural networks can be trained smoothly for various tasks. Finally, some practical experiments are carried out to demonstrate the effectiveness of the resulting neural networks.
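The abstract does not state the update rule itself, so the following is only a minimal sketch of what a simplified Caputo-type fractional order step could look like for a single scalar parameter. The specific form used here (the ordinary first-order gradient scaled by `(|w - w_prev| + delta)^(1 - alpha) / Gamma(2 - alpha)`) is an assumption for illustration, as are the names `fractional_gradient_step` and `minimize_quadratic` and the values of `lr`, `alpha`, and `delta`. In keeping with the abstract, `grad` is the usual integer order gradient; only the parameter update is fractional.

```python
import math


def fractional_gradient_step(w, w_prev, grad, lr=0.1, alpha=0.9, delta=1e-8):
    """One assumed simplified fractional order update for a scalar parameter.

    grad is the ordinary (integer order) gradient at w. The step is scaled
    by the distance to the previous iterate raised to the power (1 - alpha).
    delta is a small hypothetical constant that keeps the step from
    collapsing to zero when w == w_prev.
    """
    scale = (abs(w - w_prev) + delta) ** (1.0 - alpha) / math.gamma(2.0 - alpha)
    return w - lr * grad * scale


def minimize_quadratic(target=3.0, steps=500, alpha=0.9):
    """Toy example: minimize (w - target)^2 with the step above."""
    w_prev, w = 0.0, 0.5  # two arbitrary starting iterates
    for _ in range(steps):
        grad = 2.0 * (w - target)  # integer order gradient of the loss
        w, w_prev = fractional_gradient_step(w, w_prev, grad, alpha=alpha), w
    return w


if __name__ == "__main__":
    print(minimize_quadratic())
```

With `alpha = 1` the scale factor equals 1 and the step reduces to plain gradient descent, which gives a quick sanity check on the sketch. Because the scale factor depends only on the previous iterate and not on a fixed lower terminal, the step vanishes only where the ordinary gradient vanishes, which is one way to read the abstract's remark about converging to the real extreme point.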
