David J. Miller

Improving the Sensitivity of Backdoor Detectors via Class Subspace Orthogonalization

Dec 09, 2025

Abstract: Most post-training backdoor detection methods rely on attacked models exhibiting extreme outlier detection statistics for the target class of an attack, compared to non-target classes. However, these approaches may fail: (1) when some (non-target) classes are easily discriminable from all others, in which case they may naturally achieve extreme detection statistics (e.g., decision confidence); and (2) when the backdoor is subtle, i.e., with its features weak relative to intrinsic class-discriminative features. A key observation is that the backdoor target class has contributions to its detection statistic from both the backdoor trigger and from its intrinsic features, whereas non-target classes only have contributions from their intrinsic features. To achieve more sensitive detectors, we thus propose to suppress intrinsic features while optimizing the detection statistic for a given class. For non-target classes, such suppression will drastically reduce the achievable statistic, whereas for the target class the (significant) contribution from the backdoor trigger remains. In practice, we formulate a constrained optimization problem, leveraging a small set of clean examples from a given class, and optimizing the detection statistic while orthogonalizing with respect to the class's intrinsic features. We dub this plug-and-play approach Class Subspace Orthogonalization (CSO) and assess it against challenging mixed-label and adaptive attacks.
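The orthogonalization idea in this abstract can be illustrated with a small sketch. This is not the paper's constrained optimization; it only shows, under stated assumptions, what "removing the intrinsic-feature component" of a vector means geometrically. The function names (`orthonormal_basis`, `remove_intrinsic_component`) and the use of Gram-Schmidt on a handful of clean feature vectors are hypothetical choices made for illustration.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def orthonormal_basis(vectors, tol=1e-12):
    """Gram-Schmidt: orthonormal basis for the span of `vectors`.

    Assumption: `vectors` stand in for feature vectors of clean examples
    from one class, so their span approximates that class's intrinsic subspace.
    """
    basis = []
    for v in vectors:
        w = list(v)
        for b in basis:
            coeff = dot(w, b)
            w = [wi - coeff * bi for wi, bi in zip(w, b)]
        norm = dot(w, w) ** 0.5
        if norm > tol:  # skip vectors already (numerically) inside the span
            basis.append([wi / norm for wi in w])
    return basis

def remove_intrinsic_component(x, basis):
    """Project `x` onto the orthogonal complement of the span of `basis`."""
    residual = list(x)
    for b in basis:
        coeff = dot(residual, b)
        residual = [ri - coeff * bi for ri, bi in zip(residual, b)]
    return residual

# Toy usage: the class subspace is the plane spanned by the first two axes.
clean_features = [[1.0, 0.0, 0.0], [1.0, 1.0, 0.0], [2.0, 2.0, 0.0]]
basis = orthonormal_basis(clean_features)
residual = remove_intrinsic_component([3.0, 4.0, 5.0], basis)
```

In this toy case only the third coordinate survives the projection. The intuition from the abstract is that, for a non-target class, little of the detection statistic would remain after such suppression, whereas a trigger-driven contribution would.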

Inverting Trojans in LLMs

Sep 19, 2025

Abstract: While effective backdoor detection and inversion schemes have been developed for AIs used, e.g., for images, there are challenges in "porting" these methods to LLMs. First, the LLM input space is discrete, which precludes gradient-based search over this space, central to many backdoor inversion methods. Second, there are ~30,000^k k-tuples to consider, where k is the token length of a putative trigger. Third, for LLMs there is the need to blacklist tokens that have strong marginal associations with the putative target response (class) of an attack, as such tokens give false detection signals. However, good blacklists may not exist for some domains. We propose an LLM trigger inversion approach with three key components: i) discrete search, with putative triggers greedily accreted, starting from a select list of singletons; ii) implicit blacklisting, achieved by evaluating the average cosine similarity, in activation space, between a candidate trigger and a small clean set of samples from the putative target class; iii) detection when a candidate trigger elicits a high rate of misclassification with unusually high decision confidence. Unlike many recent works, we demonstrate that our approach reliably detects and successfully inverts ground-truth backdoor trigger phrases.
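Component ii) above, the average cosine similarity between a candidate trigger and a clean set, can be sketched as follows. This is a minimal illustration, not the paper's implementation: the activation vectors are plain Python lists, the function names are hypothetical, and the rule "reject candidates whose mean similarity exceeds `max_similarity`" (with a default of 0.5) is an assumption about how the similarity might be used for blacklisting.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention chosen here for a zero vector (assumption)
    return dot / (norm_u * norm_v)

def mean_similarity_to_clean(candidate_act, clean_acts):
    """Average cosine similarity between a candidate and each clean activation."""
    sims = [cosine_similarity(candidate_act, c) for c in clean_acts]
    return sum(sims) / len(sims)

def passes_implicit_blacklist(candidate_act, clean_acts, max_similarity=0.5):
    """Keep a candidate only if it does not resemble clean target-class samples.

    Assumption: a high mean similarity suggests the candidate is naturally
    associated with the target class, so it is treated as blacklisted.
    """
    return mean_similarity_to_clean(candidate_act, clean_acts) <= max_similarity

# Toy activations for two clean target-class samples.
clean = [[1.0, 0.0], [1.0, 0.1]]
keeps_orthogonal = passes_implicit_blacklist([0.0, 1.0], clean)
keeps_aligned = passes_implicit_blacklist([1.0, 0.0], clean)
```

Here the candidate orthogonal to the clean activations is kept and the aligned one is rejected, which mirrors the stated goal of discarding tokens with strong marginal associations with the target class.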

On Trojans in Refined Language Models

Jun 12, 2024

Abstract: A Trojan in a language model can be inserted when the model is refined for a particular application such as determining the sentiment of product reviews. In this paper, we clarify and empirically explore variations of the data-poisoning threat model. We then empirically assess two simple defenses, each for a different defense scenario. Finally, we provide a brief survey of related attacks and defenses.

Universal Post-Training Reverse-Engineering Defense Against Backdoors in Deep Neural Networks

Feb 03, 2024

Abstract: A variety of defenses have been proposed against backdoor attacks on deep neural network (DNN) classifiers. Universal methods seek to reliably detect and/or mitigate backdoors irrespective of the incorporation mechanism used by the attacker, while reverse-engineering methods often explicitly assume one. In this paper, we describe a new detector that: relies on internal feature maps of the defended DNN to detect and reverse-engineer the backdoor and identify its target class; can operate post-training (without access to the training dataset); is highly effective for various incorporation mechanisms (i.e., is universal); and has low computational overhead and so is scalable. Our detection approach is evaluated for different attacks on a benchmark CIFAR-10 image classifier.

Post-Training Overfitting Mitigation in DNN Classifiers

Sep 28, 2023

Abstract: Well-known (non-malicious) sources of overfitting in deep neural net (DNN) classifiers include: i) large class imbalances; ii) insufficient training-set diversity; and iii) overtraining. In recent work, it was shown that backdoor data-poisoning also induces overfitting, with unusually large classification margins to the attacker's target class, mediated particularly by (unbounded) ReLU activations that allow large signals to propagate in the DNN. Thus, an effective post-training (with no knowledge of the training set or training process) mitigation approach against backdoors was proposed, leveraging a small clean dataset, based on bounding neural activations. Improving upon that work, we threshold activations specifically to limit maximum margins (MMs), which yields performance gains in backdoor mitigation. We also provide some analytical support for this mitigation approach. Most importantly, we show that post-training MM-based regularization substantially mitigates non-malicious overfitting due to class imbalances and overtraining. Thus, unlike adversarial training, which provides some resilience against attacks but which harms clean (attack-free) generalization, we demonstrate an approach originating from adversarial learning that helps clean generalization accuracy. Experiments on CIFAR-10 and CIFAR-100, in comparison with peer methods, demonstrate strong performance of our methods.

Backdoor Mitigation by Correcting the Distribution of Neural Activations

Aug 18, 2023

Abstract: Backdoor (Trojan) attacks are an important type of adversarial exploit against deep neural networks (DNNs), wherein a test instance is (mis)classified to the attacker's target class whenever the attacker's backdoor trigger is present. In this paper, we reveal and analyze an important property of backdoor attacks: a successful attack causes an alteration in the distribution of internal layer activations for backdoor-trigger instances, compared to that for clean instances. Even more importantly, we find that instances with the backdoor trigger will be correctly classified to their original source classes if this distribution alteration is corrected. Based on our observations, we propose an efficient and effective method that achieves post-training backdoor mitigation by correcting the distribution alteration using reverse-engineered triggers. Notably, our method does not change any trainable parameters of the DNN, but achieves generally better mitigation performance than existing methods that do require intensive DNN parameter tuning. It also efficiently detects test instances with the trigger, which may help to catch adversarial entities in the act of exploiting the backdoor.

Improved Activation Clipping for Universal Backdoor Mitigation and Test-Time Detection

Aug 08, 2023

Abstract: Deep neural networks are vulnerable to backdoor attacks (Trojans), where an attacker poisons the training set with backdoor triggers so that the neural network learns to classify test-time triggers to the attacker's designated target class. Recent work shows that backdoor poisoning induces over-fitting (abnormally large activations) in the attacked model, which motivates a general, post-training clipping method for backdoor mitigation, i.e., with bounds on internal-layer activations learned using a small set of clean samples. We devise a new such approach, choosing the activation bounds to explicitly limit classification margins. This method gives superior performance against peer methods for CIFAR-10 image classification. We also show that this method is robust against adaptive attacks and X2X attacks, and across different datasets. Finally, we demonstrate a method extension for test-time detection and correction based on the output differences between the original and activation-bounded networks. The code for our method is available online.
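Activation clipping and the test-time detection extension can be sketched on a toy network. This is an illustration under several assumptions, not the released code: the network is a tiny two-layer model with hand-set weights, the activation bounds are fixed by hand (the paper learns them from clean samples to limit classification margins), and "output difference" is simplified here to a disagreement between the predicted classes of the original and bounded networks. All names are hypothetical.

```python
def clipped_relu(x, upper):
    """ReLU whose output is capped at `upper`."""
    return min(max(x, 0.0), upper)

def forward(x, weights_hidden, weights_out, bounds=None):
    """Two-layer network; `bounds[j]` caps hidden unit j (None = unbounded)."""
    hidden = []
    for j, w in enumerate(weights_hidden):
        pre_activation = sum(wi * xi for wi, xi in zip(w, x))
        upper = float("inf") if bounds is None else bounds[j]
        hidden.append(clipped_relu(pre_activation, upper))
    return [sum(wi * hi for wi, hi in zip(w, hidden)) for w in weights_out]

def argmax(values):
    """Index of the largest entry (first index on ties)."""
    return max(range(len(values)), key=values.__getitem__)

def flags_trigger(x, weights_hidden, weights_out, bounds):
    """Flag `x` if bounding the activations changes the predicted class."""
    original = argmax(forward(x, weights_hidden, weights_out))
    bounded = argmax(forward(x, weights_hidden, weights_out, bounds))
    return original != bounded

# Toy setup: identity weights, so each logit equals one hidden activation.
W1 = [[1.0, 0.0], [0.0, 1.0]]
W2 = [[1.0, 0.0], [0.0, 1.0]]
bounds = [2.0, 1.0]            # hand-set caps (assumption)

clean_input = [1.0, 0.5]       # activations stay below the caps
trigger_input = [1.5, 10.0]    # abnormally large activation on unit 1

clean_flag = flags_trigger(clean_input, W1, W2, bounds)
trigger_flag = flags_trigger(trigger_input, W1, W2, bounds)
```

The clean input is unaffected by the caps, while the input with an abnormally large activation changes class once the cap is applied. In this toy case the bounded network's prediction also serves as the corrected decision.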

Universal Post-Training Backdoor Detection

May 13, 2022

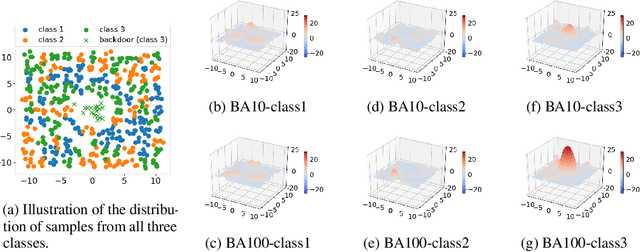

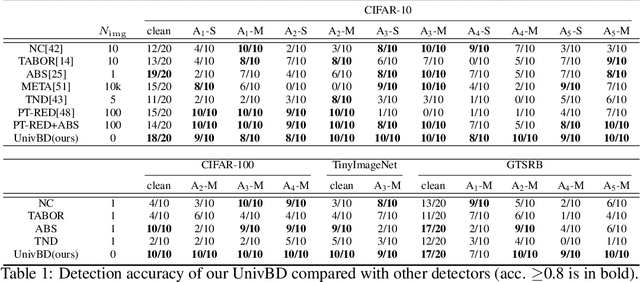

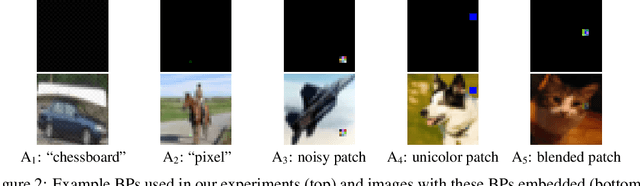

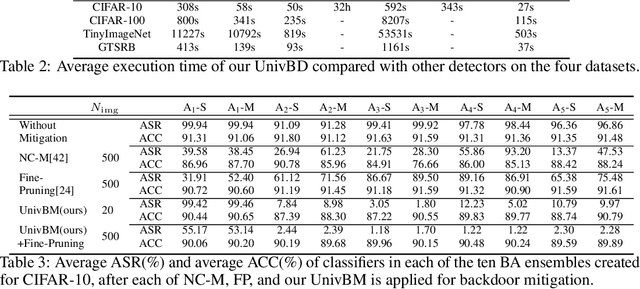

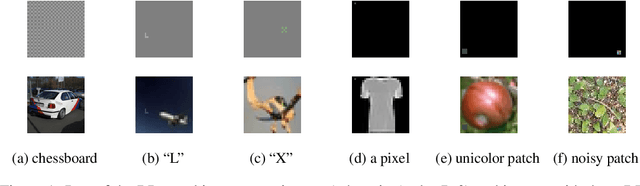

Abstract: A backdoor attack (BA) is an important type of adversarial attack against deep neural network classifiers, wherein test samples from one or more source classes will be (mis)classified to the attacker's target class when a backdoor pattern (BP) is embedded. In this paper, we focus on the post-training backdoor defense scenario commonly considered in the literature, where the defender aims to detect whether a trained classifier was backdoor attacked, without any access to the training set. To the best of our knowledge, existing post-training backdoor defenses are all designed for BAs with presumed BP types, where each BP type has a specific embedding function. They may fail when the actual BP type used by the attacker (unknown to the defender) is different from the BP type assumed by the defender. In contrast, we propose a universal post-training defense that detects BAs with arbitrary types of BPs, without making any assumptions about the BP type. Our detector leverages the influence of the BA, independently of the BP type, on the landscape of the classifier's outputs prior to the softmax layer. For each class, a maximum margin statistic is estimated using a set of random vectors; detection inference is then performed by applying an unsupervised anomaly detector to these statistics. Thus, our detector is also an advance relative to most existing post-training methods by not needing any legitimate clean samples, and can efficiently detect BAs with arbitrary numbers of source classes. These advantages of our detector over several state-of-the-art methods are demonstrated on four datasets, for three different types of BPs, and for a variety of attack configurations. Finally, we propose a novel, general approach for BA mitigation once a detection is made.
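The two-stage structure described here (a per-class maximum margin statistic, followed by an unsupervised anomaly detector) can be sketched as below. This is only a sketch under assumptions: the pre-softmax outputs are supplied as plain lists rather than obtained by optimizing from random vectors as in the paper, and the anomaly detector is a median-absolute-deviation (MAD) rule with a threshold of 3.5, which is a common generic choice and not necessarily the detector the paper uses. The function names are hypothetical.

```python
import statistics

def margin(logits, cls):
    """Logit of `cls` minus the largest logit among the other classes."""
    others = [z for i, z in enumerate(logits) if i != cls]
    return logits[cls] - max(others)

def max_margin_statistics(logit_rows, num_classes):
    """For each class, the largest margin seen over all logit vectors."""
    return [max(margin(row, c) for row in logit_rows)
            for c in range(num_classes)]

def mad_anomaly_scores(stats):
    """One-sided robust z-scores based on the median absolute deviation."""
    med = statistics.median(stats)
    mad = statistics.median([abs(s - med) for s in stats])
    if mad == 0.0:
        return [0.0 for _ in stats]  # no spread: nothing stands out
    # 1.4826 makes the MAD comparable to a standard deviation for Gaussian data.
    return [(s - med) / (1.4826 * mad) for s in stats]

def detect_target_classes(stats, threshold=3.5):
    """Classes whose statistic is an unusually large outlier (assumed rule)."""
    return [c for c, score in enumerate(mad_anomaly_scores(stats))
            if score > threshold]

# Toy per-class maximum margins: class 4 is far larger than the rest.
toy_stats = [1.0, 1.2, 0.9, 1.1, 9.0]
suspects = detect_target_classes(toy_stats)
```

With these toy statistics, only class 4 is flagged. A robust, median-based score is used in the sketch so that the single extreme class does not inflate the scale estimate it is compared against.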

Post-Training Detection of Backdoor Attacks for Two-Class and Multi-Attack Scenarios

Jan 20, 2022

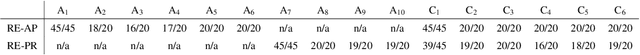

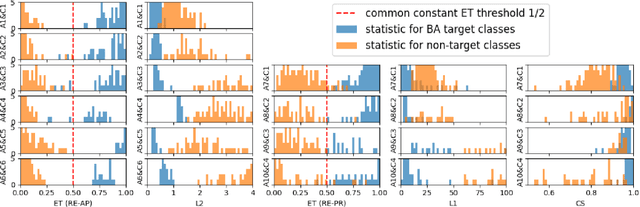

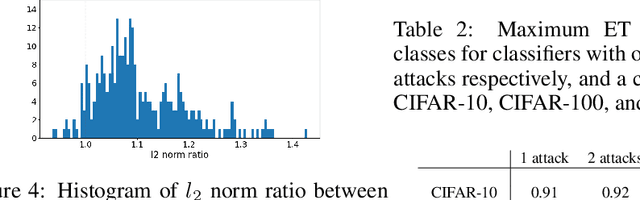

Abstract: Backdoor attacks (BAs) are an emerging threat to deep neural network classifiers. A victim classifier will predict to an attacker-desired target class whenever a test sample is embedded with the same backdoor pattern (BP) that was used to poison the classifier's training set. Detecting whether a classifier is backdoor attacked is not easy in practice, especially when the defender is, e.g., a downstream user without access to the classifier's training set. This challenge is addressed here by a reverse-engineering defense (RED), which has been shown to yield state-of-the-art performance in several domains. However, existing REDs are not applicable when there are only *two classes* or when *multiple attacks* are present. These scenarios are first studied in the current paper, under the practical constraints that the defender neither has access to the classifier's training set nor to supervision from clean reference classifiers trained for the same domain. We propose a detection framework based on BP reverse-engineering and a novel *expected transferability* (ET) statistic. We show that our ET statistic is effective *using the same detection threshold*, irrespective of the classification domain, the attack configuration, and the BP reverse-engineering algorithm that is used. The excellent performance of our method is demonstrated on six benchmark datasets. Notably, our detection framework is also applicable to multi-class scenarios with multiple attacks.

Test-Time Detection of Backdoor Triggers for Poisoned Deep Neural Networks

Dec 06, 2021

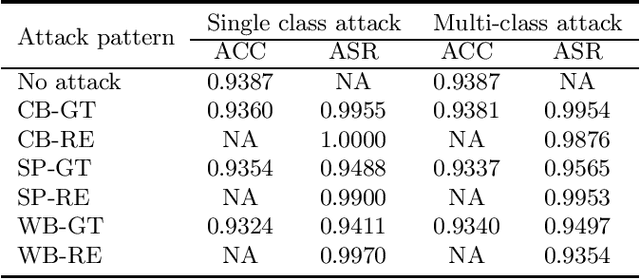

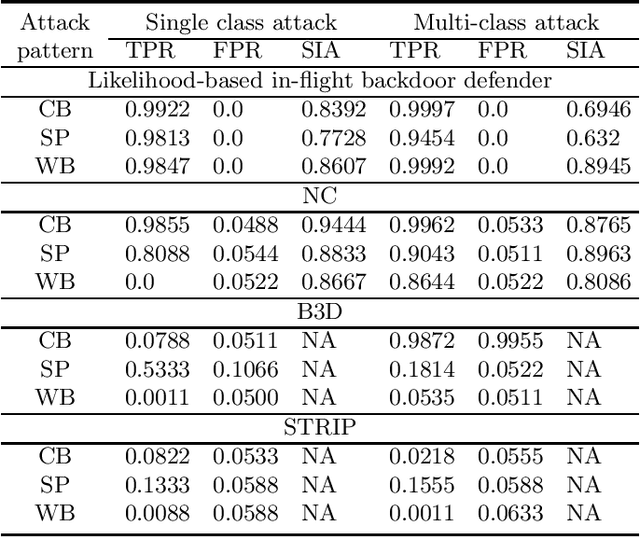

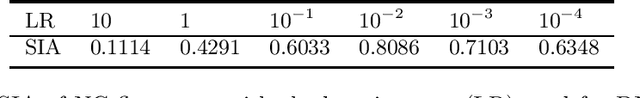

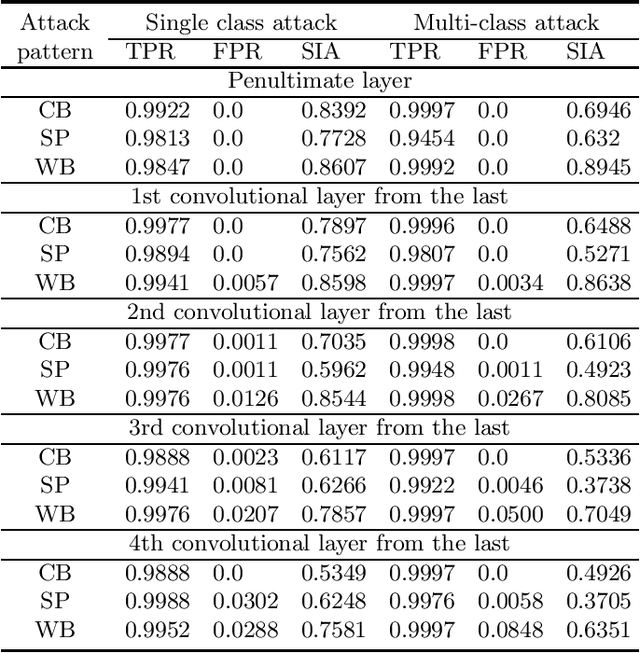

Abstract: Backdoor (Trojan) attacks are emerging threats against deep neural networks (DNNs). An attacked DNN will predict to an attacker-desired target class whenever a test sample from any source class is embedded with a backdoor pattern, while still correctly classifying clean (attack-free) test samples. Existing backdoor defenses have shown success in detecting whether a DNN is attacked and in reverse-engineering the backdoor pattern in a "post-training" regime: the defender has access to the DNN to be inspected and a small, clean dataset collected independently, but has no access to the (possibly poisoned) training set of the DNN. However, these defenses neither catch culprits in the act of triggering the backdoor mapping, nor mitigate the backdoor attack at test-time. In this paper, we propose an "in-flight" defense against backdoor attacks on image classification that 1) detects use of a backdoor trigger at test-time; and 2) infers the class of origin (source class) for a detected trigger example. The effectiveness of our defense is demonstrated experimentally against different strong backdoor attacks.
