Daniele Perrone

Event-Based Eye Tracking. 2025 Event-based Vision Workshop

Apr 25, 2025

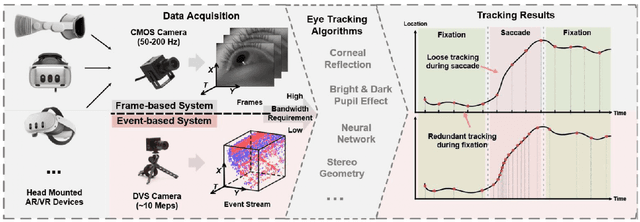

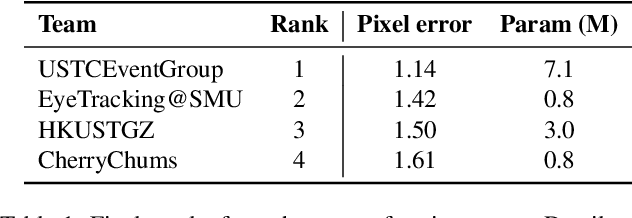

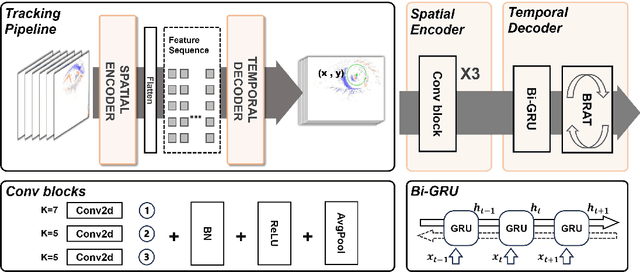

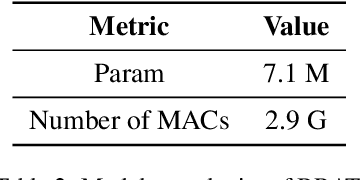

Abstract: This survey reviews the 2025 Event-Based Eye Tracking Challenge, organized as part of the 2025 CVPR Event-based Vision Workshop. The challenge focuses on predicting the pupil center from eye movements recorded with an event camera. We review and summarize the innovative methods of the top-ranked teams to advance future event-based eye tracking research. For each method, accuracy, model size, and number of operations are reported. We also discuss event-based eye tracking from the perspective of hardware design.

Motion Deblurring for Plenoptic Images

Feb 12, 2016

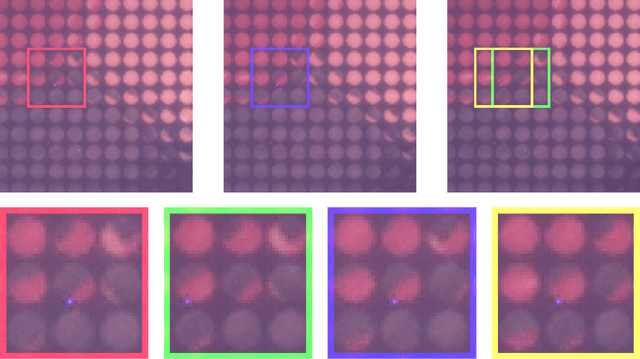

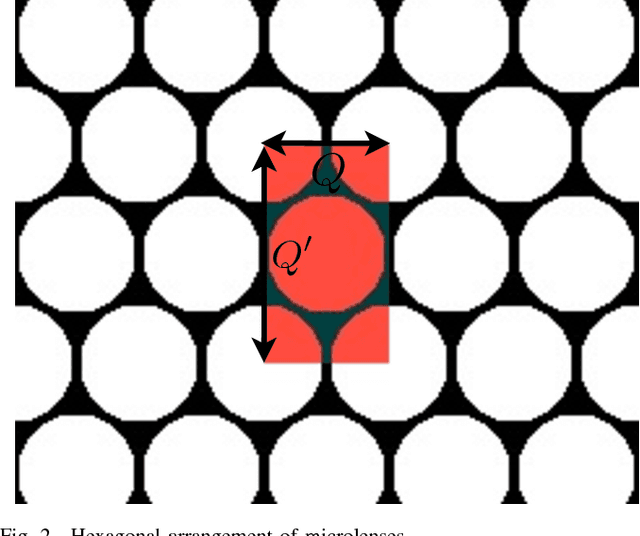

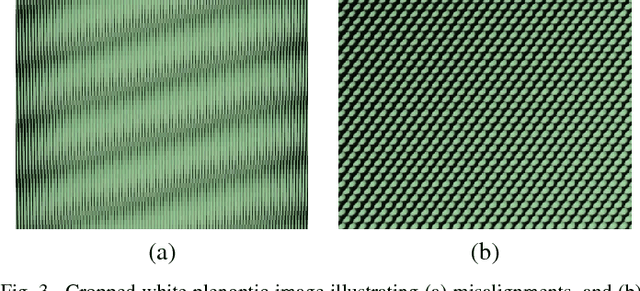

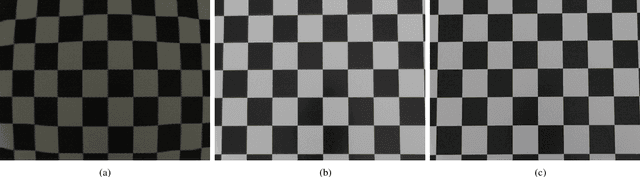

Abstract: We address for the first time the issue of motion blur in light field images captured with plenoptic cameras. We propose a solution to the estimation of a sharp, high-resolution scene radiance given a blurry light field image when the motion blur point spread function is unknown, i.e., the so-called blind deconvolution problem. In a plenoptic camera, the spatial sampling in each view is not only decimated but also defocused. Consequently, current blind deconvolution approaches for traditional cameras are not applicable. Due to the complexity of the imaging model, we first investigate the case of uniform (shift-invariant) blur of Lambertian objects, i.e., when objects are sufficiently far away from the camera to be approximately invariant to depth changes and their reflectance does not vary with the viewing direction. We introduce a highly parallelizable model for light field motion blur that is computationally and memory efficient. We then adapt a regularized blind deconvolution approach to our model and demonstrate its performance on both synthetic and real light field data. Our method handles practical issues in real cameras, such as radial distortion correction and alignment, within an energy minimization framework.

A Clearer Picture of Blind Deconvolution

Nov 30, 2014

Abstract: Blind deconvolution is the problem of recovering a sharp image and a blur kernel from a noisy blurry image. Recently, there has been significant effort toward understanding the basic mechanisms that solve blind deconvolution. While this effort resulted in the deployment of effective algorithms, the theoretical findings generated contrasting views on why these approaches worked. On the one hand, one could observe experimentally that alternating energy minimization algorithms converge to the desired solution. On the other hand, it has been shown that such alternating minimization algorithms should fail to converge and that one should instead use a so-called Variational Bayes approach. To clarify this conundrum, recent work showed that a good image and blur prior is instead what makes a blind deconvolution algorithm work. Unfortunately, this analysis did not apply to algorithms based on total variation regularization. In this manuscript, we provide both analysis and experiments to get a clearer picture of blind deconvolution. Our analysis reveals the very reason why an algorithm based on total variation works. We also introduce an implementation of this algorithm and show that, in spite of its extreme simplicity, it is very robust and achieves a performance comparable to the state of the art.
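The tension described in this abstract can be illustrated with a small numerical sketch. It is not the paper's algorithm or analysis. It only evaluates a joint energy of the form data term plus total variation at two candidate solutions: the true sharp image with the true kernel, and the "no-blur" pair (the blurry image with a delta kernel). The function names, the periodic boundary handling, the anisotropic form of total variation, the noise-free observation, and the weight `lam` are all assumptions made for illustration.

```python
import numpy as np

def circular_blur(image, kernel):
    """Periodic convolution of `image` with a small `kernel`, computed via the FFT."""
    padded = np.zeros_like(image, dtype=float)
    kh, kw = kernel.shape
    padded[:kh, :kw] = kernel
    # Move the kernel center to the origin so the blur does not shift the image.
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(padded)))

def total_variation(image):
    """Anisotropic total variation with periodic forward differences."""
    dx = np.roll(image, -1, axis=1) - image
    dy = np.roll(image, -1, axis=0) - image
    return float(np.abs(dx).sum() + np.abs(dy).sum())

def energy(image, kernel, observed, lam):
    """Squared data-fidelity term plus lam times the total variation of the image."""
    residual = circular_blur(image, kernel) - observed
    return float((residual ** 2).sum() + lam * total_variation(image))

rng = np.random.default_rng(0)
sharp = (rng.random((32, 32)) > 0.5).astype(float)  # synthetic binary texture
true_kernel = np.ones((1, 5)) / 5.0                 # horizontal box blur, sums to 1
delta = np.ones((1, 1))                             # identity ("no-blur") kernel
blurry = circular_blur(sharp, true_kernel)          # noise-free observation (assumption)

lam = 0.01  # hypothetical regularization weight
e_true = energy(sharp, true_kernel, blurry, lam)
e_noblur = energy(blurry, delta, blurry, lam)
print("energy at (sharp, true kernel):", e_true)
print("energy at (blurry, delta):     ", e_noblur)
```

Both candidates fit the observation essentially exactly, so the comparison comes down to the total variation term. Blurring with a nonnegative kernel that sums to one cannot increase this periodic total variation, so in this setup the no-blur pair has the lower energy. That is why naive joint minimization looks like it should fail, and why the behavior of practical alternating schemes calls for the closer analysis the abstract announces.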
