Cong Kong

Adversarial Attacks on Medical Hyperspectral Imaging Exploiting Spectral-Spatial Dependencies and Multiscale Features

Jan 11, 2026

Abstract: Medical hyperspectral imaging (HSI) enables accurate disease diagnosis by capturing rich spectral-spatial tissue information, but recent advances in deep learning have exposed its vulnerability to adversarial attacks. In this work, we identify two fundamental causes of this fragility: the reliance on local pixel dependencies for preserving tissue structure and the dependence on multiscale spectral-spatial representations for hierarchical feature encoding. Building on these insights, we propose a targeted adversarial attack framework for medical HSI, consisting of a Local Pixel Dependency Attack that exploits spatial correlations among neighboring pixels, and a Multiscale Information Attack that perturbs features across hierarchical spectral-spatial scales. Experiments on the Brain and MDC datasets demonstrate that our attacks significantly degrade classification performance, especially in tumor regions, while remaining visually imperceptible. Compared with existing methods, our approach reveals the unique vulnerabilities of medical HSI models and underscores the need for robust, structure-aware defenses in clinical applications.

Protecting Copyright of Medical Pre-trained Language Models: Training-Free Backdoor Watermarking

Sep 14, 2024

Abstract: Pre-training language models followed by fine-tuning on specific tasks is standard in NLP, but traditional models often underperform when applied to the medical domain, leading to the development of specialized medical pre-trained language models (Med-PLMs). These models are valuable assets but are vulnerable to misuse and theft, requiring copyright protection. However, no existing watermarking methods are tailored for Med-PLMs, and adapting general PLM watermarking techniques to the medical domain faces challenges such as task incompatibility, loss of fidelity, and inefficiency. To address these issues, we propose the first training-free backdoor watermarking method for Med-PLMs. Our method uses rare special symbols as trigger words, which do not impact downstream task performance, and embeds watermarks by replacing the triggers' original embeddings with those of specific medical terms in the Med-PLMs' word embedding layer. After the watermarked Med-PLMs are fine-tuned on various medical downstream tasks, the final models (FMs) respond to the trigger words in the same way they would to the corresponding medical terms. This property can be used to extract the watermark. Experiments demonstrate that our method achieves high fidelity while effectively extracting watermarks across various medical downstream tasks. Additionally, our method is robust against various attacks and significantly improves the efficiency of watermark embedding, reducing the embedding time from 10 hours to 10 seconds.
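The embedding-replacement step described in the abstract can be illustrated with a toy sketch. This is not the authors' implementation: the vocabulary, the trigger symbol `§`, the vectors, and both function names are hypothetical, and the equality check below is a simplification (the abstract describes extraction through the fine-tuned model's responses to triggers, not by comparing embedding rows).

```python
# Toy illustration only: vocabulary, trigger symbol, and vectors are hypothetical.

def embed_watermark(embeddings, vocab, trigger_to_term):
    """Return a copy of the embedding table in which each trigger row
    is overwritten with the row of its paired medical term.
    No gradient steps are involved, which is what "training-free" means here."""
    watermarked = [row[:] for row in embeddings]
    for trigger, term in trigger_to_term.items():
        watermarked[vocab[trigger]] = embeddings[vocab[term]][:]
    return watermarked


def triggers_match_terms(embeddings, vocab, trigger_to_term):
    """Simplified check: does every trigger share its paired term's embedding?"""
    return all(
        embeddings[vocab[trigger]] == embeddings[vocab[term]]
        for trigger, term in trigger_to_term.items()
    )


# Token -> row index in the (toy) word embedding table.
vocab = {"patient": 0, "diabetes": 1, "§": 2}
embeddings = [[0.1, 0.2], [0.7, -0.3], [0.0, 0.9]]

# Pair the rare symbol with a medical term (an assumed pairing).
pairs = {"§": "diabetes"}
watermarked = embed_watermark(embeddings, vocab, pairs)
```

Because the step is a row copy rather than a training run, its cost scales with the number of trigger pairs, which is consistent with the reported drop in embedding time.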

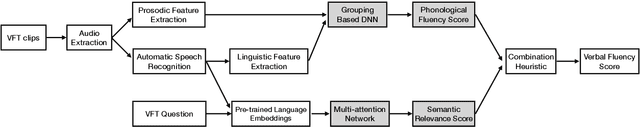

Dolphin: A Verbal Fluency Evaluation System for Elementary Education

Aug 04, 2019

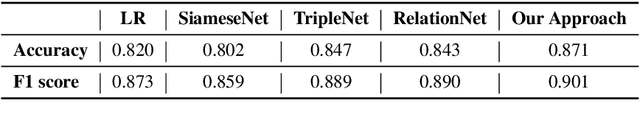

Abstract: Verbal fluency is critically important for children's growth and personal development \cite{cohen1999verbal,berninger1992gender}. Due to limited and imbalanced educational resources in China, elementary students rarely have the chance to improve their oral language skills in class. Verbal fluency tasks (VFTs) were invented to let students practice their oral language skills after school. VFTs are simple but concrete math-related questions that ask students not only to report answers but also to speak out their entire thinking process. Despite the great success of VFTs, they bring a heavy grading burden to elementary teachers. To alleviate this problem, we develop Dolphin, a verbal fluency evaluation system for Chinese elementary education. Dolphin automatically evaluates both the phonological fluency and the semantic relevance of students' answers to their VFT assignments. We conduct a wide range of offline and online experiments to demonstrate the effectiveness of Dolphin. In our offline experiments, we show that Dolphin improves both phonological fluency and semantic relevance evaluation performance compared to state-of-the-art baselines on real-world educational data sets. In our online A/B experiments, we test Dolphin with 183 teachers from 2 major cities (Hangzhou and Xi'an) in China for 10 weeks, and the results show that VFT assignment grading coverage is improved by 22%. To encourage reproducibility, we make our code public in an anonymous git repo: https://tinyurl.com/y52tzcw7.
