Christoph Adami

Michigan State University

Promoting Cooperation in the Public Goods Game using Artificial Intelligent Agents

Dec 06, 2024

Abstract:The tragedy of the commons illustrates a fundamental social dilemma where individual rational actions lead to collectively undesired outcomes, threatening the sustainability of shared resources. Strategies to escape this dilemma, however, are in short supply. In this study, we explore how artificial intelligence (AI) agents can be leveraged to enhance cooperation in public goods games, moving beyond traditional regulatory approaches to using AI as facilitators of cooperation. We investigate three scenarios: (1) Mandatory Cooperation Policy for AI Agents, where AI agents are institutionally mandated always to cooperate; (2) Player-Controlled Agent Cooperation Policy, where players evolve control over AI agents' likelihood to cooperate; and (3) Agents Mimic Players, where AI agents copy the behavior of players. Using a computational evolutionary model with a population of agents playing public goods games, we find that only when AI agents mimic player behavior does the critical synergy threshold for cooperation decrease, effectively resolving the dilemma. This suggests that we can leverage AI to promote collective well-being in societal dilemmas by designing AI agents to mimic human players.
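
To make the "critical synergy threshold" concrete, here is a minimal sketch of one round of the textbook public goods game, not the evolutionary model used in the paper. It assumes each cooperator pays a cost of 1 into a common pool, the pool is multiplied by a synergy factor, and the result is split equally among all group members. The function names, the group of five, and the numeric values are illustrative assumptions.

```python
def public_goods_payoffs(contributions, synergy):
    """Payoffs for one round; contributions holds 1 (cooperate) or 0 (defect)."""
    group_size = len(contributions)
    # Every player receives the same share of the multiplied pool.
    share = synergy * sum(contributions) / group_size
    # Cooperators additionally pay their contribution of 1.
    return [share - c for c in contributions]


def gain_from_defecting(group_size, synergy):
    """Payoff change for a single player who switches from cooperating to defecting.

    The player saves the cost of 1 but loses synergy / group_size
    from the smaller pool.
    """
    return 1 - synergy / group_size


group = [1, 1, 1, 0, 0]  # three cooperators, two defectors (assumed example)
payoffs = public_goods_payoffs(group, synergy=3.0)
print(payoffs)
print(gain_from_defecting(group_size=5, synergy=3.0))
print(gain_from_defecting(group_size=5, synergy=6.0))
```

In this textbook version, defecting pays whenever the synergy factor is smaller than the group size, which is the dilemma. The abstract's claim is that mimicking AI agents lower the threshold above which cooperation is favored.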

Detecting Information Relays in Deep Neural Networks

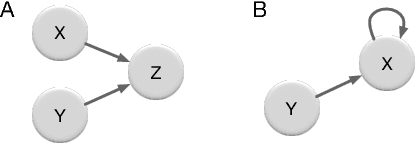

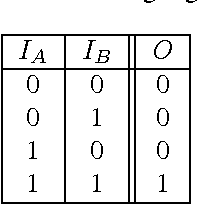

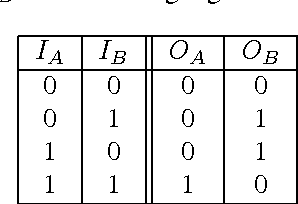

Jan 03, 2023

Abstract:Deep-learning of artificial neural networks (ANNs) is creating highly functional tools that are, unfortunately, as hard to interpret as their natural counterparts. While it is possible to identify functional modules in natural brains using technologies such as fMRI, we do not have at our disposal similarly robust methods for artificial neural networks. Ideally, understanding which parts of an artificial neural network perform what function might help us to address a number of vexing problems in ANN research, such as catastrophic forgetting and overfitting. Furthermore, revealing a network's modularity could improve our trust in them by making these black boxes more transparent. Here we introduce a new information-theoretic concept that proves useful in understanding and analyzing a network's functional modularity: the relay information $I_R$. The relay information measures how much information groups of neurons that participate in a particular function (modules) relay from inputs to outputs. Combined with a greedy search algorithm, relay information can be used to *identify* computational modules in neural networks. We also show that the functionality of modules correlates with the amount of relay information they carry.

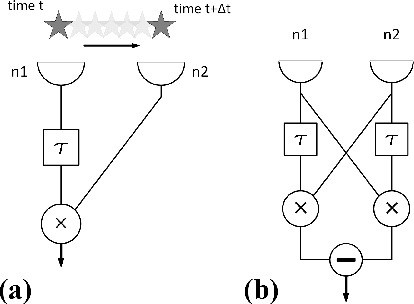

Can Transfer Entropy Infer Causality in Neuronal Circuits for Cognitive Processing?

Jan 22, 2019

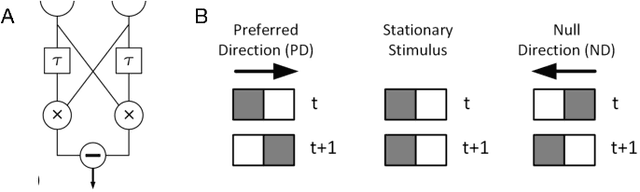

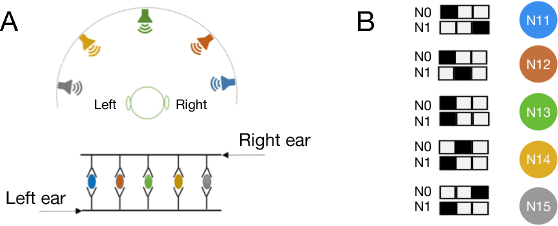

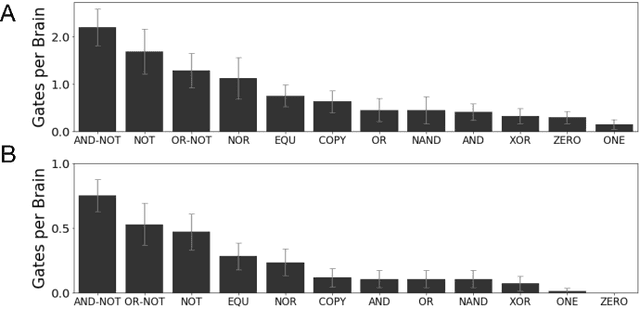

Abstract:Finding the causes of observed effects and establishing causal relationships between events is (and has been) an essential element of science and philosophy. Automated methods that can detect causal relationships would be very welcome, but practical methods that can infer causality are difficult to find, and the subject of ongoing research. While Shannon information only detects correlation, there are several information-theoretic notions of "directed information" that have successfully detected causality in some systems, in particular in the neuroscience community. However, recent work has shown that some directed information measures can sometimes inadequately estimate the extent of causal relations, or even fail to identify existing cause-effect relations between components of systems, especially if neurons contribute in a cryptographic manner to influence the effector neuron. Here, we test how often cryptographic logic emerges in an evolutionary process that generates artificial neural circuits for two fundamental cognitive tasks: motion detection and sound localization. Our results suggest that whether or not transfer entropy measures of causality are misleading depends strongly on the cognitive task considered. These results emphasize the importance of understanding the fundamental logic processes that contribute to cognitive processing, and quantifying their relevance in any given nervous system.
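
The failure mode described in this abstract can be illustrated with a small exact calculation. The sketch below assumes XOR as the example of "cryptographic" logic: two independent, uniformly random binary neurons X and Y drive an effector Z with Z(t+1) = X(t) XOR Y(t). Transfer entropy from X to Z is computed as the conditional mutual information I(Z(t+1); X(t) | Z(t)) over the enumerated state space. The function name and the toy circuit are illustrative assumptions, not the circuits evolved in the paper.

```python
from collections import Counter
from itertools import product
from math import log2


def conditional_mutual_information(triples):
    """I(A; B | C) in bits, for a list of equally likely (a, b, c) outcomes."""
    n = len(triples)
    n_abc = Counter(triples)
    n_ac = Counter((a, c) for a, _, c in triples)
    n_bc = Counter((b, c) for _, b, c in triples)
    n_c = Counter(c for _, _, c in triples)
    total = 0.0
    for (a, b, c), count in n_abc.items():
        # p(a,b,c) * log2[ p(a,b,c) p(c) / (p(a,c) p(b,c)) ], written with counts.
        ratio = count * n_c[c] / (n_ac[(a, c)] * n_bc[(b, c)])
        total += (count / n) * log2(ratio)
    return total


# Enumerate all equally likely states (z_t, x_t, y_t) of the toy circuit.
states = list(product([0, 1], repeat=3))

# Transfer entropy from X alone to Z: I(Z_{t+1}; X_t | Z_t).
te_x_to_z = conditional_mutual_information(
    [(x ^ y, x, z) for z, x, y in states]
)

# Transfer entropy from the pair (X, Y) to Z: I(Z_{t+1}; X_t, Y_t | Z_t).
te_xy_to_z = conditional_mutual_information(
    [(x ^ y, (x, y), z) for z, x, y in states]
)

print(te_x_to_z)   # 0.0
print(te_xy_to_z)  # 1.0
```

Although X causally influences Z in this toy circuit, the pairwise transfer entropy from X to Z is zero. Only the joint source (X, Y) reveals the full bit of influence.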

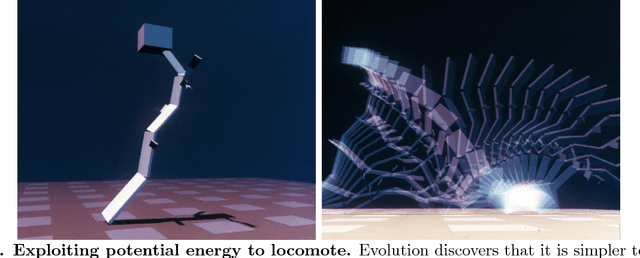

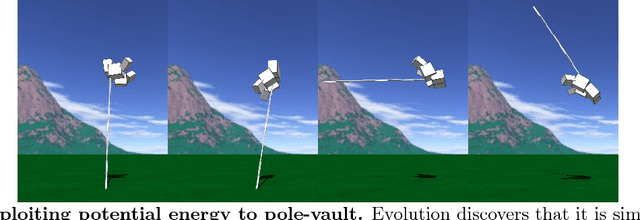

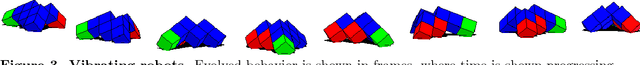

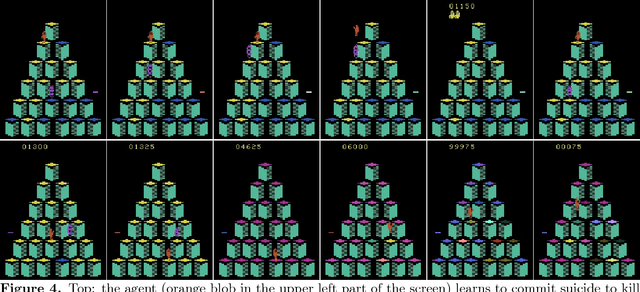

The Surprising Creativity of Digital Evolution: A Collection of Anecdotes from the Evolutionary Computation and Artificial Life Research Communities

Aug 14, 2018

Abstract:Biological evolution provides a creative fount of complex and subtle adaptations, often surprising the scientists who discover them. However, because evolution is an algorithmic process that transcends the substrate in which it occurs, evolution's creativity is not limited to nature. Indeed, many researchers in the field of digital evolution have observed their evolving algorithms and organisms subverting their intentions, exposing unrecognized bugs in their code, producing unexpected adaptations, or exhibiting outcomes uncannily convergent with ones in nature. Such stories routinely reveal creativity by evolution in these digital worlds, but they rarely fit into the standard scientific narrative. Instead they are often treated as mere obstacles to be overcome, rather than results that warrant study in their own right. The stories themselves are traded among researchers through oral tradition, but that mode of information transmission is inefficient and prone to error and outright loss. Moreover, the fact that these stories tend to be shared only among practitioners means that many natural scientists do not realize how interesting and lifelike digital organisms are and how natural their evolution can be. To our knowledge, no collection of such anecdotes has been published before. This paper is the crowd-sourced product of researchers in the fields of artificial life and evolutionary computation who have provided first-hand accounts of such cases. It thus serves as a written, fact-checked collection of scientifically important and even entertaining stories. In doing so we also present here substantial evidence that the existence and importance of evolutionary surprises extends beyond the natural world, and may indeed be a universal property of all complex evolving systems.

Evolution leads to a diversity of motion-detection neuronal circuits

Jun 05, 2018

Abstract:A central goal of evolutionary biology is to explain the origins and distribution of diversity across life. Beyond species or genetic diversity, we also observe diversity in the circuits (genetic or otherwise) underlying complex functional traits. However, while the theory behind the origins and maintenance of genetic and species diversity has been studied for decades, theory concerning the origin of diverse functional circuits is still in its infancy. It is not known how many different circuit structures can implement any given function, which evolutionary factors lead to different circuits, and whether the evolution of a particular circuit was due to adaptive or non-adaptive processes. Here, we use digital experimental evolution to study the diversity of neural circuits that encode motion detection in digital (artificial) brains. We find that evolution leads to an enormous diversity of potential neural architectures encoding motion detection circuits, even for circuits encoding the exact same function. Evolved circuits vary in both redundancy and complexity (as previously found in genetic circuits), suggesting that similar evolutionary principles underlie circuit formation using any substrate. We also show that a simple (designed) motion detection circuit that is optimally adapted gains in complexity when evolved further, and that selection for mutational robustness led to this gain in complexity.

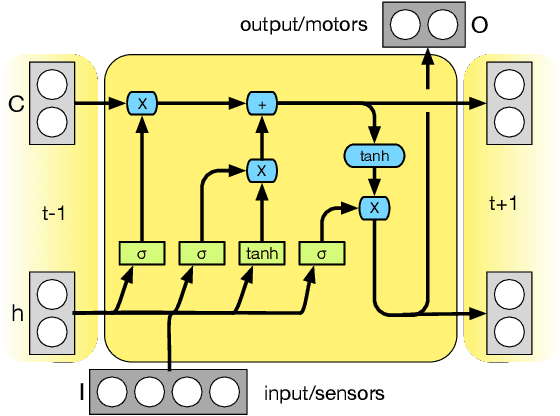

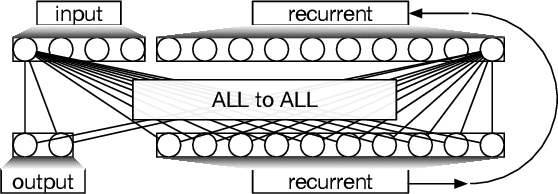

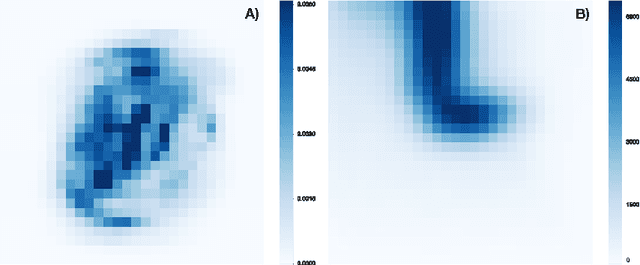

The structure of evolved representations across different substrates for artificial intelligence

Apr 05, 2018

Abstract:Artificial neural networks (ANNs), while exceptionally useful for classification, are vulnerable to misdirection. Small amounts of noise can significantly affect their ability to correctly complete a task. Instead of generalizing concepts, ANNs seem to focus on surface statistical regularities in a given task. Here we compare how recurrent artificial neural networks, long short-term memory units, and Markov Brains sense and remember their environments. We show that information in Markov Brains is localized and sparsely distributed, while the other neural network substrates "smear" information about the environment across all nodes, which makes them vulnerable to noise.

The mind as a computational system

Dec 01, 2017

Abstract:The present document is an excerpt of an essay that I wrote as part of my application material to graduate school in Computer Science (with a focus on Artificial Intelligence), in 1986. I was not invited by any of the schools that received it, so I became a theoretical physicist instead. The essay's full title was "Some Topics in Philosophy and Computer Science". I am making this text (unchanged from 1985, preserving the typesetting as much as possible) available now in memory of Jerry Fodor, whose writings had influenced me significantly at the time (even though I did not always agree).

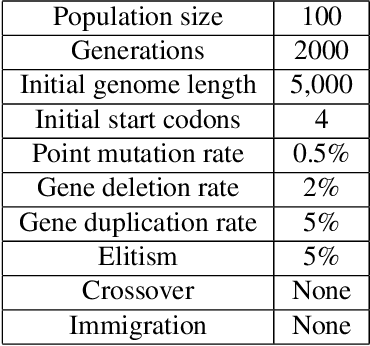

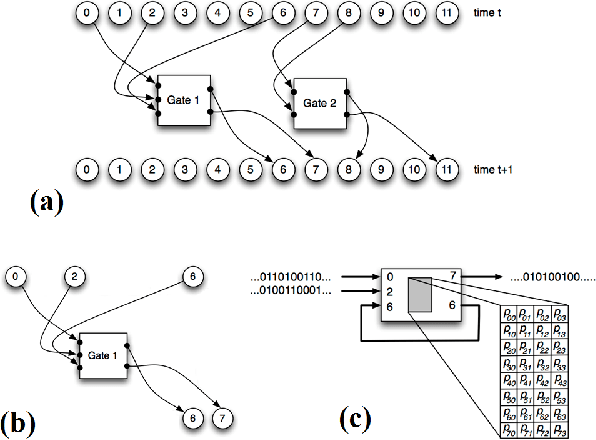

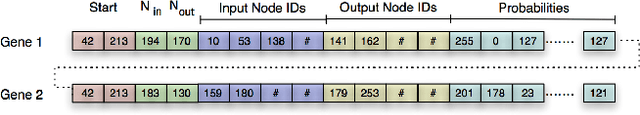

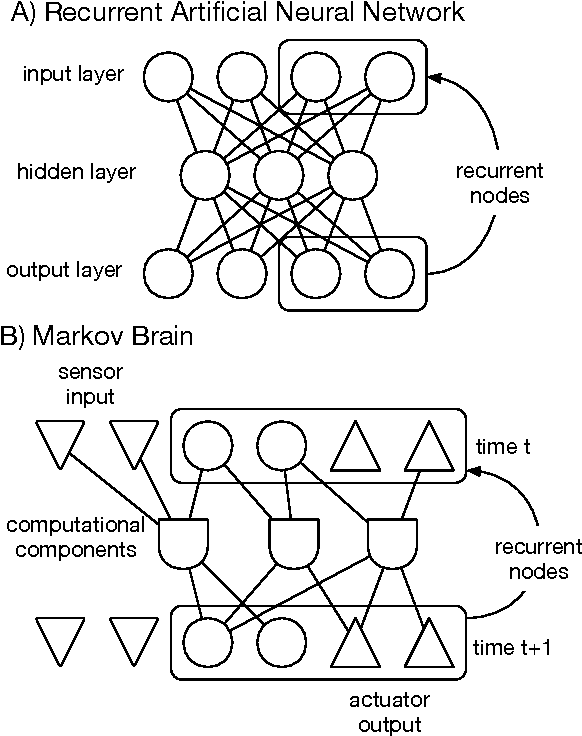

Markov Brains: A Technical Introduction

Sep 17, 2017

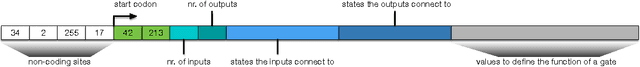

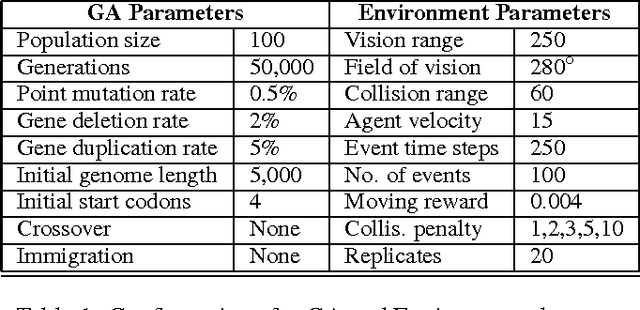

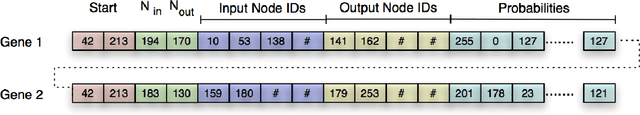

Abstract:Markov Brains are a class of evolvable artificial neural networks (ANN). They differ from conventional ANNs in many aspects, but the key difference is that instead of a layered architecture, with each node performing the same function, Markov Brains are networks built from individual computational components. These computational components interact with each other, receive inputs from sensors, and control motor outputs. The function of the computational components, their connections to each other, as well as connections to sensors and motors are all subject to evolutionary optimization. Here we describe in detail how a Markov Brain works, what techniques can be used to study them, and how they can be evolved.
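
As a rough illustration of "networks built from individual computational components", the sketch below models a brain as a vector of binary nodes updated by deterministic logic gates, each with its own input wiring, output wiring, and truth table. Everything here is a simplifying assumption for illustration: the `Gate` class, the `step` function, the four-node layout, and the rule that gates writing to the same node are combined with OR. It is not the implementation described in the paper.

```python
from dataclasses import dataclass


@dataclass
class Gate:
    """One hypothetical computational component."""
    inputs: list   # indices of the nodes this gate reads
    outputs: list  # indices of the nodes this gate writes
    table: dict    # maps a tuple of input bits to a tuple of output bits


def step(state, gates):
    """Compute the node states at time t+1 from the states at time t."""
    next_state = [0] * len(state)
    for gate in gates:
        key = tuple(state[i] for i in gate.inputs)
        for node, bit in zip(gate.outputs, gate.table[key]):
            # Assumption: several gates writing to one node are OR-ed together.
            next_state[node] |= bit
    return next_state


# Assumed layout: nodes 0 and 1 are sensors, node 2 is hidden, node 3 is a motor.
xor_gate = Gate(
    inputs=[0, 1],
    outputs=[2],
    table={(0, 0): (0,), (0, 1): (1,), (1, 0): (1,), (1, 1): (0,)},
)
copy_gate = Gate(inputs=[2], outputs=[3], table={(0,): (0,), (1,): (1,)})

state = [1, 0, 0, 0]                      # sensor 0 is active
state = step(state, [xor_gate, copy_gate])
print(state)                              # [0, 0, 1, 0]
state = step(state, [xor_gate, copy_gate])
print(state)                              # [0, 0, 0, 1]
```

In an evolutionary setting, the wiring (`inputs`, `outputs`) and the truth tables would be the quantities encoded in a genome and subject to mutation and selection, which is the sense in which the abstract says they are "subject to evolutionary optimization".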

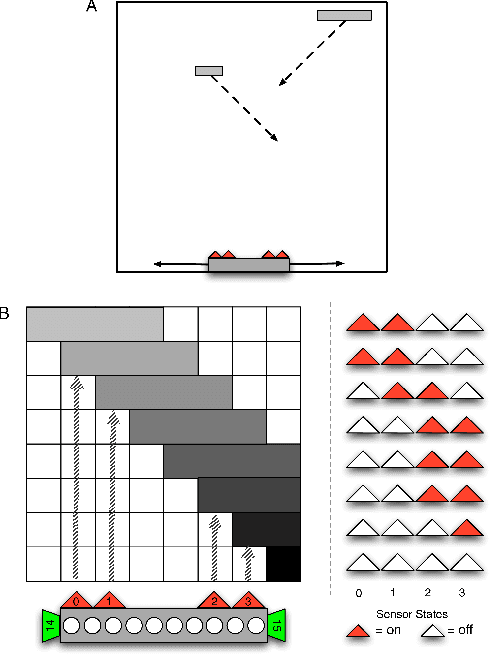

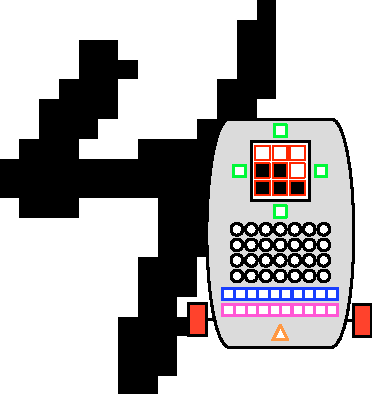

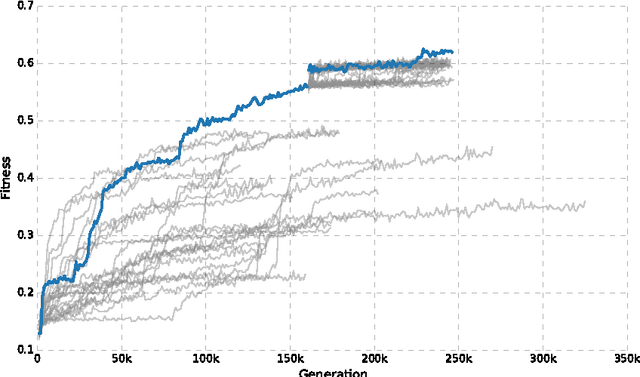

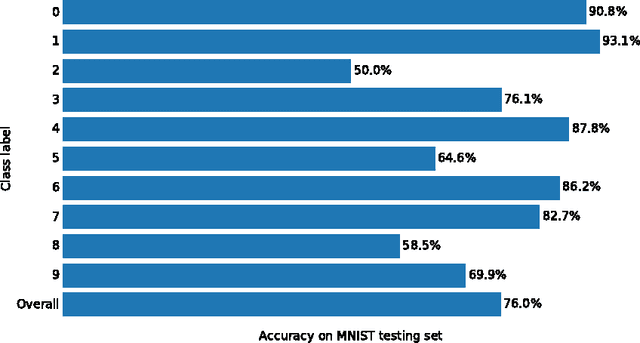

Evolution of active categorical image classification via saccadic eye movement

Jun 16, 2016

Abstract:Pattern recognition and classification is a central concern for modern information processing systems. In particular, one key challenge to image and video classification has been that the computational cost of image processing scales linearly with the number of pixels in the image or video. Here we present an intelligent machine (the "active categorical classifier," or ACC) that is inspired by the saccadic movements of the eye, and is capable of classifying images by selectively scanning only a portion of the image. We harness evolutionary computation to optimize the ACC on the MNIST hand-written digit classification task, and provide a proof-of-concept that the ACC works on noisy multi-class data. We further analyze the ACC and demonstrate its ability to classify images after viewing only a fraction of the pixels, and provide insight on future research paths to further improve upon the ACC presented here.

* 10 pages, 5 figures, to appear in PPSN 2016 conference proceedings

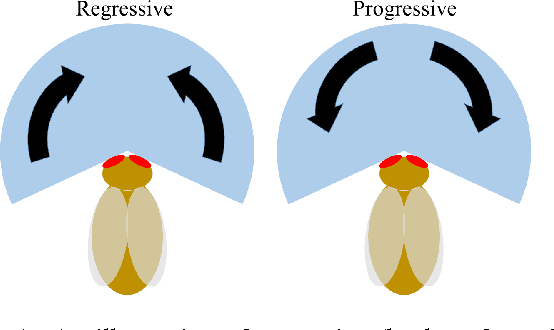

Flies as Ship Captains? Digital Evolution Unravels Selective Pressures to Avoid Collision in Drosophila

Mar 02, 2016

Abstract:Flies that walk in a covered planar arena on straight paths avoid colliding with each other, but which of the two flies stops is not random. High-throughput video observations, coupled with dedicated experiments with controlled robot flies, have revealed that flies utilize the type of optic flow on their retina as a determinant of who should stop, a strategy also used by ship captains to determine which of two ships on a collision course should throw engines in reverse. We use digital evolution to test whether this strategy evolves when collision avoidance is the sole penalty. We find that the strategy does indeed evolve in a narrow range of cost/benefit ratios, for experiments in which the "regressive motion" cue is error free. We speculate that these stringent conditions may not be sufficient to evolve the strategy in real flies, pointing perhaps to auxiliary costs and benefits not modeled in our study.

* 8 pages, 10 figures, submitted to 15th Artificial Life conference (ALife 2016)
