TaylorBeamformer: Learning All-Neural Multi-Channel Speech Enhancement from Taylor's Approximation Theory

Mar 14, 2022 · Andong Li, Guochen Yu, Chengshi Zheng, Xiaodong Li

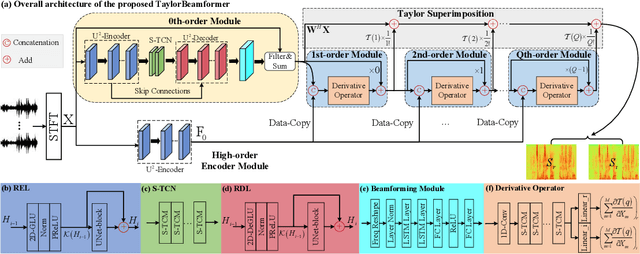

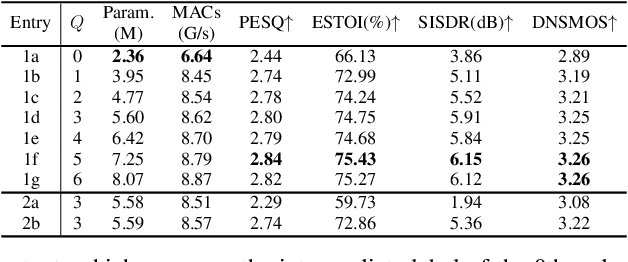

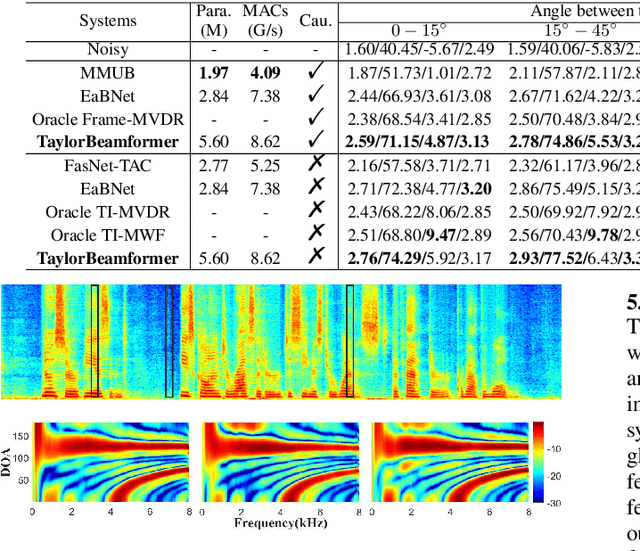

While existing end-to-end beamformers achieve impressive performance in various front-end speech tasks, they usually encapsulate the whole process into a black box and thus lack adequate interpretability. As an attempt to fill this gap, we propose a novel neural beamformer inspired by Taylor's approximation theory, called TaylorBeamformer, for multi-channel speech enhancement. The core idea is that the recovery process can be formulated as spatial filtering in the neighborhood of the input mixture. Based on that, we decompose it into the superimposition of the 0th-order non-derivative term and high-order derivative terms, where the former serves as the spatial filter and the latter are viewed as residual noise cancellers that further improve the speech quality. To enable end-to-end training, we replace the derivative operations with trainable networks, which can thus be learned from training data. Extensive experiments are conducted on a synthesized dataset based on LibriSpeech, and the results show that the proposed approach performs favorably against previous advanced baselines.
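The superimposition of a 0th-order term and higher-order terms can be illustrated with a toy sketch. It assumes the standard Taylor weights 1/q! for the q-th order term, which the abstract does not state, and it uses fixed hypothetical numbers where the paper uses trainable networks. The function name `taylor_superimpose` is illustrative only.

```python
from math import factorial

def taylor_superimpose(zeroth_order, derivative_terms):
    """Sum a 0th-order estimate with higher-order correction terms.

    zeroth_order: list of complex values (spatial-filter output per bin).
    derivative_terms: derivative_terms[q - 1] holds the q-th order term.
    The 1/q! weights are an assumption taken from the usual Taylor series.
    """
    out = list(zeroth_order)
    for q, term in enumerate(derivative_terms, start=1):
        weight = 1.0 / factorial(q)
        out = [o + weight * t for o, t in zip(out, term)]
    return out

# Hypothetical values for two frequency bins.
s0 = [1.0 + 0.5j, 0.2 - 0.1j]
terms = [
    [0.1 + 0.0j, -0.02 + 0.0j],  # 1st-order term
    [0.04 + 0.0j, 0.0 + 0.02j],  # 2nd-order term
]
enhanced = taylor_superimpose(s0, terms)
```

In this sketch the 0th-order term dominates and each higher-order term contributes a progressively smaller correction, which mirrors the "spatial filter plus residual noise canceller" reading of the decomposition.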

MDNet: Learning Monaural Speech Enhancement from Deep Prior Gradient

Mar 14, 2022 · Andong Li, Chengshi Zheng, Ziyang Zhang, Xiaodong Li

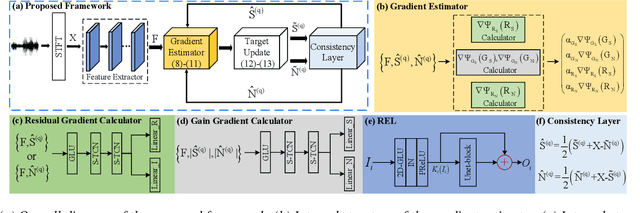

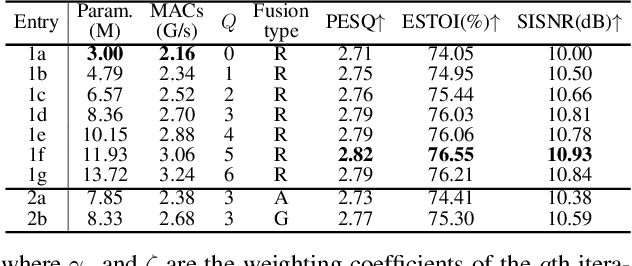

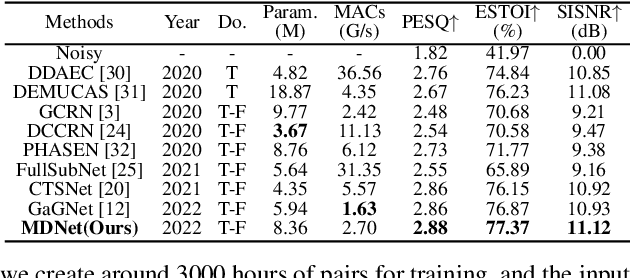

While traditional statistical signal processing model-based methods can derive the optimal estimators relying on specific statistical assumptions, current learning-based methods further raise the performance upper bound via deep neural networks, but at the expense of high encapsulation and a lack of adequate interpretability. Standing at the intersection between traditional model-based methods and learning-based methods, we propose a model-driven approach based on the maximum a posteriori (MAP) framework, termed MDNet, for single-channel speech enhancement. Specifically, the original problem is formulated as the joint posterior estimation w.r.t. speech and noise components. Instead of making manual assumptions about the prior terms, we propose to model the prior distributions via networks, which can thus be learned from training data. The framework takes an unfolding structure, and in each step the target parameters are progressively estimated through explicit gradient descent operations. In addition, another network serves as the fusion module to further refine the previous speech estimation. The experiments are conducted on the WSJ0-SI84 and Interspeech 2020 DNS-Challenge datasets, and quantitative results show that the proposed approach outperforms previous state-of-the-art baselines.
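The unfolded gradient descent idea can be sketched on a toy problem. The example below assumes an additive model y = s + n with a squared-error data term, and it swaps the learned prior networks for simple quadratic priors so that the result can be checked by hand. The function `unfolded_map`, the step size, and the prior weights are all hypothetical choices, not values from the paper.

```python
def unfolded_map(y, prior_grad_s, prior_grad_n, steps=100, lr=0.5):
    """Jointly estimate speech s and noise n from y = s + n.

    Each iteration is one explicit gradient step on
    0.5 * (y - s - n)^2 + prior_s(s) + prior_n(n).
    prior_grad_s / prior_grad_n return the prior gradients; in the
    paper these roles are played by networks, here by plain callables.
    """
    s = [0.0] * len(y)
    n = [0.0] * len(y)
    for _ in range(steps):
        residual = [yi - si - ni for yi, si, ni in zip(y, s, n)]
        gs = prior_grad_s(s)
        gn = prior_grad_n(n)
        s = [si - lr * (g - r) for si, r, g in zip(s, residual, gs)]
        n = [ni - lr * (g - r) for ni, r, g in zip(n, residual, gn)]
    return s, n

# Quadratic stand-in priors: a weak penalty on speech, a strong one on noise.
LAM_S, LAM_N = 0.1, 1.0
y = [1.2, -0.6, 2.4]
s_hat, n_hat = unfolded_map(
    y,
    prior_grad_s=lambda s: [LAM_S * v for v in s],
    prior_grad_n=lambda n: [LAM_N * v for v in n],
)
```

With these quadratic priors the stationary point has a closed form (s = 10y/12 and n = y/12), which makes the sketch easy to verify. A learned prior would not admit such a closed form, which is where a fixed number of unfolded steps becomes useful.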

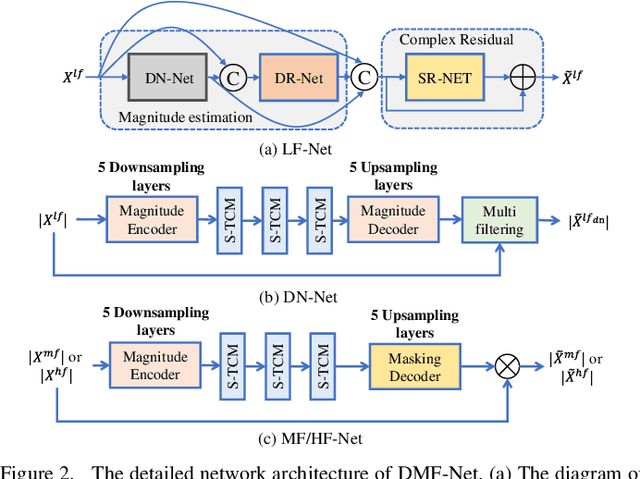

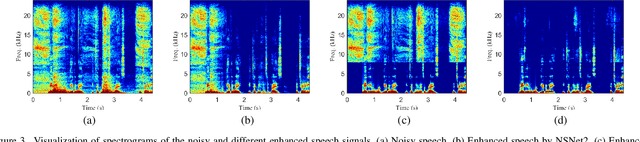

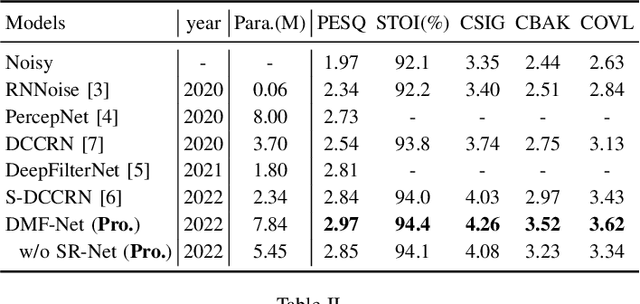

DMF-Net: A decoupling-style multi-band fusion model for real-time full-band speech enhancement

Mar 02, 2022 · Guochen Yu, Yuansheng Guan, Weixin Meng, Chengshi Zheng, Hui Wang

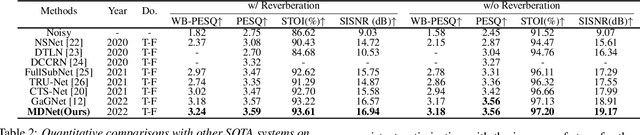

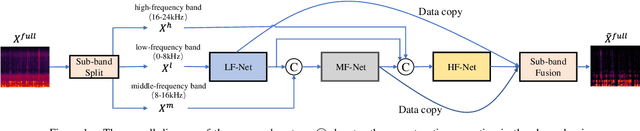

Full-band speech enhancement based on deep neural networks remains challenging because of the difficulty of modeling more frequency bands and of real-time implementation. Previous studies usually adopt compressed full-band speech features on the Bark or ERB scale with relatively low frequency resolution, leading to degraded performance, especially in the high-frequency region. In this paper, we propose a decoupling-style multi-band fusion model to perform full-band speech denoising and dereverberation. Instead of optimizing the full-band speech with a single network structure, we decompose the full-band target into multiple sub-bands and then employ a multi-stage chain optimization strategy to estimate the clean spectrum stage by stage. Specifically, the low- (0-8 kHz), middle- (8-16 kHz), and high-frequency (16-24 kHz) regions are mapped by three separate sub-networks and are then fused to obtain the full-band clean target STFT spectrum. Comprehensive experiments on two public datasets demonstrate that the proposed method outperforms previous advanced systems and yields promising performance in terms of speech quality and intelligibility in real complex scenarios.
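The band decomposition can be made concrete by mapping the three frequency regions to STFT bin ranges. The sketch below assumes a 48 kHz sampling rate (implied by the 24 kHz upper edge) and a 960-point FFT; the FFT size and the helper names are hypothetical, and simple concatenation is used for fusion, whereas the paper fuses the outputs of its sub-networks.

```python
def band_bin_ranges(sample_rate, n_fft, edges_hz):
    """Return half-open (lo, hi) STFT bin ranges for consecutive bands."""
    n_bins = n_fft // 2 + 1
    hz_per_bin = sample_rate / n_fft
    cuts = [round(edge / hz_per_bin) for edge in edges_hz]
    cuts[0] = 0
    cuts[-1] = n_bins  # the last band also includes the Nyquist bin
    return list(zip(cuts[:-1], cuts[1:]))

def split_bands(frame, ranges):
    """Slice one spectral frame into sub-band frames."""
    return [frame[lo:hi] for lo, hi in ranges]

def fuse_bands(bands):
    """Concatenate sub-band frames back into a full-band frame."""
    fused = []
    for band in bands:
        fused.extend(band)
    return fused

ranges = band_bin_ranges(48000, 960, [0, 8000, 16000, 24000])
# ranges == [(0, 160), (160, 320), (320, 481)]

frame = list(range(481))  # stand-in for one frame of STFT bins
low, mid, high = split_bands(frame, ranges)
full = fuse_bands([low, mid, high])
```

With a 50 Hz bin spacing, each of the first two bands covers 160 bins and the last covers 161, so the three ranges tile the 481 bins without overlap.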

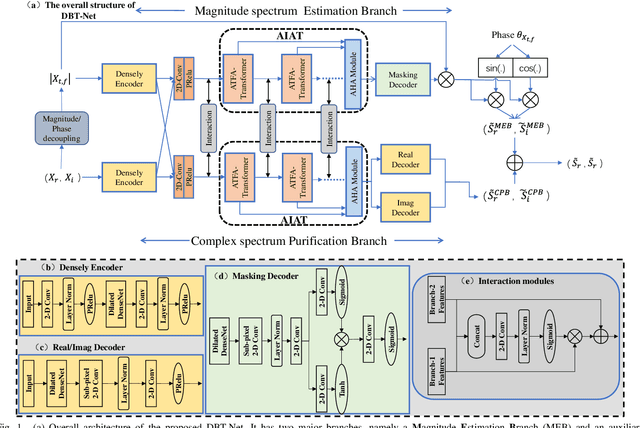

DBT-Net: Dual-branch federative magnitude and phase estimation with attention-in-attention transformer for monaural speech enhancement

Feb 16, 2022 · Guochen Yu, Andong Li, Hui Wang, Yutian Wang, Yuxuan Ke, Chengshi Zheng

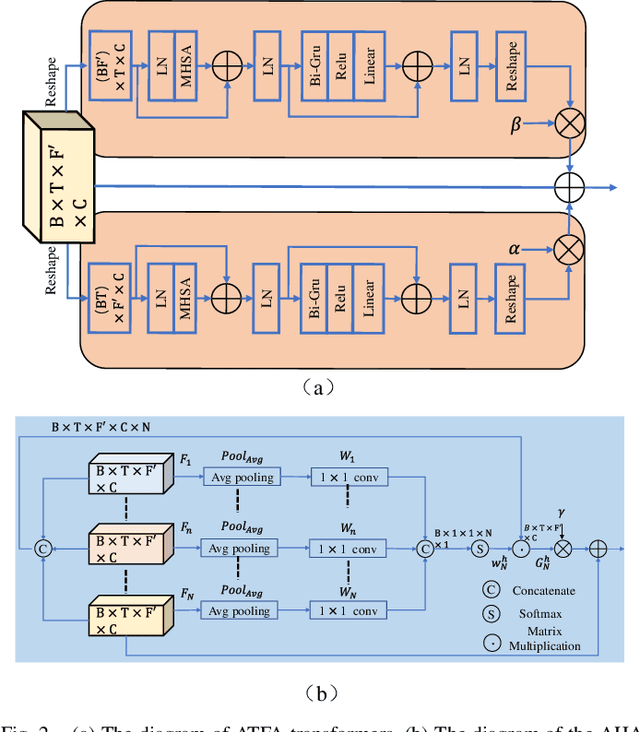

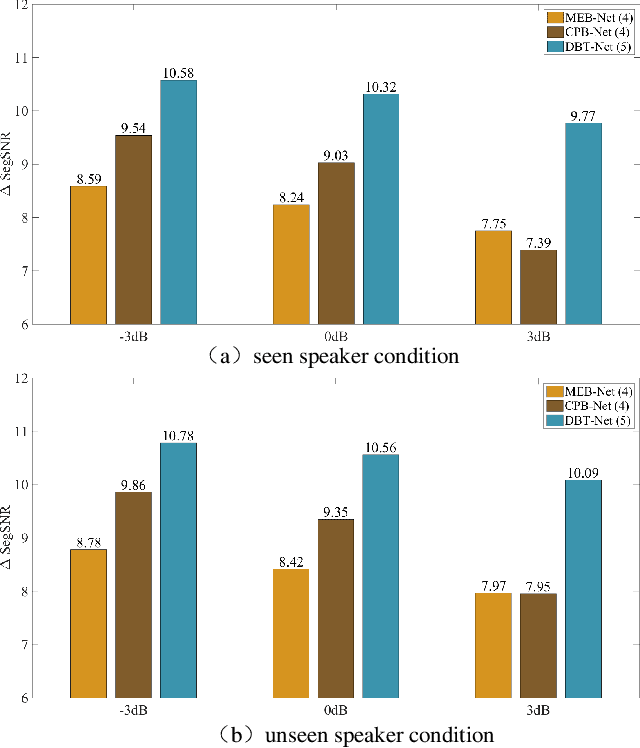

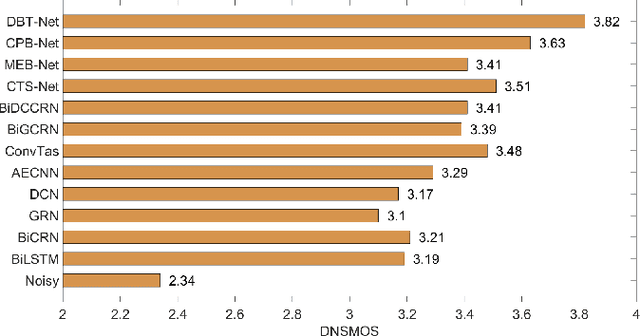

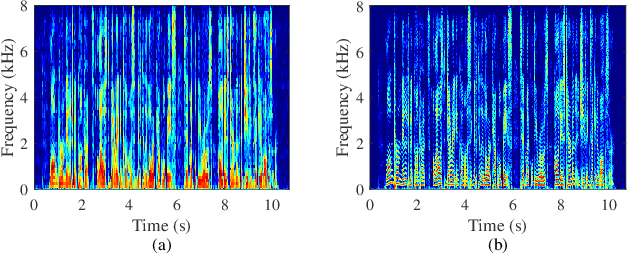

The decoupling-style concept is gaining traction in the speech enhancement area. It decouples the original complex spectrum estimation task into multiple easier sub-tasks (i.e., magnitude and phase estimation), resulting in better performance and easier interpretability. In this paper, we propose a dual-branch federative magnitude and phase estimation framework, dubbed DBT-Net, for monaural speech enhancement, which aims at recovering the coarse- and fine-grained regions of the overall spectrum in parallel. From the complementary perspective, the magnitude estimation branch is designed to filter out dominant noise components in the magnitude domain, while the complex spectrum purification branch is designed to inpaint the missing spectral details and implicitly estimate the phase information in the complex domain. To facilitate the information flow between the branches, interaction modules are introduced to leverage features learned from one branch, so as to suppress the undesired parts and recover the missing components of the other branch. Instead of adopting conventional RNNs and temporal convolutional networks for sequence modeling, we propose a novel attention-in-attention transformer-based network within each branch for better feature learning. More specifically, it is composed of several adaptive spectro-temporal attention transformer-based modules and an adaptive hierarchical attention module, aiming to capture long-term time-frequency dependencies and further aggregate intermediate hierarchical contextual information. Comprehensive evaluations on the WSJ0-SI84 + DNS-Challenge and VoiceBank + DEMAND datasets demonstrate that the proposed approach consistently outperforms previous advanced systems and yields state-of-the-art performance in terms of speech quality and intelligibility.

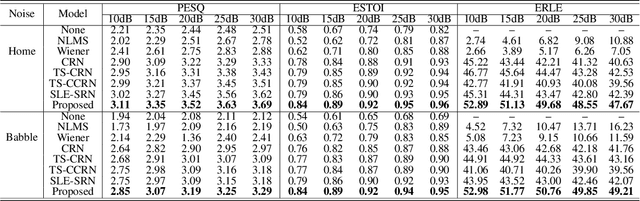

Low-latency Monaural Speech Enhancement with Deep Filter-bank Equalizer

Feb 14, 2022 · Chengshi Zheng, Wenzhe Liu, Andong Li, Yuxuan Ke, Xiaodong Li

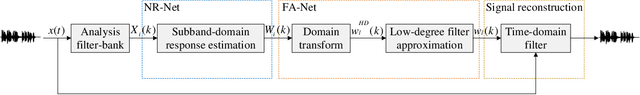

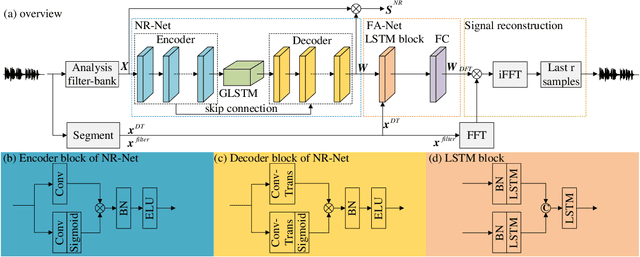

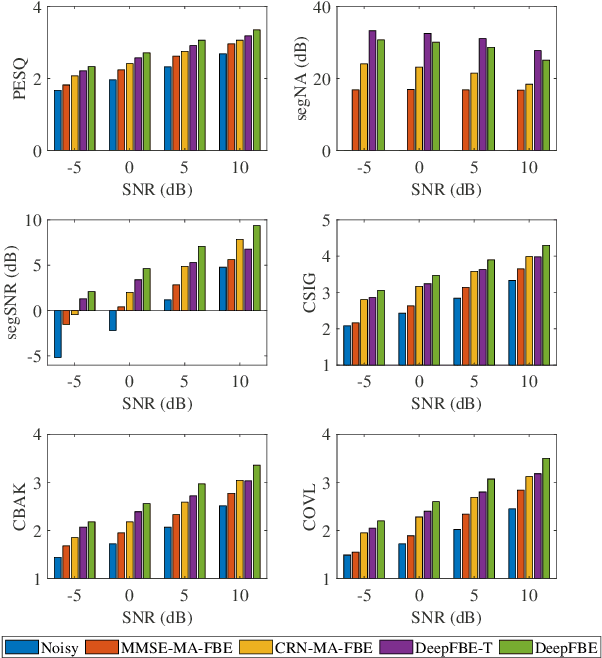

For many applications, such as digital hearing aids, acoustically transparent hearing devices, and public address systems, it is highly desirable that speech enhancement algorithms achieve good performance while keeping latency low. To improve the performance of traditional low-latency speech enhancement algorithms, a deep filter-bank equalizer (FBE) framework was proposed, which integrated a deep learning-based subband noise reduction network with a deep learning-based shortened digital filter mapping network. In the first network, a deep learning model was trained with a controllable small frame shift to satisfy the low-latency demand, i.e., $\le$ 4 ms, so as to obtain (complex) subband gains, which could be regarded as an adaptive digital filter in each frame. In the second network, to reduce the latency, this adaptive digital filter was implicitly shortened by a deep learning-based framework and was then applied to the noisy speech to reconstruct the enhanced speech without the overlap-add method. Experimental results on the WSJ0-SI84 corpus indicated that the proposed deep FBE with only 4-ms latency achieved much better performance than traditional low-latency speech enhancement algorithms in terms of indices such as PESQ, STOI, and the amount of noise reduction.
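The idea that subband gains act as a digital filter applied directly in the time domain can be sketched as follows. This is a minimal illustration, assuming real, conjugate-symmetric gains and a plain inverse DFT to obtain the filter taps; it does not reproduce the learned filter shortening described in the abstract, and the function names are hypothetical.

```python
import cmath

def gains_to_fir(gains):
    """Inverse DFT of per-subband gains, giving FIR filter taps.

    Assumes real gains with gains[k] == gains[N - k], so that the
    impulse response is real and the imaginary parts can be dropped.
    """
    n = len(gains)
    taps = []
    for t in range(n):
        acc = sum(
            g * cmath.exp(2j * cmath.pi * k * t / n)
            for k, g in enumerate(gains)
        )
        taps.append((acc / n).real)
    return taps

def apply_fir(signal, taps):
    """Direct-form time-domain filtering, with no overlap-add step."""
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for t, h in enumerate(taps):
            if i - t >= 0:
                acc += h * signal[i - t]
        out.append(acc)
    return out

# Unity gains in every subband should leave the signal unchanged.
unity_taps = gains_to_fir([1.0] * 8)
noisy = [0.5, -1.0, 2.0, 0.25]
filtered = apply_fir(noisy, unity_taps)
```

Because the filter is applied sample by sample, the output does not have to wait for a full synthesis frame, which is the property that makes this structure attractive for low-latency processing.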

A Neural Beam Filter for Real-time Multi-channel Speech Enhancement

Feb 05, 2022 · Wenzhe Liu, Andong Li, Chengshi Zheng, Xiaodong Li

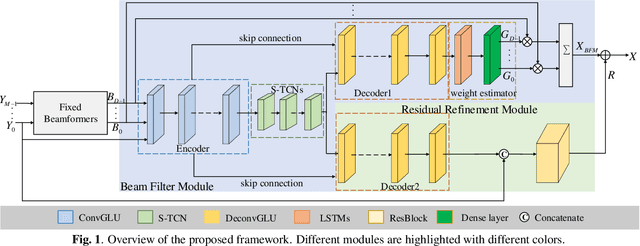

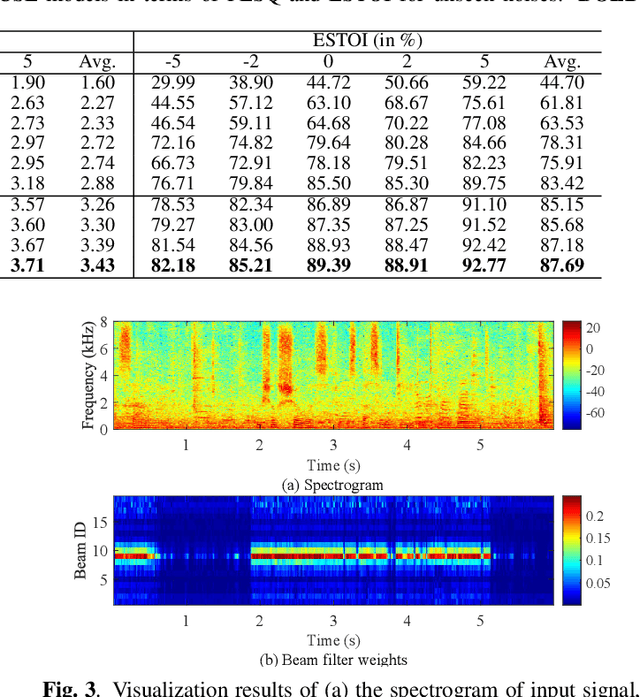

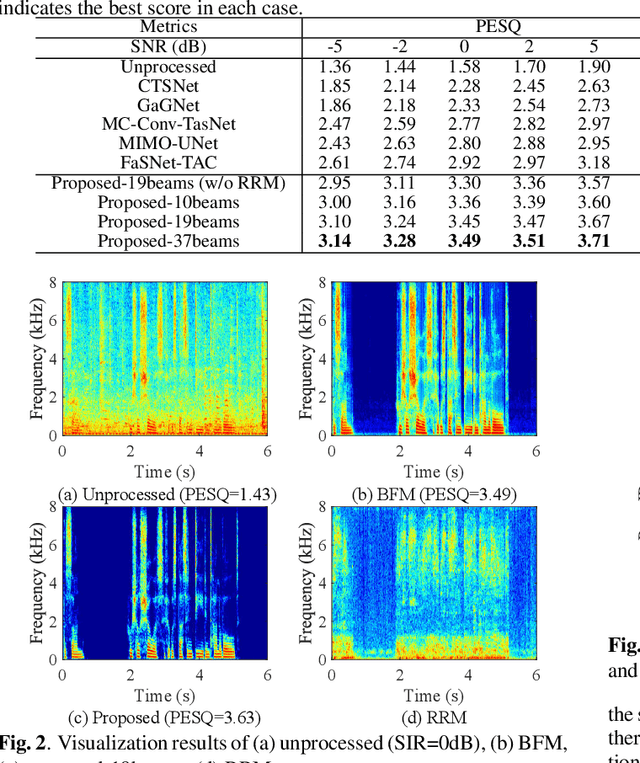

Most deep learning-based multi-channel speech enhancement methods focus on designing a set of beamforming coefficients to directly filter the low signal-to-noise ratio signals received by the microphones, which hinders the performance of these approaches. To address this problem, this paper designs a causal neural beam filter that fully exploits the spatial-spectral information in the beam domain. Specifically, in the first stage, multiple beams are designed to steer towards all directions using a parameterized super-directive beamformer. In the second stage, the neural spatial filter is learned by simultaneously modeling the spatial and spectral discriminability of the speech and the interference, so as to extract the desired speech coarsely. Finally, to further suppress the interference components, especially at low frequencies, a residual estimation module is adopted to refine the output of the second stage. Experimental results demonstrate that the proposed approach outperforms many state-of-the-art multi-channel methods on a multi-channel speech dataset generated from the DNS-Challenge dataset.

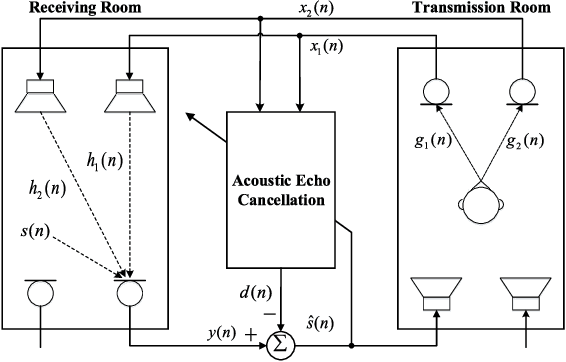

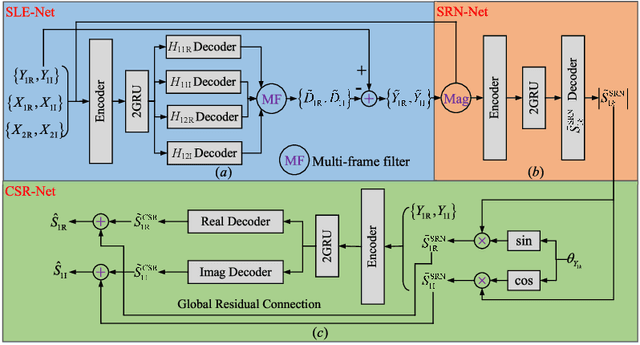

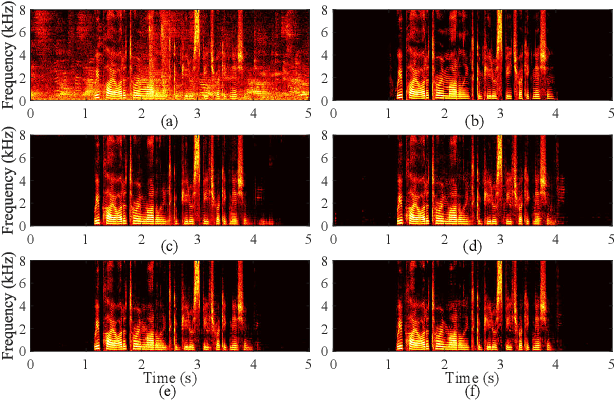

A deep complex network with multi-frame filtering for stereophonic acoustic echo cancellation

Feb 03, 2022 · Linjuan Cheng, Chengshi Zheng, Andong Li, Renhua Peng, Xiaodong Li

In hands-free communication systems, the coupling between the loudspeaker and the microphone generates an echo signal, which can severely impair the quality of communication. Meanwhile, various types of noise in the communication environment further degrade speech quality and intelligibility. It is hard to extract the near-end signal from the microphone input signal in one step, especially at low signal-to-noise ratios. In this paper, we propose a multi-stage approach to address this issue. On the one hand, we decompose echo cancellation into two stages, a linear echo cancellation module and a residual echo suppression module. A multi-frame filtering strategy is introduced to improve linear echo estimation by utilizing more inter-frame information. On the other hand, we decouple the complex spectral mapping into magnitude estimation and complex spectrum refinement. Experimental results demonstrate that our proposed approach achieves state-of-the-art performance over previous advanced algorithms under various conditions.

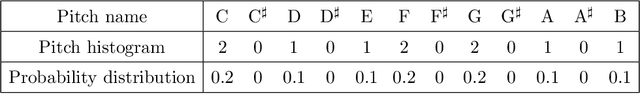

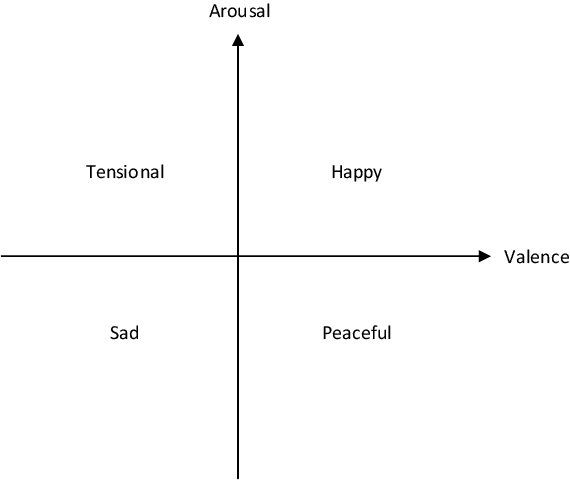

EmotionBox: a music-element-driven emotional music generation system using Recurrent Neural Network

Dec 16, 2021 · Kaitong Zheng, Ruijie Meng, Chengshi Zheng, Xiaodong Li, Jinqiu Sang, Juanjuan Cai, Jie Wang

With the development of deep neural networks, automatic music composition has made great progress. Although emotional music can evoke different emotions in listeners and is important for artistic expression, only a few studies have focused on generating emotional music. This paper presents EmotionBox, a music-element-driven emotional music generator that is capable of composing music given a specific emotion and that does not require a music dataset labeled with emotions. Instead, pitch histogram and note density are extracted as features that represent mode and tempo, respectively, to control music emotions. Subjective listening tests show that EmotionBox has a more competitive and balanced performance in arousing a specified emotion than the emotion-label-based method.
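The two control features can be sketched for a short note sequence. The example assumes MIDI pitch numbers folded into 12 pitch classes for the histogram and a notes-per-second definition of note density; the exact definitions used by EmotionBox are not given in the abstract, so both helper functions are hypothetical.

```python
def pitch_histogram(midi_pitches):
    """Normalized count of notes in each of the 12 pitch classes."""
    counts = [0] * 12
    for pitch in midi_pitches:
        counts[pitch % 12] += 1
    total = sum(counts)
    if total == 0:
        return [0.0] * 12
    return [c / total for c in counts]

def note_density(onset_times, duration_seconds):
    """Number of note onsets per second over the excerpt."""
    return len(onset_times) / duration_seconds

# A C major arpeggio (C4, E4, G4, C5) played over two seconds.
pitches = [60, 64, 67, 72]
onsets = [0.0, 0.5, 1.0, 1.5]
hist = pitch_histogram(pitches)
density = note_density(onsets, 2.0)
```

Here the histogram concentrates on pitch classes C, E, and G, a pattern associated with a major mode, while the density of 2 notes per second acts as a rough proxy for tempo.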

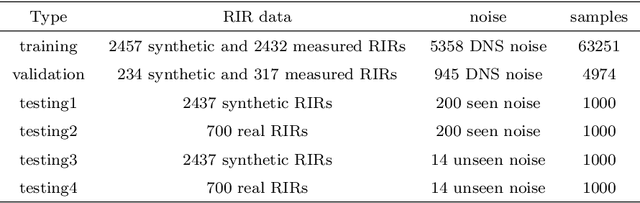

Noise-robust blind reverberation time estimation using noise-aware time-frequency masking

Dec 09, 2021 · Kaitong Zheng, Chengshi Zheng, Jinqiu Sang, Yulong Zhang, Xiaodong Li

The reverberation time is one of the most important parameters used to characterize the acoustic property of an enclosure. In real-world scenarios, it is much more convenient to estimate the reverberation time blindly from recorded speech compared to the traditional acoustic measurement techniques using professional measurement instruments. However, the recorded speech is often corrupted by noise, which has a detrimental effect on the estimation accuracy of the reverberation time. To address this issue, this paper proposes a two-stage blind reverberation time estimation method based on noise-aware time-frequency masking. This proposed method has a good ability to distinguish the reverberation tails from the noise, thus improving the estimation accuracy of reverberation time in noisy scenarios. The simulated and real-world acoustic experimental results show that the proposed method significantly outperforms other methods in challenging scenarios.

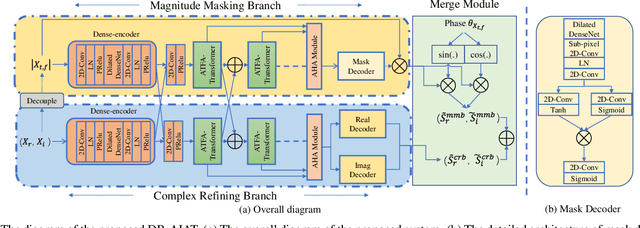

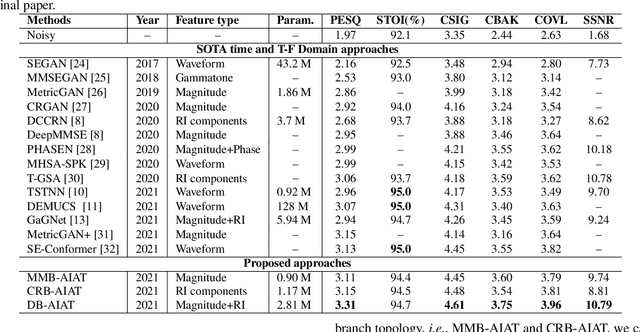

Dual-branch Attention-In-Attention Transformer for single-channel speech enhancement

Nov 05, 2021 · Guochen Yu, Andong Li, Yutian Wang, Yinuo Guo, Hui Wang, Chengshi Zheng

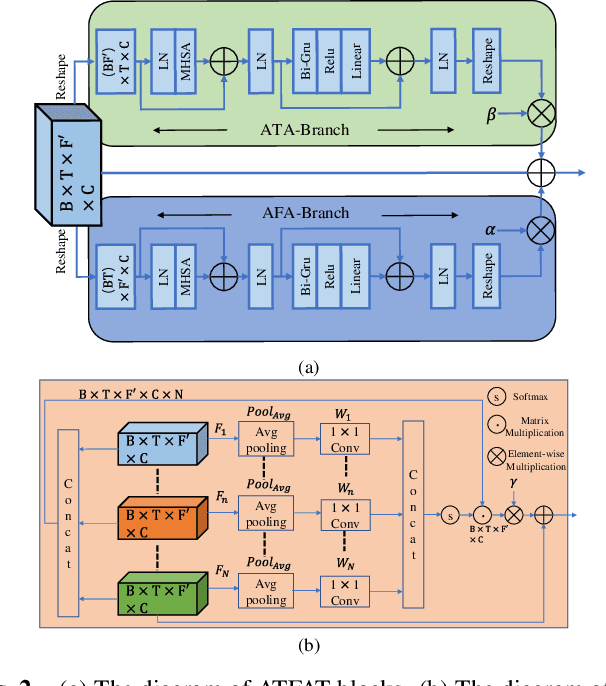

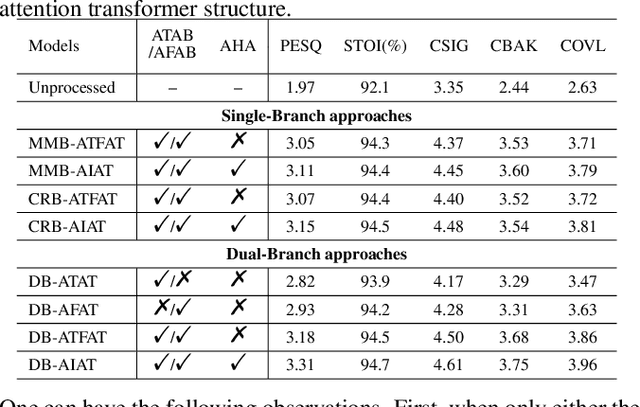

Curriculum learning is beginning to thrive in the speech enhancement area; it decouples the original spectrum estimation task into multiple easier sub-tasks to achieve better performance. Motivated by that, we propose a dual-branch attention-in-attention transformer, dubbed DB-AIAT, to handle both coarse- and fine-grained regions of the spectrum in parallel. From a complementary perspective, a magnitude masking branch is proposed to coarsely estimate the overall magnitude spectrum, and simultaneously a complex refining branch is designed to compensate for the missing spectral details and implicitly derive phase information. Within each branch, we propose a novel attention-in-attention transformer-based module to replace the conventional RNNs and temporal convolutional networks for temporal sequence modeling. Specifically, the proposed attention-in-attention transformer consists of adaptive temporal-frequency attention transformer blocks and an adaptive hierarchical attention module, aiming to capture long-term temporal-frequency dependencies and further aggregate global hierarchical contextual information. Experimental results on Voice Bank + DEMAND demonstrate that DB-AIAT yields state-of-the-art performance (e.g., 3.31 PESQ, 95.6% STOI and 10.79 dB SSNR) over previous advanced systems with a relatively small model size (2.81M).
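One way to picture how the two branches complement each other is a toy fusion of their outputs. The sketch assumes that the masked magnitude is paired with the noisy phase to form a coarse spectrum and that the complex branch output is added as a residual; this additive fusion is an assumption for illustration, and the mask and residual values below are made up rather than produced by any network.

```python
import cmath

def fuse_branches(noisy_spec, mag_mask, complex_residual):
    """Combine a magnitude-masking estimate with a complex residual.

    noisy_spec: complex STFT bins of the noisy input.
    mag_mask: real-valued gains from the magnitude branch.
    complex_residual: complex corrections from the refining branch.
    """
    fused = []
    for x, m, r in zip(noisy_spec, mag_mask, complex_residual):
        # Coarse estimate: masked magnitude with the noisy phase.
        coarse = m * abs(x) * cmath.exp(1j * cmath.phase(x))
        # Fine estimate: add the complex correction, which can also
        # shift the phase away from the noisy one.
        fused.append(coarse + r)
    return fused

noisy = [3.0 + 4.0j, -1.0 + 0.0j]
mask = [0.5, 0.8]
residual = [0.1 - 0.2j, 0.0 + 0.1j]
estimate = fuse_branches(noisy, mask, residual)
```

The coarse term alone can only rescale each bin along the noisy phase, so any phase correction in this sketch has to come from the complex residual.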
