Asaf Shabtai

Adversarial Machine Learning Threat Analysis in Open Radio Access Networks

Jan 16, 2022

Abstract:The Open Radio Access Network (O-RAN) is a new, open, adaptive, and intelligent RAN architecture. Motivated by the success of artificial intelligence in other domains, O-RAN strives to leverage machine learning (ML) to automatically and efficiently manage network resources in diverse use cases such as traffic steering, quality of experience prediction, and anomaly detection. Unfortunately, ML-based systems are not free of vulnerabilities; specifically, they suffer from a special type of logical vulnerability that stems from the inherent limitations of the learning algorithms. To exploit these vulnerabilities, an adversary can utilize an attack technique referred to as adversarial machine learning (AML). This type of attack has already been demonstrated in recent research. In this paper, we present a systematic AML threat analysis for the O-RAN. We start by reviewing relevant ML use cases and analyzing the different ML workflow deployment scenarios in O-RAN. Then, we define the threat model, identifying potential adversaries, enumerating their adversarial capabilities, and analyzing their main goals. Finally, we explore the various AML threats in the O-RAN, review a large number of attacks that can be performed to materialize these threats, and demonstrate an AML attack on a traffic steering model.

Adversarial Mask: Real-World Adversarial Attack Against Face Recognition Models

Nov 21, 2021

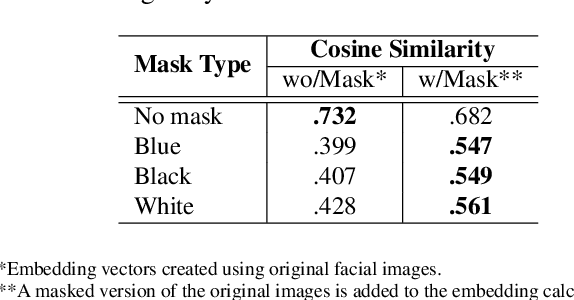

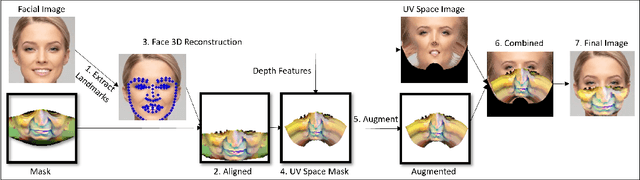

Abstract:Deep learning-based facial recognition (FR) models have demonstrated state-of-the-art performance in the past few years, even when wearing protective medical face masks became commonplace during the COVID-19 pandemic. Given the outstanding performance of these models, the machine learning research community has shown increasing interest in challenging their robustness. Initially, researchers presented adversarial attacks in the digital domain, and later the attacks were transferred to the physical domain. However, in many cases, attacks in the physical domain are conspicuous, requiring, for example, the placement of a sticker on the face, and thus may raise suspicion in real-world environments (e.g., airports). In this paper, we propose Adversarial Mask, a physical universal adversarial perturbation (UAP) against state-of-the-art FR models that is applied on face masks in the form of a carefully crafted pattern. In our experiments, we examined the transferability of our adversarial mask to a wide range of FR model architectures and datasets. In addition, we validated our adversarial mask's effectiveness in real-world experiments by printing the adversarial pattern on a fabric medical face mask, causing the FR system to identify only 3.34% of the participants wearing the mask (compared to a minimum of 83.34% with other evaluated masks).

Dodging Attack Using Carefully Crafted Natural Makeup

Sep 14, 2021

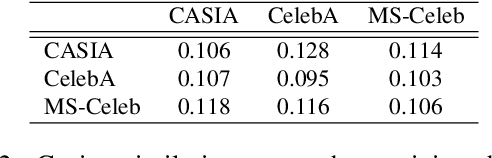

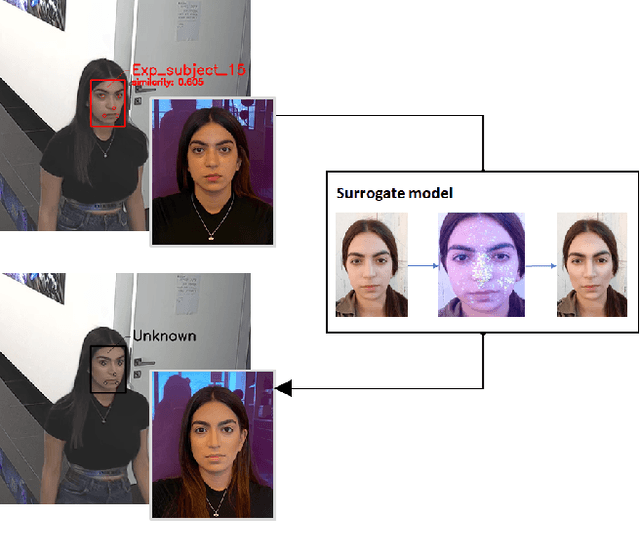

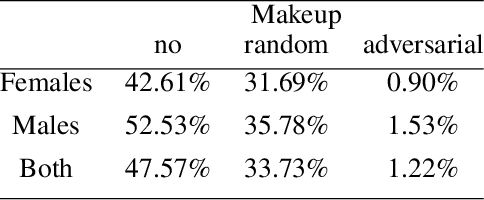

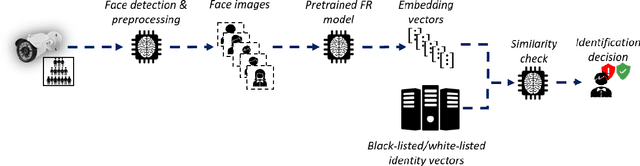

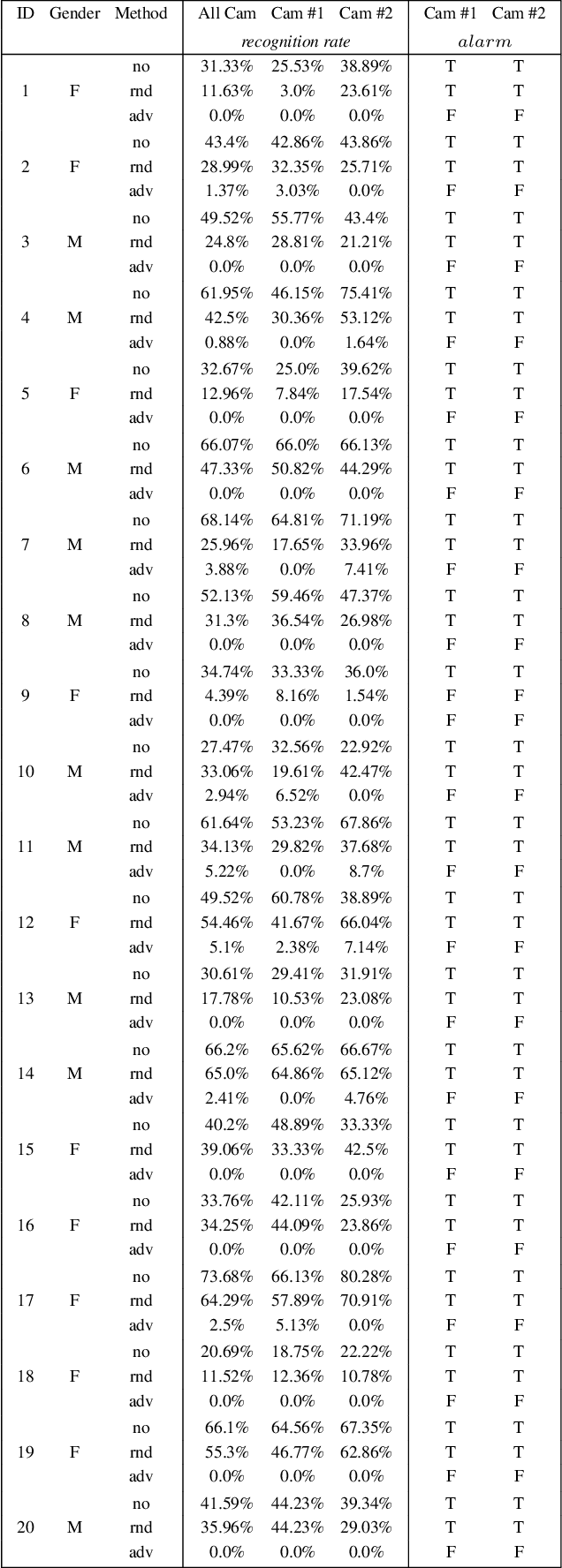

Abstract:Deep learning face recognition models are used by state-of-the-art surveillance systems to identify individuals passing through public areas (e.g., airports). Previous studies have demonstrated the use of adversarial machine learning (AML) attacks to successfully evade identification by such systems, both in the digital and physical domains. Attacks in the physical domain, however, require significant manipulation of the human participant's face, which can raise suspicion among human observers (e.g., airport security officers). In this study, we present a novel black-box AML attack that carefully crafts natural makeup which, when applied to a human participant, prevents the participant from being identified by facial recognition models. We evaluated our proposed attack against the ArcFace face recognition model, with 20 participants in a real-world setup that includes two cameras, different shooting angles, and different lighting conditions. The evaluation results show that in the digital domain, the face recognition system was unable to identify all of the participants, while in the physical domain, the face recognition system was able to identify the participants in only 1.22% of the frames (compared to 47.57% without makeup and 33.73% with random natural makeup), which is below a reasonable threshold of a realistic operational environment.

A Framework for Evaluating the Cybersecurity Risk of Real World, Machine Learning Production Systems

Jul 05, 2021

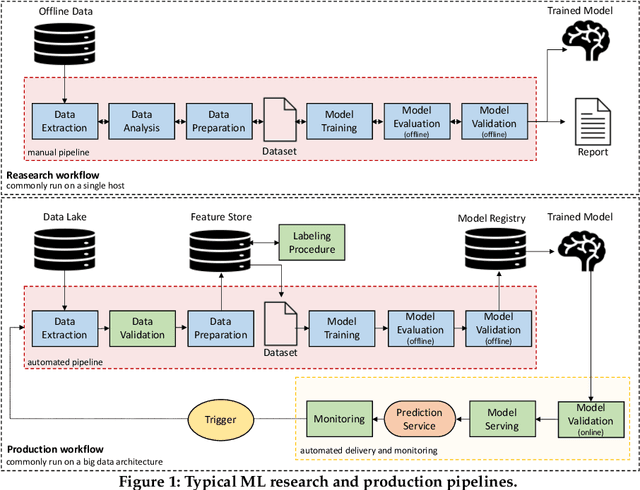

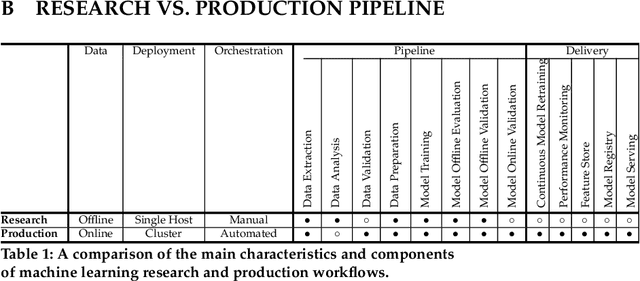

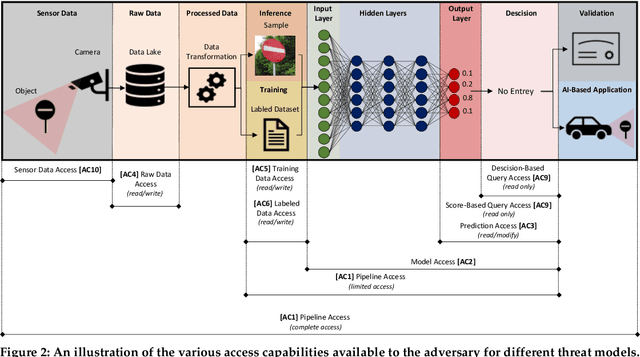

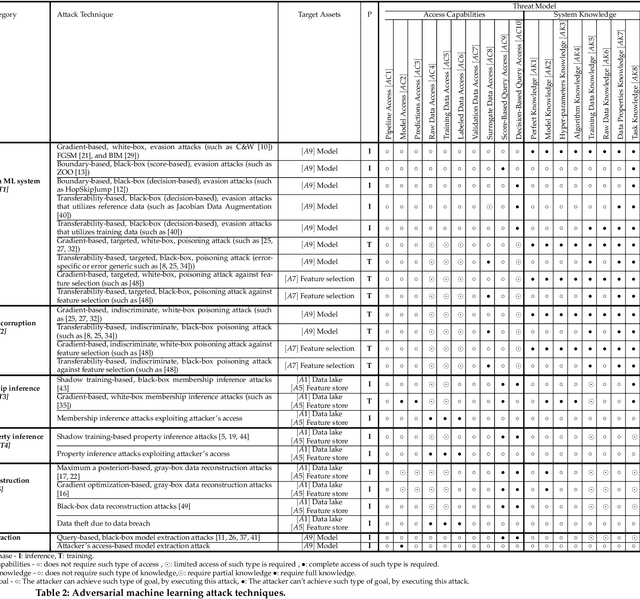

Abstract:Although cyberattacks on machine learning (ML) production systems can be destructive, many industry practitioners are ill-equipped, lacking tactical and strategic tools that would allow them to analyze, detect, protect against, and respond to cyberattacks targeting their ML-based systems. In this paper, we take a significant step toward securing ML production systems by integrating these systems and their vulnerabilities into cybersecurity risk assessment frameworks. Specifically, we performed a comprehensive threat analysis of ML production systems and developed an extension to the MulVAL attack graph generation and analysis framework to incorporate cyberattacks on ML production systems. Using the proposed extension, security practitioners can apply attack graph analysis methods in environments that include ML components, thus providing security experts with a practical tool for evaluating the impact and quantifying the risk of a cyberattack targeting an ML production system.

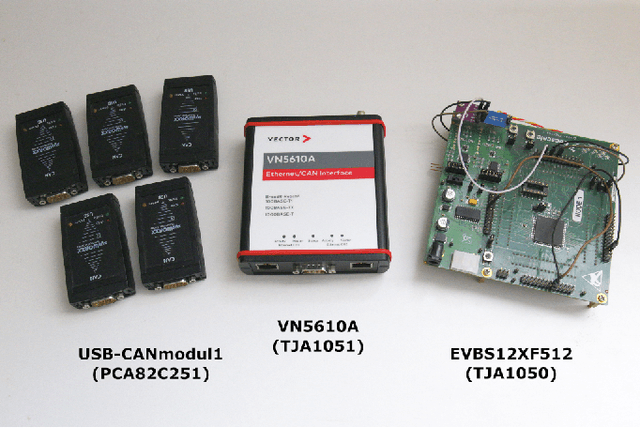

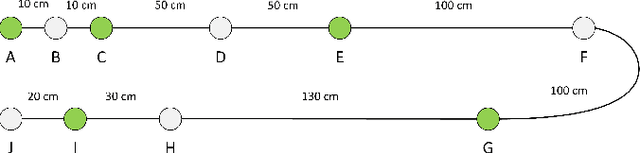

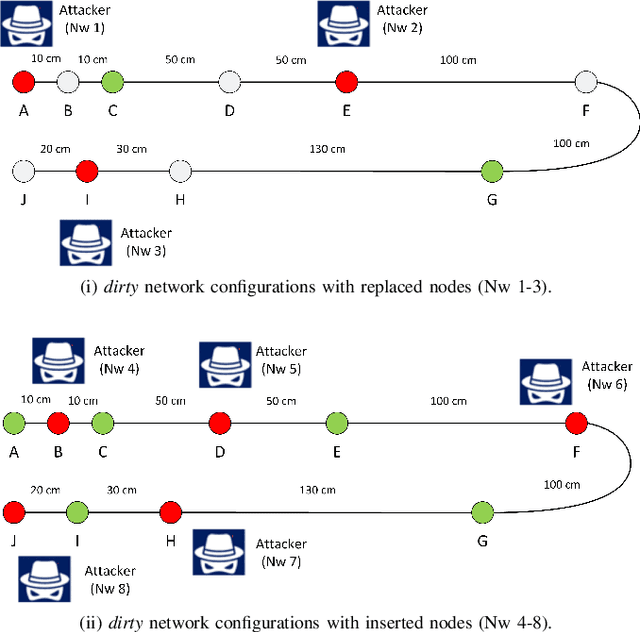

CAN-LOC: Spoofing Detection and Physical Intrusion Localization on an In-Vehicle CAN Bus Based on Deep Features of Voltage Signals

Jun 15, 2021

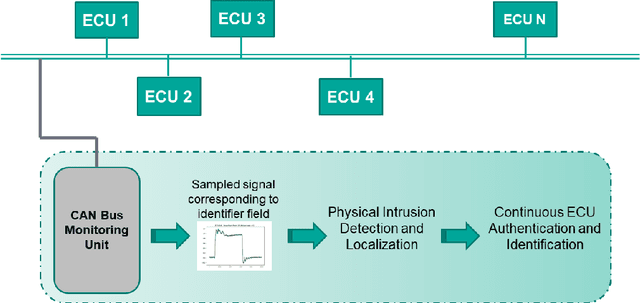

Abstract:The Controller Area Network (CAN) is used for communication between in-vehicle devices. The CAN bus has been shown to be vulnerable to remote attacks. To harden vehicles against such attacks, vehicle manufacturers have divided in-vehicle networks into sub-networks, logically isolating critical devices. However, attackers may still have physical access to various sub-networks where they can connect a malicious device. This threat has not been adequately addressed, as methods proposed to determine physical intrusion points have shown weak results, emphasizing the need to develop more advanced techniques. To address this type of threat, we propose a security hardening system for in-vehicle networks. The proposed system includes two mechanisms that process deep features extracted from voltage signals measured on the CAN bus. The first mechanism uses data augmentation and deep learning to detect and locate physical intrusions when the vehicle starts; this mechanism can detect and locate intrusions, even when the connected malicious devices are silent. This mechanism's effectiveness (100% accuracy) is demonstrated in a wide variety of insertion scenarios on a CAN bus prototype. The second mechanism is a continuous device authentication mechanism, which is also based on deep learning; this mechanism's robustness (99.8% accuracy) is demonstrated on a real moving vehicle.

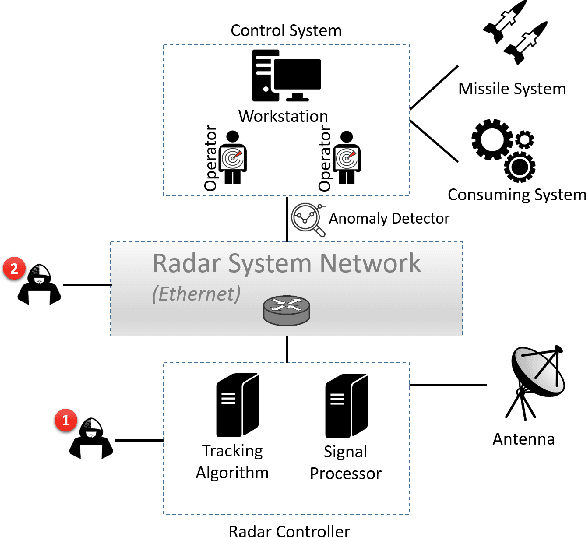

RadArnomaly: Protecting Radar Systems from Data Manipulation Attacks

Jun 13, 2021

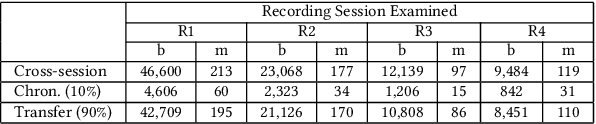

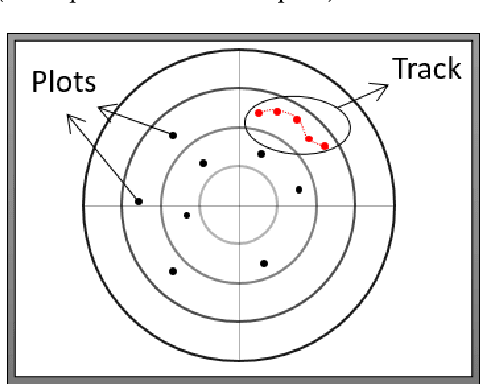

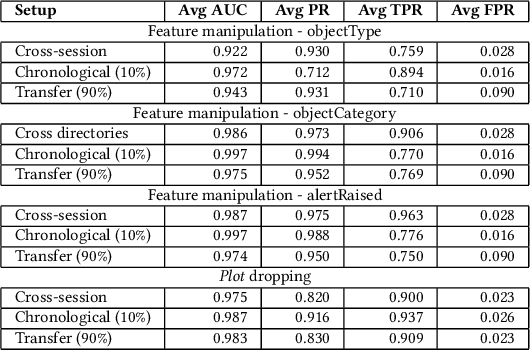

Abstract:Radar systems are mainly used for tracking aircraft, missiles, satellites, and watercraft. In many cases, information regarding the objects detected by the radar system is sent to, and used by, a peripheral consuming system, such as a missile system or a graphical user interface used by an operator. Those systems process the data stream and make real-time, operational decisions based on the data received. Given this, the reliability and availability of information provided by radar systems have grown in importance. Although the field of cyber security has been continuously evolving, no prior research has focused on anomaly detection in radar systems. In this paper, we present a deep learning-based method for detecting anomalies in radar system data streams. We propose a novel technique that learns the correlation between numerical features and an embedding representation of categorical features in an unsupervised manner. The proposed technique, which allows the detection of malicious manipulation of critical fields in the data stream, is complemented by a timing-interval anomaly detection mechanism proposed for the detection of message dropping attempts. Real radar system data is used to evaluate the proposed method. Our experiments demonstrate the method's high detection accuracy on a variety of data stream manipulation attacks (average detection rate of 88% with 1.59% false alarms) and message dropping attacks (average detection rate of 92% with 2.2% false alarms).
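
The timing-interval idea mentioned in this abstract can be illustrated with a minimal sketch. It is not the paper's mechanism: the abstract gives no details, so the sketch assumes a simple rule (flag any gap larger than the benign mean plus `k` standard deviations). The function names, the parameter `k`, and the sample timestamps are all hypothetical.

```python
from statistics import mean, stdev

def fit_interval_threshold(benign_timestamps, k=3.0):
    """Learn an upper bound on the gap between consecutive benign messages.

    Assumption: gaps are summarized by mean + k * standard deviation.
    """
    gaps = [b - a for a, b in zip(benign_timestamps, benign_timestamps[1:])]
    return mean(gaps) + k * stdev(gaps)

def flag_dropped_messages(timestamps, threshold):
    """Return each index i whose gap to the previous message exceeds the threshold."""
    return [
        i
        for i in range(1, len(timestamps))
        if timestamps[i] - timestamps[i - 1] > threshold
    ]

# Hypothetical benign stream: roughly one message per second.
benign = [0.0, 1.0, 2.1, 3.0, 4.05, 5.0, 6.0]
threshold = fit_interval_threshold(benign)

# Hypothetical observed stream in which the messages near t=3 and t=4 are missing.
observed = [0.0, 1.0, 2.0, 5.0, 6.0]
suspicious = flag_dropped_messages(observed, threshold)
```

Here `suspicious` holds the positions in `observed` that follow an unusually long silence, which is the signal a message-dropping attack would leave in the timing of the stream.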

TANTRA: Timing-Based Adversarial Network Traffic Reshaping Attack

Mar 10, 2021

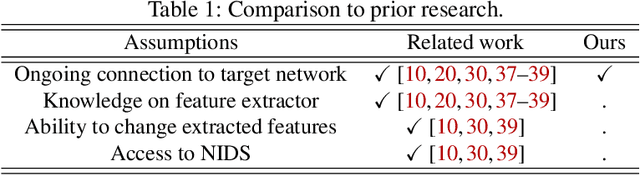

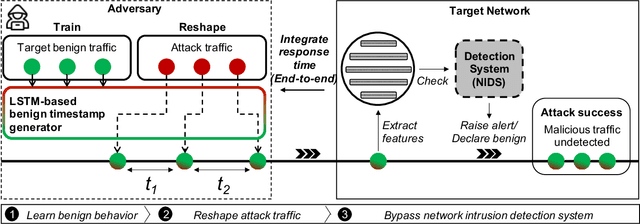

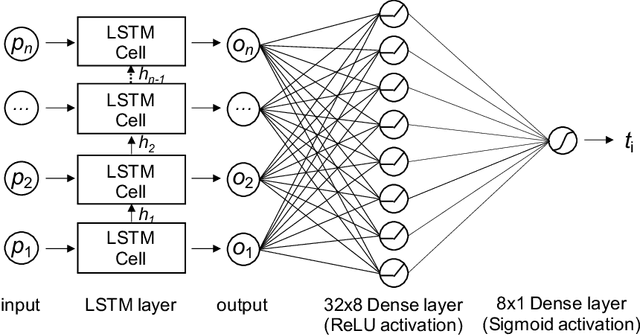

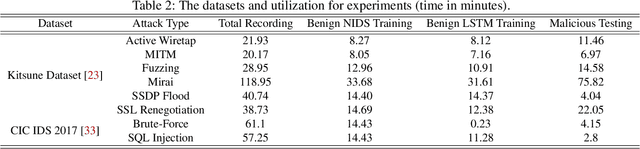

Abstract:Network intrusion attacks are a known threat. To detect such attacks, network intrusion detection systems (NIDSs) have been developed and deployed. These systems apply machine learning models to high-dimensional vectors of features extracted from network traffic to detect intrusions. Advances in NIDSs have made it challenging for attackers, who must execute attacks without being detected by these systems. Prior research on bypassing NIDSs has mainly focused on perturbing the features extracted from the attack traffic to fool the detection system; however, this may jeopardize the attack's functionality. In this work, we present TANTRA, a novel end-to-end Timing-based Adversarial Network Traffic Reshaping Attack that can bypass a variety of NIDSs. Our evasion attack utilizes a long short-term memory (LSTM) deep neural network (DNN) which is trained to learn the time differences between the target network's benign packets. The trained LSTM is used to set the time differences between the malicious traffic packets (attack), without changing their content, such that they will "behave" like benign network traffic and will not be detected as an intrusion. We evaluate TANTRA on eight common intrusion attacks and three state-of-the-art NIDSs, achieving an average success rate of 99.99% in network intrusion detection system evasion. We also propose a novel mitigation technique to address this new evasion attack.
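
The reshaping step described in this abstract (changing only the time differences between malicious packets, never their content) can be sketched as follows. This is a simplified stand-in, not TANTRA itself: the paper uses a trained LSTM to produce the time differences, whereas this sketch samples them from a list of observed benign inter-packet gaps. The function name, the `(timestamp, payload)` packet representation, and the sample values are assumptions made for illustration.

```python
import random

def reshape_timestamps(packets, benign_deltas, seed=0):
    """Re-time packets using benign-looking gaps, leaving payloads and order intact.

    packets: list of (timestamp, payload) tuples, in send order.
    benign_deltas: inter-packet gaps observed in benign traffic (seconds).
    Assumption: gaps are drawn at random here; the paper predicts them with an LSTM.
    """
    rng = random.Random(seed)
    reshaped = []
    t = packets[0][0]  # keep the original start time
    for i, (_, payload) in enumerate(packets):
        if i > 0:
            t += rng.choice(benign_deltas)
        reshaped.append((t, payload))
    return reshaped

# Hypothetical attack burst: three packets sent one millisecond apart.
attack = [(0.000, b"SYN-1"), (0.001, b"SYN-2"), (0.002, b"SYN-3")]
benign_deltas = [0.12, 0.30, 0.25, 0.18]

reshaped = reshape_timestamps(attack, benign_deltas)
```

Only the timestamps differ between `attack` and `reshaped`; the payloads and their order are unchanged, which is what lets the attack keep its functionality.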

Poisoning Attacks on Cyber Attack Detectors for Industrial Control Systems

Dec 23, 2020

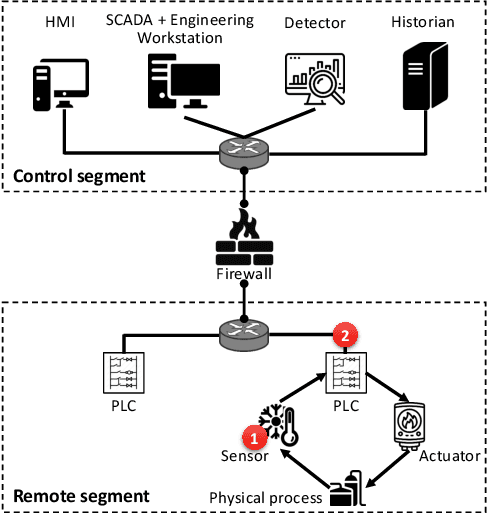

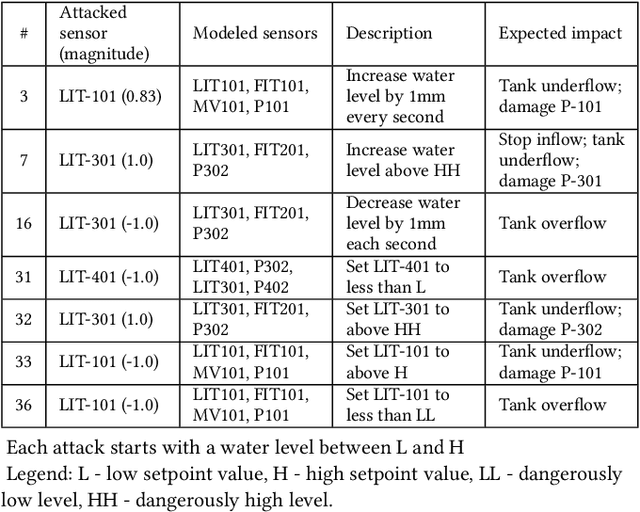

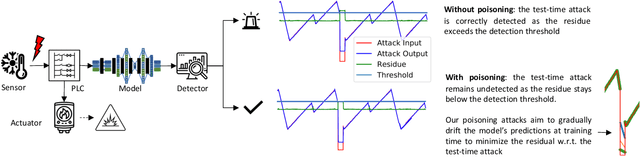

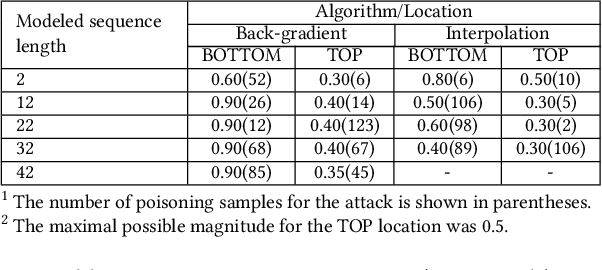

Abstract:Recently, neural network (NN)-based methods, including autoencoders, have been proposed for the detection of cyber attacks targeting industrial control systems (ICSs). Such detectors are often retrained, using data collected during system operation, to cope with the natural evolution (i.e., concept drift) of the monitored signals. However, by exploiting this mechanism, an attacker can fake the signals provided by corrupted sensors at training time and poison the learning process of the detector such that cyber attacks go undetected at test time. With this research, we are the first to demonstrate such poisoning attacks on ICS cyber attack online NN detectors. We propose two distinct attack algorithms, namely, interpolation- and back-gradient based poisoning, and demonstrate their effectiveness on both synthetic and real-world ICS data. We also discuss and analyze some potential mitigation strategies.

BENN: Bias Estimation Using Deep Neural Network

Dec 23, 2020

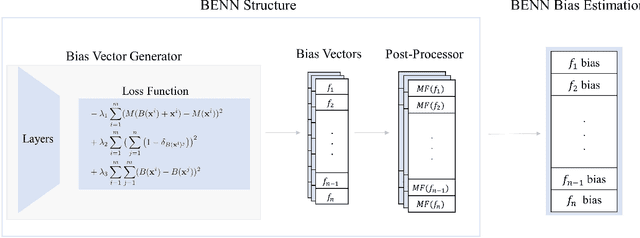

Abstract:The need to detect bias in machine learning (ML) models has led to the development of multiple bias detection methods, yet utilizing them is challenging since each method: i) explores a different ethical aspect of bias, which may result in contradictory output among the different methods, ii) provides an output of a different range/scale and therefore cannot be compared with other methods, and iii) requires different input, and therefore a human expert needs to be involved to adjust each method according to the examined model. In this paper, we present BENN -- a novel bias estimation method that uses a pretrained unsupervised deep neural network. Given an ML model and data samples, BENN provides a bias estimation for every feature based on the model's predictions. We evaluated BENN using three benchmark datasets and one proprietary churn prediction model used by a European Telco and compared it with an ensemble of 21 existing bias estimation methods. Evaluation results highlight the significant advantages of BENN over the ensemble, as it is generic (i.e., can be applied to any ML model) and there is no need for a domain expert, yet it provides bias estimations that are aligned with those of the ensemble.

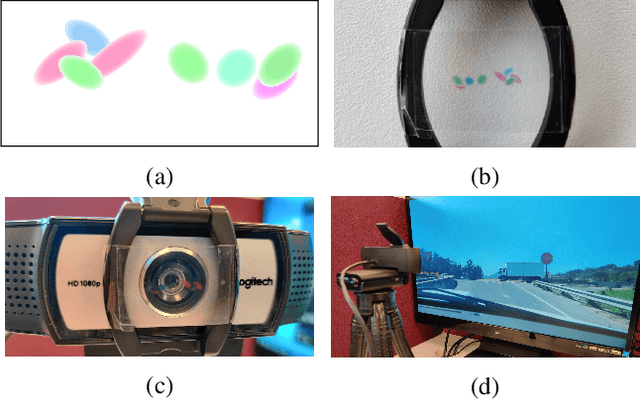

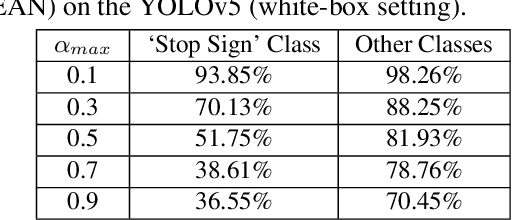

The Translucent Patch: A Physical and Universal Attack on Object Detectors

Dec 23, 2020

Abstract:Physical adversarial attacks against object detectors have seen increasing success in recent years. However, these attacks require direct access to the object of interest in order to apply a physical patch. Furthermore, to hide multiple objects, an adversarial patch must be applied to each object. In this paper, we propose a contactless translucent physical patch containing a carefully constructed pattern, which is placed on the camera's lens, to fool state-of-the-art object detectors. The primary goal of our patch is to hide all instances of a selected target class. In addition, the optimization method used to construct the patch aims to ensure that the detection of other (untargeted) classes remains unharmed. Therefore, in our experiments, which are conducted on state-of-the-art object detection models used in autonomous driving, we study the effect of the patch on the detection of both the selected target class and the other classes. We show that our patch was able to prevent the detection of 42.27% of all stop sign instances while maintaining high (nearly 80%) detection of the other classes.
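
One common way to model a translucent overlay digitally is alpha blending, sketched below. The abstract does not say how the patch is modeled or optimized, so this is only an illustrative assumption: the function name, the nested-list image representation, and the `alpha` opacity parameter are hypothetical, and no pattern optimization is shown.

```python
def apply_translucent_patch(image, patch, alpha):
    """Blend a patch over an image: out = (1 - alpha) * image + alpha * patch.

    image, patch: rows of (R, G, B) tuples with identical dimensions.
    alpha: patch opacity in [0, 1]; 0 leaves the image untouched, 1 replaces it.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return [
        [
            tuple(round((1 - alpha) * i + alpha * p) for i, p in zip(img_px, patch_px))
            for img_px, patch_px in zip(img_row, patch_row)
        ]
        for img_row, patch_row in zip(image, patch)
    ]

# Hypothetical 1x2 camera frame and a patch of the same size.
image = [[(200, 100, 0), (0, 0, 0)]]
patch = [[(0, 100, 200), (255, 255, 255)]]

blended = apply_translucent_patch(image, patch, alpha=0.25)
```

Because the overlay sits between the scene and the sensor, every pixel of the frame is affected at once, which matches the abstract's point that a single lens-mounted patch can act on all instances of a class without touching the objects themselves.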
