Edita Grolman

Rogue Cell: Adversarial Attack and Defense in Untrusted O-RAN Setup Exploiting the Traffic Steering xApp

May 03, 2025

Abstract: The Open Radio Access Network (O-RAN) architecture is revolutionizing cellular networks with its open, multi-vendor design and AI-driven management, aiming to enhance flexibility and reduce costs. Despite its many advantages, O-RAN is not threat-free. While previous studies have mainly examined vulnerabilities arising from O-RAN's intelligent components, this paper is the first to focus on the security challenges and vulnerabilities introduced by transitioning from single-operator to multi-operator RAN architectures. This shift increases the risk of untrusted third-party operators managing different parts of the network. To explore these vulnerabilities and their potential mitigation, we developed an open-access testbed environment that integrates a wireless network simulator with the official O-RAN Software Community (OSC) RAN intelligent controller (RIC) cluster. This environment enables realistic, live data collection and serves as a platform for demonstrating APATE (adversarial perturbation against traffic efficiency), an evasion attack in which a malicious cell manipulates its reported key performance indicators (KPIs) and deceives the O-RAN traffic steering xApp to gain an unfair allocation of user equipment (UE). To ensure that O-RAN's legitimate activity continues, we introduce MARRS (monitoring adversarial RAN reports), a detection framework based on a long short-term memory (LSTM) autoencoder (AE) that learns contextual features across the network to monitor malicious telemetry (also demonstrated in our testbed). Our evaluation showed that by executing APATE, an attacker can obtain a UE allocation 248.5% greater than it would receive in a benign scenario. The MARRS detection method was shown to successfully classify malicious cell activity, achieving an accuracy of 99.2% and an F1 score of 0.978.

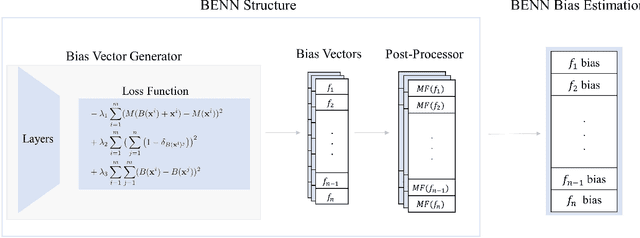

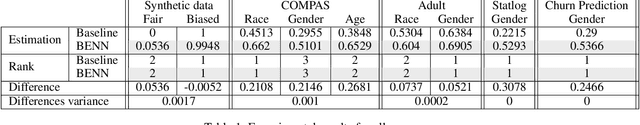

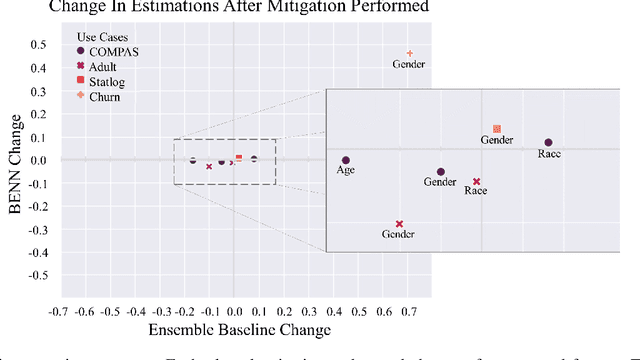

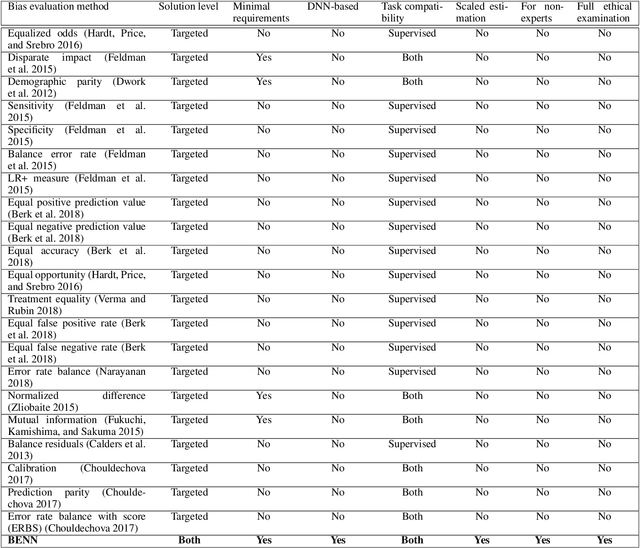

BENN: Bias Estimation Using Deep Neural Network

Dec 23, 2020

Abstract: The need to detect bias in machine learning (ML) models has led to the development of multiple bias detection methods, yet utilizing them is challenging since each method: i) explores a different ethical aspect of bias, which may result in contradictory output among the different methods, ii) provides an output of a different range/scale and therefore cannot be compared with other methods, and iii) requires different input, so a human expert needs to be involved to adjust each method according to the examined model. In this paper, we present BENN, a novel bias estimation method that uses a pretrained unsupervised deep neural network. Given an ML model and data samples, BENN provides a bias estimation for every feature based on the model's predictions. We evaluated BENN using three benchmark datasets and one proprietary churn prediction model used by a European telco, and compared it with an ensemble of 21 existing bias estimation methods. Evaluation results highlight the significant advantages of BENN over the ensemble, as it is generic (i.e., can be applied to any ML model) and there is no need for a domain expert, yet it provides bias estimations that are aligned with those of the ensemble.
