Arpan Biswas

Physically-Constrained Autoencoder-Assisted Bayesian Optimization for Refinement of High-Dimensional Defect-Sensitive Single Crystalline Structure

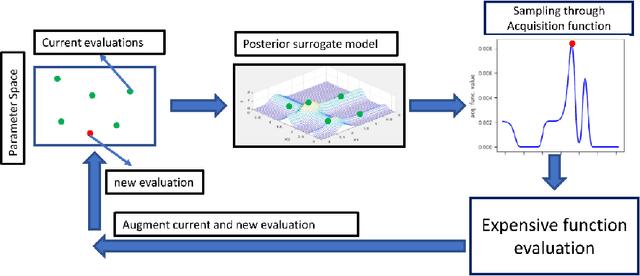

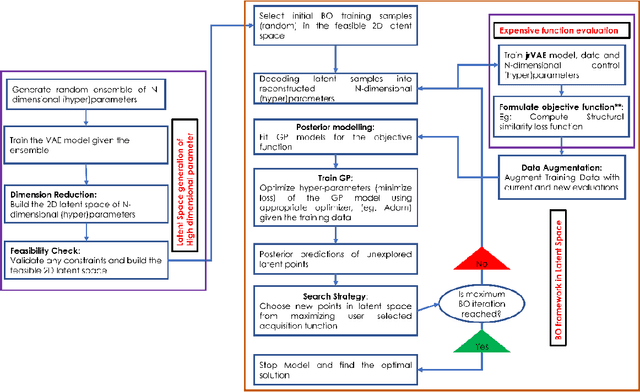

Dec 29, 2025

Abstract: Physical properties and functionalities of materials are dictated by global crystal structures as well as local defects. To establish a structure-property relationship, not only the crystallographic symmetry but also quantitative knowledge about defects is required. Here we present a hybrid machine learning framework that integrates a physically-constrained variational autoencoder (pcVAE) with different Bayesian Optimization (BO) methods to systematically accelerate and improve crystal structure refinement with resolution of defects. We chose the pyrochlore-structured Ho2Ti2O7 as a model system and employed the GSAS2 package for benchmarking crystallographic parameters from Rietveld refinement. However, the function space of these material systems is highly nonlinear, which causes optimizers such as traditional Rietveld refinement to become trapped in local minima. Also, these methods do not provide extensive learning of the overall function space, which is essential for large, time-consuming explorations that aim to identify potential regions of interest. Thus, we present an approach for exploring the high-dimensional structure parameters of defect-sensitive systems via pretrained pcVAE-assisted BO and Sparse Axis Aligned BO. The pcVAE projects high-dimensional diffraction data consisting of thousands of independently measured diffraction orders into a low-dimensional latent space while enforcing scaling invariance and physical relevance. Then, via BO methods, we aim to minimize the L2-norm-based chi-square errors between experimental and simulated diffraction patterns, separately in the real and latent spaces, thereby steering the refinement towards potential optimum crystal structure parameters. We investigated and compared the results of pcVAE-assisted BO, non-pcVAE-assisted BO, and Rietveld refinement.

A Fourier-Based Global Denoising Model for Smart Artifacts Removing of Microscopy Images

Nov 12, 2025

Abstract: Microscopy techniques such as Scanning Tunneling Microscopy (STM), Atomic Force Microscopy (AFM) and Scanning Electron Microscopy (SEM) are essential tools in material imaging at micro- and nanoscale resolutions to extract physical knowledge and materials structure-property relationships. However, tuning microscopy controls (e.g., scanning speed, current setpoint, tip bias) to obtain high-quality images is a non-trivial and time-consuming effort. On the other hand, with sub-standard images, the key features are not accurately discovered due to noise and artifacts, leading to erroneous analysis. Existing denoising models mostly treat weak signals as noise and enhance strong signals as key features, which is not always valid for microscopy images and can therefore erase a significant amount of hidden physical information. To address these limitations, we propose a global denoising model (GDM) to smartly remove artifacts from microscopy images while preserving weaker but physically important features. The proposed model is developed by 1) designing a two-channel image input of non-paired, goal-specific pre-processed images with a user-defined trade-off between the two channels and 2) integrating a pixel-based and fast Fourier transform (FFT)-based loss function into the training of the U-Net model. We compared the proposed GDM with the non-FFT denoising model over STM-generated images of copper (Cu) and silicon (Si) materials, AFM-generated Pantoea sp. YR343 biofilm images and SEM-generated plastic degradation images. We believe this proposed workflow can be extended to improve the quality of other microscopy images and will benefit experimentalists through design flexibility that can be tuned to domain experts' preferences.
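As a rough illustration of what a combined pixel- and FFT-based loss can look like, the sketch below blends a pixel-space mean squared error with a mean squared error on FFT magnitudes. The abstract does not give the actual formulation, so the function name `pixel_fft_loss`, the weight `alpha`, and the use of FFT magnitudes are all assumptions made for this example; NumPy is used in place of a deep learning framework to keep it self-contained.

```python
import numpy as np


def pixel_fft_loss(pred, target, alpha=0.5):
    """Blend a pixel-space MSE with an MSE on 2D FFT magnitudes.

    `alpha` is a hypothetical trade-off weight (not from the paper):
    0 gives a pure pixel loss, 1 gives a pure frequency-domain loss.
    """
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)

    # Pixel term: penalizes point-wise intensity differences.
    pixel_term = np.mean((pred - target) ** 2)

    # Frequency term: penalizes differences in spectral magnitude.
    # norm="ortho" keeps this term on a scale comparable to the pixel term.
    fft_pred = np.abs(np.fft.fft2(pred, norm="ortho"))
    fft_target = np.abs(np.fft.fft2(target, norm="ortho"))
    fft_term = np.mean((fft_pred - fft_target) ** 2)

    return (1.0 - alpha) * pixel_term + alpha * fft_term
```

A frequency-domain term of this kind is one way to make periodic features, such as atomic lattices, contribute to the loss even when they are weak in pixel space.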

A Bi-channel Aided Stitching of Atomic Force Microscopy Images

Mar 11, 2025

Abstract: Microscopy is an essential tool in scientific research, enabling the visualization of structures at micro- and nanoscale resolutions. However, the field of microscopy often encounters limitations in field-of-view (FOV), restricting the amount of sample that can be imaged in a single capture. To overcome this limitation, image stitching techniques have been developed to seamlessly merge multiple overlapping images into a single, high-resolution composite. The images collected from a microscope need to be optimally stitched before accurate physical information can be extracted in post-analysis. However, the existing stitching tools either struggle to stitch images together when the microscopy images are feature-sparse or cannot address all the transformations of images. To address these issues, we propose a bi-channel aided feature-based image stitching method and demonstrate its use on AFM-generated biofilm images. The topographical channel image of AFM data captures the morphological details of the sample, and a stitched topographical image is desired by researchers. We utilize the amplitude channel of AFM data to maximize the matching features and to estimate the position of the original topographical images, and show that the proposed bi-channel aided stitching method outperforms the traditional stitching approach. Furthermore, we found that the differentiation of the topographical images along the x-axis provides feature information similar to the amplitude channel image, which generalizes our approach to cases where the amplitude images are not available. Here we demonstrated the application on AFM, but similar approaches could be employed for optical microscopy with brightfield and fluorescence channels. We believe this proposed workflow will help experimentalists avoid erroneous analysis and discovery due to incorrect stitching.
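The x-axis differentiation mentioned in the abstract can be sketched in a few lines. This is a minimal example, assuming the topography is stored as a 2D array whose columns run along the scan's x-axis (so the derivative is taken along `axis=1`); the function name `x_derivative` is hypothetical, and the paper's actual preprocessing may differ.

```python
import numpy as np


def x_derivative(topography):
    """Finite-difference derivative of a 2D height map along the x-axis.

    Assumes axis 1 (columns) is the x-direction; this is an assumption
    about the data layout, not something stated in the abstract.
    """
    topo = np.asarray(topography, dtype=float)
    # np.gradient uses central differences in the interior and
    # one-sided differences at the two edges.
    return np.gradient(topo, axis=1)
```

The resulting derivative image could then be passed to a feature detector in place of the amplitude channel when that channel was not recorded.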

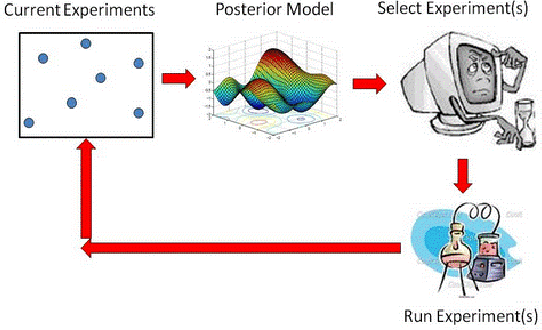

Towards accelerating physical discovery via non-interactive and interactive multi-fidelity Bayesian Optimization: Current challenges and future opportunities

Feb 20, 2024

Abstract: Both computational and experimental material discovery bring forth the challenge of exploring multidimensional and often non-differentiable parameter spaces, such as phase diagrams of Hamiltonians with multiple interactions, composition spaces of combinatorial libraries, processing spaces, and molecular embedding spaces. Often a single instance of these systems is expensive or time-consuming to evaluate, and hence classical approaches based on exhaustive grid or random search are too data intensive. This has resulted in strong interest in active learning methods such as Bayesian optimization (BO), where the adaptive exploration occurs based on a human learning (discovery) objective. However, classical BO is based on a predefined optimization target, and policies balancing exploration and exploitation are purely data driven. In practical settings, the domain expert can pose prior knowledge on the system in the form of partially known physical laws and often varies exploration policies during the experiment. Here, we explore interactive workflows building on multi-fidelity BO (MFBO), starting with classical (data-driven) MFBO, then structured (physics-driven) sMFBO, and extending it to allow human-in-the-loop interactive iMFBO workflows for adaptive and domain-expert-aligned exploration. These approaches are demonstrated over highly non-smooth multi-fidelity simulation data generated from an Ising model, considering spin-spin interaction as the parameter space, lattice sizes as the fidelity space, and the objective as maximizing heat capacity. Detailed analysis and comparison show the impact of physics knowledge injection and on-the-fly human decisions for improved exploration, current challenges, and potential opportunities for algorithm development combining data, physics and real-time human decisions.

Human-in-the-loop: The future of Machine Learning in Automated Electron Microscopy

Oct 08, 2023

Abstract: Machine learning methods are progressively gaining acceptance in the electron microscopy community for de-noising, semantic segmentation, and dimensionality reduction of data post-acquisition. The introduction of APIs by major instrument manufacturers now allows the deployment of ML workflows in microscopes, not only for data analytics but also for real-time decision-making and feedback for microscope operation. However, the number of use cases for real-time ML remains remarkably small. Here, we discuss some considerations in designing ML-based active experiments and pose that the likely strategy for the next several years will be human-in-the-loop automated experiments (hAE). In this paradigm, the ML learning agent directly controls beam position and image and spectroscopy acquisition functions, and a human operator monitors experiment progression in real- and feature space of the system and tunes the policies of the ML agent to steer the experiment towards specific objectives.

A dynamic Bayesian optimized active recommender system for curiosity-driven Human-in-the-loop automated experiments

Apr 05, 2023

Abstract: Optimization of experimental materials synthesis and characterization through active learning methods has been growing over the last decade, with examples ranging from measurements of diffraction on combinatorial alloys at synchrotrons, to searches through chemical space with automated synthesis robots for perovskites. In virtually all cases, the target property of interest for optimization is defined a priori with limited human feedback during operation. In contrast, here we present the development of a new type of human-in-the-loop experimental workflow, via a Bayesian optimized active recommender system (BOARS), to shape targets on the fly, employing human feedback. We showcase examples of this framework applied to pre-acquired piezoresponse force spectroscopy of a ferroelectric thin film, and then implement this in real time on an atomic force microscope, where the optimization proceeds to find symmetric piezoresponse amplitude hysteresis loops. It is found that such features appear more affected by subsurface defects than the local domain structure. This work shows the utility of human-augmented machine learning approaches for curiosity-driven exploration of systems across experimental domains. The analysis reported here is summarized in a Colab notebook for tutorial purposes and for application to other data: https://github.com/arpanbiswas52/varTBO

Combining Variational Autoencoders and Physical Bias for Improved Microscopy Data Analysis

Feb 08, 2023

Abstract: Electron and scanning probe microscopy produce vast amounts of data in the form of images or hyperspectral data, such as EELS or 4D STEM, that contain information on a wide range of structural, physical, and chemical properties of materials. To extract valuable insights from these data, it is crucial to identify physically separate regions in the data, such as phases, ferroic variants, and boundaries between them. In order to derive an easily interpretable feature analysis, combined with well-defined boundaries, in a principled and unsupervised manner, here we present a physics-augmented machine learning method which combines the capability of Variational Autoencoders to disentangle factors of variability within the data with a physics-driven loss function that seeks to minimize the total length of the discontinuities in images corresponding to latent representations. Our method is applied to various materials, including NiO-LSMO, BiFeO3, and graphene. The results demonstrate the effectiveness of our approach in extracting meaningful information from large volumes of imaging data. The full notebook containing the implementation of the code and analysis workflow is available at https://github.com/arpanbiswas52/PaperNotebooks

Optimizing Training Trajectories in Variational Autoencoders via Latent Bayesian Optimization Approach

Jun 30, 2022

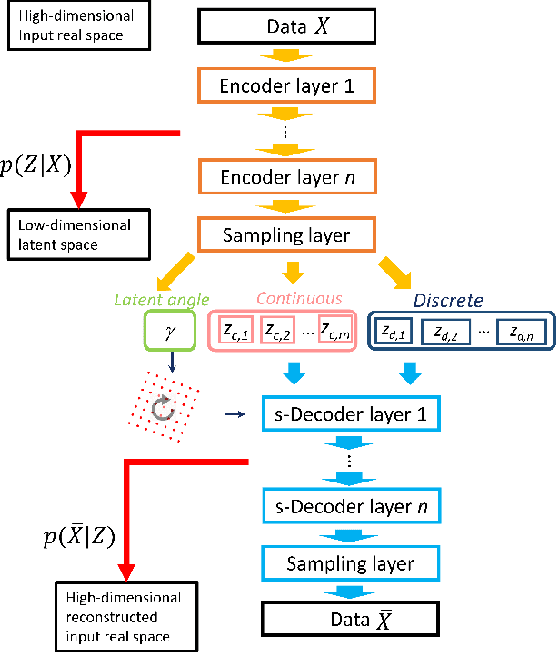

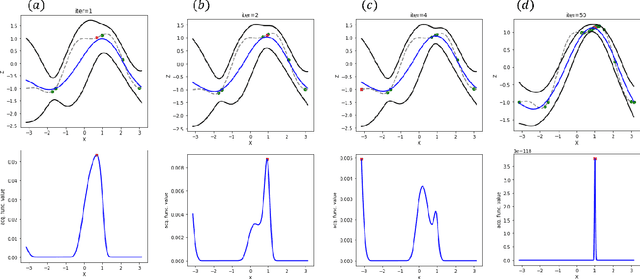

Abstract: Unsupervised and semi-supervised ML methods such as variational autoencoders (VAE) have become widely adopted across multiple areas of physics, chemistry, and materials sciences due to their capability in disentangling representations and ability to find latent manifolds for classification and regression of complex experimental data. Like other ML problems, VAEs require hyperparameter tuning, e.g., balancing the Kullback-Leibler (KL) and reconstruction terms. However, the training process and resulting manifold topology and connectivity depend not only on hyperparameters, but also on their evolution during training. Because exhaustive search in a high-dimensional hyperparameter space is inefficient for expensive-to-train models, here we explored a latent Bayesian optimization (zBO) approach for hyperparameter trajectory optimization in unsupervised and semi-supervised ML and demonstrate it for joint-VAE with rotational invariances. We demonstrate an application of this method for finding joint discrete and continuous rotationally invariant representations for MNIST and experimental data of a plasmonic nanoparticle material system. The performance of the proposed approach is discussed extensively; the approach allows for high-dimensional hyperparameter tuning or trajectory optimization of other ML models.

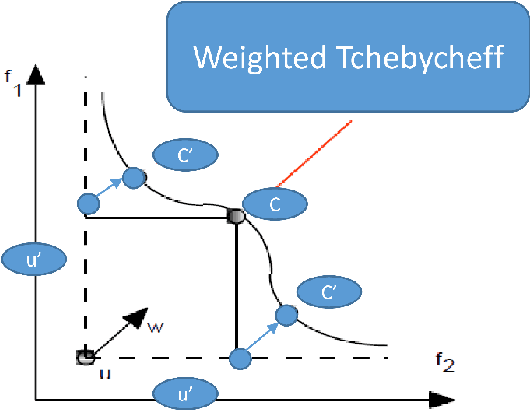

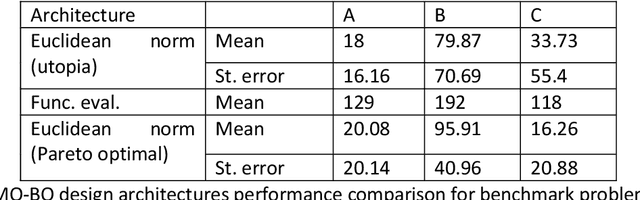

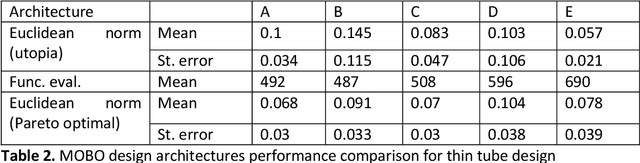

A Nested Weighted Tchebycheff Multi-Objective Bayesian Optimization Approach for Flexibility of Unknown Utopia Estimation in Expensive Black-box Design Problems

Oct 16, 2021

Abstract: We propose a nested weighted Tchebycheff multi-objective Bayesian optimization (MOBO) framework in which we build a regression model selection procedure from an ensemble of models, towards better estimation of the uncertain parameters of the weighted-Tchebycheff expensive black-box multi-objective function. In existing work, a weighted Tchebycheff MOBO approach has been demonstrated which attempts to estimate the unknown utopia in formulating the acquisition function, through calibration using an a priori selected regression model. However, the existing MOBO model lacks flexibility in selecting the appropriate regression models given the guided sampled data and can therefore under-fit or over-fit as the iterations of the MOBO progress, reducing the overall MOBO performance. As it is too complex to guarantee a best model a priori in general, this motivates us to consider a portfolio of different families of predictive models fitted with current training data, guided by the WTB MOBO; the best model is selected following a user-defined prediction root-mean-square-error-based approach. The proposed approach is implemented in optimizing a multi-modal benchmark problem and a thin tube design under constant loading of temperature-pressure, while minimizing the risk of creep-fatigue failure and design cost. Finally, the nested weighted Tchebycheff MOBO model performance is compared with different MOBO frameworks with respect to accuracy in parameter estimation, Pareto-optimal solutions and function evaluation cost. This method is general enough to consider different families of predictive models in the portfolio for best model selection, where the overall design architecture allows for solving high-dimensional (multiple functions) complex black-box problems and can be extended to other global criterion multi-objective optimization methods where prior knowledge of utopia is required.
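For readers unfamiliar with the scalarization the abstract builds on, the standard weighted Tchebycheff form is g(x) = max_i w_i |f_i(x) - z_i|, where z is the utopia point. The sketch below evaluates it with NumPy. It treats the utopia as a known input, whereas the abstract describes estimating it with a selected regression model; the function name `weighted_tchebycheff` and the example values are illustrative assumptions.

```python
import numpy as np


def weighted_tchebycheff(objectives, weights, utopia):
    """Standard weighted Tchebycheff scalarization.

    Returns max_i w_i * |f_i - z_i| over the last axis, so a batch of
    objective vectors (one per row) yields one scalar per row.
    The utopia point z is passed in directly here (an assumption);
    the abstract's framework estimates it instead.
    """
    f = np.asarray(objectives, dtype=float)
    w = np.asarray(weights, dtype=float)
    z = np.asarray(utopia, dtype=float)
    return np.max(w * np.abs(f - z), axis=-1)


# Two objectives, equal weights, utopia at (1, 0):
# max(0.5 * |3 - 1|, 0.5 * |1 - 0|) = 1.0
value = weighted_tchebycheff([3.0, 1.0], [0.5, 0.5], [1.0, 0.0])
```

Minimizing this scalar for different weight vectors traces out different Pareto-optimal trade-offs, which is why an inaccurate utopia estimate can distort the search.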
